8 Charts that Explain Enterprise Generative AI Adoption in 2024

OpenAI and open-source have momentum

Corporate buyers are increasing generative AI budgets, mostly selecting OpenAI and Google models, and expect to use more open-source solutions because they want control over their technology stack. These are some of the findings from interviews that Andreessen Horowitz (a16z) recently conducted with 70 corporate generative AI buyers about their plans. According to the study’s authors:

We were shocked by how significantly the resourcing and attitudes toward genAI had changed over the last 6 months. Though these leaders still have some reservations about deploying generative AI, they’re also nearly tripling their budgets, expanding the number of use cases that are deployed on smaller open-source models, and transitioning more workloads from early experimentation into production.

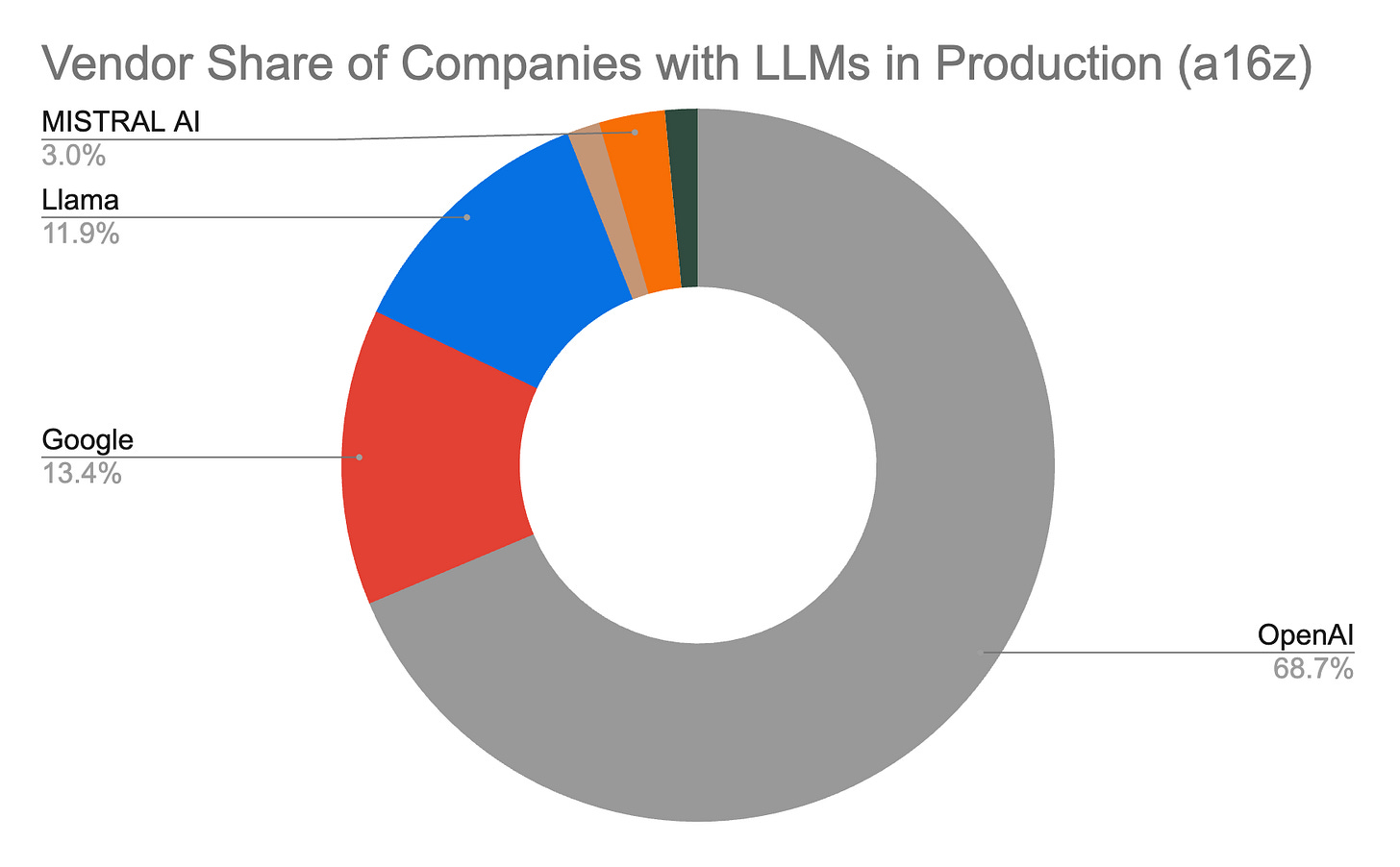

OpenAI and Everyone Else

It is no surprise that OpenAI is the most widely used large language model (LLM) among survey participants. All of them were at least testing OpenAI models, compared to 63% for Google and 41% for Meta’s Llama. This data reinforces Synthedia’s analysis that OpenAI is the default LLM provider, and the other foundation model providers are vying to become the primary alternative.

However, this only tells part of the story. At first glance, Google appears to be present in nearly two-thirds as many enterprises as OpenAI. That may be true for testing, but it doesn’t take much to test a model. Going into production is a commitment.

Of a16z’s survey participants, OpenAI commands about 69% of the relative market share of companies with at least one generative AI model used in production. This compares to just 13% for Google and 12% for Meta’s Llama.

If anything, these figures likely understate OpenAI’s dominance. The data only indicates whether these models are in production for at least one use case, and some companies have multiple generative AI use cases in production. The share of individual production use cases that employ one of these models almost certainly skews even more heavily toward OpenAI. That is a benefit of being first to market and taking an early lead in product quality.

It’s a Multi-model World

According to a16z, none of the companies interviewed use a single LLM provider across their pilots and production solutions, and only 7% use just two. Thirty-six percent are employing models from three vendors and 57% are using four or more. But why? a16z’s Sarah Wang and Shangda Xu wrote:

Just over 6 months ago, the vast majority of enterprises were experimenting with 1 model (usually OpenAI’s) or 2 at most. When we talked to enterprise leaders today, they’re all testing, and in some cases even using in production, multiple models, which allows them to 1) tailor to use cases based on performance, size, and cost, 2) avoid lock-in, and 3) quickly tap into advancements in a rapidly moving field. This third point was especially important to leaders, since the model leaderboard is dynamic and companies are excited to incorporate both current state-of-the-art models and open-source models to get the best results.

Synthedia can confirm that similar sentiments were expressed in conversations with other large enterprise executives. However, understanding of the first reason cited by a16z, tailoring models to use cases, is still nascent. Some companies are clearly choosing to deploy OpenAI’s GPT-3.5 over GPT-4 due to cost and latency considerations, but very few are training their own models or have the internal talent to manage a private instance of an open-source model.

Our expectation is that most companies will employ a primary vendor across the majority of production generative AI use cases, and those may be split among 2-3 different LLMs from that provider based on cost and performance considerations. It is likely that many of those companies will have a second LLM provider for a handful of use cases.

Open-Source Rising

The secondary LLM vendor for many companies is likely to be an open-source provider. Over 80% of company executives expressed an interest in expanding their use of open-source LLMs, and 46% preferred them over proprietary models. a16z said:

We estimate the market share in 2023 was 80%–90% closed source, with the majority of share going to OpenAI. However, 46% of survey respondents mentioned that they prefer or strongly prefer open source models going into 2024…In 2024 and onwards, then, enterprises expect a significant shift of usage towards open source, with some expressly targeting a 50/50 split—up from the 80% closed/20% open split in 2023.

The key reason for open-source preference comes down to control. a16z places control at 60% as the primary reason behind executive preferences. Customizability is a closely related concept, since the ability to customize a model is itself a form of control.

Only 10% of the survey participants cited cost as a primary driver. That may be surprising to some people who recognize generative AI as a significant new expense for companies. However, it is unclear how much lower the total cost of ownership is for open-source models compared with proprietary ones.

Prices are lower for open-source models like Llama 2 and Mistral on cloud hosting providers than for comparable proprietary models from OpenAI or Anthropic. However, the difference is often not dramatic, given the performance sacrifice. In addition, the inference price differences do not account for other costs, such as weaker support and the scarcity of talent familiar with the models.

Company executives are cognizant that they may have limited opportunities to switch out models from applications. They also understand that there is vendor risk related to asynchronous technology innovation, security vulnerabilities, future financial viability, and potential shifts in their terms related to pricing or data privacy factors. Open-source LLMs provide some protection for at least the last two factors because corporate users can control their fate even if vendors disappear or are no longer viable options.

Cloud Leads the Way

Over half of the executives interviewed said their companies are accessing LLMs through a cloud service provider, compared to 38% connecting directly through a model provider. Nearly three-quarters are accessing LLMs through a model endpoint as opposed to self-hosting. This data points to convenience as a big motivator for company model selection. The companies using self-hosting are largely choosing that approach because it is necessary to run many open-source models.

Spending is Growing

a16z only spoke with 70 companies actively engaged with generative AI. While the study’s authors indicated that technology, telecom, CPG, banking, payments, healthcare, and energy companies were included, no details were provided regarding the relative mix across these industries. Therefore, you should not assume this is a representative sample across all companies and industries. The fact that survey participants spoke with a16z partners suggests the companies are probably on the early adopter side of the technology adoption lifecycle spectrum for their respective industries. However, it is useful data about companies testing or employing LLMs in production.

The companies interviewed are increasing their LLM spending in 2024 to an average of $18 million, up from just $7 million in 2023. That is an increase of more than 2.5x and indicates a growing commitment to the technology. It also reflects a planned shift from testing to production for more use cases.
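The budget arithmetic above can be checked with a short sketch. It uses only the two reported averages ($7 million and $18 million); the function and variable names are hypothetical, and the result says nothing about how spending is distributed across individual companies.

```python
def growth_multiple(previous: float, current: float) -> float:
    """Return how many times larger `current` is than `previous`."""
    if previous <= 0:
        raise ValueError("previous must be positive")
    return current / previous


# Average LLM spend reported in the article, in millions of US dollars.
spend_2023 = 7.0
spend_2024 = 18.0

multiple = growth_multiple(spend_2023, spend_2024)
percent_increase = (multiple - 1) * 100

print(f"{multiple:.2f}x the 2023 average, a {percent_increase:.0f}% increase")
```

With these inputs the multiple is 18 / 7, or about 2.57, which sits between the "2.5x" headline figure and the "nearly tripling" wording in the a16z quote.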

Internal Use Cases Lead the Way

All that new corporate cash for generative AI projects is headed predominantly for internal use cases in 2024. a16z lists “text summarization” and “enterprise knowledge management” as key internal use cases. However, the “customer service,” “marketing copy,” “software development,” and “contract review” use cases are also internally facing. While the content generated by LLMs will eventually find its way to external audiences, these “copilot” solutions are human-in-the-loop productivity tools mediated by company employees. These differ from the “external chatbot” and “recommendation algorithm” use cases where customers interact directly with an LLM-enabled solution.

This finding also tracks with Synthedia’s market analysis. Employee productivity is driving the first wave of enterprise generative AI adoption. There is significant reticence about exposing LLM-enabled solutions to customers. The perceived risk of errors negatively impacting customers means that externally facing generative AI solutions will require more testing and will generally be slower to reach the market.

The exception to this will be customer service chatbots for some low-risk customer use cases. An LLM-enabled FAQ bot grounded in company and product data, or a bot that integrates with account information but with a limited scope of authority, will lead the market. Any support request deflected from email, chat, or phone support with a live customer service rep is good for the company from a cost perspective and for customer convenience.

Build Over Buy

The final point made by a16z is that enterprises are primarily building generative AI solutions as opposed to purchasing packaged applications.

Enterprises are overwhelmingly focused on building applications in house, citing the lack of battle-tested, category-killing enterprise AI applications as one of the drivers. After all, there aren’t Magic Quadrants for apps like this (yet!). The foundation models have also made it easier than ever for enterprises to build their own AI apps by offering APIs. Enterprises are now building their own versions of familiar use cases, such as customer support and internal chatbots, while also experimenting with more novel use cases.

This also feeds into the enterprise preference for control. Building your own applications while using vendor models offers the opportunity for customization and the ability to abstract the models from the core functionality.
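One way to picture "abstracting the models from the core functionality" is a thin routing layer between the application and whichever models sit behind it. The following is a minimal sketch, not a description of how any surveyed company built its stack. Every name in it (`TextModel`, `EchoModel`, `ModelRouter`, the use-case labels) is hypothetical, and `EchoModel` is a stand-in that returns a tagged string rather than calling a real vendor API.

```python
from dataclasses import dataclass
from typing import Dict, Protocol


class TextModel(Protocol):
    """Hypothetical minimal interface the application depends on."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class EchoModel:
    """Stand-in backend; a real one would wrap a vendor or self-hosted model."""

    name: str

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class ModelRouter:
    """Maps use cases to backends so application code never names a vendor."""

    def __init__(self, backends: Dict[str, TextModel], default: str) -> None:
        if default not in backends:
            raise KeyError(f"unknown default backend: {default}")
        self._backends = dict(backends)
        self._default = default
        self._routes: Dict[str, str] = {}

    def assign(self, use_case: str, backend: str) -> None:
        """Route one use case to a specific backend."""
        if backend not in self._backends:
            raise KeyError(f"unknown backend: {backend}")
        self._routes[use_case] = backend

    def complete(self, use_case: str, prompt: str) -> str:
        """Send the prompt to the backend assigned to this use case."""
        backend = self._routes.get(use_case, self._default)
        return self._backends[backend].complete(prompt)


router = ModelRouter(
    {"primary": EchoModel("primary"), "open_source": EchoModel("open_source")},
    default="primary",
)
router.assign("summarization", "open_source")

print(router.complete("summarization", "Summarize the Q3 report."))
print(router.complete("contract_review", "Flag unusual clauses."))
```

In this sketch, moving a use case to a different model is a one-line change to the routing table, which is the kind of flexibility that a multi-model, lock-in-averse strategy depends on.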

The trends of control, multi-model, cloud, open-source, and internal employee use cases are shaping enterprise generative AI adoption in 2024. They are converging with a strong need for enterprise productivity, which is driving a rapid rise in corporate budgets.

4 Shortcomings of Large Language Models - Yann LeCun, Research, and AGI

Large language models (LLMs) offer seemingly magical capabilities often mistaken for human-level qualities. However, Yann LeCun, Meta’s top AI scientist and Turing Award winner, recently laid out four reasons why the current crop of LLM architectures is not likely to reach the goal of artificial general intelligence (AGI). During an interview on the Lex F…