AI21 Labs Ramps Up Competition with OpenAI on Performance and Features

The New Wordtune APIs Point to a Future of Services and Not Just Models

AI21 Labs made two announcements last week. The first was the introduction of its new Jurassic-2 (J2) large language models (LLMs), named Large, Grande, and Jumbo. In case it is unclear, Large is the smallest and Jumbo is the largest. New models generally bring better performance in accuracy, speed, and other factors. My colleague, Eric Schwartz, had early access to the J2 models, and his evaluation suggested improved output generation across a variety of use cases.

The timing is particularly important for AI21 Labs because Microsoft is expected to announce OpenAI's GPT-4 later this week. However, the second announcement may matter even more. AI21's task-specific APIs provide easy access to specific generative AI tasks, ranging from summarization to rewriting text.

Nearly every application provider today is working on adding generative AI features to its software. If providers can skip fine-tuning and instead call a service already optimized for a particular task, that work becomes easier and faster.

Get the Features and Skip the Training

AI21 is in a good position to offer several task-specific APIs because its Wordtune and Wordtune Read products already use these features. Developers today typically access LLMs directly and then add embeddings or conduct fine-tuning before building a new product or adding a feature to an existing one. AI21 is offering developers the opportunity to skip those steps. The company described the new offering this way:

By providing developers with task-specific APIs, they can leap over much of the needed model training and fine-tuning stages, allowing them to take full advantage of our ready-made best-in-class language processing solutions… With the release of Wordtune API, we’re giving developers access to the AI engine behind this award-winning line of applications, allowing them to take full advantage of Wordtune’s capabilities and integrate them into their own apps:

Paraphrase - Reword texts to fit any tone, length, or meaning.

Summarize - Condense lengthy texts into easy-to-read bite-sized summaries.

Grammatical Error Correction (GEC) - Catch and fix grammatical errors and typos on the fly.

Text Improvements - Get recommendations to increase text fluency, enhance vocabulary, and improve clarity.

Text Segmentation - Break down long pieces of text into paragraphs segmented by distinct topic.

Does LLM Size Equal Performance?

Discussions of LLM performance almost always start with model size, and they often devolve into a simplistic comparison of total parameter counts. Recent history explains why: while parameter count is not the only metric that matters, models with more parameters have tended to perform better on a wide range of tasks. That is why it was surprising that AI21 Labs did not reveal the parameter counts of its new J2 models.

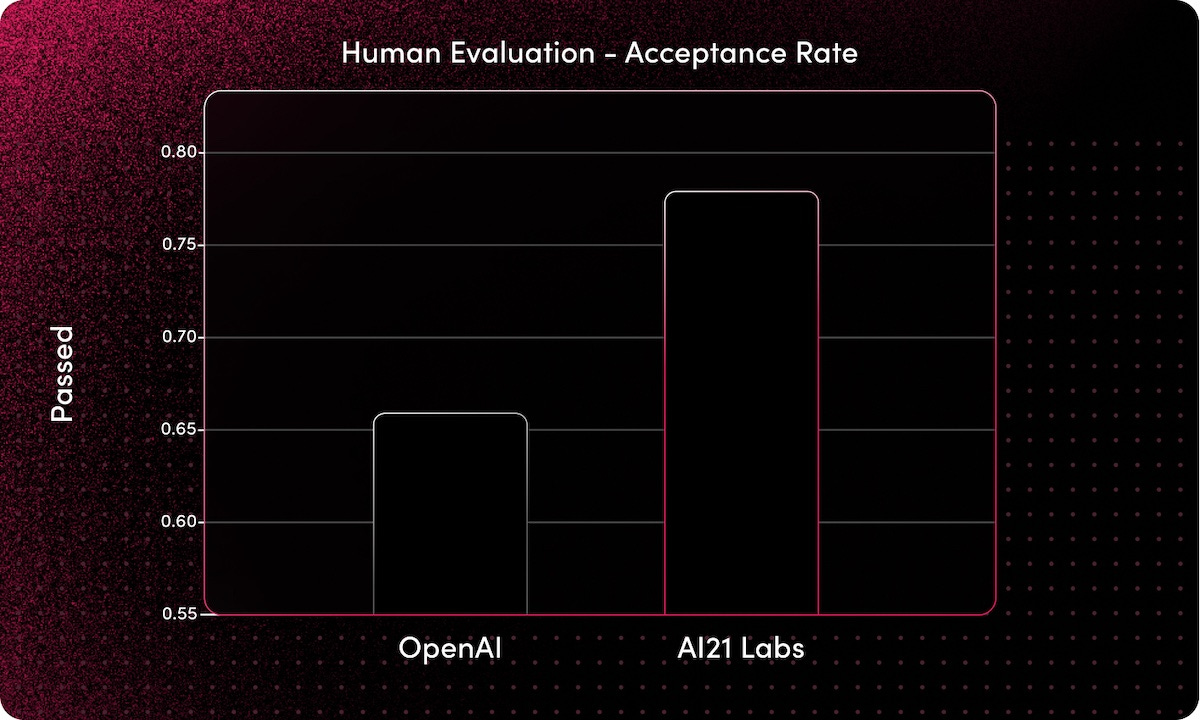

The company’s Jurassic-1 (J1) model has 178 billion parameters, slightly more than GPT-3’s 175 billion. However, J1 trailed GPT-3 in training data, with 300 billion tokens compared to GPT-3’s 499 billion. For J2, AI21 has disclosed neither the parameter count nor the number of training tokens, but it says the model is 30% faster than its predecessor and performs better on Stanford’s HELM benchmark.

This move could shift the discussion of generative AI models toward performance, focusing on outputs instead of inputs and architecture. It may also lead developers to favor API services over direct access to generalized models.

Services Over Models

I suspect AI21’s task-specific APIs are a sign of things to come. Generalized models with customization options are a good solution for an early market. As the market matures, however, features packaged as API services are likely to become more attractive. It is inefficient for every developer to train or fine-tune a model when all they want is a specific feature that many other developers also need.

While we are about to see more competition in the generalized model segment, there may be even bigger momentum for tailored API services on top of these models over the next 12 months.