AWS Launches Amazon Bedrock Studio to Support No-Code Generative AI Projects

Closing the gap to Azure and Google Cloud

Amazon Bedrock Studio is the AWS answer to Azure’s Copilot Studio and Google Cloud’s Vertex AI Agent Builder. It is a low-code/no-code builder for generative AI assistants, intended for testing or deployment. Developers can add documents as data sources that are automatically vectorized to support retrieval-augmented generation (RAG) question answering. They can also apply basic prompt engineering and set topical and keyword-based guardrails.
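Bedrock Studio handles the vectorization for you, but the retrieve-then-generate pattern behind RAG question answering can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not a description of how Bedrock works internally: the term-frequency `vectorize` function stands in for a real embedding model, and the sample documents and all function names are hypothetical.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase term frequencies."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(chunks: list[str]) -> list[tuple[str, Counter]]:
    """Vectorize each document chunk once, as a data-source ingest step would."""
    return [(chunk, vectorize(chunk)) for chunk in chunks]


def retrieve(query: str, index: list[tuple[str, Counter]], k: int = 1) -> list[str]:
    """Return the k chunks most similar to the query."""
    qv = vectorize(query)
    ranked = sorted(index, key=lambda item: cosine(qv, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def build_prompt(query: str, index: list[tuple[str, Counter]], k: int = 1) -> str:
    """Augment the user question with retrieved context before calling a model."""
    context = "\n".join(retrieve(query, index, k))
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


# Hypothetical company documents used only for illustration.
docs = [
    "Employees accrue 20 vacation days per year.",
    "The expense policy caps hotel stays at 200 dollars per night.",
]
index = build_index(docs)
print(build_prompt("How many vacation days do employees get?", index))
```

Swapping `vectorize` for a real embedding model changes the quality of the similarity scores, not the shape of the pipeline: vectorize the documents once at ingest, rank chunks against each query, and prepend the best matches to the prompt.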

In addition, the new Studio provides administrative functions such as user management, collaboration, and access control. According to Amazon’s announcement:

Amazon Bedrock Studio accelerates the development of generative AI applications by providing a rapid prototyping environment with key Amazon Bedrock features, including Knowledge Bases, Agents, and Guardrails…

You can build applications using a wide array of top performing models, evaluate, and share your generative AI apps within Bedrock Studio. The user interface guides you through various steps to help improve a model’s responses. You can experiment with model settings, and securely integrate your company data sources, tools, and APIs, and set guardrails. You can collaborate with team members to ideate, experiment, and refine your generative AI applications—all without requiring advanced machine learning (ML) expertise or AWS Management Console access.

Playground

The solution also has a Playground where developers can test models and configurations. Bedrock provides access to a number of large language models (LLMs), ranging from Meta’s Llama 2 and 3 series and Anthropic’s Claude to models from Mistral, AI21 Labs, and Amazon’s homegrown Titan family. However, access to any Anthropic model requires you to submit details of your use case for review before permission is granted. In addition, Claude 3 Opus, the most advanced of Anthropic’s LLMs, is not available today through AWS; only the Haiku and Sonnet versions are listed as options.

It is also far harder to reach the Playground than comparable experiences from OpenAI or AI21. If you don’t already have an AWS environment configured with single sign-on and roles, you must set those up first. A key takeaway is that while the Playground and Bedrock Studio may offer a low-code/no-code option for building a generative AI assistant, they are still aimed at developers rather than designers and analysts.

Function Calling

Bedrock Studio also highlights function calling. The ability to access dynamic data sources and operational systems in order to execute actions is becoming a common requirement. While many of the earliest generative AI applications were limited to the model’s training data, search, or grounding in company databases, corporate users are increasingly interested in executing variable, multi-step workflows.

Functions enable a model to include information in its response that it doesn’t have direct access to or prior knowledge of. For example, a function could allow the model to retrieve and include the current weather conditions in its response, even though the model itself doesn’t have that information stored.
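The weather example can be made concrete with a small, self-contained Python sketch of the function-calling loop. It is a sketch under loud assumptions, not Bedrock’s API: `fake_model` is a hypothetical stand-in for an LLM so the code runs offline, the `get_current_weather` tool and its canned readings are invented for illustration, and the tool description is only loosely modeled on the JSON Schema style that model APIs commonly accept. What it shows is the division of labor: the model asks for a function by name, the application runs it, and the result goes back to the model so it can answer.

```python
# Hypothetical tool exposed to the model; the name and canned data are
# illustrative assumptions, not a real weather service.
def get_current_weather(city: str) -> dict:
    readings = {"Seattle": {"temp_c": 12, "conditions": "rain"}}
    reading = readings.get(city, {"temp_c": None, "conditions": "unknown"})
    return {"city": city, **reading}


# Tool description the application would send to the model (JSON Schema style).
TOOLS = [{
    "name": "get_current_weather",
    "description": "Return current weather conditions for a city.",
    "input_schema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}]

# Maps tool names the model may request to the Python functions that run them.
REGISTRY = {"get_current_weather": get_current_weather}


def fake_model(messages: list[dict], tools: list[dict]) -> dict:
    """Hypothetical LLM stand-in so the loop runs offline.

    On a user turn it requests the weather tool if one is offered; once a
    tool result is in the conversation, it answers from that result.
    """
    last = messages[-1]
    tool_names = {t["name"] for t in tools}
    if last["role"] == "user" and "get_current_weather" in tool_names:
        return {"tool_use": {"name": "get_current_weather", "input": {"city": "Seattle"}}}
    if last["role"] == "tool":
        r = last["content"]
        return {"text": f"It is currently {r['temp_c']} C with {r['conditions']} in {r['city']}."}
    return {"text": "I don't have access to current weather data."}


def run_conversation(prompt: str, max_turns: int = 3) -> str:
    """Alternate between model turns and tool execution until a final answer."""
    messages = [{"role": "user", "content": prompt}]
    for _ in range(max_turns):
        reply = fake_model(messages, TOOLS)
        if "tool_use" in reply:
            call = reply["tool_use"]
            # The application, not the model, executes the requested function.
            result = REGISTRY[call["name"]](**call["input"])
            messages.append({"role": "tool", "content": result})
        else:
            return reply["text"]
    raise RuntimeError("model did not return a final answer")


print(run_conversation("What's the weather in Seattle?"))
```

Calling `fake_model` with an empty tool list returns only the "no access" reply, which mirrors the point above: the model can include current conditions in its answer only because the application fetched them on its behalf.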

Most generative AI chat solutions today focus on knowledge retrieval and do not involve tools. That will soon change. As organizations move beyond knowledge search and look toward integrating business processes and executing tasks, function calling will become commonplace, further enhancing the value generative AI can provide.

Cloud (LLM) Wars

LLMs represent the latest battlefront in the cloud wars. AWS started its generative AI strategy well after Azure and Google Cloud. It already had a foundation for more traditional machine learning models through SageMaker. However, the company was unprepared for the rapid rise in interest in accessing generative AI foundation models.

The introduction of Amazon Bedrock in 2023 was a first step in closing the gap to Azure and Google Cloud. Bedrock Studio reflects the latest evidence that AWS intends to match its cloud rivals with the features that enterprises are coming to expect when using generative AI through their preferred cloud provider.

Employees Are Bringing Their Own AI to Work Regardless of Company Support

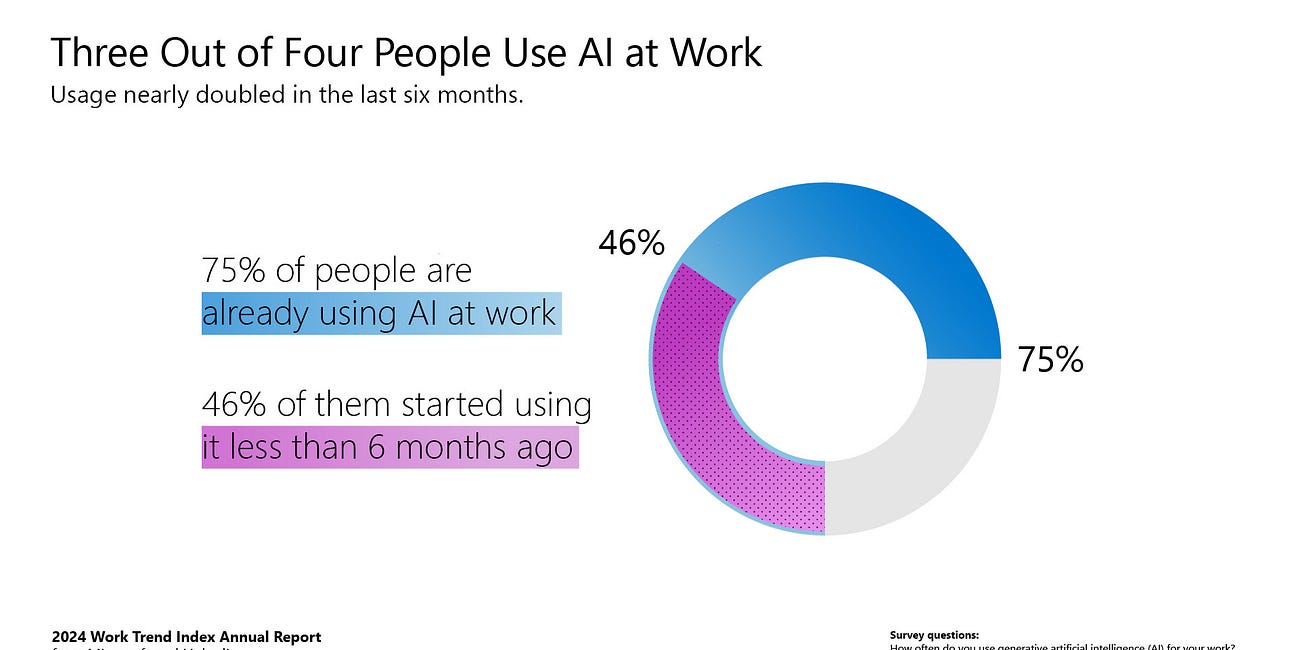

Microsoft’s 2024 Work Trend Index Report surveyed 31,000 people across 31 countries and uncovered new information related to AI adoption by business users. A key finding was that 75% of knowledge workers worldwide are already using generative AI. While some executives lament the slow uptake by workers of internally supplied generative AI solutions, they…

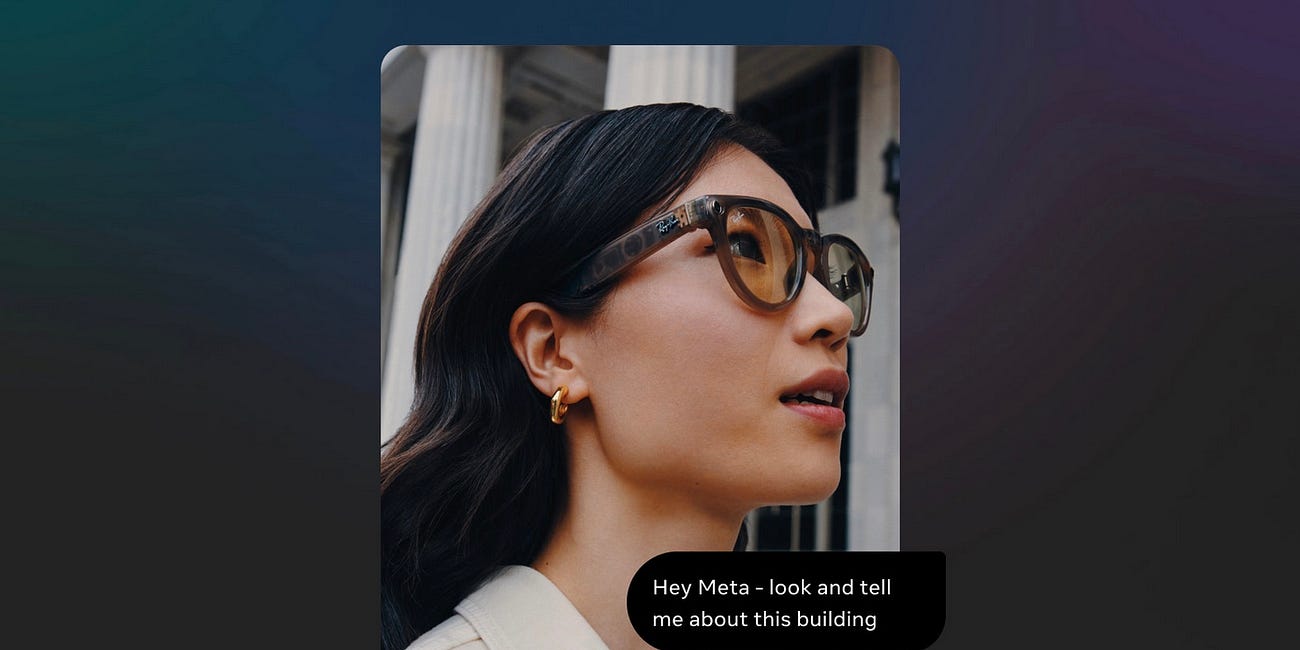

Meta's Secret Weapon in the Generative AI Wars May Be its Smart Glasses + Assistant

Why has Meta invested in the Quest VR product? Once you get past what the company has said about the metaverse and the deeper idea about connecting people, you arrive at the essence of Meta’s business: attention. Why has the company created the Meta Ray-Ban smart glasses? The earliest motivations may be traced back to fierce competition with Snapchat. S…