ChatGPT Acknowledges and Patches Security Vulnerability

New challenges emerge as LLMs go mainstream

Gal Nagli, CEO of Shockwave.cloud, a cybersecurity software company, took to Twitter on Friday to describe a security vulnerability he had discovered in ChatGPT and reported to OpenAI. He also chronicled OpenAI’s response. This was the cause of ChatGPT’s extended downtime for paid and free users on Monday, March 20th.

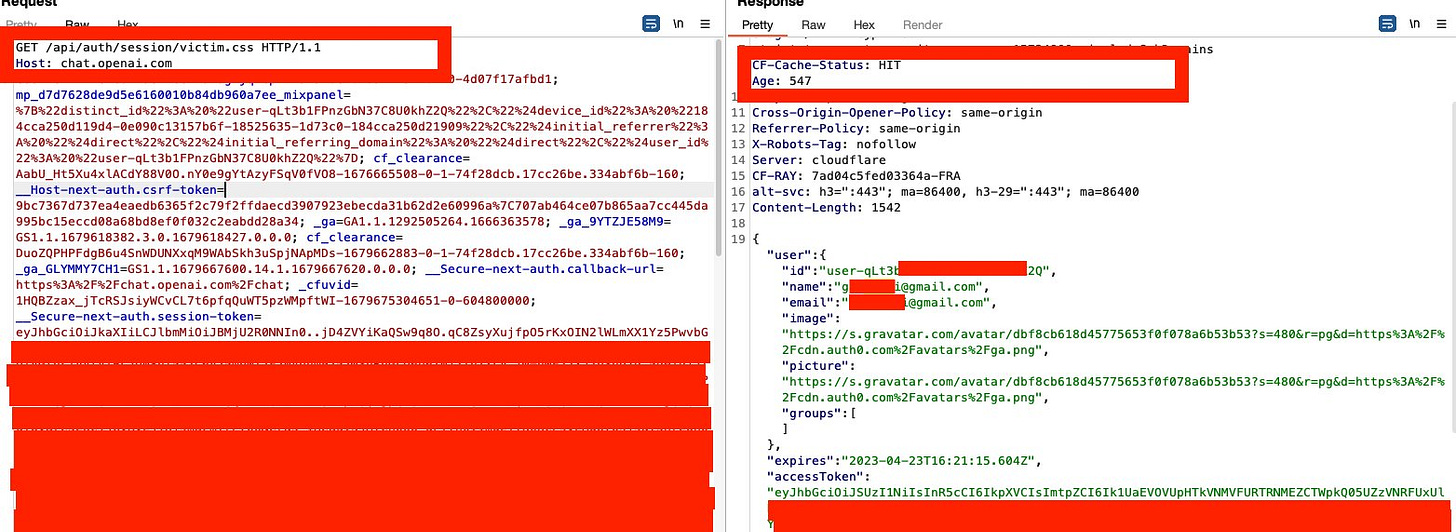

Nagli characterized this as a “web cache deception” vulnerability, which enabled a hacker “to takeover [sic] someone’s account, view their chat history, and access their billing information without them ever realizing it.” One of Nagli’s screenshots in the Twitter thread shows the kind of user information that was exposed, with identifying details redacted. Note the Gmail addresses and other data tokens that appear throughout the response.
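To make the general mechanism concrete, here is a toy Python simulation of web cache deception. It is a minimal sketch under stated assumptions, not a reconstruction of Nagli’s exploit or of ChatGPT’s infrastructure: the `/api/auth/session/anything.css` path, the `origin_handler` and `EdgeCache` names, and the extension-based caching rule are all hypothetical. The attack needs two conditions: an origin that returns a user-specific response even when a junk, static-looking suffix is appended to the URL, and a shared cache that stores responses based on that suffix without keying on the user’s cookies.

```python
from __future__ import annotations

import json
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical cache rule: anything that *looks* like a static asset is cacheable.
STATIC_EXTENSIONS = (".css", ".js", ".png")


def origin_handler(path: str, session_user: Optional[str]) -> tuple[str, str]:
    """Hypothetical origin server. Returns (body, Cache-Control header)."""
    # Lenient routing: any path under /api/auth/session reaches the session
    # endpoint, even with a junk suffix such as /anything.css appended.
    if path.startswith("/api/auth/session"):
        body = json.dumps(
            {"user": session_user, "accessToken": f"secret-{session_user}"}
            if session_user
            else {"user": None}
        )
        # The origin correctly marks the response as user-specific.
        return body, "no-store"
    return "not found", "no-store"


@dataclass
class EdgeCache:
    """Hypothetical shared cache keyed on the URL path only (not on cookies)."""

    respect_no_store: bool = False
    store: dict[str, str] = field(default_factory=dict)

    def fetch(self, path: str, session_user: Optional[str]) -> str:
        if path in self.store:
            return self.store[path]  # served to anyone who asks for this path
        body, cache_control = origin_handler(path, session_user)
        looks_static = path.endswith(STATIC_EXTENSIONS)
        forbidden = self.respect_no_store and "no-store" in cache_control
        # Misconfiguration when respect_no_store=False: the extension rule
        # overrides the origin's Cache-Control header.
        if looks_static and not forbidden:
            self.store[path] = body
        return body


if __name__ == "__main__":
    # Hypothetical link the attacker tricks a logged-in victim into opening.
    lure = "/api/auth/session/anything.css"

    vulnerable = EdgeCache()
    vulnerable.fetch(lure, session_user="victim")     # victim's browser, with cookies
    print(vulnerable.fetch(lure, session_user=None))  # attacker, no cookies: victim's token

    hardened = EdgeCache(respect_no_store=True)
    hardened.fetch(lure, session_user="victim")
    print(hardened.fetch(lure, session_user=None))    # attacker gets {"user": null}
```

In the vulnerable configuration, the attacker never needs the victim’s credentials; the cache hands over the victim’s session response because the URL looked like a stylesheet. Common mitigations include rejecting unexpected path suffixes at the origin, marking user-specific responses with `Cache-Control: no-store` (or `private`), and configuring the cache to honor those headers rather than caching by file extension alone.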

OpenAI Acknowledges the Vulnerability

OpenAI acknowledged the vulnerability in a Friday blog post and outlined who was at risk and what data may have been exposed. The company indicated that the impact was likely limited to no more than 1.2% of ChatGPT Plus subscribers. It appears that no free users of the service were affected.

We took ChatGPT offline earlier this week due to a bug in an open-source library which allowed some users to see titles from another active user’s chat history. It’s also possible that the first message of a newly-created conversation was visible in someone else’s chat history if both users were active around the same time…

Upon deeper investigation, we also discovered that the same bug may have caused the unintentional visibility of payment-related information of 1.2% of the ChatGPT Plus subscribers who were active during a specific nine-hour window. In the hours before we took ChatGPT offline on Monday, it was possible for some users to see another active user’s first and last name, email address, payment address, the last four digits (only) of a credit card number, and credit card expiration date. Full credit card numbers were not exposed at any time.

OpenAI also published a fair amount of technical detail about how the “bug” (i.e., the security vulnerability) manifested on ChatGPT’s servers, but not about how it could be exploited. For more on the exploit and the type of data potentially exposed, consult Nagli’s Twitter thread.

The company traced the vulnerability to an open-source client library for Redis, the software it uses for caching. The post also acknowledged that OpenAI inadvertently exacerbated the problem with a software update that caused a rise in Redis request cancellations. Because request cancellations can occur even without that “inadvertent…change,” one would normally conclude that the vulnerability existed before the update and was simply less likely to surface. However, OpenAI narrowed the window of potential risk to a specific nine-hour period, which suggests the company believes the exposure emerged as a result of the update.
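Why would cancelled requests leak one user’s data to another? The toy model below illustrates one general class of bug, in broad terms the kind OpenAI’s post describes: a shared connection goes back into a pool with an unread reply still buffered, so the next caller reads a reply meant for someone else. This is a hypothetical sketch; `ToyConnection`, `ToyPool`, and the `discard_on_cancel` flag are invented for illustration and do not reflect the actual Redis client library’s code.

```python
from __future__ import annotations

from collections import deque
from typing import Optional


class ToyConnection:
    """Hypothetical stand-in for one pooled connection to a cache server.

    Replies come back strictly in the order commands were sent.
    """

    def __init__(self) -> None:
        self._unread: deque[str] = deque()

    def send(self, key: str) -> None:
        # The server answers every command; its reply sits in the buffer
        # until someone reads it.
        self._unread.append(f"data-for-{key}")

    def read_reply(self) -> str:
        # Returns the oldest unread reply, whoever it was meant for.
        return self._unread.popleft()


class ToyPool:
    """Hypothetical pool holding a single shared connection for clarity."""

    def __init__(self, discard_on_cancel: bool) -> None:
        self.discard_on_cancel = discard_on_cancel
        self._conn = ToyConnection()

    def get(self, key: str, cancel_after_send: bool = False) -> Optional[str]:
        conn = self._conn
        conn.send(key)
        if cancel_after_send:
            # Cancelled after the command went out but before its reply was read.
            if self.discard_on_cancel:
                # Safe behavior: throw away the possibly dirty connection.
                self._conn = ToyConnection()
            # Buggy behavior (discard_on_cancel=False): the connection stays in
            # the pool with the unread reply still buffered.
            return None
        return conn.read_reply()


if __name__ == "__main__":
    buggy = ToyPool(discard_on_cancel=False)
    buggy.get("user-a", cancel_after_send=True)  # user A's request is cancelled
    print(buggy.get("user-b"))  # user B receives user A's data

    fixed = ToyPool(discard_on_cancel=True)
    fixed.get("user-a", cancel_after_send=True)
    print(fixed.get("user-b"))  # user B receives their own data
```

The model also shows why a change that increases cancellations would make such a latent bug far more visible: each cancellation that lands between sending a command and reading its reply leaves one stale reply behind for the next user of that connection.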

Fast Reaction

While Nagli criticized OpenAI for not having a bug bounty program to proactively crowdsource these types of vulnerabilities, he complimented the speed of its response and attempted resolution. That praise held even though the initial mitigation didn’t entirely solve the problem: there were actually two patches, the second of which Nagli labeled a “production fix.”

The first attempted resolution was implemented within about 45 minutes of Nagli’s notification. The second, the “production fix,” was live in just over 90 minutes. “Kudos on the fast production fix,” said Nagli via Twitter.

New Apps, New Problems

It is worth noting that OpenAI may have expanded its cybersecurity attack surface with ChatGPT’s launch and expanded it further by adding a paid subscription service. While these same vulnerabilities may have existed in OpenAI Playground or DALL-E, ChatGPT has different UI elements and faces new scalability problems.

The company also worked hard to address performance issues that arose when demand for ChatGPT far outstripped expectations. New caching policies and techniques were likely part of that effort to reduce database calls and improve user experience and uptime. The introduction of ChatGPT Plus also created new rules for service access, which could have introduced additional unanticipated vulnerabilities.

Until November 2022, OpenAI was a platform provider offering API services. A consumer-style web app must address a different set of security vulnerabilities, related to how the application operates and to the variety of tools, such as browsers, used to access it. Developers who build user-facing applications on top of API services must account for full-stack security, so some of OpenAI’s customers may be better versed in these issues.

Was OpenAI ready for this new flavor of security threats? Was its staff experienced in this area? When you are rushing a new end-user application to market for the first time, new security vulnerabilities can be a blind spot. That said, perhaps hundreds or thousands of application providers spent the weekend updating their own Redis implementations to close this vulnerability; OpenAI may not be the only company that was susceptible to this attack. I could find no Common Vulnerabilities and Exposures (CVE) entry describing this type of security issue, so it is either novel in general or specific to OpenAI’s implementation.

OpenAI may have just received a gift in the proactive notification from Nagli (and perhaps others), given what looks like a relatively small risk exposure. This will surely not be the last security vulnerability identified in ChatGPT or similar solutions. My guess is that OpenAI is now looking at security with renewed vigor and trying to minimize other blind spots. Well, I hope they are.