ChatGPT Suffers DDoS Attack and Multiple Outages. Anthropic Also Down. What's Going On?

The APIs and chatbots were both affected

Editor's Note: The coincidence seemed anything but random. Just as OpenAI drew a new level of interest from its recent announcements, the service went down. The easy answer, and the one OpenAI CEO Sam Altman tweeted about, was that strong interest in GPT-4 Turbo led to a spike in demand. But multiple rounds of outages, followed by an Anthropic outage and unscheduled maintenance, seemed an unlikely coincidence when I wrote this post five hours ago. Now we know that OpenAI suffered a DDoS attack. That makes much more sense. We may soon learn that Anthropic was attacked as well, if the company decides to be forthcoming.

OpenAI had a good week up until this morning. Everyone was talking about all the companies it was suddenly going to put out of business. The narrative that “OpenAI just won generative AI” seemed to be everywhere. On social media and in personal conversations, that is what everyone seemed to take away from the announcements that leaked over the weekend and were confirmed during the Dev Day keynote on Monday.

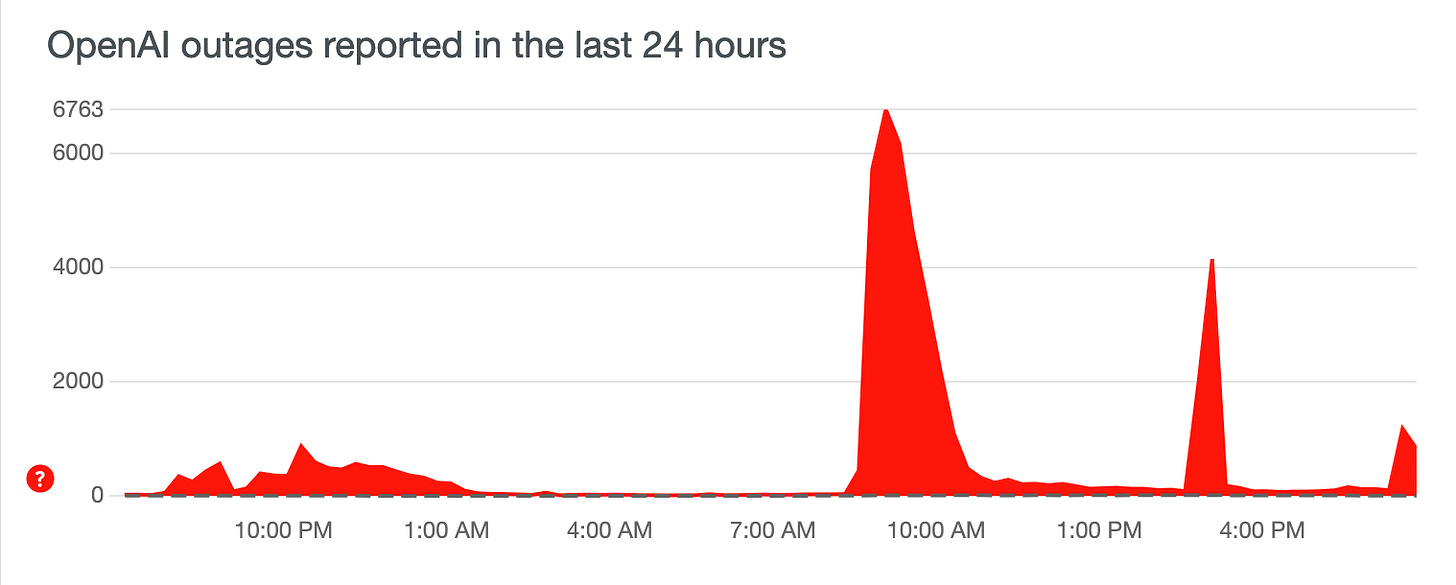

However, no company is invincible. Earlier today, OpenAI had a major outage lasting nearly two hours, followed a few hours later by another that lasted nearly an hour. Downdetector data suggests there may have been a smaller outage a few hours after that.

The outages affected both the ChatGPT consumer application and the API used by businesses. That is a problem if you are running a mission-critical application on OpenAI, and a warning if you plan to.

Editor's Note: Now that we know it was a DDoS attack, negative sentiment may soften. Every large company has faced DDoS attacks, and an outside attack is a better narrative than engineering errors that led to scalability issues at either the API layer or the model. The updated Downdetector chart also suggests that each successive outage was less severe. If that trend holds, it may build confidence in the company’s ability to recover from technical and security difficulties. However, the declining spike amplitude in Downdetector reports may also reflect normal usage declines: by 3:00 pm ET, many European users have finished their workday, and the outage after 10:00 pm ET came after business hours for Europe and all of the mainland United States and Canada.

Was It Users or Something Else?

OpenAI has released little information so far on the source of the outage. The company acknowledged the issues on its service status page and later reported that they had been resolved. OpenAI CEO Sam Altman tweeted today that usage of new features was outpacing expectations and that “there will likely be service instability in the short term due to load.”

The issue arose after OpenAI opened up access to the new GPT-4 Turbo model. It is less expensive and faster than its predecessor, which could have caused API users to shift over en masse. However, it is interesting that this issue occurred around the same time another large language model (LLM) provider experienced a similar outage.

What About Anthropic?

Shortly after OpenAI’s first outage, CNBC reported that Anthropic was also experiencing downtime.

Anthropic’s Claude 2 chatbot, a ChatGPT competitor created by ex-OpenAI employees, also experienced issues Wednesday. An error said, “Due to unexpected capacity constraints, Claude is unable to respond to your message.”

GPT-4 Turbo offers a context window of up to 128K tokens, while Anthropic offers a 100K-token window. OpenAI offers the assistant chatbot ChatGPT, while Anthropic offers the very capable but less popular Claude 2. It is possible that Anthropic’s outage was a knock-on effect of OpenAI API and ChatGPT users switching over to Anthropic’s API and Claude 2. It could also be a coordinated cyberattack intended to compromise multiple LLMs simultaneously or to probe the security defenses of the technology providers. Or it could be a coincidence.

Editor's Note: OpenAI confirmed the cyberattack speculation, at least for its own service, about three hours after the original post was published.

As of 7:45 pm ET, Claude is down for maintenance, according to a message on Anthropic’s website. This is clearly unscheduled maintenance: the service has been down for at least 30 minutes, and it is still normal business hours in the Pacific Time Zone. The earlier service issues likely led to the discovery of something that justified an extended outage during what would normally be a high-usage period.

1. Are these issues related, but just a cascading series of events driven by demand for OpenAI’s GPT-4 Turbo?
2. Is there more to this story that OpenAI and Anthropic are not reporting?
3. Are they completely unrelated?

Occam’s razor suggests that scenario 3 is the most likely. It requires the fewest assumptions and no coordination or interdependencies. However, the timing of the outages and the subsequent unscheduled downtime at Anthropic are worth noting.

Editor's Note: We now know that scenario 2 was correct. There was more to this story.

Market Impact

In a nascent market, a service-level issue like this could severely slow adoption. I don’t think this will be the outcome. A key reason is that generative AI models are not yet driving real-time mission-critical applications like the energy grid or an order management system where downtime has significant consequences. It is inconvenient if marketers can’t write their blog posts for a couple of hours or you have to revert to Google for search, but the consequences are clearly not devastating.

This news arrived in a week when three separate companies told me how unstable the OpenAI API is and that they would never rely on it for their own applications. These developers either maintain a backup or lean toward Microsoft Azure OpenAI Service, which offers more service stability.

This makes sense. OpenAI creates leading generative AI foundation models. Its core competency is not server and service uptime. Azure’s core competencies include service uptime for critical computing workloads. That is a key reason why the partnership with Azure is so critical to OpenAI. If customers are concerned about API stability, elastic scaling, or service level guarantees, they can still access OpenAI products through a service designed around those features.
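The "backup" approach those developers described can be illustrated with a simple failover wrapper. This is a minimal, hypothetical Python sketch: the provider names, `ProviderError`, and the `primary`/`backup` functions are placeholders, not real OpenAI, Azure, or Anthropic SDK calls. A real integration would wrap each vendor's client and catch that client's specific error types.

```python
from typing import Callable, Sequence


class ProviderError(Exception):
    """Hypothetical error a provider wrapper raises when its service is unavailable."""


def complete_with_failover(
    prompt: str,
    providers: Sequence[tuple[str, Callable[[str], str]]],
) -> tuple[str, str]:
    """Try each (name, call) pair in order and return (name, response) from the first success."""
    errors: list[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            # Record the failure and fall through to the next provider in the list.
            errors.append(f"{name}: {exc}")
    raise ProviderError("all providers failed: " + "; ".join(errors))


# Hypothetical stand-ins for real API clients: the primary is "down", the backup answers.
def primary(prompt: str) -> str:
    raise ProviderError("503 Service Unavailable")


def backup(prompt: str) -> str:
    return f"backup answer to: {prompt}"


if __name__ == "__main__":
    name, text = complete_with_failover(
        "Summarize today's outage", [("primary", primary), ("backup", backup)]
    )
    print(name, "->", text)
```

A production setup would usually add timeouts, retries with backoff, and health checks. The core design choice stays the same: the application, not a single vendor, decides where each request goes.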

Some reporters suggest this is bad for OpenAI because Microsoft takes a share of the revenue. This logic has a fundamental flaw: OpenAI’s costs and service requirements are lower when customers access its models through Azure. If the outage has an impact beyond a short inconvenience today, it may be that more companies think Azure first or migrate existing solutions over to the cloud provider.

There is another scenario where something more dramatic occurred earlier today, and as that information is revealed, business customers pause to consider the implications. I don’t expect that to happen, but it is plausible.

Of course, if it’s all just copilots, then the humans could actually get back to doing the work they are now outsourcing to OpenAI models. 😀
