ChatGPT Is So Erudite You Will Want It as Your Personal Assistant

An analysis and comparison with Google LaMDA

Many people have heard about the Turing Test. Lately, it has become popular to say the Turing Test is no longer relevant and that AI models have surpassed this simplistic test that had value when considering 1950s-era technology development but is outdated in the 2020s. The thinking generally relies on either or both of the following ideas:

There are more important tasks to focus on than fooling humans, so the Turing Test is the wrong benchmark to strive for.

Current solutions already pass the Turing Test, so the point is moot.

It is fair to say that Alan Turing’s famous test is not the only relevant measure of conversational AI performance and that other tests may lead to more beneficial advances in AI technology. However, when you look at large language models (LLMs) such as GPT-3 and what they are used for today, communicating in text with humanlike qualities is a key use case. In this regard, the more humanlike, the better. The original GPT-3 models available to the public were an important advance in generating written content from a prompted request, but they didn’t hold conversations. ChatGPT changes that.

ChatGPT is not the only large language model designed for open-ended dialogue. We will touch on Google LaMDA briefly below and cover it in more depth in the future. In addition, more traditional AI models, such as the one behind Amazon Alexa, have been used for a similar use case. The Alexa Prize SocialBot Grand Challenge has a stated goal of training Alexa to hold an interesting conversation for twenty minutes and receive a high-quality rating from reviewers. No team has come close to that benchmark yet, but LLMs could help the competing university teams get much closer to the goal.

ChatGPT Example Conversation

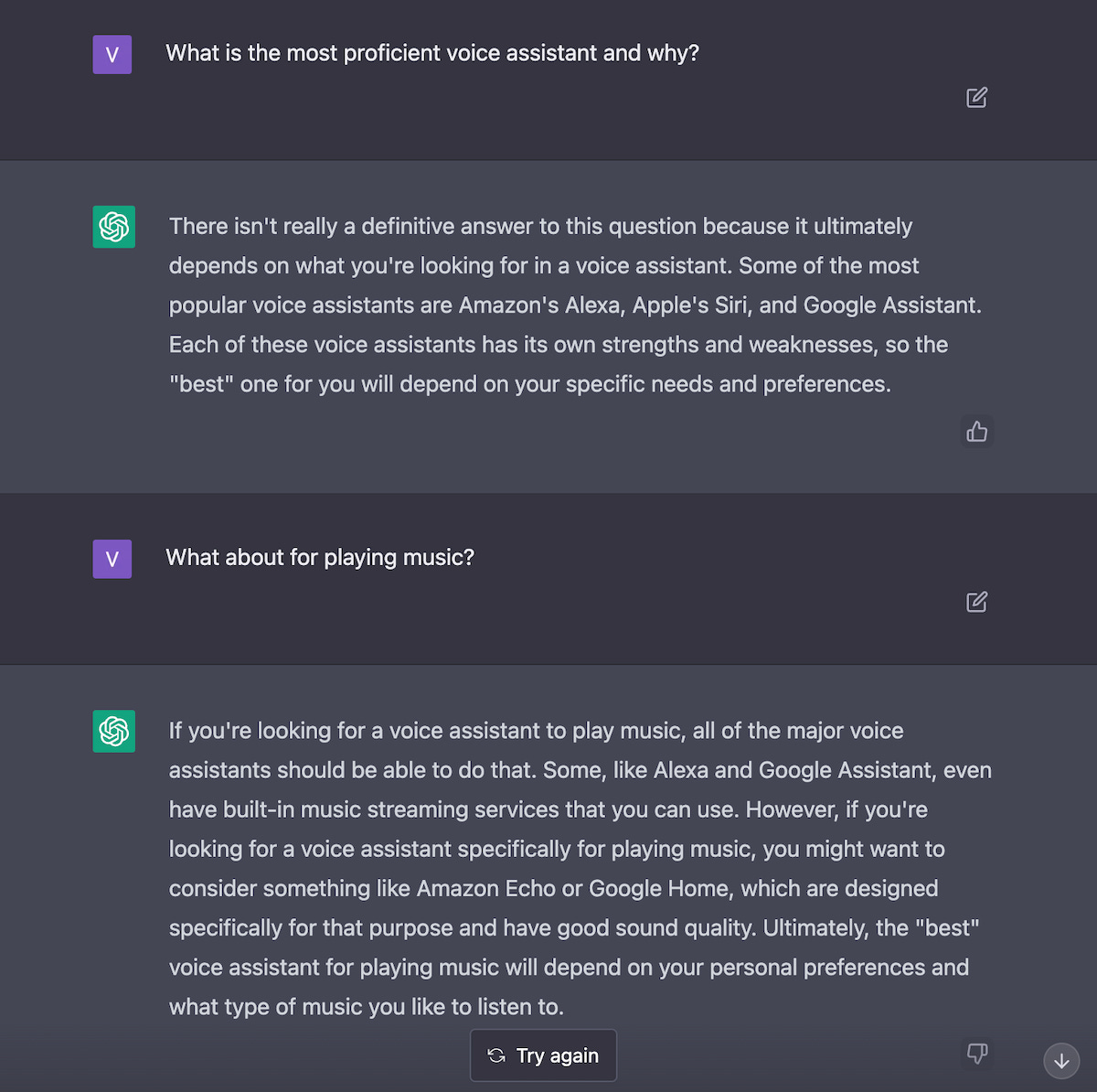

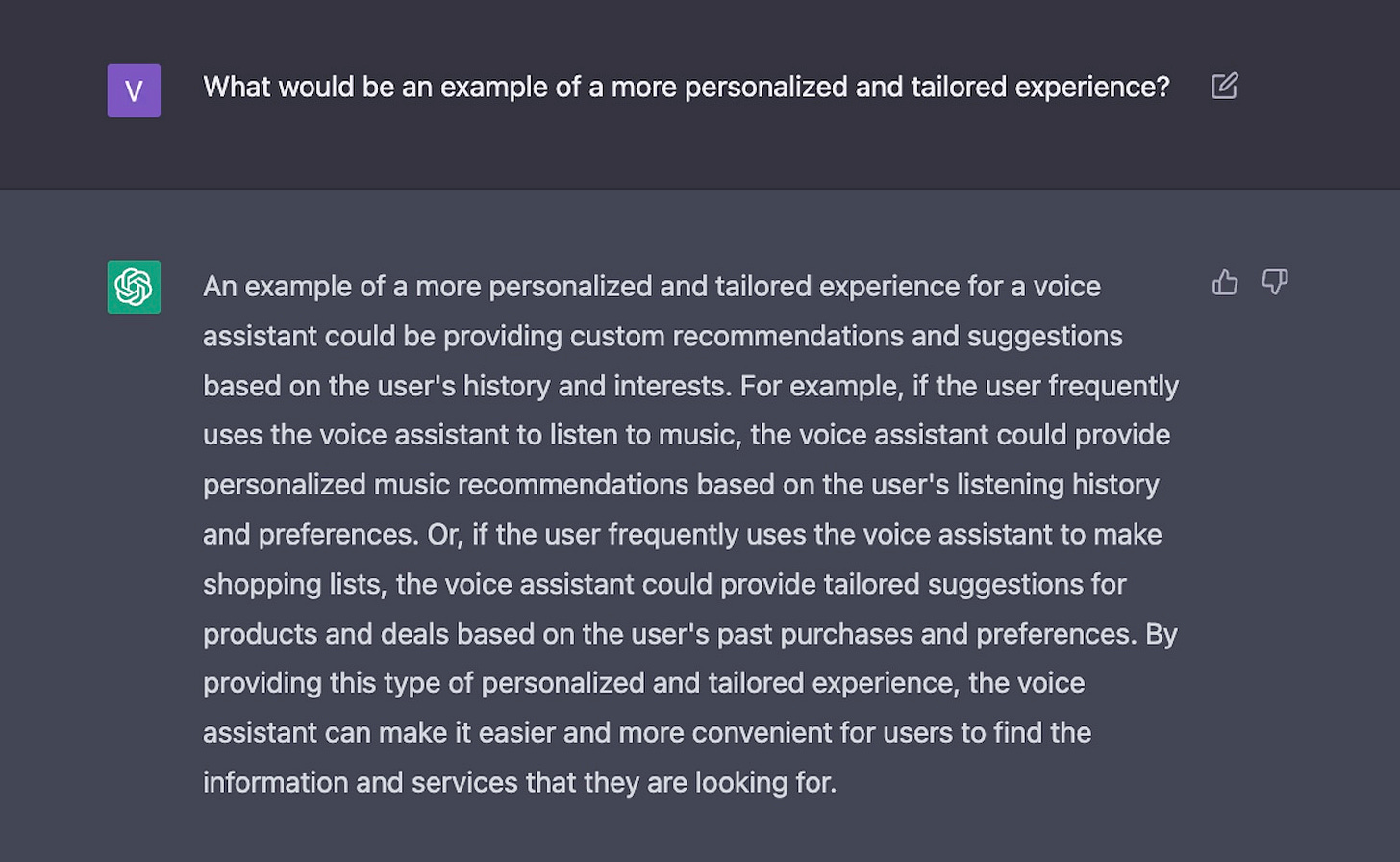

We might as well start out with an example, so you can get a better idea of how ChatGPT responds to questions. I decided to start by asking ChatGPT about voice assistants since I could quickly discern the quality of the answer.

An obvious question here is what the responses suggest about ChatGPT’s knowledge base. The opening page for the service has a clear disclaimer under the Limitations category: “Limited knowledge of the world and events after 2021.” So, you should assume the answers are based on general knowledge up through the middle of 2021.

From a technical perspective, the first response correctly identified three leading consumer voice assistants. That is the type of general knowledge we might expect from a human. On a conceptual level, the opening and closing were very much in line with what you might hear even from an expert in this technology field. “It depends” is a perfectly reasonable answer to this type of question, especially when followed by a closing statement about what it might depend upon: user “needs and preferences.”

In the follow-up, I asked a more detailed question, but with an indefinite subject that had to be interpreted from the preceding conversation. ChatGPT maintained conversational context and knew that I was still referring to voice assistants. It also knew that both Alexa and Google Assistant offer music streaming. Then it goes off the rails a bit.

Suggesting alternatives is something you will not typically see from a conversational assistant; most assistants answer the question as simply as possible. The error here is that the system doesn’t recognize it is recommending the same services as the alternatives. Alexa is the voice assistant behind Amazon Echo, and Google Assistant is the voice assistant behind Google Home. It is true that smart speakers might be a great way to use Alexa and Google Assistant for music listening. However, a better response would have presented the Echo and Home devices as ways to access those assistants or mentioned a true alternative, such as Samsung’s Bixby assistant.

Finally, you can see from the text that ChatGPT responds cogently and even eruditely. These are well-written responses, regardless of the content’s factual accuracy.

More ChatGPT Examples

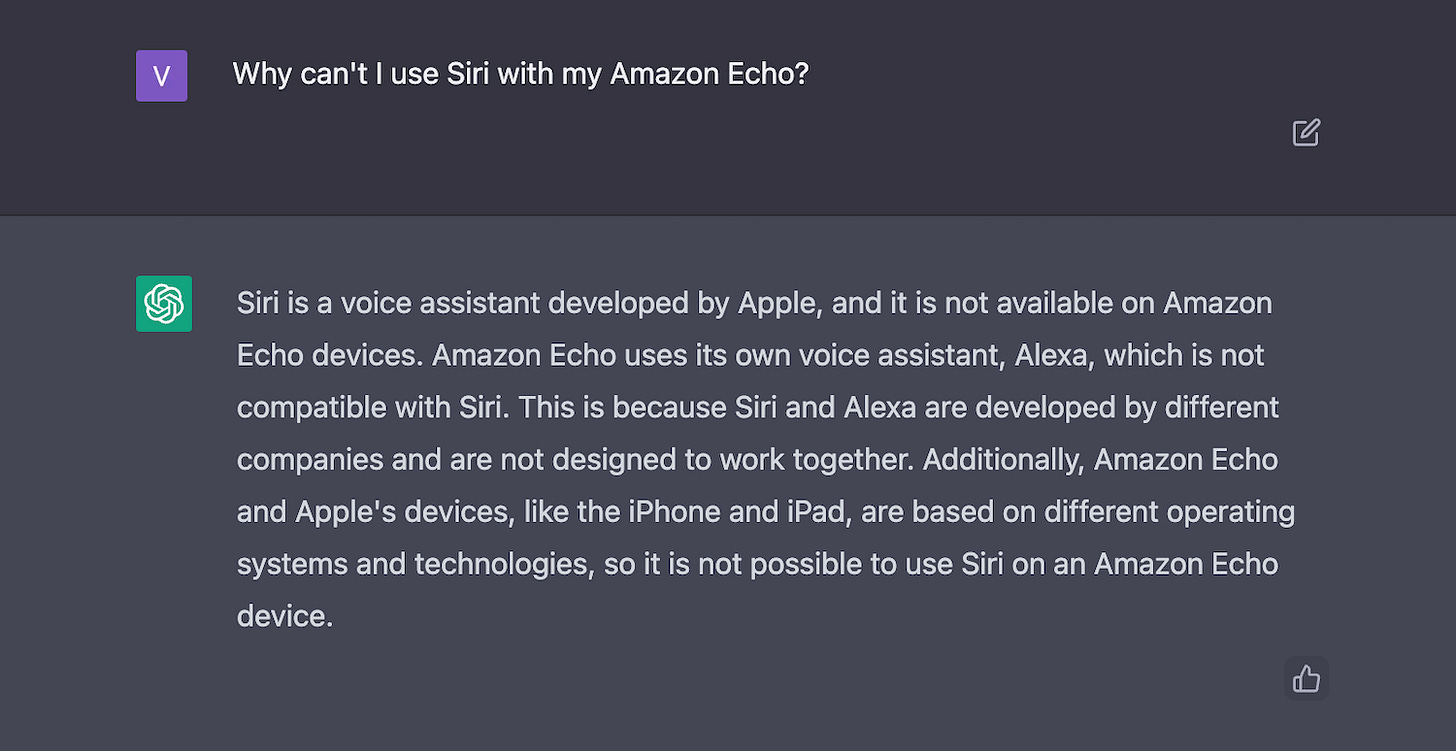

This response to a question about using Siri with Amazon’s smart speaker is technically correct and offers more detail than I anticipated. The first two sentences are what you might expect from a conversational assistant. It answers the question well. The additional details about the technology origins in different companies, the mention of other Apple devices, and the different operating systems offer useful supplementary information. This is what you might expect from a very well-composed Quora response to such a question.

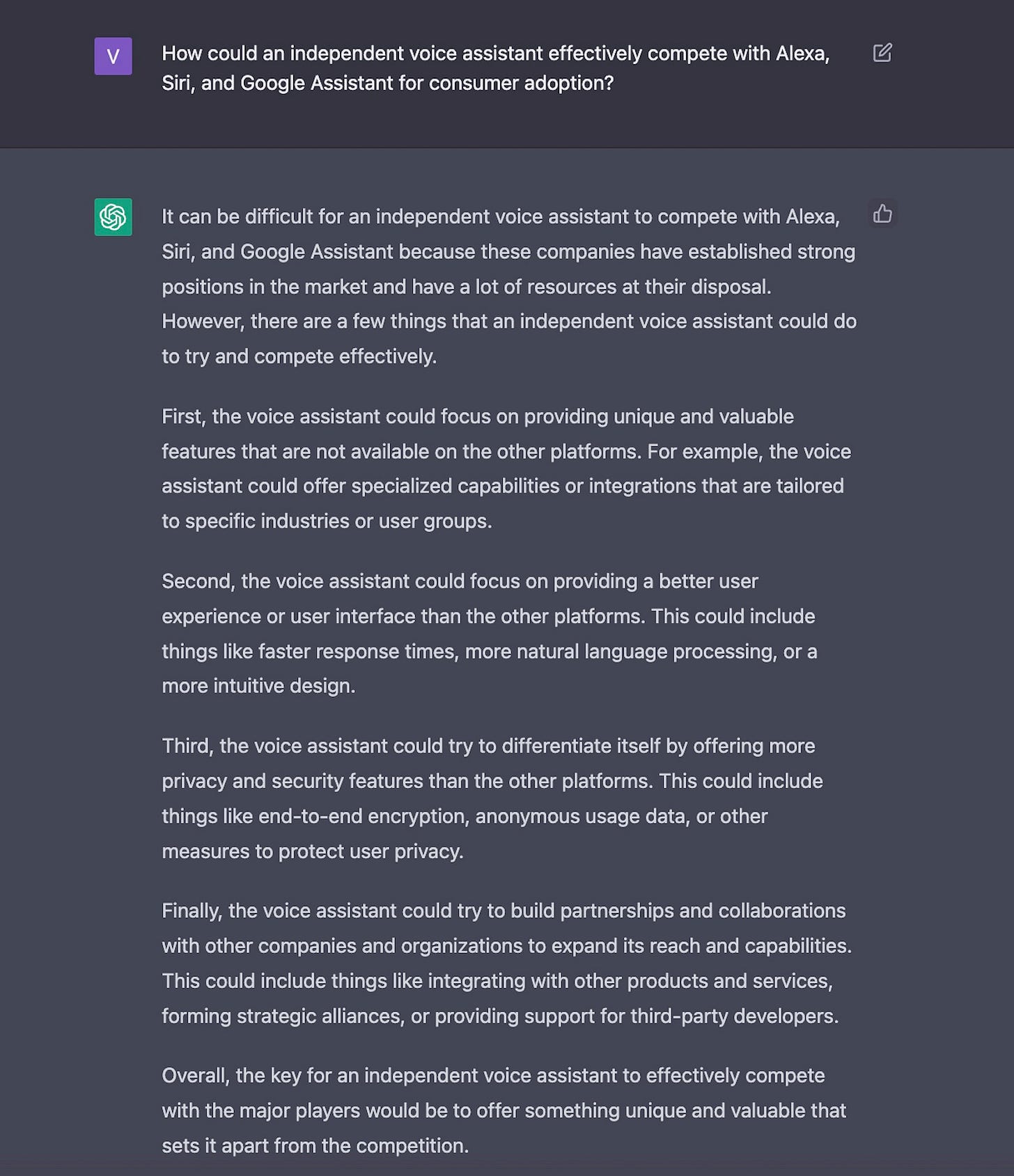

So, it seemed like a good idea to challenge ChatGPT with an even more nuanced conceptual question about voice assistants.

ChatGPT again provides an entirely reasonable, and even insightful, answer that would be well received by many people who work in the voice AI industry. The four suggestions boil down to building features that appeal to specific user groups, offering a better user experience, providing better privacy and security, and making the assistant easy to integrate with other services. This is a good list, and the strategy rests on an important marketing concept: differentiation.

However, what I particularly noticed was the introduction. Look at that first sentence. I only asked what a voice assistant could do. It would have been perfectly logical for a conventional AI system to start with the recommendations. Instead, it opened with a comment that this could be a difficult task, along with a reason for the challenge. This is very humanlike.

ChatGPT: Surpassing Google LaMDA?

Google LaMDA, first announced in May 2021, was designed for open-ended conversation. What has been made available publicly through Google’s AI Test Kitchen is impressive. The topic domains are limited to imagining a scenario, creating a list to tackle a task, or having an open-ended chat about dogs. I decided to ask how to launch an independent consumer voice assistant.

This is a highly relevant task list. You would need to do all of these things to launch a voice assistant. Granted, it tells me what to do tactically, but it shows no concept of the strategy I would need to develop to be successful. So, I asked the question again to see if I would receive a different response.

The second response offered higher-level task suggestions that included strategy-related topics. Again, every item is useful for someone thinking about their business plan or project. When you drill down into the marketing strategy, it offers a list of very tactical steps. The launch strategy generates a list much closer to what you need to do when creating a strategy for your voice assistant product to succeed in the market.

However, you can see it doesn’t offer any recommendations. It presents several steps you should add to your project plan. Granted, this is a list generator. LaMDA is not designed to hold a conversation with you on this topic, or at least not in the demonstration options available today.

Task Steps Versus Suggested Approaches

LaMDA may have all of the same capabilities as ChatGPT, but they are not generally available to the public. The public release has been more limited and arrived two years after the launch of GPT-3, so the capabilities may reflect a different approach, a less mature model, or some combination of the two.

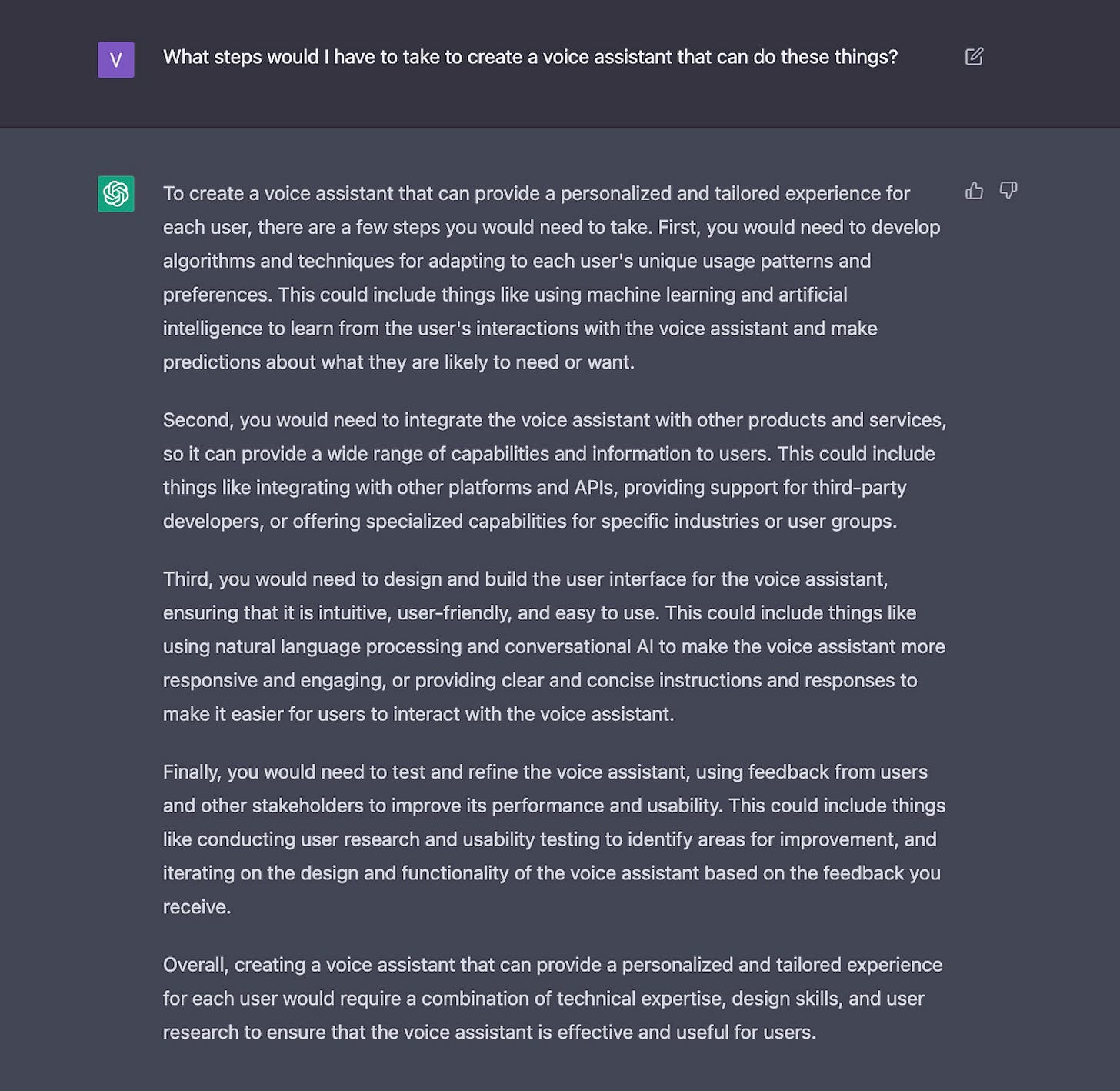

ChatGPT is not quite as sharp as LaMDA at creating the execution task list, but it is more humanlike in its conversational approach. For example, a follow-up question about one of the suggested independent voice assistant differentiation strategies yielded additional ideas about what features to consider.

It seemed logical to follow this line of questioning with a more tactical implementation question to see how well ChatGPT might handle the more LaMDA-like, list-based query.

The suggested tasks are again higher-level than what we received from LaMDA, but they show that ChatGPT can drill down into more detail. In addition, we once more see ChatGPT offering examples and suggestions about what to do, how to do it, and ideas to consider. The responses are more detailed while also being more humanlike.

LaMDA may be able to perform similarly, but not with the features it has exposed publicly. Certainly, no one would mistake the publicly available demonstrations for evidence of sentience, as one Google employee concluded. The sentience claim was surely far-fetched, but I have to assume that LaMDA is much more robust than what we have seen so far. The creative writing demonstration is very interesting, and the conversation with a tennis ball is an example of a simple question-and-answer conversation.

However, LaMDA behaves more like what I would expect from a conversational assistant today. The responses are relatively short and nearly uniform in their length. There is some detail, but they do not offer as much depth as ChatGPT or include those surprises that go beyond the question that was asked. ChatGPT also provides more variable-length answers that align with the type of question and optimal response. This also provides a more humanlike experience.

The Implications of ChatGPT

Contrasting ChatGPT with LaMDA is useful because both are LLM-based solutions. However, the more pertinent comparison today is with currently available chatbots and voice assistants. These conversational assistants excel at task execution. They can answer simple questions as long as the information already exists in a knowledge base, and they can execute tasks by integrating with digital services. They tend to struggle with back-and-forth conversations where context maintenance is important, with requests for which no explicit answer or intent response has already been recorded, and with conceptual ideas. ChatGPT represents a significant advance in these areas.

Marrying these two types of features would make a virtual assistant far more valuable. Add the ability to conduct real-time searches on a regularly updated knowledge base, and the frequency of use would skyrocket. Such an assistant could execute simple tasks, search for information, and serve in an advisory role when facing more complex questions or decisions. Maintaining context for the user over time would further solidify the assistant’s value.

In the end, it may not be necessary for a virtual assistant to pass the Turing Test to be extremely valuable. However, making assistants more humanlike, not just in the phrases they produce but also in the way they handle conceptual ideas, go beyond the precise query intent, and maintain context over time, would significantly increase their value to users.

Today’s voice assistants and chatbots are typically glorified user interface upgrades. ChatGPT suggests we may soon see a step-change in virtual assistant capabilities. Then again, it will probably take at least two years to mold this into a usable format that can scale. I expect it to be worth the wait.

Did you know Synthedia is currently analyzing several LLM services and will soon publish a report on the findings? Subscribe to ensure you don’t miss the forthcoming report.