CMU Study Shows Gemini Pro Trails GPT-3.5 and GPT-4 in Performance Benchmarks

Is Gemini Pro as robust as Google says?

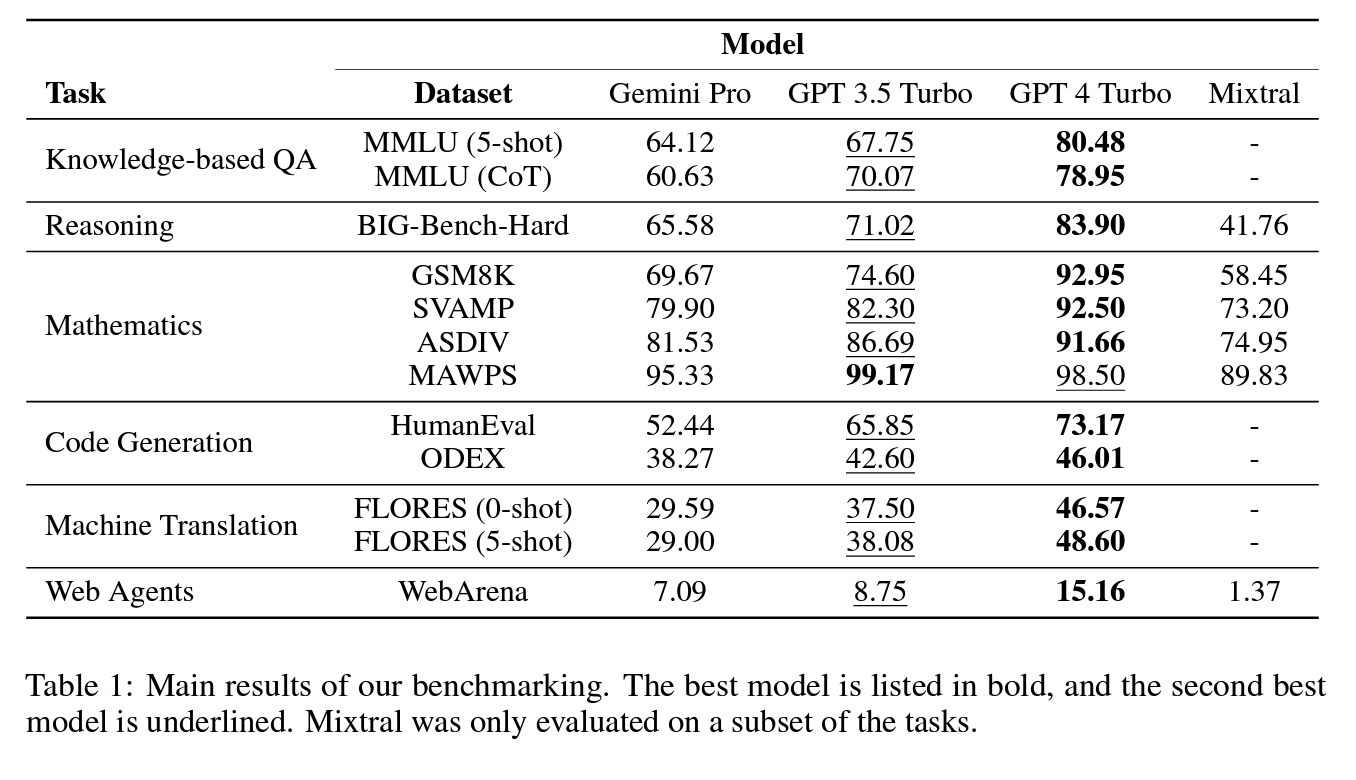

A new paper published by AI researchers at Carnegie Mellon University (CMU) shows that Google’s Gemini large language model (LLM) is outpaced by GPT-3.5 and GPT-4 on benchmark performance. Several of the findings run counter to data presented by Google at the time of Gemini’s public launch. The study’s authors report:

Gemini is the most recent in a series of large language models released by Google DeepMind. It is notable in particular because the results reported by the Gemini team are the first to rival the OpenAI GPT model series across a wide variety of tasks. Specifically, Gemini’s “Ultra” version reportedly outperforms GPT-4 on a wide variety of tasks, while Gemini’s “Pro” version is reportedly comparable to GPT-3.5…We found that across all tasks, as of this writing (December 19, 2023), Gemini’s Pro model achieved comparable but slightly inferior accuracy compared to the current version of OpenAI’s GPT 3.5 Turbo.

How Competitive is Gemini?

The CMU researchers’ independent analysis of Gemini and the GPT models highlights the challenge of relying solely on benchmark results that model creators report themselves.

The new research found that Gemini trails GPT-3.5 Turbo and GPT-4 Turbo in each of the benchmark tests conducted. The researchers add that Google’s guardrails could account for some of the difference: Gemini refused to answer numerous questions in the moral_scenarios and human_sexuality categories of the MMLU evaluation. Non-answers were marked incorrect for each of the models, so these refusals counted against Gemini’s score.
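To see how much a scoring rule like this can matter, here is a minimal sketch in Python. It compares accuracy when refusals count as wrong with accuracy over answered questions only. The function name, the `skip_refusals` flag, and the toy data are illustrative assumptions, not the paper's evaluation code or results.

```python
from typing import Optional, Sequence


def accuracy(predictions: Sequence[Optional[str]],
             answers: Sequence[str],
             skip_refusals: bool = False) -> float:
    """Return the fraction of correct predictions.

    A prediction of None stands for a refusal or an unparseable reply.
    By default it is scored as incorrect, matching the rule described above.
    """
    if len(predictions) != len(answers):
        raise ValueError("predictions and answers must have the same length")
    pairs = list(zip(predictions, answers))
    if skip_refusals:
        # Alternative rule: only score the questions the model answered.
        pairs = [(p, a) for p, a in pairs if p is not None]
    if not pairs:
        return 0.0
    correct = sum(1 for p, a in pairs if p == a)
    return correct / len(pairs)


# Hypothetical toy data, not taken from the paper.
answers = ["A", "C", "B", "D", "A"]
predictions = ["A", None, "B", None, "C"]

strict = accuracy(predictions, answers)
answered_only = accuracy(predictions, answers, skip_refusals=True)
print(f"refusals wrong: {strict:.2f}, answered only: {answered_only:.2f}")
```

In this toy case the two rules give noticeably different scores, which is why it helps to know how a benchmark treats non-answers before comparing models.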

However, the researchers noted that refusals were only one element of Gemini’s shortfall. According to the paper, “Gemini Pro performed somewhat more poorly at the basic mathematical reasoning necessary to solve the formal_logic and elementary_mathematics tasks.”

The charts at the top of the page (Figure 4) show where Gemini and the OpenAI models place in each topical category within MMLU. Gemini did beat GPT-3.5 in security_studies and high_school_microeconomics but trailed in other categories.

Table 1 above shows results for a variety of tests. Google says Gemini Pro’s MMLU 5-shot and Chain of Thought (CoT) scores are 71.8 and 79.1, respectively. The CMU researchers say their independent evaluation produced 64.1 and 60.6. Similarly, Google reports 75.0 for the BIG-Bench Hard benchmark, while the new research suggests 65.6. These results are materially lower than those of OpenAI’s GPT models and are consistent with other analyses presented in the paper.
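The size of these discrepancies is easy to work out from the figures quoted above. The short Python sketch below computes the absolute gap in points and the gap relative to Google's reported score; the dictionary layout and the `gaps` helper are illustrative choices, not anything taken from the paper.

```python
# (Google-reported, CMU-measured) Gemini Pro scores as quoted in this article.
scores = {
    "MMLU 5-shot": (71.8, 64.1),
    "MMLU CoT": (79.1, 60.6),
    "BIG-Bench Hard": (75.0, 65.6),
}


def gaps(table):
    """Map each benchmark to (absolute gap in points, gap as % of reported)."""
    result = {}
    for name, (reported, measured) in table.items():
        difference = reported - measured
        absolute = round(difference, 1)
        relative = round(100 * difference / reported, 1)
        result[name] = (absolute, relative)
    return result


for name, (absolute, relative) in gaps(scores).items():
    print(f"{name}: {absolute} points lower ({relative}% of the reported score)")
```

The largest gap is on MMLU with Chain of Thought prompting, where the independent result is 18.5 points below the figure Google reported.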

Benchmarking and Selecting LLMs

The results suggest that self-reported benchmark scores alone may not be a reliable gauge of LLM performance. Granted, LLM evaluation and benchmarking should be based on use case data, with general benchmark results as a supplement. You will rarely ask an LLM to complete a multiple-choice quiz, so your evaluation should mirror your data and use case as closely as possible. General benchmarks are a good pre-screening tool, but they should not be the basis for selecting an LLM.
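A use case evaluation does not need to be elaborate. The Python sketch below shows one possible shape for it: a list of prompts with expected content, a scoring rule, and a pass rate. Everything in it is a hypothetical stand-in, including `stub_model`, the sample cases, and the substring check; in practice you would swap in a call to the model under test and a scoring rule suited to your task.

```python
from typing import Callable, Iterable, Tuple


def evaluate(model: Callable[[str], str],
             cases: Iterable[Tuple[str, str]],
             is_correct: Callable[[str, str], bool]) -> float:
    """Run the model on each (prompt, expected) case and return the pass rate."""
    case_list = list(cases)
    if not case_list:
        raise ValueError("at least one test case is required")
    passed = sum(
        1 for prompt, expected in case_list
        if is_correct(model(prompt), expected)
    )
    return passed / len(case_list)


def contains_expected(output: str, expected: str) -> bool:
    """Simple scoring rule: the expected phrase appears in the output."""
    return expected.lower() in output.lower()


def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call."""
    if "refund" in prompt.lower():
        return "Refund approved for this order."
    return "I am not sure."


# Hypothetical cases; real ones should come from your own data.
cases = [
    ("Customer asks for a refund on a damaged item.", "refund approved"),
    ("Customer asks how long shipping takes.", "3-5 business days"),
]

score = evaluate(stub_model, cases, contains_expected)
print(f"pass rate: {score:.0%}")
```

Running the same cases against each candidate model gives a comparison grounded in your own workload rather than in a general benchmark.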

Another question is whether Google’s Gemini models are really on the cusp of surpassing the GPT model family. The data presented in the most recent academic research paper suggests OpenAI still has a comfortable lead over Google Gemini. Maybe Gemini Ultra will be a true competitor to GPT-4, but we will have to wait for some independent analyses to confirm that notion.
