D-ID Lets You Talk to a ChatGPT-Powered Avatar

The company also announced a new streaming API

D-ID has been busy. At Mobile World Congress last week, the company introduced a new streaming API for its lifelike digital people. Yaniv Levi, vice president of product marketing at D-ID, told me:

“Previously you would generate the video and would need to wait for video to be ready and then play. With the new real-time streaming capabilities, you can generate the video, and the video immediately starts streaming to the user while it keeps on processing.”

This lets the user experience support dynamic interactions with the virtual human character. In other words, the responses don’t need to be pre-scripted. They can be generated in real time, with the text converted to speech and to avatar movement simultaneously. To demonstrate this capability in action, D-ID introduced the ability to speak with one of its virtual humans, which responds using ChatGPT.

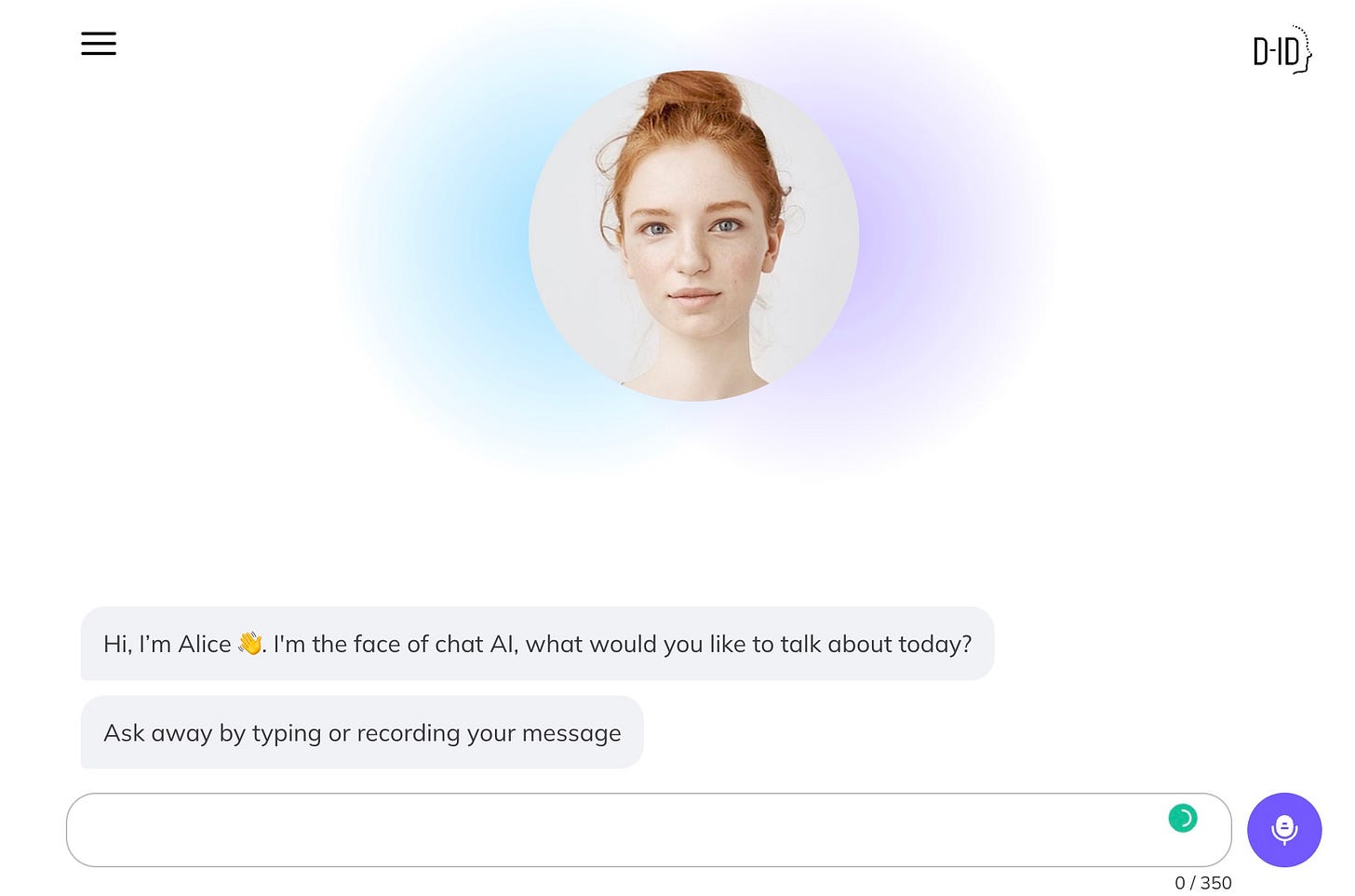

Putting a Face on ChatGPT

The chat.D-ID web app is an interesting way to interact with ChatGPT. You can listen to the responses as opposed to reading the generated output. More interesting is how it changes the experience. No longer are you reading text generated by the faceless borg at OpenAI. The web app was deployed initially with D-ID’s Alice avatar, so you hear the responses from a young woman speaking with you. Below is a screen recording of one of my conversations with Alice.

Gil Perry, D-ID’s CEO and co-founder, told me in Barcelona last week that very soon you will be able to choose from different avatars or upload a photo that will be your personalized face of ChatGPT. This includes uploading a picture of yourself so that your conversation will be with your own digital twin. D-ID processes photos of human faces and humanlike characters and can animate mouth and head movements to match the text generated by ChatGPT or other systems.

He also said that using avatars to interact with large language models can make them more accessible to a wide range of people who may not be using them today.

"The switch from text interface to speaking face-to-face makes the experience more impactful, enjoyable, and engaging and helps people better understand the information it delivers. With chat.D-ID, conversations with AI will become accessible to a far wider audience, including children, the elderly, people with disabilities, and billions of people worldwide beyond the tech community."

Compressing Latency

I wrote previously about D-ID’s Creative Reality studio and its integration with GPT-3 and Stability AI. It is an easy-to-use tool for creating your own videos with an animated virtual human presenter. One of the first things I noticed when using it was how quickly it rendered the video output. That speed is certainly necessary for real-time streaming of conversational content delivered by a virtual human.

Perry told me that using D-ID’s streaming API alone is about 80% faster than what you see with the chat.D-ID demo today. He expects an update to chat.D-ID in a couple of weeks that will shorten the delay between finishing your request and receiving the response from Alice. For the beta launch, D-ID’s streaming API waits for the entire text response from GPT-3 before starting to speak. The next iteration will combine OpenAI’s ChatGPT streaming API with D-ID’s streaming API, which he expects to reduce response times dramatically.
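
To make the latency point concrete, here is a minimal sketch of the general idea: rather than waiting for the full text response, a client can cut the streamed text into sentences and hand each one to the speech and avatar stage as soon as it is complete. This is my own illustration, not D-ID’s or OpenAI’s code. The function name `sentences_from_stream` and the stand-in `fake_llm_stream` are hypothetical, and the sentence splitting is deliberately naive (it would also split on decimals and abbreviations).

```python
from typing import Iterable, Iterator

SENTENCE_ENDINGS = (".", "!", "?")


def sentences_from_stream(fragments: Iterable[str]) -> Iterator[str]:
    """Yield each complete sentence as soon as its closing punctuation arrives.

    Hypothetical helper: `fragments` stands in for any streamed text source.
    """
    buffer = ""
    for fragment in fragments:
        buffer += fragment
        # Emit every finished sentence currently sitting in the buffer.
        while True:
            cut = next(
                (i for i, ch in enumerate(buffer) if ch in SENTENCE_ENDINGS), -1
            )
            if cut == -1:
                break
            sentence = buffer[: cut + 1].strip()
            buffer = buffer[cut + 1:]
            if sentence:
                yield sentence
    # Flush any trailing text once the stream ends.
    tail = buffer.strip()
    if tail:
        yield tail


def fake_llm_stream() -> Iterator[str]:
    # Assumption: a made-up token stream, not a real API response.
    yield from ["Hello", " there.", " I am", " Alice", ". How can", " I help?"]


if __name__ == "__main__":
    for sentence in sentences_from_stream(fake_llm_stream()):
        # In a real pipeline, each sentence would go to the speech/avatar step here.
        print(sentence)
```

Because the function is a generator, the first sentence is available after only two fragments have arrived, which is where the time saving over the wait-for-everything approach would come from.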

This strikes me as an interesting technology demo, and I recommend you give it a try. While speaking with Alice, you can imagine her replacing a website chatbot or answering questions in a mobile app. It is a different experience and could change the way people think about virtual humans and how they will be used.