Databricks Raises $500M at $43B Valuation on Rapid Revenue Growth

$1.5B revenue run rate and 10k+ customers is more than a generative AI story

Databricks recently made headlines for its high cash burn, which The Information reported would lead to losses of about $450 million in each of the next two years, after losses doubled to $430 million last year. A new infusion of $500 million from investment firms and companies including NVIDIA, Capital One, Fidelity, and a16z should help plug that gap.

In addition, Databricks shared some data on its recent financial performance. The company said that last quarter it surpassed:

a $1.5 billion annual revenue run rate for the first time, while posting its largest revenue quarter to date

10,000 total customers

300 customers that will spend more than $1 million over the next year

This momentum, combined with optimism around generative AI, outweighed concerns about the reported cash burn and resulted in a $43 billion valuation.

Not Exactly a Generative AI Swoon

Databricks is definitely benefiting from the interest in generative AI, but it was already a hot private investment two years ago. In August 2021, Databricks raised $1.6 billion at a $38 billion valuation, when it had a reported 5,000 customers. That was up from a $28 billion valuation in a funding round six months earlier, on a reported $425 million in annual recurring revenue (ARR). It was also well before the ChatGPT moment.

Over the past two years, Databricks has doubled its customer base, more than tripled its ARR, and increased its valuation by … wait for it … 13%. It could be that Databricks is growing into an optimistic valuation set in 2021. However, this is not a newly lit generative AI sparkler. The company was founded more than a decade ago.
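The growth figures above can be checked with a few lines of arithmetic. This is a rough sketch using only the numbers reported in this article; note the assumption that the early-2021 ARR and the 2023 run rate are comparable, even though the two metrics may not be defined identically. The revenue multiples at the end are an illustrative comparison, not figures Databricks has published.

```python
# Figures reported in the article, in USD. Assumption: the $425M ARR
# (early 2021) and the $1.5B run rate (2023) are roughly comparable.
customers_2021, customers_2023 = 5_000, 10_000
arr_early_2021, run_rate_2023 = 425e6, 1.5e9
valuation_early_2021 = 28e9  # round that reported the $425M ARR
valuation_aug_2021, valuation_2023 = 38e9, 43e9

customer_growth = customers_2023 / customers_2021          # 2.0x
revenue_growth = run_rate_2023 / arr_early_2021            # ~3.53x
valuation_change = valuation_2023 / valuation_aug_2021 - 1  # ~0.132

# Illustrative valuation-to-revenue multiples (hypothetical comparison)
multiple_early_2021 = valuation_early_2021 / arr_early_2021  # ~65.9x
multiple_2023 = valuation_2023 / run_rate_2023               # ~28.7x

print(f"customers: {customer_growth:.1f}x")
print(f"revenue: {revenue_growth:.2f}x")
print(f"valuation: +{valuation_change:.0%}")
print(f"multiple: {multiple_early_2021:.0f}x -> {multiple_2023:.0f}x")
```

Under these assumptions, revenue grew roughly 3.5x while the valuation rose about 13%, so the implied revenue multiple fell from about 66x to about 29x, which is consistent with the idea of a company growing into a valuation set in 2021.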

Databricks CEO Ali Ghodsi indicated to VentureBeat that the company didn’t need the cash. Instead, he positioned the funding round as a strategic way to solidify partnerships.

“This is not like we need cash,” he said. “Our poor finance team was just looking at doing the strategic partnership…because of the leaks, now everyone wants to invest.”

But Still, a Generative AI Future

Enterprises have many data needs that predate generative AI, and serving them makes up the bulk of Databricks’ current business. However, the company is also steering into generative AI and its unique data needs. It completed the acquisition of open-source large language model (LLM) developer MosaicML in June and announced its new LakehouseIQ product the same month. Databricks described the product in its announcement:

Today, we are thrilled to announce LakehouseIQ, a knowledge engine that learns the unique nuances of your business and data to power natural language access to it for a wide range of use cases. Any employee in your organization can use LakehouseIQ to search, understand, and query data in natural language. LakehouseIQ uses information about your data, usage patterns, and org chart to understand your business’s jargon and unique data environment, and give significantly better answers than naive use of Large Language Models (LLMs).

Large Language Models have, of course, promised to bring language interfaces to data, and every data company is adding an AI assistant, but in reality, many of these solutions fall short on enterprise data. Every enterprise has unique datasets, jargon, and internal knowledge that is required to answer its business questions, and simply calling an LLM trained on the Internet to answer questions gives wrong results. Even something as simple as the definition of a “customer” or the fiscal year varies across companies.

Matei Zaharia, co-founder and chief technologist of Databricks Inc., commented in a June interview on SiliconANGLE’s theCUBE:

With generative AI, one of the new challenges is you want to train on lots of data. You maybe get it from the web or other places, but at the same time, some of it is wrong. Maybe some of the policies around copyrighted data and use that are changing over time. So, you naturally want to trace exactly what data went into this and be able to fix that as you release your applications. And I think, increasingly, just through regulation, you’ll be required to … explain and document what went in there.

You can see how the MosaicML acquisition supports this strategy. While Databricks can be used with any LLM, MosaicML’s open-source models and its focus on efficient training and inference may appeal to users who want to deploy custom applications. Generative AI solutions don’t start with either a foundation model or a data source. They start with both.

Most LLM activity so far has started with proprietary third-party models such as OpenAI’s GPT-3/GPT-4, NVIDIA NeMo, or Anthropic’s Claude. Databricks’ Ghodsi told VentureBeat:

Every one of these CEOs, they want to build their own large language models. That becomes their intellectual property for their business, which then they can use to compete with their competitors and get ahead using generative AI.

It is too early to validate this thesis, as most enterprises are still in the early stages of their LLM adoption journey. If enterprises do embrace the added responsibility of building custom LLMs, their trusted data partner may be a more attractive option than a model with uncertain long-term support. Besides, Meta’s open-source Llama 2 models will not be everyone’s preferred choice, for various reasons.

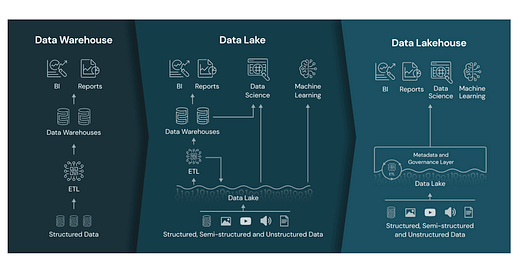

Either way, enterprises need data lakes, data warehouses, and databases for their existing application stack, as well as the new generative AI solutions. The capability to support the old and the new could be Databricks’ biggest advantage.