Detecting Deepfakes - Pindrop Demos its Anti-fraud Voice Clone Detection for Synthedia

Protecting against the downside of deepfake technology

Amit Gupta, vice president of product management, research, and engineering at Pindrop Security, chose Synthedia for the first public demonstration of the company’s deepfake detection software.

He took the stage at Synthedia 3 to offer a live demonstration of deepfake voice detection, something I have not seen anywhere else. I have heard people talk about deepfake detection, but you will be hard-pressed to find many example videos showing it in action, much less a live real-time demonstration.

You can watch the entire video (recommended) by clicking the image above or just skip to the demo segment here. The demo starts off with a test of recorded video that includes both real and deepfake content. Gupta then opens Pindrop’s deepfake detection software to show it running in real-time.

The Various Types of Voice Fraud Attacks

Gupta began his talk by outlining the problem. This included the key challenges facing enterprises, the various types of voice fraud attacks, and their increasing technological complexity.

One of the challenges that recently came up for every voice-based authentication tool is the emerging threat of deepfakes. We have all heard and seen a news article coming up every day. In the last 30 days, we have received over 30 different customer inquiries in terms of, “How do we protect against deepfakes?”

There is an entire spectrum of voice attacks, starting with the age-old one, where someone gets a clandestine recording of me and tries to impersonate me. Or, for a person who is on several webinars or is really active on social media, if someone gets hold of several different recordings, they try to concatenate words from different recordings to make phrases that the person never said, and so impersonate that person as well. And we have all seen that in many different forms in the past.

The other one that is a little bit more complex to create and detect is where the text-to-speech (TTS) engines come in. The attacker is still in the loop and they are typing the responses, but then they are using the TTS engine to convert that into speech, either to just sound like any human being or to sound like a targeted human being. But the main difference here is that the person is typing in the responses. This is actually one of the attacks we started seeing at some of our customers, and you can even hear the attacker typing in the responses. It is funny to listen to those calls and hear the keyboard keys typing there.

What makes for a little bit more of a complex attack is the advancement in generative AI, with the large language models, combining that with the speech-to-text technologies. You can literally take the human out of the loop in that attack. A conversation is happening with a person, and the response of that person is analyzed in real-time, through NLP and leveraging large language models. Then context-relevant messages generated by a machine are fed into a text-to-speech engine. So, a bot is talking to another bot and there is a humanlike conversation being had.

This is definitely more complex because the human in the loop aspect is removed. There is no typing involved. There are some delays, which used to be unnatural delays in the speech synthesis attack, that are taken away with automation. But, it is still possible to detect some of it and pretty much all of it.

[In] the most complex attack…the speaker is speaking in real-time, but in real-time that voice is getting converted to be somebody else's voice. There are various technologies, such as voice modulation, that are used.

Addressing Multiple Attack Types

There are a lot of useful and entertaining applications of deepfakes. However, the quality and ease of use have made these technologies accessible to anyone. Sometimes that means YouTubers and gamers have a bit more fun creating or playing. More recently, it has led to more fraud, from individual shakedowns to impersonating people to the businesses that provide them services.

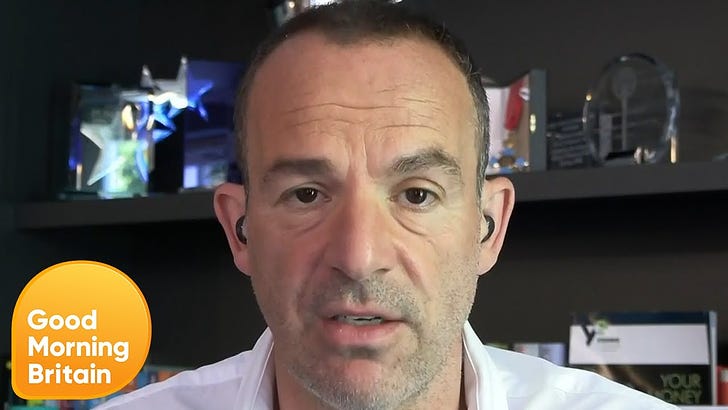

In July, Synthedia featured an example of this when British consumer advocate Martin Lewis showed up in a video recommending people invest in a new company. Some consumers then invested in the company thinking it was the real Martin Lewis in the video. It was a scam. The company didn’t exist.

There are many other examples of these consumer-targeted fraud scenarios. Enterprises have an even larger potential risk exposure from people impersonating their customers and employees.

It is an arms race between criminals attempting to use technological advances to commit fraud and innovators like Pindrop creating tools to accurately detect deepfakes in real-time. It is also a race that is having a real financial impact and more risk is on the horizon. Let me know what you think about the demo and the talk.
