Drake, Bad Bunny, Eminem, Joe Rogan, and Indiana Jones - Deepfakes on The Rise

Is the tech good, bad, or just in need of an easy way to validate authenticity?

Deepfakes are now in the news weekly. It used to be the monthly article quoting a retired FBI agent warning about fraud and invasions of privacy. Today, it ranges from Hollywood movies and new digital experiences to viral TikTok videos and Wall Street earnings calls.

The upcoming Indiana Jones movie reportedly has a 25-minute deepfake sequence that de-ages the eighty-year-old actor Harrison Ford to 35. We have seen fleeting glances of de-aging in TV shows like The Mandalorian. We have also seen several-minute performance pieces on America’s Got Talent and social media. Longer sequences confirm how quickly the technology is progressing.

Deepfake Takedowns

Deepfake videos have received the most attention in the past. That changed recently when TikToker ghost writer 777 racked up 10 million views for his audio deepfake of Drake and The Weeknd. It also captured over 600,000 listens on Spotify, and several hundred thousand on YouTube and Soundcloud before being taken down. Universal Music Group made a request to social media and music streaming services to remove the AI-generated media.

The deepfake involved voice clones of the singers along with AI-generated song lyrics and music in their style.

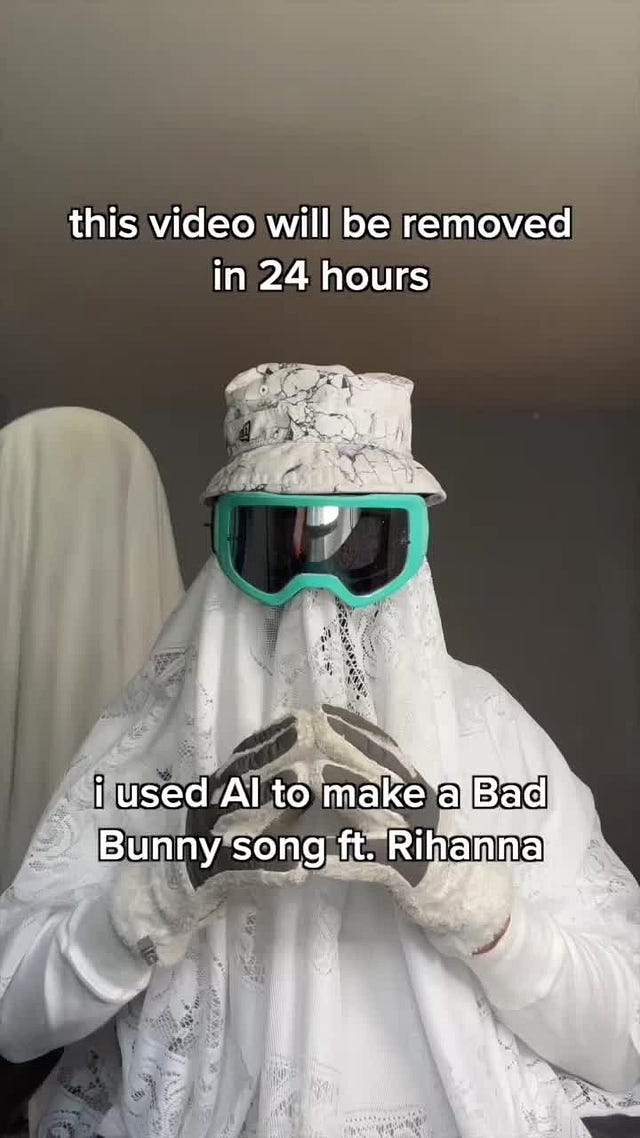

This past week, ghost wrider 777 (apparently the same person with a slightly altered account name) was at it again with a deepfake featuring Bad Bunny and Rihanna. That song accumulated 1.2 million TikTok views and hundreds of thousands of listens on Soundcloud.

Musical artist Grimes said she would collaborate with anyone looking to create new tracks using her voice or likeness and split royalties 50/50. Singer Holly Herndon has made her synthetic voice available to anyone who wants to use it. So, this is not all about takedowns and a slowdown of the technology.

A Universal Music executive said the company planned to use deepfake and synthetic media technology with artists. However, the company does not want others to use deepfakes mimicking the style of its contracted artists. I suspect this extends to a prohibition on fair use as well.

These events follow David Guetta’s creation of an Eminem deepfake rap in one of his concerts in February. He called the piece Emin-AI-em. Guetta said at the time:

I discovered those websites about AI. Basically, you can write lyrics in the style of any artist you like. So I typed, “Write a verse in the style of Eminem about Future Rave.” And I went to another AI website that can recreate the voice. I put the text in that and I played the record and people went nuts!

It’s not just the iconoclasts and rebels that are using these techniques. The shapers of culture are adopting synthetic media as generative AI tools have become more robust and easier to use. Key adoption barriers are falling, and this is accelerating growth in 2023.

This is leading financial analysts to question CEOs about their approach to generative AI and deepfakes. Spotify CEO Daniel Ek and Universal Music Group CEO Lucian Grainge both answered questions on these topics in their recent earnings calls.

Even Joe Rogan has been brought into the discussion with a new deepfake podcast featuring guests who have not appeared on the popular Joe Rogan Experience. We have reached a point where celebrities, creative rights holders, and media distributors must have an approach to handle these issues when they arise.

The Flavors of Deepfake

Video and audio are the media that generally come to mind when discussing deepfakes. However, there are four flavors of deepfake:

Video

Audio

Image

Text

It is worth noting that news media reporters, business executives, and celebrities use the term deepfake in different ways. Some use it as a catch-all term to describe synthetic media and generative AI technologies. Others suggest that all deepfakes “seek to deceive viewers with manipulated, fake content.”

These are antiquated and misguided ideas. Many high-profile deepfakes have been fully transparent about their origin and authenticity. The David Guetta Emin-AI-em is an example.

Other deepfakes are intended to mislead, either to see what the creator can get away with or as performance art. Regardless, 2023 is the year when social institutions and digital distribution platforms are starting to think through their policies. TikTok established a new synthetic media policy in March, and every other platform is surely considering revisions to its own policies.

Aside from policy, what is missing from the deepfake debate today is a set of tools that publishers can use to evaluate digital content authenticity. These tools might even be useful for consumers and offer a deepfake or authenticity score for any media. However, since deepfake media can manifest as video, audio, images, or text, there is no single solution for this.

Truepic and Adobe are promoting authenticity services that enable good-faith actors to record the origin of their media and provide transparency to synthetic media consumers. That may be a step in the right direction, but it doesn’t solve the problem of bad actors who intentionally mislead. For those situations, we need easy-to-use and reliable detection tools. This might be even more critical during election cycles.

Deepfake technologies such as voice clones are now so easy to use that they are gaining widespread adoption. Most of that will be for good reasons. We don’t need to stop deepfake technology and it isn’t likely we can slow it down. What we need are some tools that can help us identify when something purported to be real is actually a deepfake.

Of course, we should watch out for the downside of new tools that yield a lot of false positives and assign the deepfake label to authentic work. There are two important metrics:

Correctly identifying deepfakes

Avoiding falsely labeling genuine media as deepfakes

The tools with the most potential in this space will excel in both metrics. Those that excel in the former but are poor in the latter are sure to create a lot of problems. And minimizing false positives might be the harder challenge.
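
To make the two metrics concrete, here is a minimal Python sketch of how they could be computed for a detection tool. Everything in it is an assumption for illustration: the names `DetectorScores` and `score_detector` are hypothetical, and the labels and verdicts are made-up data, not results from any real detector.

```python
from dataclasses import dataclass


@dataclass
class DetectorScores:
    detection_rate: float        # share of actual deepfakes that were flagged
    false_positive_rate: float   # share of genuine media wrongly flagged


def score_detector(is_deepfake, flagged):
    """Compare ground-truth labels with a detector's verdicts (hypothetical helper)."""
    if len(is_deepfake) != len(flagged):
        raise ValueError("labels and verdicts must have the same length")
    pairs = list(zip(is_deepfake, flagged))
    # Deepfakes the detector correctly flagged.
    true_pos = sum(1 for truth, guess in pairs if truth and guess)
    # Genuine items the detector wrongly flagged.
    false_pos = sum(1 for truth, guess in pairs if not truth and guess)
    fakes = sum(1 for truth in is_deepfake if truth)
    genuine = len(is_deepfake) - fakes
    return DetectorScores(
        detection_rate=true_pos / fakes if fakes else 0.0,
        false_positive_rate=false_pos / genuine if genuine else 0.0,
    )


# Made-up example: 4 deepfakes followed by 6 genuine clips.
labels = [True, True, True, True, False, False, False, False, False, False]
verdicts = [True, True, True, True, True, True, True, False, False, False]
scores = score_detector(labels, verdicts)
print(scores)
```

In this invented example the tool catches every deepfake but also flags half of the genuine clips, which is the kind of result that excels in the first metric and fails in the second.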

TikTok's New Rules on Deepfakes and Other Synthetic Media

TikTok first established guidelines about the use of synthetic media and deepfakes in August 2020. The guidelines were last updated a year ago, adding three words, “significant” and “other persons.” For about two-and-a-half years, 27 or 30 words have sufficed to cover TikTok’s approach to AI-generated media. Times, and technology, have changed.

Deepfake Transparency and Identity Rights Get Boosts from Truepic and Metaphysic

Deepfake and digital twin technologies pose several novel challenges. One concern is that deepfake quality is getting so high that viewers increasingly face difficulty distinguishing real from synthetic media. This will become even harder as more of our media mixes the two formats.