Google Gemini 1.5 and Flash LLMs Show Significant Advances Hidden in Research

Progress in math, instruction following, and long-tail expertise

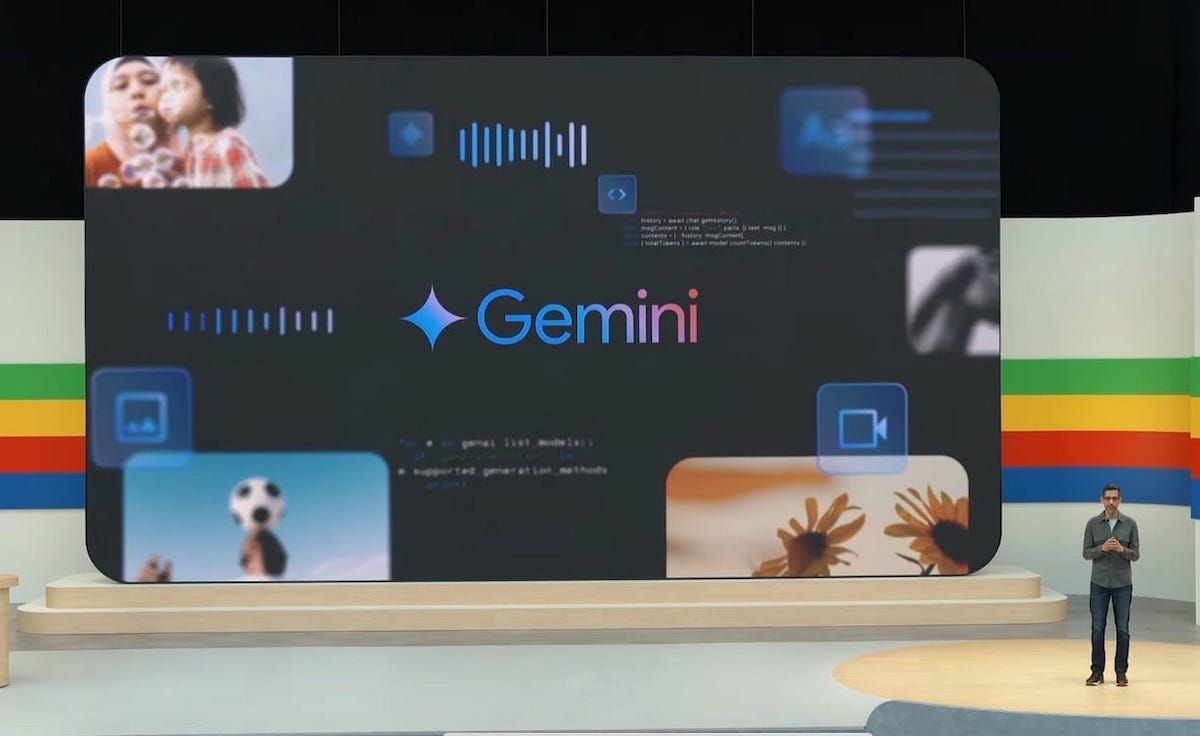

Google is developing a habit of releasing research papers and then quietly adding information to the versions hosted on DeepMind servers without versioning. This makes it harder to know what was added and when. During Google I/O, the company showed off the latest Gemini 1.5 Pro and Flash large language models (LLMs) but didn’t mention the new research data in its Gemini 1.5 paper. However, you can see the magnitude of the change because the original paper was also uploaded to arXiv.org.

An April 25, 2024, version of Google’s research paper titled “Gemini 1.5: Unlocking multimodal understanding across millions of tokens of context,” hosted on arXiv.org, has a total of 77 pages. The version of the paper with that same name hosted on Google DeepMind servers runs 153 pages.

This decision to withhold some of the research from the initial paper publication is understandable. The expanded version includes information about the Gemini 1.5 Flash LLM that Google likely did not want to share publicly before the I/O developer conference. That said, the paper roughly doubled in length over two weeks, and the additions go well beyond the 1.5 Flash model. Some of these changes are particularly notable, and it seems odd that Google isn’t drawing more attention to them.

Better Reasoning

One of the most interesting sections is Advancing Mathematical Reasoning. The paper discusses a math-specialized model that is given additional inference time for complex problems.

In training Gemini 1.5 Pro, we want to understand how far we can push the quantitative reasoning capabilities of LLMs, with the goal of solving increasingly challenging and open-ended problems. Solving such problems typically requires extensive domain-specific study. In addition, mathematicians often benefit from extended periods of contemplation while formulating solutions; written mathematics tends to focus on the final result, obscuring the rich thought processes that precede it…We aim to emulate this by training a math-specialized model and providing it additional inference time computation, allowing it to explore a wider range of possibilities.

The result was a significant improvement in math-related benchmarks and tests. It is unclear what proportion of the improvement should be attributed to additional training as opposed to the longer inference time. However, the net result is notable.

The Gemini 1.5 Pro model scored a respectable 67.7 on the MATH benchmark, which landed it halfway between the scores of Anthropic’s Claude 3 Opus and OpenAI’s GPT-4 Turbo. The Math-Specialized 1.5 Pro model scores well above both models in the MATH, AIME 2024, Math Odyssey, HiddenMath, and IMO-Bench benchmarks. Google has taken its share of criticism for trailing OpenAI and Anthropic in a technology segment it invented, but we are now seeing the combined DeepMind and Google AI innovation engine showing off its capabilities.

Instruction Following

A topic that was significantly expanded between the paper’s April and May editions was Instruction Following. The first two sentences of the section are the same in both editions. They then diverge around the number of customer prompts developed by human raters regarding “real-world use cases.” The April edition included 406 prompts relating to “generating formal and creative content, providing recommendations, summarizing and rewriting texts, and solving coding and logical tasks.” By May, that list had grown to 1,326 prompts and added several categories.

In addition, they capture enterprise tasks such as information extraction, data/table understanding, and multi-document summarization. These prompts are long, 307 words on average. They have between one to tens of instructions with a mean of about 8. Different from the Gemini 1.0 Technical Report (Gemini-Team et al., 2023), we also use another set of 406 prompts from human raters that covers varied topics and instruction types. These prompts are shorter, 66 words on average, with one to more than a dozen instructions (average count is five).

For evaluation, human annotators were asked to rate whether a response follows (or not) each of the instructions present in the prompt. We aggregate these human judgements into two metrics: per-instruction accuracy (the percentage of instructions over the full evaluation set that are followed) and full-response accuracy (percentage of prompts where every instruction was followed).

Our results for the two prompt sets are shown in Table 13. The Gemini 1.5 models show strong gains on the set of 1326 long and enterprise prompts: the 1.5 Pro model has 32% improvement in response accuracy from the 1.0 Pro model, by fully following 59% of all the long prompts. Even the smaller 1.5 Flash model has a 24% increase here. At instruction-level, the 1.5 Pro model reaches 90% accuracy.

For the set of 406 shorter prompts, the Gemini 1.5 models follow 86-87% of the diverse instructions. 65% of the prompts were fully followed, performing similar to Gemini 1.0 Pro.
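The two metrics described in the excerpt above can be illustrated with a short sketch. This is only a minimal illustration of the definitions as quoted, not Google’s evaluation code. The function names and the sample ratings are hypothetical; each inner list holds one human judgment (followed or not) per instruction in a single prompt.

```python
from typing import List


def per_instruction_accuracy(ratings: List[List[bool]]) -> float:
    """Share of all instructions, pooled over the full set, that were followed."""
    total = sum(len(prompt) for prompt in ratings)
    if total == 0:
        raise ValueError("no instructions to score")
    followed = sum(sum(prompt) for prompt in ratings)
    return followed / total


def full_response_accuracy(ratings: List[List[bool]]) -> float:
    """Share of prompts in which every instruction was followed."""
    if not ratings:
        raise ValueError("no prompts to score")
    return sum(all(prompt) for prompt in ratings) / len(ratings)


# Made-up ratings for three prompts (illustrative only, not data from the paper).
ratings = [
    [True, True, True],         # every instruction followed
    [True, False, True, True],  # one instruction missed
    [True, True],               # every instruction followed
]

print(f"per-instruction accuracy: {per_instruction_accuracy(ratings):.3f}")
print(f"full-response accuracy:   {full_response_accuracy(ratings):.3f}")
```

Because a single missed instruction fails the whole prompt, full-response accuracy can sit well below per-instruction accuracy, which is consistent with the gap between the 90% and 59% figures quoted for the long prompts.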

Long-tail GenAI Tasks

One more area to highlight is the addition of data around long-tail instructions. These exercises test LLMs on their ability to answer expert-level questions.

In Expertise QA, we engage with in-house experts that have formal training and experience in various domains (e.g., history, literature, psychology). They produced hard, sometimes complex questions (e.g., As films began using sound for dialogue, how did the changing use of visual metaphor affect the ways audiences expected narratives to develop in movies? or How does Vygotsky’s theory of internal speech apply across multilingual language acquisition?).

The same experts then rated and ranked model responses to their respective questions. Models were evaluated according to their ability to answer such questions with a high degree of accuracy, but also, secondarily, completeness and informativeness…Gemini 1.5 models significantly and strongly outperform 1.0 Pro on this task.

You can see from Figure 18 above that Gemini 1.5 Pro and 1.5 Flash significantly outperformed the Gemini 1.0 Pro and Ultra models. Just as important, instances of “severe inaccuracies,” commonly referred to as hallucinations, declined significantly.

These data help illuminate one of the mysteries revolving around Gemini 1.5. Why did it arrive so quickly after the limited introduction of the 1.0 model family, and why has the Ultra model not been released? Google wanted to release Gemini before year-end, and any inclination to delay until 2024 was set aside after OpenAI had its management shake-up debacle in November 2023.

The 1.5 model release was likely about more than just hitting the million-token context window. It was the more accurate and reliable model that Google wanted to release as Gemini’s debut, but its hand was forced and it had to move more quickly.

Google’s Progress

Other additions to the updated research are worth reviewing. Improvements in tool use, STEM topics, translation, and video interpretation are among the new content that Google quietly inserted into the paper, which roughly doubled in length (from 77 to 153 pages) between late April and early May 2024.

Google has been widely criticized for its missteps and inadvertent role as a follower in the market. Some of those critiques were warranted, and some of the issues were self-inflicted. However, the issues may be overshadowing substantial progress on the technology innovation front. OpenAI and Anthropic have carved out technology leadership roles in the frontier AI foundation model segment. It looks like Google now has a seat at the table. More choices among leading frontier models will be better for users and incentivize the technology leaders to keep innovating.
