How Generative AI Testing Differs from Traditional Apps - Lessons from 15K Testers

Applause's Chris Sheehan discusses scale, diversity, UX, and variable outputs

When you come to testing traditional apps versus LLM apps or features embedded into your traditional app, there really are two main differences that you need to consider. The first is around the inputs and the outputs. With an LLM it's variable.

Bret and I may have the same intent, but the way that we put the prompts in are very different and the outputs we get are very different…With a traditional app, again, the way you do testing, you have [an] expected input and expected result. So, if I'm testing a mobile app I will have a certain set of steps on a journey and I will expect a certain result. If I don't get that it's actually failed.

The other area is in risk. Traditional apps definitely have risk … but with the LLM it depends on the use case and … your risk profile tends to be higher…What this means is that when you look at a test plan for a traditional app—so let's just say we are testing a mobile application—I may test that with a small team. I may only need a dozen people and what I'm trying to optimize is to make sure I test on all the devices and the operating systems that my end users are using. When it comes to LLMs, the test plan is different…

Your best chances of capturing those high impact risks is to use a large team. A large team could be 50 people, it could be 100 people. We run teams of thousands over several weeks.

Chris Sheehan, SVP of AI and strategic accounts at Applause, took the stage at the Synthedia 4 LLM Innovation Showcase to reveal for the first time what the company has learned from having 15,000 human testers use generative AI applications. Sheehan covers a lot of ground in his presentation and fireside chat, including:

The three areas where you need to focus generative AI app testing

Adoption patterns of generative AI apps

The importance of testing scale and diversity

The generative AI risk matrix for application providers

And quite a bit more…

Testing Generative AI Apps

Traditional app testing focuses on ensuring that expected inputs deliver expected outputs. This is about more than ensuring consistency. Variability is not part of the design or expected functionality for traditional apps. Variable performance is a bug. Testers identify these problems, and the software developers then provide a fix.

Generative AI apps expect variability in both inputs and outputs. It is a feature of the system or, at the very least, an expected app behavior. However, it leads to a lot more testing complexity.
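The contrast between the two testing styles can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the required-terms rule, and the word limit are hypothetical and do not come from Sheehan's presentation. Real evaluations of generative output typically rely on human judgment or richer rubrics.

```python
def exact_match(actual: str, expected: str) -> bool:
    """Traditional check: one expected result; anything else is a failure."""
    return actual == expected


def within_acceptable_range(output: str, required_terms: list[str], max_words: int) -> bool:
    """Variable-output check (hypothetical rubric): many different outputs can pass.

    An output is accepted if it mentions every required term and stays
    under a word limit. Both criteria are illustrative assumptions.
    """
    text = output.lower()
    has_terms = all(term.lower() in text for term in required_terms)
    return has_terms and len(output.split()) <= max_words


# Two differently worded answers to the same question (made-up examples).
answer_a = "Your refund will arrive in 5 business days."
answer_b = "Expect the refund within 5 business days of approval."
rubric = ["refund", "5 business days"]

print(exact_match(answer_a, answer_b))                   # the strings differ
print(within_acceptable_range(answer_a, rubric, 20))     # accepted by the rubric
print(within_acceptable_range(answer_b, rubric, 20))     # accepted by the rubric
```

Under the exact-match rule, one of the two answers would be logged as a bug. Under the range-based rule, both are acceptable, which is closer to how variable outputs have to be judged.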

If a traditional app has ten features, you may only need to test ten inputs and verify that the ten outputs are the expected results. By contrast, a generative AI app with ten features faces an effectively unlimited range of both inputs and outputs.

This means you must test at a larger scale to confirm that the application performs consistently, in a statistical sense, across a wide variety of inputs and outputs. You also need to evaluate those outputs to ensure that, despite variability, they fall within an acceptable range of accuracy and quality.
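One way to see why scale matters is to treat each tester judgment as a pass or fail and ask how confident you can be in the resulting pass rate. The sketch below uses the Wilson score interval, a standard statistical technique; it is not something described in the presentation, and the function names and the 80 percent quality bar are assumptions chosen for illustration.

```python
import math


def wilson_interval(passes: int, total: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a pass rate (z=1.96 is roughly 95% confidence)."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = passes / total
    denom = 1 + z**2 / total
    center = (p + z**2 / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z**2 / (4 * total**2)) / denom
    return center - half, center + half


def meets_quality_bar(judgments: list[bool], min_pass_rate: float) -> bool:
    """Accept only if the lower bound of the interval clears the bar."""
    low, _ = wilson_interval(sum(judgments), len(judgments))
    return low >= min_pass_rate


# Same observed 90% pass rate, very different sample sizes (made-up data).
small_run = [True] * 9 + [False] * 1
large_run = [True] * 90 + [False] * 10

print(meets_quality_bar(small_run, 0.80))  # too few samples to be confident
print(meets_quality_bar(large_run, 0.80))  # enough samples to clear the bar
```

Both runs show the same 90 percent pass rate, but only the larger one supports the claim that the true rate is above 80 percent. That is the statistical intuition behind testing with more people and more prompts.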

Beyond this, output variability makes it harder to assess what counts as acceptable accuracy and quality. You must also consider how different users gauge the appropriateness of the variable outputs. This means you need a diverse pool of testers to account for differences in user experience.
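A simple way to make tester diversity visible in the results is to break ratings out by tester segment rather than reporting one overall average. The following is a minimal sketch, assuming each rating is a pair of a segment label and a 1 to 5 score; the segment labels, the scale, and the 0.5-point gap threshold are all hypothetical.

```python
from collections import defaultdict
from statistics import mean


def ratings_by_segment(ratings: list[tuple[str, int]]) -> dict[str, float]:
    """Average rating per tester segment."""
    grouped: dict[str, list[int]] = defaultdict(list)
    for segment, score in ratings:
        grouped[segment].append(score)
    return {segment: mean(scores) for segment, scores in grouped.items()}


def lagging_segments(ratings: list[tuple[str, int]], max_gap: float = 0.5) -> list[str]:
    """Segments whose average falls more than max_gap below the overall average."""
    overall = mean(score for _, score in ratings)
    per_segment = ratings_by_segment(ratings)
    return sorted(s for s, avg in per_segment.items() if overall - avg > max_gap)


# Made-up ratings: (tester segment, appropriateness score from 1 to 5).
sample = [
    ("18-29", 5), ("18-29", 4),
    ("30-49", 4), ("30-49", 5),
    ("65+", 2), ("65+", 3),
]

print(ratings_by_segment(sample))
print(lagging_segments(sample))
```

In this made-up data, the overall average looks respectable, but one segment rates the outputs much lower than the rest. A tester pool without that segment would never surface the gap.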

Generative AI App Risks

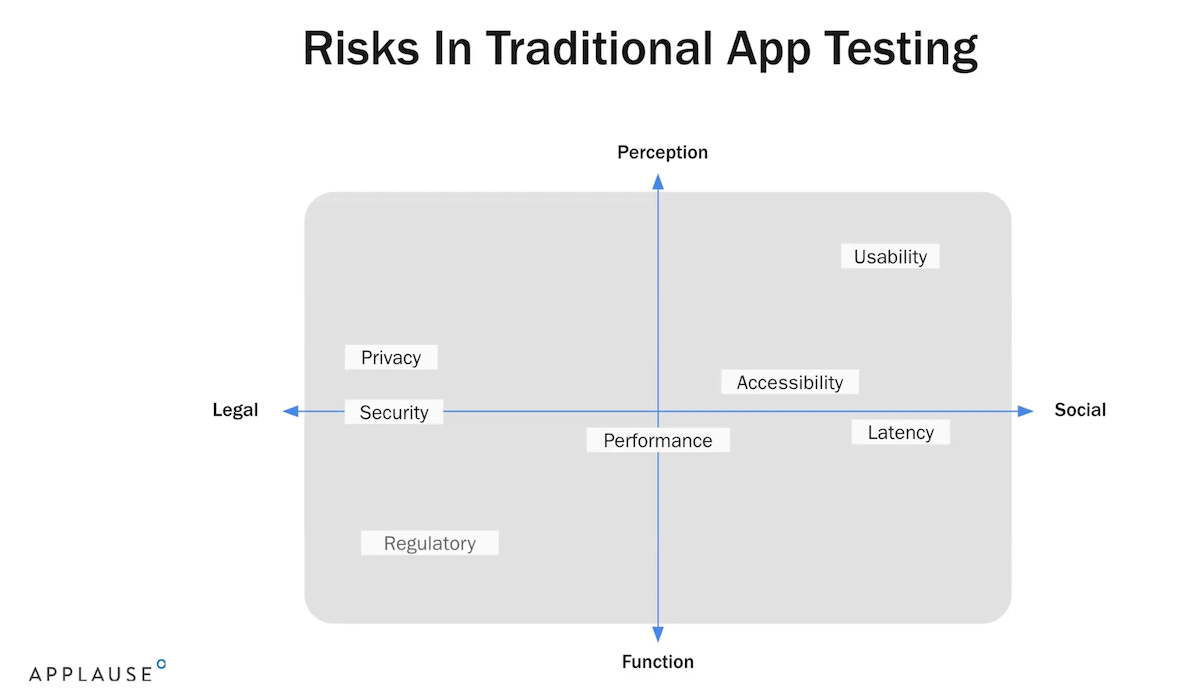

Sheehan also went into more depth about the broader set of risks associated with generative AI apps. Traditional apps face risks such as performance, usability, accessibility, and security. The range of risks associated with generative AI apps includes the potential for inaccuracy (due to hallucinations), higher latency in responses, copyright infringement, bias, toxicity, and novel cybersecurity threats.

These risks can also carry higher consequences for the app developer and the user. As a result, human testing is both more complex and more vital when introducing a generative AI app than when introducing a traditional programmatic app.

A Topic That Deserves More Attention

It is not often that testing turns out to be one of the more interesting elements of the application development process. When it comes to generative AI, testing is very interesting. It is only through repeated use of generative AI apps that you can really understand how they function. That has implications for app quality, user experience, and risk management.

I appreciated Chris’ presentation for shedding more light on these topics. An added benefit is that all of the testing data also reveals interesting insights into the black boxes that characterize generative AI applications and how users respond.
