Inflection AI Announces Inflection-2 LLM with Significantly Improved Performance

Is the company fighting for position as an OpenAI alternative or is it all about Pi?

Inflection AI, the maker of the personalized chatbot Pi, has announced Inflection-2, its latest large language model (LLM), which the company says is better than Inflection-1 and Google’s PaLM 2. Although the company did provide one comparison with GPT-4 and GPT-3.5 in the blog post announcing the new LLM, most of the data provided side-stepped a direct comparison with the OpenAI models.

The chart above originated with Inflection AI, and Synthedia overlaid the GPT-4 performance benchmarks from the GPT-Fathom research paper to offer additional context. Inflection-2 is approaching GPT-4 performance levels in a couple of tests, but it remains significantly behind in coding, math, and reasoning capabilities.

PaLM 2 has many hallmarks of a high-quality LLM, but most organizations prefer to see benchmark comparisons against GPT-4 or GPT-3.5 because those are the most widely used models. Comparisons to Meta’s Llama 2 are also popular, given it is the open-source model that has generated the most interest to date.

Thankfully, there are several comparisons to Llama 2, though the Inflection team did not report comparative results across all tests. This is surprising because, with Llama 2 being open-source, there is no reason not to run every test on it directly. Unlike with PaLM 2, engineers do not need to rely on previously reported data. They can run the tests themselves.

The Results

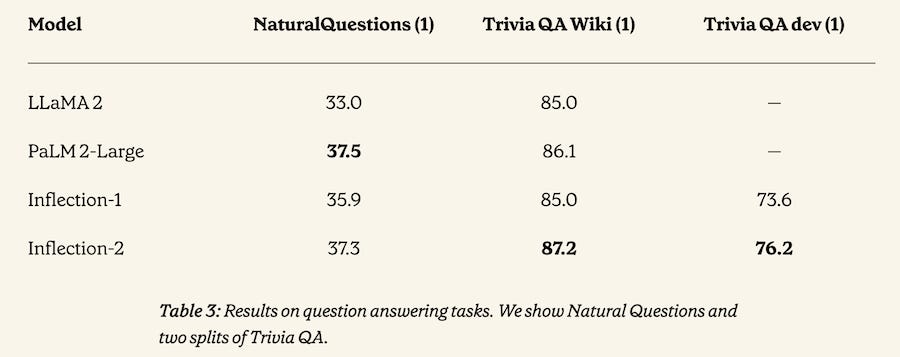

Inflection AI’s self-reported results show the Inflection-2 model essentially matching PaLM 2 performance on the MMLU, HellaSwag, ARC-E, ARC-C, PIQA (1-shot), LAMBADA, Natural Questions, and TriviaQA Wiki benchmarks. Inflection-2 was slightly better on some benchmarks, while PaLM 2 held a small edge on others. According to Inflection AI’s blog post:

Inflection-2 was trained on 5,000 NVIDIA H100 GPUs in fp8 mixed precision for ~10²⁵ FLOPs. This puts it into the same training compute class as Google’s flagship PaLM 2 Large model, which Inflection-2 outperforms on the majority of the standard AI performance benchmarks, including the well known MMLU, TriviaQA, HellaSwag & GSM8k.

Inflection’s data shows Inflection-2 with a small edge over Llama 2 on the HellaSwag and PIQA (0-shot) benchmarks and a significant lead on MMLU. The benchmarks reported here offer a mix of general knowledge, reasoning, common sense, math, science, and coding. It is unclear whether the published results cover all of the tests conducted or only a subset.

There are many benchmark tests available, and LLM developers often choose to report results for different ones. Unsurprisingly, developers rarely publish results showing that their model significantly underperforms its selected peer set.

The more interesting comparison may be between the Inflection-1 and Inflection-2 models because it indicates the progress of the company’s core product technology. While Inflection-2 showed small gains across most benchmark tests, it made significant gains in MMLU and several math and coding benchmarks.

Strategy of Alternatives

The choice to focus on the comparison with PaLM 2 suggests that Inflection wants to position itself as a reasonable OpenAI alternative. If you think PaLM 2 is the key alternative right now, and a safe choice given Google’s backing, then showing that comparison makes sense. Granted, the more prominent alternative to OpenAI’s LLMs is Anthropic, but it is mentioned only for the MMLU benchmark.

Despite my concerns about companies that publish only limited data for analysis, Inflection is only about six months old, and achieving near parity with Google’s current premier LLM is significant. Google Gemini may turn out to be a bigger challenge to match, but its launch is rumored to be delayed beyond the planned year-end date. Inflection is clearly making quick progress.

Pi

Most people know Inflection for its personalized assistant, Pi. The company said Inflection-2 will be coming to Pi soon and will enable new features. You should expect one of those features to be a coding assistant. Granted, this week’s announcement is about the underlying technology and the hope that it will make an impact on enterprise buyers.

Inflection AI launched Pi in May 2023 and then added an API offering for enterprises in June. That was followed by a $1.3 billion funding round. It is likely that half of that was used to acquire a 22,000-GPU cluster of NVIDIA H100s. Inflection is setting its operations up for scale, both for training new models and for running inference for customers.

Anthropic and Inflection both announced new models just before the U.S. Thanksgiving holiday, and AI21 Labs reported new funding. I suspect all of these announcements were planned for immediately after the holiday weekend, but the intense interest in OpenAI’s turmoil this past week prompted earlier announcements. This was particularly important because several enterprises contacted LLM providers to learn more about OpenAI alternatives in the wake of the management shake-up.
