Meta Launches New Open-Source Speech Recognition and Translation Model

SeamlessM4T provides a multimodal model with coverage of nearly 100 languages

Meta today announced a new speech recognition and translation model called SeamlessM4T. OpenAI’s Whisper speech recognition and translation model was released in September 2022 to strongly positive reviews. The reception was so positive that some companies began hosting the model and charging for API access. OpenAI then added its own pay-for-use Whisper API service earlier this year.

Meta seems to have an open-source foundation model to match everything in OpenAI’s and Google’s product portfolios. SeamlessM4T is its Whisper competitor. According to the announcement:

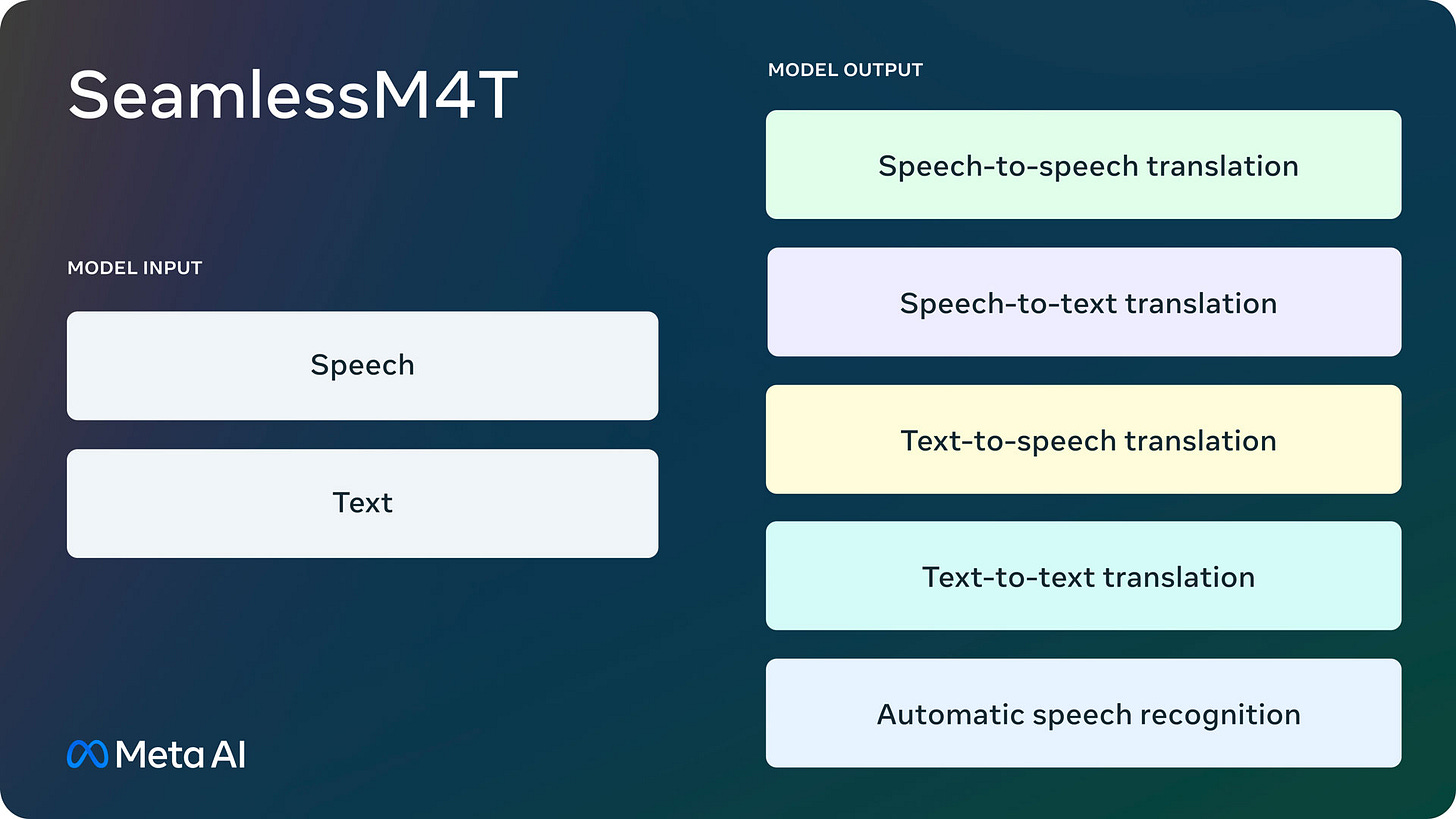

Today, we are introducing SeamlessM4T, a foundational multilingual and multitask model that seamlessly translates and transcribes across speech and text. SeamlessM4T supports:

Speech recognition for nearly 100 languages

Speech-to-text translation for nearly 100 input and output languages

Speech-to-speech translation, supporting nearly 100 input languages and 35 (+ English) output languages

Text-to-text translation for nearly 100 languages

Text-to-speech translation, supporting nearly 100 input languages and 35 (+ English) output languages

…

Building a universal language translator, like the fictional Babel Fish in The Hitchhiker’s Guide to the Galaxy, is challenging because existing speech-to-speech and speech-to-text systems only cover a small fraction of the world’s languages. SeamlessM4T represents a significant breakthrough in the field of speech-to-speech and speech-to-text by addressing the challenges of limited language coverage and a reliance on separate systems, which divide the task of speech-to-speech translation into multiple stages across subsystems. These systems can leverage large amounts of data and generally perform well for only one modality. Our challenge was to create a unified multilingual model that could do it all.

We believe the work we’re announcing today is a significant step forward in this journey. Our single model provides on-demand translations that enable people who speak different languages to communicate more effectively. We significantly improve performance for the low and mid-resource languages we support. These are languages that have smaller digital linguistic footprints. We also maintain strong performance on high-resource languages, such as English, Spanish, and German. SeamlessM4T implicitly recognizes the source languages, without the need for a separate language identification model.

Paco Guzman, a Meta research science manager, said, “The technology is multimodal. That means that it can understand language from speech or text and generate translations into either or both. It can also transcribe speech into text and all with a single model. What is very exciting is that it is also massively multilingual. Right now, it supports translation into nearly 100 languages for text, and 35 languages for speech.”

The new product landing page adds additional details around the motivation and capabilities of the new model:

Existing translation systems have two shortcomings: limited language coverage, creating barriers for multilingual communication, and the reliance on multiple models, often causing translation errors, delays, and deployment complexities. SeamlessM4T addresses these challenges with its greater language coverage, accuracy and all-in-one model capabilities. These advances enable more effortless communication between people of different linguistic backgrounds, and greater translation capabilities from a model that can be used and built upon with ease.

SeamlessM4T (Massive Multilingual Multimodal Machine Translation) is the first multimodal model representing a significant breakthrough in speech-to-speech and speech-to-text translation and transcription. Publicly-released under a CC BY-NC 4.0 license, the model supports nearly 100 languages for input (speech + text), 100 languages for text output and 35 languages (plus English) for speech output.

SeamlessM4T draws on the findings and capabilities from Meta’s No Language Left Behind (NLLB), Universal Speech Translator, and Massively Multilingual Speech advances, all from a single model.

Performance

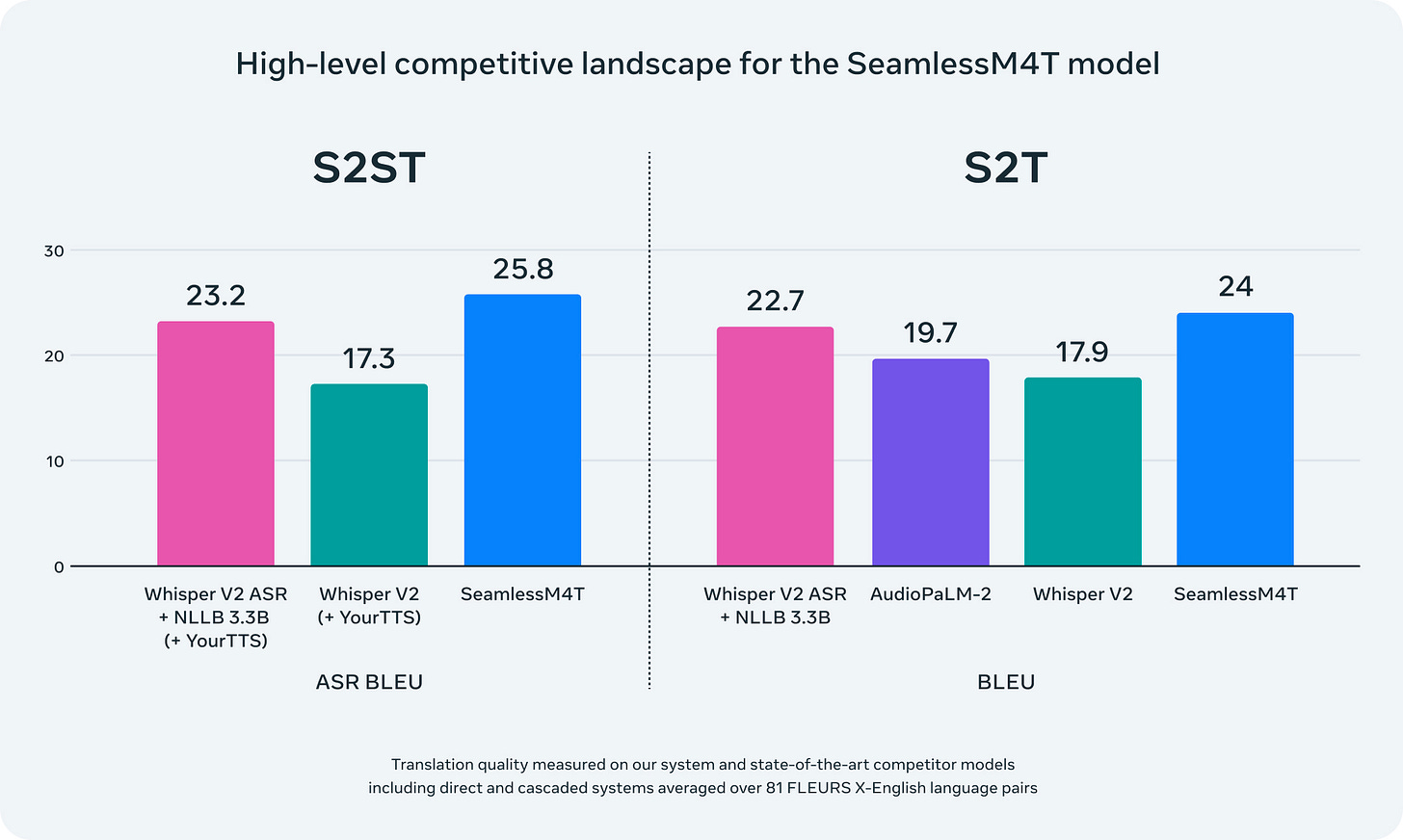

Meta shared internal benchmarks for speech recognition and translation that show SeamlessM4T scoring higher than OpenAI’s Whisper for speech-to-speech translation, and higher than both Whisper and Google’s AudioPaLM-2 for speech-to-text.

The company said it achieved these rankings using the 470,000-hour SeamlessAlign dataset. Meta also developed this dataset, and it is available for researchers to use.

Non-Commercial Use Only - For Now

While the license is free, it does not allow commercial use. Meta’s announcement clarifies that SeamlessM4T is for researchers and developers. However, you may recall that Meta’s original Llama large language model (LLM) also allowed only non-commercial use. The company later shifted to a permissive open-source commercial use license for the release of Llama 2. That suggests SeamlessM4T may add commercial use permissions in a future release.

Speech Recognition and Translation Rising

SeamlessM4T is impressive based on its description and claims. Along with Whisper and AudioPaLM, SeamlessM4T is transforming the existing speech recognition and translation markets. Open-source models generally reduce the overall cost of a project or capability. That alone creates a situation where lower costs make new use cases viable.

It also challenges existing speech recognition and translation tools and services. The competitive pressure of capable open-source models will make it harder for proprietary solution developers who must invest heavily to develop and maintain their solutions. Many also maintain a heavy budget for human transcription and translation.

Meta has been making generative AI news for years at this point, so there is reason to believe the claims. If SeamlessM4T is really better than Whisper and AudioPaLM, and it eventually has a commercial use license, it may permanently change the speech recognition and translation landscape.

You can also see how this could be beneficial for several Meta social channels such as Facebook, Instagram, and WhatsApp. These apps bring people together from all over the world around a person or topic. Most of these are English-only products for text or audio. Employing SeamlessM4T to immediately translate into nearly 100 languages for speech-to-text and 36 for speech-to-speech could make these social networks more engaging and more accessible to many users.
