Meta's Secret Weapon in the Generative AI Wars May Be Its Smart Glasses + Assistant

Meta Ray-Bans receive good reviews and integrate into daily life

Why has Meta invested in the Quest VR product? Once you get past what the company has said about the metaverse and the deeper idea about connecting people, you arrive at the essence of Meta’s business: attention.

Why has the company created the Meta Ray-Ban smart glasses? The earliest motivations may be traced back to fierce competition with Snapchat. Snap Spectacles were a hit with users before they were a bust. However, it was not hard to recognize that there was consumer interest. It is also easy to see that smart glasses can become a more focused gateway to digital experiences than smartphones. Could they become the next chokepoint of consumer attention?

Smart glasses become more valuable when powered by an always-available, voice-activated digital assistant. So, Meta AI adds to the value of the Meta Ray-Ban smart glasses while also providing a new distribution chokepoint for the company’s digital assistant ambitions.

Meta’s mission might be to connect people with each other. However, the business model supporting the mission is based on attention. More attention means more opportunities to serve ads and learn more about users, so the ads and services can become more relevant and better targeted.

Smart glasses and the Meta AI assistant can help funnel that attention in a way that goes beyond productivity use cases. It also goes beyond connecting people as a primary product attribute.

Attention is All Meta Needs

Meta is many things, but they all rely upon and seek to maximize attention. This is understandable. Meta’s apps have the largest user bases in the world and are constantly under assault from existing and new rivals. If users' attention is primarily trained on their favorite Meta app, they are less likely to try an alternative. A reduction in attention to Meta's apps gives competitors an opportunity to plant the seed of interest. So, while maximizing attention may deliver more opportunities for ad revenue, it also reduces the threat posed by competitors.

Meta acquired Instagram and WhatsApp because its goal is to be the first and most frequently used connection between people and digital experiences. Both of these apps were popular enough that they posed a risk to Facebook’s user attention dominance, so they were acquired.

Meta eventually added features to Instagram to drive more app usage and recently turned it into a TikTok clone because the ByteDance app was better at holding user attention. Meta could not acquire TikTok, so Instagram became its channel to mitigate the risk of attention erosion. The current Instagram strategy is built around attention consolidation.

WhatsApp was driving more connectivity between people and had become a place where consumer attention was high. It was a different type of attention, but it represented a robust messaging channel, particularly for users outside of the United States. Think of it as attention expansion.

This framing is helpful in understanding the company’s launch of Meta AI. Generative AI-enabled assistants have the potential to become a consumer’s first stop for digital experiences. The de facto digital landing page for consumers will have a significant influence in directing consumer attention. Meta doesn’t want that influence to end up with OpenAI, Google, X, or another generative innovator.

Bringing Digital to the Physical World

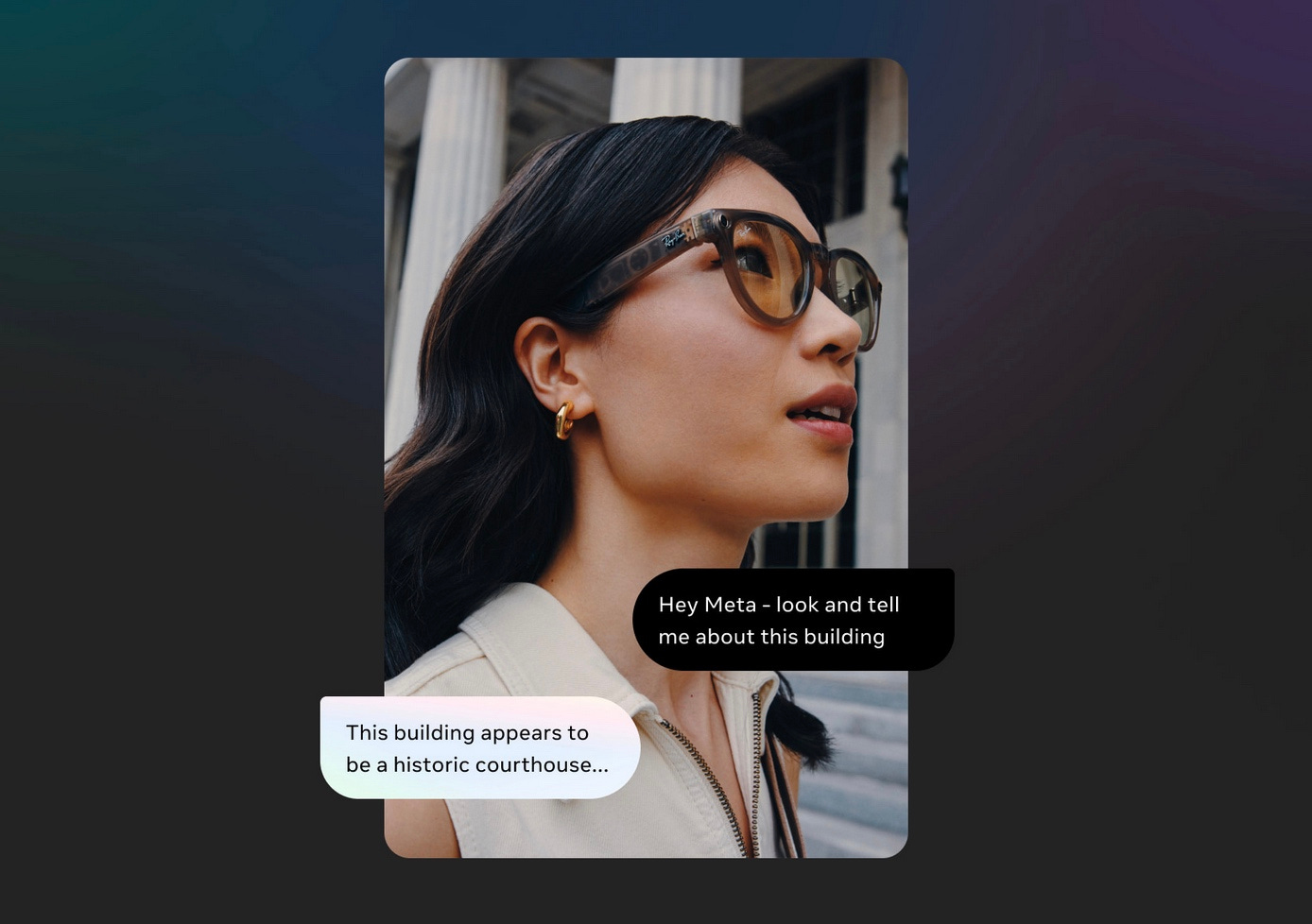

Meta’s announcement of its most recent Ray-Ban upgrades ran the headline, “Smarter with Meta AI. Use the power of your voice with Meta AI to get information and control features completely hands-free.” The AI assistant angle is second only to the video and image capture features in the promotional material. The upgraded Llama 3 models will make this capability a more compelling feature.

Another key feature upgrade of the latest release is the general availability of multimodal AI identification of objects and places in the physical world. Like Google Lens, Meta Ray-Bans will enable you to ask for more information about what is in front of you. Meta’s smart glasses don’t yet enable users to see information on a projected display. However, Meta is adding augmented reality features by combining AI vision and language capabilities.

Smartphones are the key devices that connect consumers to digital services wherever they are. They also took the first step in integrating digital and physical experiences. However, that integration is a bit clumsy and often inconvenient. Hands-free access via a camera on your face is more seamless and convenient. Convenience is likely to drive more digital interactions during daily life. It is also likely to provide a lot more contextual data for Meta, enabling further personalization of the user experience.

Before that comes to pass, Meta needs consumers to try the product and like it. So far, it seems to be going well. Several recent tech reviews express a favorable view of what Meta has delivered and where it will likely progress.

It’s a low bar so far, but Meta’s Ray-Ban smart glasses are proving to be the best implementation of wearable AI out there. -The Verge

How Meta Ray Ban Smart Glasses Displaced My Smartphone On Opening Day. -Forbes

Smart glasses may never replace the smartphone entirely. However, they are positioned to be adopted into the personal area network that today is composed of smartphones, smartwatches, and earbuds. When well-designed, smart glasses are neither a new screen nor a burden to wear. They also add value beyond what the existing devices provide.

You might think that Apple, Bose, or Google are more likely to make an impact in the smart glasses market. However, Apple has focused on VR goggles, which few people want; Bose has made a half-hearted attempt at semi-smart glasses; and Google invested heavily in the segment, but it was too early. Meta seems to have struck a balance between meaningful augmentation of the user experience and avoiding technology features that are not ready for mass consumer use.

The emergence of high-quality AI assistant capabilities enabled by large language models has made this path far easier. Meta AI plus Meta Ray-Bans are an even more natural match than attaching an assistant to existing mobile apps. It is unclear whether Meta can transform smart glasses into a mass-market device. However, it has created a scenario to start driving demand and benefit significantly if the category takes off.

Disintermediating Apple and Google

There was a time when Meta considered launching its own smartphone. Meta CEO Mark Zuckerberg eventually killed that project and focused the company on delivering the most popular mobile apps. Facebook’s outsized success would have been unlikely had it remained a desktop app. At the same time, smartphones have become more valuable because of social media apps.

If you ask someone who won the mobile wars, you will likely hear Apple and Google as the reflexive response. Meta is the third winner. It would never have reached three billion daily active users without the rise of smartphones, and it has capitalized on availability across platforms. Apple serves its mobile users mostly through the iPhone. Google serves its mobile users through Android. Facebook serves everyone’s users.

However, Apple iOS and Google Android are chokepoints for digital services. They can (and do) restrict what mobile app makers are permitted to do, what they know about users, and how they can monetize. Smart glasses may enable companies to disintermediate Apple and Google and escape their mobile stranglehold. Meta wants to be that disrupter. It also wants to avoid being disintermediated by OpenAI, Microsoft, and Google.

Meta AI is the company’s cross-platform strategy. The next billion-user app may be a personalized digital assistant. Given Meta’s knowledge of user interests and behavior, it will have a head start in personalization. Meta Ray-Ban smart glasses are the company’s device platform play. Quest devices may lead to a metaverse bonanza at some point, but the attention opportunity is far higher for smart glasses.
