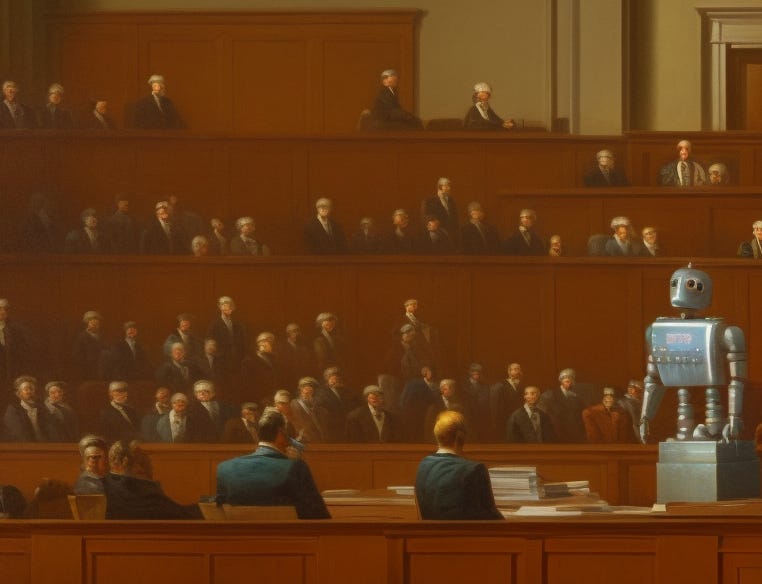

Midjourney, Stability AI, and DeviantArt Named in New Class Action Lawsuit

Law firms say they represent artists in claims of copyright infringement and unfair competition

The Joseph Saveri Law Firm, Matthew Butterick, and Lockridge Grindal Nauen P.L.L.P. filed a class action lawsuit last week against Stability AI, DeviantArt, and Midjourney. The suit was filed in the U.S. District Court for the Northern District of California on behalf of a class of plaintiffs that lawyer Joseph Saveri identifies as artists. A media release announcing the suit stated:

“The lawsuit alleges direct copyright infringement, vicarious copyright infringement related to forgeries, violations of the Digital Millennium Copyright Act (DMCA), violation of class members' rights of publicity, breach of contract related to the DeviantArt Terms of Service, and various violations of California's unfair competition laws.”

Butterick Strikes Again

You might recognize the name Matthew Butterick. He is also leading a class action lawsuit against OpenAI and Microsoft over GitHub Copilot. He contends that state and local laws, federal copyright law, and the terms of use of various open source repositories restrict the commercial use of that code in OpenAI’s Codex. The new lawsuit against some of the leading text-to-image generators rests on similar premises.

The legitimacy of these suits will be decided in the courts. Butterick contends that the laws are very clear and that these tools violate existing statutes. As the lawyer leading a class action suit, he also has an interest in this being true.

For this suit, Butterick created a new website focused on the Stable Diffusion litigation. The website and the suit list the artists Sarah Andersen, Kelly McKernan, and Karla Ortiz as plaintiffs. Each plaintiff believes their artwork was included in the LAION-5B dataset and is being unlawfully used to create derivative works. Section I.4 of the suit alleges that Stable Diffusion is “merely a complex collage tool.”

That is a savvy legal argument. Collages that include copyrighted material are sometimes deemed unlawful. In other instances, however, they are deemed lawful under the “fair use” doctrine.

Kathryn Dalli, a partner with Twomey, Latham, Shea, Kelley, Dubin, and Quartararo, commented in a 2018 blog post:

“There are four factors that the courts consider in determining whether or not use of previously copyrighted material constitutes a fair use. Without getting too bogged down in legal analysis, the bottom line is that the new work must be transformative, any copyrighted work used should be proportional to the whole and not be too substantial in terms of the overall work, and the artist must have a genuine creative reason for using the image or other work, rather than using the material to get attention or avoid coming up with something fresh.”

Butterick appears ready to argue against a “fair use” defense, since the doctrine is addressed on his website for the lawsuit. He will likely argue that these images are not “transformative” because their creators put in minimal effort, and that an AI artist cannot have a “genuine creative reason for using the image” because they could not know what image the model would produce.

Lawyers for the defendants will probably start by arguing that fair use doesn’t even apply, because the models generate novel images rather than reproducing or directly using original artworks. Instead, the models are trained much as a human artist is trained, by seeing the works of other artists. If that argument fails, they will fall back on fair use.

It will be interesting to follow the legal arguments. Keep in mind that the laws are somewhat different in the EU and in some EU member nations, so the same set of arguments may play out in different venues and receive different verdicts.

Stability AI Moves Toward Artist Opt-out

Stability AI announced in December that it was working with haveIbeentrained.com to let artists opt out of the Stable Diffusion 3 training dataset. If artists fill out a form identifying their images, Stability AI will tag those images in the LAION database and not use them in future model training.

Is Stability AI doing this to avoid litigation or to honor the wishes of artists? Well, this step was taken before the lawsuit, and Stability AI CEO Emad Mostaque suggested in a tweet that there is “no legal reason for this” when referring to the opt-out program.

DeviantArt Ensnared Again!

It was interesting to see DeviantArt named in the lawsuit. The company famously banned AI models from using images on its website in late 2022. Although the website hosts over 500 million images, researchers suggest that only about 0.06% of the LAION database consists of images hosted by DeviantArt. Pinterest is far better represented, at 8.5% of images.

DeviantArt’s move came only after community feedback about its own text-to-image AI model, DreamUp, which is also based on Stable Diffusion. Not all implementations of Stable Diffusion are identical, but it is clear that DreamUp’s current model was trained on some images hosted on DeviantArt. That may violate the company’s own terms of service. Its copyright section states:

4. Copyright

DeviantArt is, unless otherwise stated, the owner of all copyright and data rights in the Service and its contents. Individuals who have posted works to DeviantArt are either the copyright owners of the component parts of that work or are posting the work under license from a copyright owner or his or her agent or otherwise as permitted by law. You may not reproduce, distribute, publicly display or perform, or prepare derivative works based on any of the Content including any such works without the express, written consent of DeviantArt or the appropriate owner of copyright in such works. DeviantArt does not claim ownership rights in your works or other materials posted by you to DeviantArt (Your Content). You agree not to distribute any part of the Service other than Your Content in any medium other than as permitted in these Terms of Service or by use of functions on the Service provided by us. You agree not to alter or modify any part of the Service unless expressly permitted to do so by us or by use of functions on the Service provided by us.

So, is the company’s claim that the LAION dataset and the Stable Diffusion model used the images without permission defensible if it is also using a version of that model? A November blog post by DeviantArt said:

AI generators were trained using datasets collected from the open web. This includes content from creator platforms like DeviantArt, Pinterest, Twitter, and more. This was done without DeviantArt's permission and without your permission.

DeviantArt was the first platform we know of to let artists opt in to or opt out of AI training datasets. The company acknowledged that it could not actually enforce these choices, but said it would label the artwork so that companies scraping the site for images could honor the artists’ intentions.

What’s Next?

No one knows how this will play out. However, if the plaintiffs succeed, the verdict will set a new precedent that could send shockwaves through the generative AI industry. It is sure to spill over into questions about AI models that generate text and video as well.

One thing we can be confident about is that there will be more lawsuits. Some will be based on principle, while others will be attempted cash grabs. Either way, we are about to see (well, over the next several years) how existing laws will be applied to generative AI.

Litigation is the biggest threat to the industry, and some very large companies, from Google and Microsoft to Meta and Nvidia, also have an interest in how this plays out. Expect each of them to join a lawsuit at some point or find a way to have a voice at the table. I suspect they will not want to be mere spectators if they see a way to steer the legal action in a direction that protects their portfolios.

We may also see a DMCA-style law proposed to protect the industry from legal action while it is in its infancy. The probability of something like this becoming law seems remote at the moment. However, almost anything can be accomplished if enough lobbying effort (i.e., cash) is put behind it.