OpenAI Introduces Generative 3D Model Point-E

A whole new set of use cases

OpenAI has a new solution for creating 3D objects from natural language prompts. On December 16th, OpenAI published a research paper titled “Point-E: A System for Generating 3D Point Clouds from Complex Prompts.”

The paper describes the model, surveys related research on 3D generation models, and reviews results from the system. Yesterday, OpenAI added a GitHub repository that lets developers deploy the model themselves and try out Point-E.

Andrew Herndon and I discussed this on YouTube earlier today.

Point-E vs DALL-E

This is not DALL-E. DALL-E can create images that look 3D, but they are still 2D images that use shading and color to suggest depth. You cannot rotate an element of a DALL-E image 45 degrees and view it from a different angle as you would a three-dimensional object. Point-E is similar in one respect, however: it is driven by natural language prompts.

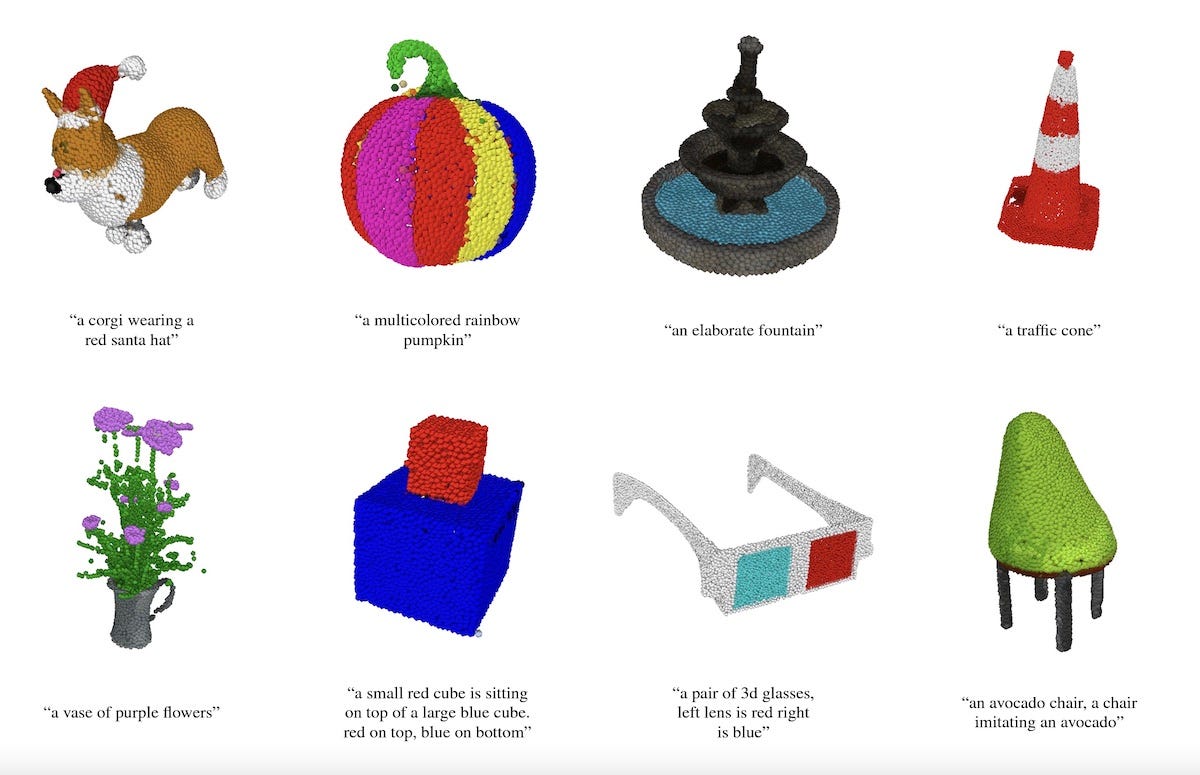

Point-E output looks a lot more like what you would expect to see in a CAD model, but at far lower resolution. This is one of the limitations OpenAI points out in its paper:

While our method produces colored three-dimensional shapes, it does so at a relatively low resolution in a 3D format (point clouds) that does not capture fine-grained shape or texture. Extending this method to produce high-quality 3D representations such as meshes or NeRFs could allow the model’s outputs to be used for a variety of applications.

TechCrunch’s Kyle Wiggers put it this way:

Point-E doesn’t create 3D objects in the traditional sense. Rather, it generates point clouds, or discrete sets of data points in space that represent a 3D shape — hence the cheeky abbreviation. (The “E” in Point-E is short for “efficiency,” because it’s ostensibly faster than previous 3D object generation approaches.) Point clouds are easier to synthesize from a computational standpoint, but they don’t capture an object’s fine-grained shape or texture — a key limitation of Point-E currently.

Synthedia’s Andrew Herndon likened the output to Dippin’ Dots art or a 3D dot matrix printer. At the same time, the resolution is not much lower than what you might find in many metaverse virtual worlds today.
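To make the point cloud idea concrete, here is a minimal sketch in plain Python. It is not code from OpenAI's repository; the `Point` layout (position plus RGB color), the `rotate_about_y` helper, and the three-point toy cloud are all assumptions made for illustration. It shows the property a 2D image lacks: because each point has real coordinates, the whole shape can be turned 45 degrees and viewed from a new angle.

```python
import math
from typing import List, Tuple

# Assumed layout for one point: position (x, y, z) plus color (r, g, b).
Point = Tuple[float, float, float, float, float, float]


def rotate_about_y(cloud: List[Point], degrees: float) -> List[Point]:
    """Rotate every point about the vertical (y) axis; colors are unchanged."""
    theta = math.radians(degrees)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    rotated = []
    for x, y, z, r, g, b in cloud:
        new_x = cos_t * x + sin_t * z
        new_z = -sin_t * x + cos_t * z
        rotated.append((new_x, y, new_z, r, g, b))
    return rotated


# A toy "cloud" of three red points; a generated object would hold far more.
toy_cloud: List[Point] = [
    (1.0, 0.0, 0.0, 1.0, 0.0, 0.0),
    (0.0, 1.0, 0.0, 1.0, 0.0, 0.0),
    (0.0, 0.0, 1.0, 1.0, 0.0, 0.0),
]

# View the same object from a viewpoint turned 45 degrees.
turned = rotate_about_y(toy_cloud, 45.0)
```

Nothing here describes a surface, which is why the output reads as colored dots rather than a solid object.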

Point-E Use Cases

While the current 3D renders are fairly low resolution and sometimes incoherent, you can imagine several use cases for this even today. It could be used to create 3D models for 3D printers, to add objects to metaverse virtual worlds, or even for product or architectural mock-ups.

If Point-E improves at even half the rate we are seeing for 2D image diffusion models, it will be useful very soon. Keep an eye out for AI 3D generation features in voxel-based sandbox games in 2023. I would not be surprised to see this show up in Minecraft soon. For most voxel games, the resolution of Point-E should already be sufficient.
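As a rough illustration of why a point cloud could map onto a voxel world, the sketch below snaps points to a block grid. It is only an assumption about how such a feature might work, not something described by OpenAI or any game studio; the `voxelize` helper, the `cell_size` parameter, and the sample points are hypothetical.

```python
import math
from typing import Iterable, Set, Tuple

Voxel = Tuple[int, int, int]


def voxelize(points: Iterable[Tuple[float, float, float]], cell_size: float) -> Set[Voxel]:
    """Snap each (x, y, z) point to the integer grid cell that contains it."""
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")
    return {
        (
            math.floor(x / cell_size),
            math.floor(y / cell_size),
            math.floor(z / cell_size),
        )
        for x, y, z in points
    }


# Hypothetical sample positions; colors are omitted to keep the sketch short.
sample_points = [(0.1, 0.1, 0.1), (0.2, 0.3, 0.4), (1.5, 0.0, -0.2)]

# The first two points fall in the same cell, so three points yield two blocks.
blocks = voxelize(sample_points, cell_size=0.5)
```

Because many points collapse into one block, a coarse cloud loses little when converted this way, which is the intuition behind the claim that the resolution may already be enough for voxel games.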

Comparisons to Google DreamFusion

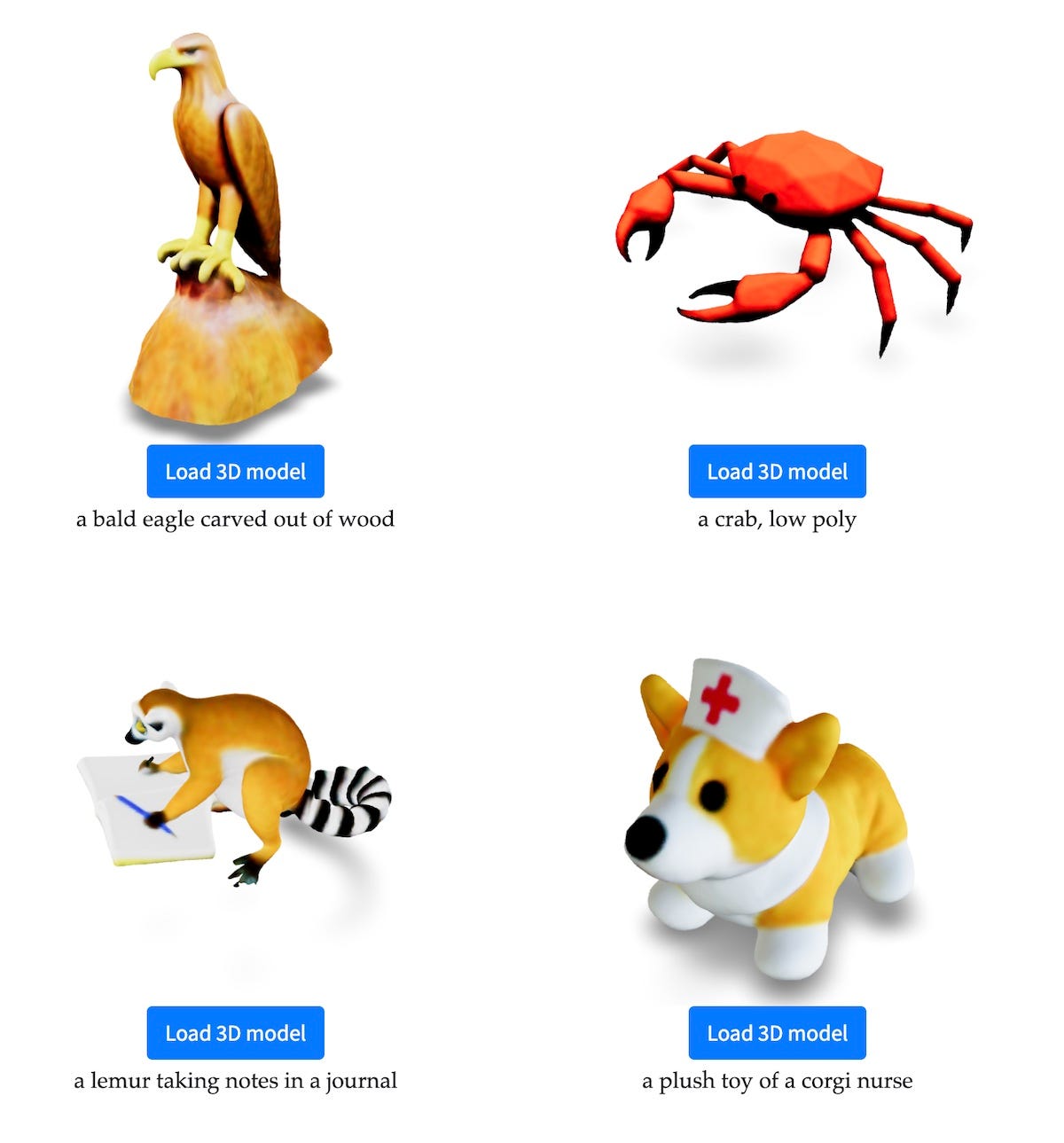

Point-E is based on OpenAI’s CLIP model, first introduced in early 2021. Google created a CLIP-based 3D model, DreamFields, and published a paper about it in late 2021. In September, Google revealed DreamFusion in another paper. It employs a 3D scene parameterization described as similar to Neural Radiance Fields (NeRF). The process stitches together a series of 2D images from different angles to render a 3D object.

DreamFusion’s resolution is definitely higher than the examples we have seen so far from Point-E. However, Nvidia AI scientist Jim Fan pointed out on Twitter that Point-E is much faster. He suggests it is as much as 600 times faster at rendering an image. That rendering time might be better aligned with production use.

Clash of the Generative AI Titans

It will be interesting to see how Google goes about opening up its generative AI solutions. LaMDA appears to be the closest competitor in terms of quality to OpenAI’s GPT-3 in the large language model (LLM) category. Google’s Imagen text-to-image and text-to-video models may be ahead of OpenAI’s DALL-E. Both companies have now also demonstrated 3D generation.

There are many other competent players in the 2D image generation segment and a couple of contenders in LLMs. What is notable is that OpenAI and Google are the companies with announced full portfolios across the text-to-x categories. It is not yet clear whether an integrated portfolio across these domains will bring added benefit, but it is conceivable.

The key difference between the two companies today is that OpenAI is open for business and Google is open for research. That has enabled OpenAI to grab both mindshare and market share ahead of the tech giant. Point-E is another example of OpenAI pushing the boundaries not only of generative AI innovation but also of commercialization. It looks like 2023 will be the year Google follows the commercialization path.