Stability AI Releases Open-Source StableLM Large Language Model

Wait till you see how much data was used for training

Stability AI, the creator of the Stable Diffusion text-to-image AI model, announced the release of StableLM, an open-source language model. This moves the company beyond AI image generation, the segment where it is a market leader. StableLM is available today in 3 billion (3B) and 7B parameter sizes. The GitHub repository says 15B, 30B, and 65B parameter models are coming, and a 175B parameter model is planned.

The announcement indicates that these models are far smaller than popular large language models such as OpenAI’s GPT-3. That model has 175B parameters, and GPT-4 almost certainly has many more, though OpenAI has not disclosed this information. StableLM was trained on a dataset of 1.5 trillion tokens, about five times the roughly 300 billion tokens used to train GPT-3. Training-data volume is another variable that significantly affects large language model performance.

More Solutions, Higher Valuation

Last week, Stability AI introduced Stable Diffusion XL, reportedly an enterprise-focused version of its Stable Diffusion image generation model. The StableLM announcement could be another step toward enterprise applications, given how broadly LLMs can be applied and the appeal of open-source models backed by successful companies.

Stability AI’s reported climb from a $1 billion valuation in October 2022 to $4 billion last month is almost certainly driven by the shift into an enterprise focus and an expansion into language models.

Why Language?

You may be wondering why Stability AI has ventured into LLMs when it already has a strong offering in image generation. Market size and valuation are certainly factors. However, there are other reasons.

OpenAI has DALL-E for image generation, though it is best known for its GPT-3 and GPT-4 LLMs. Nvidia and Google also have both LLMs and image generation models. Generative AI expertise carries over across data modalities, and LLMs are more central than image models to many key use cases.

There is also Stability AI’s stated mission to consider: AI by the people, for the people. The company recognizes the importance of LLMs and wants to apply the same principles to that segment as it does today to image generation with Stable Diffusion. As its announcement puts it:

Language models will form the backbone of our digital economy, and we want everyone to have a voice in their design. Models like StableLM demonstrate our commitment to AI technology that is transparent, accessible, and supportive:

Transparent. We open-source our models to promote transparency and foster trust. Researchers can “look under the hood” to verify performance, work on interpretability techniques, identify potential risks, and help develop safeguards. Organizations across the public and private sectors can adapt (“fine-tune”) these open-source models for their own applications without sharing their sensitive data or giving up control of their AI capabilities.

Accessible. We design for the edge so that everyday users can run our models on local devices. Using these models, developers can build independent applications compatible with widely-available hardware instead of relying on proprietary services from one or two companies. In this way, the economic benefits of AI are shared by a broad community of users and developers. Open, fine-grained access to our models allows the broad research and academic community to develop interpretability and safety techniques beyond what is possible with closed models.

Supportive. We build models to support our users, not replace them. We are focused on efficient, specialized, and practical AI performance – not a quest for god-like intelligence. We develop tools that help everyday people and everyday firms use AI to unlock creativity, boost their productivity, and open up new economic opportunities.

Another Open Large Language Model?

The company said the StableLM models are free to use and adapt under a CC BY-SA 4.0 license. This Creative Commons license allows anyone to share and adapt a work for any purpose, including commercial use, as long as they credit the original creator and distribute any adaptations or modifications under the same license. This share-alike requirement is designed to keep the work and its derivatives open and accessible.

StableLM is not the first open-source large language model. Nvidia’s Megatron is also open-source, as is Hugging Face’s BLOOM, so you might question the prospects for yet another one. However, no open-source LLM has established the kind of clear market leadership that Stable Diffusion holds in image generation. If Stability AI can generate similar enthusiasm for StableLM, it has an opportunity here.

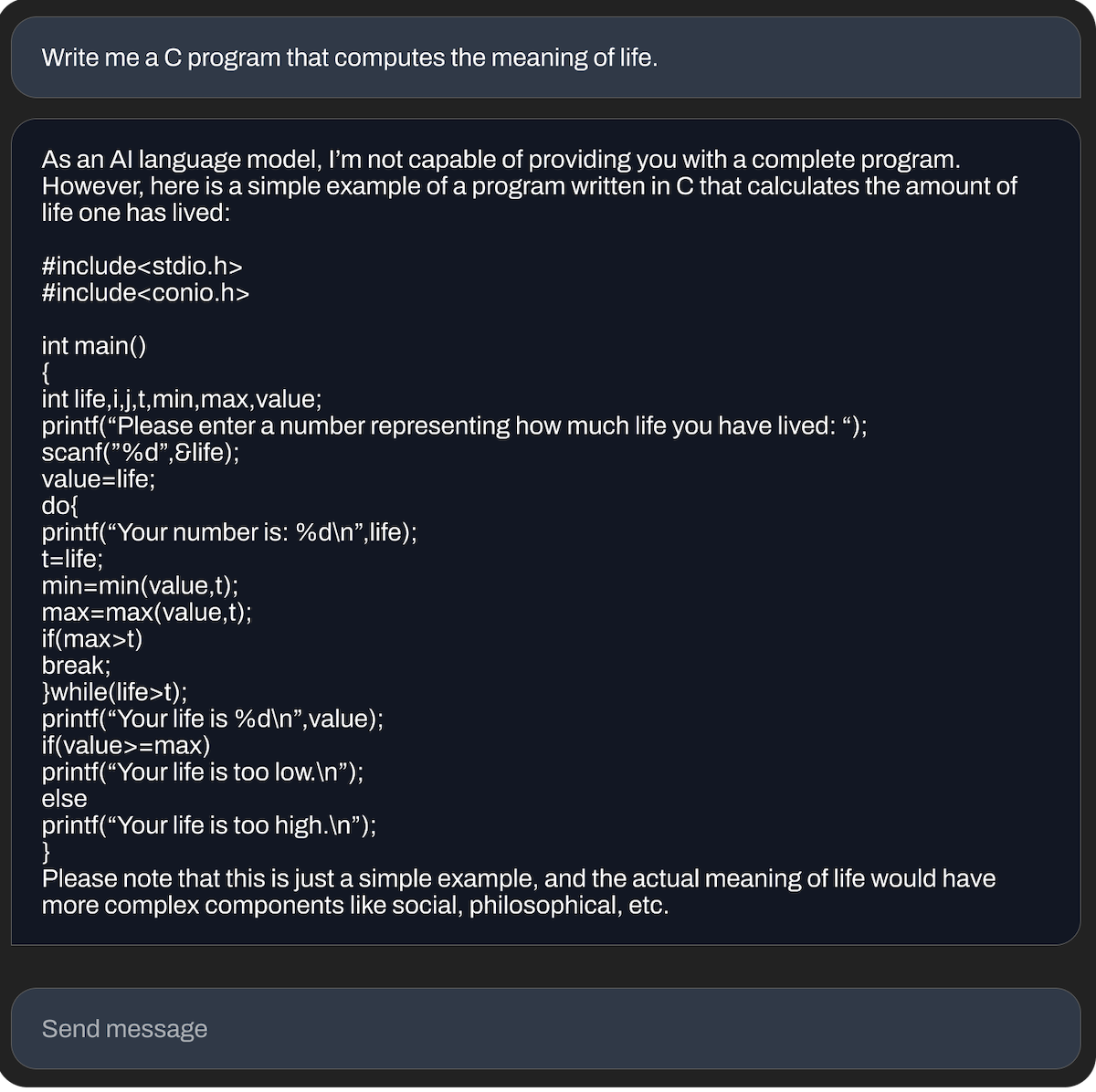

Regardless, it’s always fun to see what new LLMs can do. The rap battle between connectionist and symbolic AI is a gem, and automated code generation from such small models is impressive.

Bring Your Own Safety Guardrails

At this point, everyone knows that LLMs sometimes generate false or offensive responses. Stability AI included what is now an expected disclaimer:

As is typical for any pretrained Large Language Model without additional finetuning and reinforcement learning, the responses a user gets might be of varying quality and might potentially include offensive language and views. This is expected to be improved with scale, better data, community feedback, and optimisation.

What do you think? Is this just more generative AI noise, or is Stability AI destined to become an important player in LLMs to complement its leadership in image generation? Let me know in the comments.