The Most Interesting Analysis of the Generative AI Market to Date Has Arrived

a16z's thesis is worth digging into

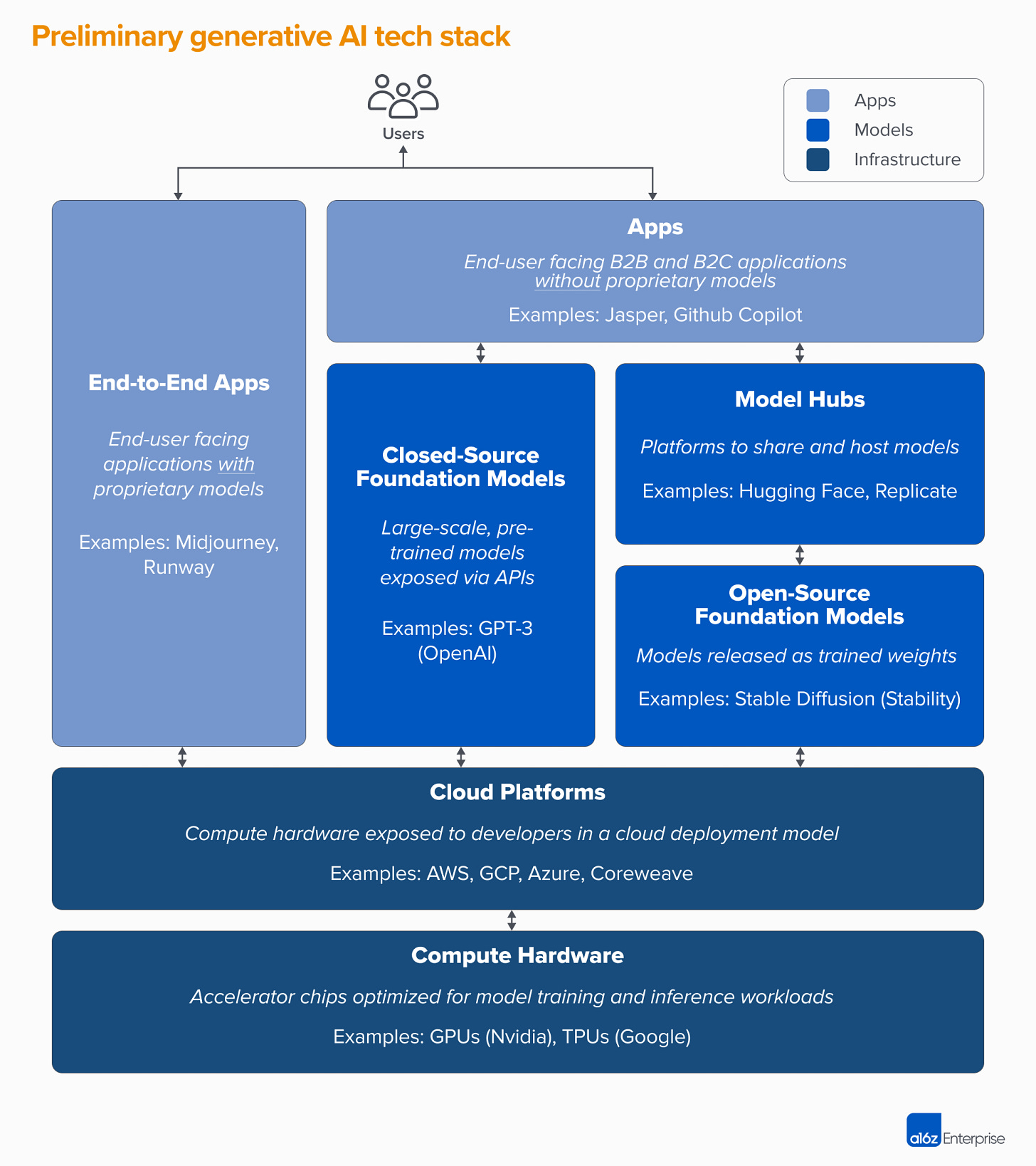

The technology stack architecture from Andreessen Horowitz (a16z) is very useful. It will get updated over time, but it shows, in a way that is simple to understand, that there are many moving parts. This industry isn’t just about OpenAI and Stable Diffusion.

Generative AI and the broader synthetic media markets are getting attention right now at the application layer and the AI model layer. However, there is a lot more going on, which this article points out.

The a16z article is around 3,000 words and, given the depth of the analysis, will probably take close to 15 minutes to read in its entirety. I recommend you do that at some point, but in case you need a quick synopsis, I took the liberty of using Wordtune Read, the app from AI21 Labs, to summarize it for you. Here it is in fewer than 1,000 words, with a little added commentary from me at the end.

***courtesy of Wordtune Read with some minor editing***

Who Owns the Generative AI Platform?

by Matt Bornstein, Guido Appenzeller, and Martin Casado of a16z

We're starting to see the very early stages of a tech stack emerge in generative artificial intelligence (AI), and several applications have reached $100 million in annualized revenue less than a year after launch.

Over the last year, we've met with dozens of startup founders and operators in large companies who deal directly with generative AI. We've observed that infrastructure vendors are likely the biggest winners in this market so far, capturing the majority of dollars flowing through the stack.

High-level tech stack: Infrastructure, models, and apps

Three layers have emerged:

Applications that integrate generative AI models into a user-facing product

Models that power AI products

Infrastructure vendors that run training and inference workloads

The first wave of generative AI apps is starting to reach scale but struggles with retention and differentiation.

The conventional wisdom is that to build a large, independent company, you must own the end customer. In generative AI, however, it is not yet clear that the biggest companies will be end-user applications, or how app companies will drive network effects, hold onto data, or build increasingly complex workflows.

In generative AI, gross margins range from 50% to 60%, and top-of-funnel growth has been amazing, but it's unclear whether current customer acquisition strategies will scale. Additionally, many apps are relatively undifferentiated, and there's a strong argument to be made that vertically integrated apps have an advantage in driving differentiation.

AI models can be consumed as a service, which allows app developers to iterate quickly with a small team and swap model providers as technology advances. Generative AI products can take a number of different forms, including desktop apps, mobile apps, Figma/Photoshop plugins, Chrome extensions, even Discord bots.

Graduation risk [for model builders]. Relying on model providers is a great way for app companies to get started, and even to grow their businesses. But there’s incentive for them to build and/or host their own models once they reach scale. And many model providers have highly skewed customer distributions, with a few apps representing the majority of revenue. What happens if/when these customers switch to in-house AI development?

Model providers invented generative AI, but haven’t reached large commercial scale

The brilliant research and engineering work done at places like Google, OpenAI, and Stability has led to the mind-blowing capabilities of current large language models (LLMs) and image-generation models. Yet the revenue associated with these companies is still relatively small compared to the usage and buzz.

Open source models act as a countervailing force: they can be hosted by anyone, and closed source models may find it hard to compete with them.

There's a common belief that AI models will converge in performance over time, but conversations with app developers make it clear that this hasn't happened yet. Many model providers have highly skewed customer distributions, and some have incorporated the public good explicitly into their mission.

Infrastructure vendors touch everything, and reap the rewards

Nearly everything in generative AI passes through a cloud-hosted GPU at some point. As a result, a lot of the money in the generative AI market ultimately flows through to infrastructure companies. To put some very rough numbers around it: We estimate that, on average, app companies spend around 20-40% of revenue on inference and per-customer fine-tuning. This is typically paid either directly to cloud providers for compute instances or to third-party model providers — who, in turn, spend about half their revenue on cloud infrastructure. So, it’s reasonable to guess that 10-20% of total revenue in generative AI today goes to cloud providers.
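The rough arithmetic behind that 10-20% guess can be sketched as follows. This is a minimal illustration, not a16z's own model: the function name and the `via_provider_fraction` parameter are hypothetical, and the default assumes all compute spend is routed through model providers, which is the case that reproduces the quoted range.

```python
def cloud_share_of_revenue(app_compute_share,
                           provider_cloud_share=0.5,
                           via_provider_fraction=1.0):
    """Estimate the fraction of app revenue that ends up with cloud providers.

    app_compute_share: share of app revenue spent on inference and
        per-customer fine-tuning (the article estimates 20-40%).
    provider_cloud_share: share of a model provider's revenue spent on
        cloud infrastructure (the article says about half).
    via_provider_fraction: hypothetical share of that compute spend paid to
        model providers rather than directly to clouds (an assumption).
    """
    # Money paid straight to cloud providers for compute instances.
    direct = app_compute_share * (1.0 - via_provider_fraction)
    # Money paid to model providers, of which only part reaches the clouds.
    indirect = app_compute_share * via_provider_fraction * provider_cloud_share
    return direct + indirect


low = cloud_share_of_revenue(0.20)
high = cloud_share_of_revenue(0.40)
print(f"cloud share of revenue: {low:.0%} to {high:.0%}")
# prints "cloud share of revenue: 10% to 20%"
```

Under this simple model, any spend paid directly to cloud providers pushes the figure higher. Setting `via_provider_fraction=0.5`, for example, gives 15% to 30%, so the 10-20% range reads as a conservative guess.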

On top of this, startups training their own models have raised billions of dollars in venture capital, the majority of which (up to 80-90% in early rounds) is typically also spent with the cloud providers. Many public tech companies spend hundreds of millions of dollars per year on model training, either with external cloud providers or directly with hardware manufacturers. The cloud providers in question are the Big 3: Amazon Web Services, Google Cloud Platform, and Microsoft Azure.

Nvidia is the biggest winner in generative AI so far, having built strong moats through its GPU architecture, a robust software ecosystem, and deep usage in the academic community.

Other hardware options exist, including Google Tensor Processing Units (TPUs), AMD Instinct GPUs, AWS Inferentia and Trainium chips, and AI accelerators from startups like Cerebras, SambaNova, and Graphcore.

Nvidia GPUs are the same wherever you rent them, and most AI workloads are stateless. This means that AI workloads may be more portable across clouds than traditional application workloads. How, then, can cloud providers create stickiness and prevent customers from jumping to the cheapest option?

So… where will value accrue?

There don't appear to be any systemic moats in generative AI: applications lack strong product differentiation, models face unclear long-term differentiation, cloud providers lack deep technical differentiation, and even hardware companies manufacture their chips at the same fabs.

Based on the available data, it's just not clear if there will be a long-term, winner-take-all dynamic in generative AI. This is weird. But to us, it’s good news. The potential size of this market is hard to grasp — somewhere between all software and all human endeavors — so we expect many, many players and healthy competition at all levels of the stack.

We also expect both horizontal and vertical companies to succeed, with the best approach dictated by end-markets and end-users. For example, if the primary differentiation in the end-product is the AI itself, it’s likely that verticalization (i.e. tightly coupling the user-facing app to the home-grown model) will win out. Whereas if the AI is part of a larger, long-tail feature set, then it’s more likely horizontalization will occur.

***A final note from Synthedia***

Vertical Integration and AI Model Ownership

The models are getting very good at a rapid pace. a16z’s analysis of where competitive moats do and do not exist is worth noting. The likely rise of vertical end-to-end applications that include both a proprietary model and the application functionality is an important consideration.

Many application companies can get started quickly by sourcing someone else’s model. If they scale customers and revenue fast enough, they can raise a lot of funding at a high valuation and use that to build their own proprietary model based on an open source code base. That can save them the considerable cost of renting someone else’s model and yield better margins, which can then be used to grow market share.

This development is relatively new, but it is already under way. Shane Orlick, the president of Jasper AI, told me in a podcast interview last week that the company was investing in its own models. Jasper has no near-term plans to drop GPT-3, but it is looking to bring some models in-house. In the conversational AI space, Cognigy told me this week that they support GPT-3 and other third-party models today but are working on some of their own.

Over time, industry revenue and profits tend to migrate to whoever owns the end customer unless there is some proprietary and essential source material in the value chain. Nvidia has some of that today and is doing well, followed by the large cloud hosting providers, but these are sure to become commoditized. The AI model builders need to differentiate quickly, or they will soon be at the mercy of the applications that own the most customers. It’s no wonder that OpenAI is building its own ChatGPT iOS app.