This is the Real Reason Google Lost $100 Billion in Market Cap Last Week

LLM-driven search is far more expensive than the current model

“From now on, the [gross margin] of search is going to drop forever. There is such margin in search, which for us is incremental. For Google it’s not, they have to defend it all.”

That quote is from a Financial Times interview with Microsoft CEO Satya Nadella, as reported by CNBC. It was alluded to in an earlier interview with The Verge but was not stated so plainly.

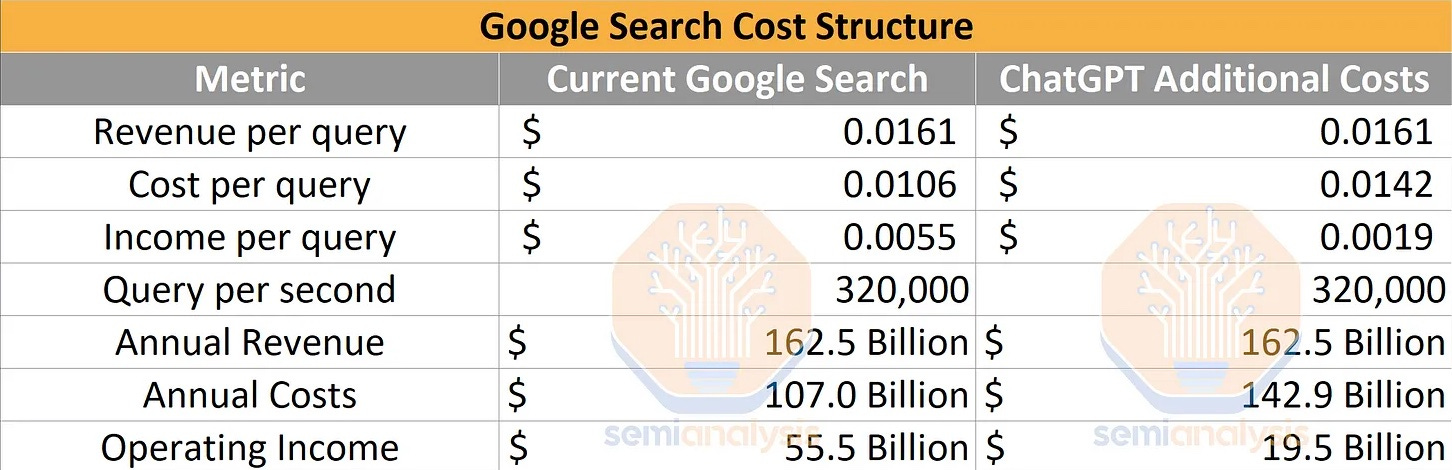

Conversational search, which the new Bing demonstrated this week using an OpenAI large language model, introduces an entirely new user experience that early testers are receiving warmly. It is also far more expensive to operate than traditional search. If Google is forced to shift all of its search traffic to a conversational model similar to the new Bing or Perplexity.ai, Semianalysis estimates that doing so would raise its annual operating expenses by $36 billion.

LLMs are More Computationally Intensive

Microsoft is about to force Google’s hand on large language model (LLM)-driven search. If consumers truly like it better, Google’s dominant search market share could erode quickly. Even a small decline in market share can have substantial short-term financial repercussions for the company. That means Google will have to match Bing’s LLM features.

Semianalysis focuses on the semiconductor industry and routinely evaluates the cost of computing for various software services. Its analysis of the cost difference between traditional search and inference using an LLM is eye-opening.

The table above shows an estimated total cost of 1.06 cents per search against revenue of 1.61 cents, a 34.2% gross margin. Semianalysis estimates that a ChatGPT-style search, which runs LLM inference on each query, costs 1.42 cents. Assuming revenue per search does not change with the new features and competitive environment, the new cost structure delivers only an 11.8% gross margin. Added competition is also almost certain to put downward pressure on search ad prices, further eroding revenue per search.
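The margin arithmetic above is easy to reproduce. The sketch below uses only the per-search figures quoted in this section, plus one labeled assumption: an illustrative volume of about 10 trillion searches a year (not a figure from this article), which turns the 0.36-cent cost difference into an annual total in the same range as the $36 billion estimate cited earlier.

```python
# Per-search economics in US cents, using the figures quoted above.
REVENUE_PER_SEARCH = 1.61   # cents of revenue per search
COST_TRADITIONAL = 1.06     # cents, total cost of a traditional search
COST_LLM = 1.42             # cents, total cost of a ChatGPT-style LLM search


def gross_margin(revenue: float, cost: float) -> float:
    """Gross margin as a fraction of revenue."""
    return (revenue - cost) / revenue


def annual_incremental_cost_usd(searches_per_year: float) -> float:
    """Extra yearly cost, in dollars, of serving every search with the LLM."""
    extra_cents = COST_LLM - COST_TRADITIONAL      # about 0.36 cents per search
    return searches_per_year * extra_cents / 100   # convert cents to dollars


if __name__ == "__main__":
    print(f"traditional margin: {gross_margin(REVENUE_PER_SEARCH, COST_TRADITIONAL):.1%}")
    print(f"LLM margin:         {gross_margin(REVENUE_PER_SEARCH, COST_LLM):.1%}")
    # ASSUMPTION: ~10 trillion searches per year is an illustrative volume,
    # not a number taken from this article.
    print(f"extra cost/year:    ${annual_incremental_cost_usd(10e12) / 1e9:.0f}B")
```

Holding revenue fixed is the same simplification the text makes; any drop in ad prices would push both margins lower.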

In addition, this estimate may understate the current cost of running LLM inference at scale. The margin compression may be more severe.

Phil Ockenden, Microsoft’s CFO for the Windows devices and search division, commented during last week’s earnings call, “For every one point of share gain in the search advertising market, it’s a $2 billion revenue opportunity for our advertising business.”

The likely scenario is that any market share Microsoft gains will come at Google’s expense. At $2 billion per point, every point of market share is very valuable. Even at an 11.8% gross margin, a single point is worth $236 million in new gross profit to Microsoft. For Google, which earns a 34.2% gross margin on that revenue today, the loss would be closer to $700 million in gross profit per share point.
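A quick sketch of the per-share-point arithmetic, assuming (as a simplification) that each point moves the full $2 billion of revenue from Google to Microsoft, that Microsoft earns the 11.8% LLM-search margin on it, and that Google loses it at today’s 34.2% margin:

```python
# Gross profit tied to one point of search-ad share, using figures quoted above.
REVENUE_PER_SHARE_POINT = 2e9   # dollars, per Microsoft's earnings-call comment
LLM_MARGIN = 0.118              # estimated gross margin for LLM-driven search
TODAY_MARGIN = 0.342            # estimated gross margin for traditional search


def gross_profit(revenue: float, margin: float) -> float:
    """Gross profit in dollars for a given revenue and gross margin."""
    return revenue * margin


gain_for_microsoft = gross_profit(REVENUE_PER_SHARE_POINT, LLM_MARGIN)
loss_for_google = gross_profit(REVENUE_PER_SHARE_POINT, TODAY_MARGIN)
print(f"Microsoft gain per point: ${gain_for_microsoft / 1e6:.0f}M")  # $236M
print(f"Google loss per point:    ${loss_for_google / 1e6:.0f}M")     # $684M
```

The $684 million result is the figure rounded to "closer to $700 million" in the text.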

If you think Google has time to defend its market share, consider the comments by Kevin Roose of the New York Times. He had the chance to try out the new Bing this week at the Microsoft event.

“After I turn in this column, I’m going to do something I thought I’d never do: I’m switching my desktop computer’s default search engine to Bing. And Google, my default source of information for my entire adult life, is going to have to fight to get me back.”

I have had a similar experience with Perplexity.ai. I still use Google or You.com for rifle-shot searches where I expect a very specific or very recent answer. Everything else goes to Perplexity.ai first, although that may shift to Bing once I get access to the new version. I would estimate Google has lost about half of my searches. Market share can erode very quickly, and Google must fend off several rivals with new search features at the same time.

In addition, Semianalysis suggests that it would cost Google $100 billion in capital expenditures to acquire the new GPU servers to run all searches through LaMDA. “This is never going to happen, of course,” comments article author Afzal Ahmad.

Google’s Skinny LaMDA for Search

“We’ve been working on an experimental conversational AI service, powered by LaMDA, that we’re calling Bard…

“Bard seeks to combine the breadth of the world’s knowledge with the power, intelligence and creativity of our large language models. It draws on information from the web to provide fresh, high-quality responses.

“We’re releasing it initially with our lightweight model version of LaMDA. This much smaller model requires significantly less computing power, enabling us to scale to more users, allowing for more feedback.”

These statements come from Google CEO Sundar Pichai’s blog post introducing Bard, the company’s answer to ChatGPT. Using the lightweight model, Bard is also expected to deliver faster results than it would with the larger model.

Speed of response has always been one of Google’s hallmark features, and this approach may preserve that reputation. It also has the added benefit of costing far less than running inference on the full-size LaMDA model or the even larger PaLM.
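Why a smaller model is cheaper can be sketched with a common rule of thumb: generating one token with a dense transformer takes roughly 2 × N floating-point operations, where N is the parameter count. The largest published LaMDA has 137 billion parameters and PaLM has 540 billion. Google has not said how big the lightweight LaMDA is, so the 8-billion-parameter size and the 500-token answer length below are hypothetical placeholders; the ratios, not the absolute numbers, are the point.

```python
# Rough per-answer inference compute for dense transformer models.
# Rule of thumb: ~2 * N FLOPs per generated token, where N is the parameter
# count. This ignores attention overhead, batching, and hardware efficiency.

MODELS = {
    "LaMDA (largest published)": 137e9,
    "PaLM": 540e9,
    # HYPOTHETICAL: the lightweight LaMDA's size is undisclosed;
    # 8B parameters is an illustrative placeholder only.
    "lightweight LaMDA (hypothetical 8B)": 8e9,
}

TOKENS_PER_ANSWER = 500  # ASSUMPTION: illustrative answer length in tokens


def flops_per_answer(params: float, tokens: int = TOKENS_PER_ANSWER) -> float:
    """Approximate FLOPs to generate one answer of `tokens` tokens."""
    return 2 * params * tokens


def relative_cost(params: float, baseline: float) -> float:
    """Compute cost of a model relative to a baseline model."""
    return flops_per_answer(params) / flops_per_answer(baseline)


if __name__ == "__main__":
    base = MODELS["lightweight LaMDA (hypothetical 8B)"]
    for name, n in MODELS.items():
        print(f"{name:38s} {flops_per_answer(n):.2e} FLOPs  x{relative_cost(n, base):.1f}")
```

Because cost scales roughly linearly with parameter count under this approximation, whatever the true size of the lightweight model, each query on it should cost a small fraction of one on the full LaMDA or PaLM.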

The Quality Handcuffs

The biggest issue with the small-model approach is the risk it poses to search quality, which is core to Google’s brand. Google vanquished the 20 or so search engines that came before it so quickly because its results were much better. This is what Google is known for. It is also one reason the company is hesitant to hand search over to an LLM that it knows will sometimes produce errors and present them as facts.

This also means that if BingGPT, Perplexity.ai, or other products are found to deliver higher-quality results, Google will be forced to shift to the larger model to compete and protect its market share. It remains to be seen whether consumers will notice the difference and change well-established habits. Kevin Roose, for one, made the switch after only about an hour.

There was a lot of talk, some headlines, and more than a few YouTube videos suggesting that Google lost $100 billion in market capitalization because of an error Bard made during its product introduction. That certainly didn’t help Google’s credibility, but financial analysts were focused on something else: Google faces its first significant threat to its market share in more than 15 years, combined with a likely erosion of gross margins and economic uncertainty that is slowing ad spending growth.

Higher cost and a credible threat to revenue—it’s no wonder Sundar Pichai issued a “code red” after ChatGPT launched.

Hmm... I wonder if the tech geniuses might develop a distributed-model approach, i.e., multiple "smaller" language models, each designed for one of an array of "categories" of questions or requests, with queries routed to them by some type of central system that performs the triage. If this performed fast enough, it might provide lower costs and better accuracy. Just a thought?
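A toy sketch of that triage idea, with a keyword-matching router standing in for the central system. The category names, keywords, and model names are all hypothetical, and a production router would more likely use a trained classifier than keyword overlap.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Router:
    """Toy triage layer that sends each query to a category-specific model.

    HYPOTHETICAL: the model names and keyword sets are placeholders.
    """
    routes: dict[str, set[str]] = field(default_factory=lambda: {
        "weather-model": {"weather", "forecast", "rain", "temperature"},
        "sports-model": {"score", "game", "team", "match"},
        "code-model": {"python", "error", "function", "compile"},
    })
    fallback: str = "general-model"

    def route(self, query: str) -> str:
        # Lowercase and split into alphanumeric words, dropping punctuation.
        words = set(re.findall(r"[a-z0-9]+", query.lower()))
        # Pick the model whose keyword set overlaps the query the most;
        # with no overlap at all, fall back to the general model.
        best, best_hits = self.fallback, 0
        for model, keywords in self.routes.items():
            hits = len(words & keywords)
            if hits > best_hits:
                best, best_hits = model, hits
        return best


router = Router()
print(router.route("Will it rain tomorrow?"))   # weather-model
print(router.route("Best pizza in Chicago"))    # general-model
```

The fallback matters: any query the triage step cannot classify still needs a capable general model, so the savings depend on how much traffic the cheaper specialists can absorb.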