USA TODAY Publisher Gannett Taps Cohere for Generative AI-Driven News Summaries

The company says humans will review every item before publishing

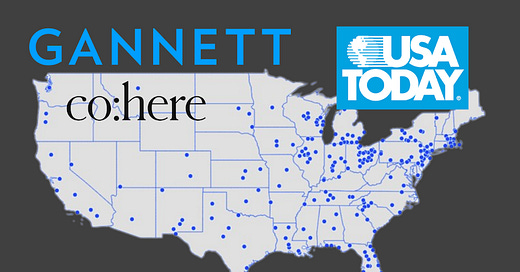

Gannett is the largest U.S. news publisher, with more than 200 daily publications. This month, the company has faced a series of strikes by unionized journalists over delays in contract negotiations. It also plans to use generative AI to automate news summaries and other writing tasks.

Reuters reported yesterday that Gannett plans to “tiptoe into generative AI,” and the company stressed that humans will always have the final say before anything is published.

The technology can’t be deployed automatically, without oversight. Generative AI is a way to create efficiencies and eliminate some tedious tasks for journalists, said Renn Turiano, senior vice president and head of product at Gannett, in a recent interview with Reuters.

…

“The desire to go fast was a mistake for some of the other news services,” Turiano said, without singling out a specific outlet. “We’re not making that mistake.”

A Win for Cohere

Several companies are battling to become the key alternative to OpenAI for large language model (LLM) solutions. Google just opened up access to its PaLM LLM, NVIDIA is pushing its NeMo LLM, and a number of startups are competing for attention and new business.

One of the likeliest paths to success is domain-specific application support. Gannett may give Cohere an entrée into media publishing and a chance to build domain knowledge there.

Reuters reported that Gannett trained Cohere’s LLM on 1,000 previously published articles and the summaries journalists wrote for them. It followed this up with what sounds like a reinforcement learning from human feedback (RLHF) process.

To train the model further, journalists from USA Today’s politics team reviewed and edited automated summaries and bullet point highlights.

Cohere announced a $270 million funding round earlier this month. The Canadian company has been an early standout among LLM startups, along with Anthropic. It has a deal with Google Cloud to provide access to its models, and users can also access them directly through Cohere. Earlier reports pegged its valuation at $2 billion.

Avoiding Generative AI Fails

Gannett hopes to avoid the kind of embarrassing generative AI failures other news publishers have suffered. CNET admitted earlier this year that half of its AI-written stories contained factual errors. BuzzFeed was lampooned in March for 44 travel articles authored by its AI writer, Buzzy the Robot. Many used nearly identical language to describe entirely different travel destinations.

Then there was the health article published by Men’s Journal. Futurism reported that Bradley Anawalt, the chief of medicine at the University of Washington Medical Center, found 18 factual errors in a single article about testosterone. The Daily Beast reported:

When Arena Group, the publisher of Sports Illustrated and multiple other magazines, announced—less than a week ago—that it would lean into artificial intelligence to help spawn articles and story ideas, its chief executive promised that it planned to use generative power only for good.

Then, in a wild twist, an AI-generated article it published less than 24 hours later turned out to be riddled with errors.

It will be interesting to track Gannett’s experience with AI-led writing reviewed by human editors. If errors slip through, plenty of human journalists at Gannett have every incentive to point them out. The Byte reported that both CNET and Arena Group’s Sports Illustrated made unannounced layoffs shortly after beginning their generative AI initiatives.

Posturing vs. Productivity

It is not hard to find a story from a major news outlet about the risk that generative AI will accelerate the spread of misinformation. Of course, these articles always express concern about misinformation spread by websites that are not news organizations. However, we have seen that some news organizations have been cavalier about their own use of generative AI.

This is a problem not because everything on the internet must be true, but because news organizations have tremendous reach and are generally trusted by their readers. An AI-generated article with 18 medical errors in a popular national magazine presents more risk than a random blog, Reddit, or Instagram post.

The fact is that news organizations, like most companies today, are more interested in driving higher productivity and better margins than in mitigating AI-induced risks. You may infer from other posts on Synthedia that we believe the hand-wringing about generative AI risks is typically overblown. However, when the news organizations warning us about these risks show little interest in mitigating them, that deserves to be called out.

It is good to see that Gannett is keeping humans in the loop. All news organizations publish factual errors; humans are error-producing machines. But at least humans are accountable for their stories and have an incentive to reduce errors to serve their customers and protect their reputations.

News organizations must now apply the same standard to AI-generated stories. That will require more work from editors and fact-checkers, because they will not have a journalist as a backstop for verifying information or clarifying the text. It is the publisher’s responsibility to verify factual accuracy.

As long as publishers understand that point, and understand that blaming errors on OpenAI’s GPT models, Google’s PaLM, or Cohere will not be credible, they stand to be very big beneficiaries of the generative AI revolution. Journalists may not be so lucky: layoffs were already a trend and may now be accelerating.

Big win for Cohere. And a lot on the line to get it right.