What it Takes to Customize Generative AI Models - an Overview with NVIDIA's NeMo

Michael Z. Wang talks about data, models, training, inference, and guardrails

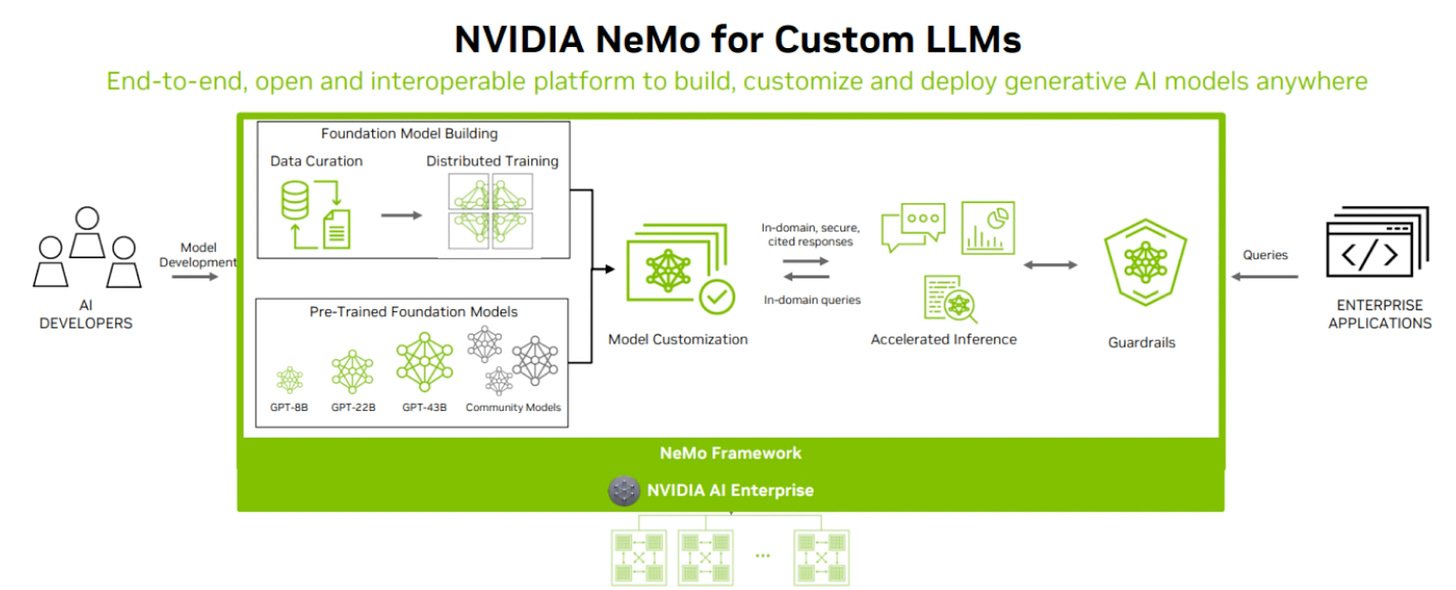

“Enterprises are turning to generative AI to revolutionize the way they innovate, optimize operations, and build a competitive advantage. To achieve this, they need an AI foundry to build generative AI models. NVIDIA’s NeMo framework is an end-to-end, cloud-native framework for curating data, training and customizing foundation models, and running the models at scale, while ensuring appropriate responses with guardrails. The framework also supports multimodality including text-to-text, text-to-image, text-to-3D models, and image-to-image.” Michael Z. Wang, NVIDIA

NVIDIA is well known as a central figure in generative AI because of its GPUs and accelerated computing platforms optimized for foundation model training and inference. The company also provides a number of open-source software tools and frameworks that help companies create their own foundation models or customize and fine-tune existing models.

NeMo is a set of tools and an end-to-end framework to streamline the development of large language model (LLM) enabled applications. It also includes pre-trained AI models available in 14 languages that span ASR, NLP, and TTS technologies. The models are available under the Apache 2.0 open-source license.

NeMo has separate collections for Automatic Speech Recognition (ASR), Natural Language Processing (NLP), and Text-to-Speech (TTS) models. Each collection consists of prebuilt modules that include everything needed to train on your data. Every module can easily be customized, extended, and composed to create new conversational AI model architectures.

You can see NVIDIA’s Michael Z. Wang present an in-depth overview of NeMo in the video above.

Customizing LLMs

While general-purpose LLMs with some prompt engineering or light fine-tuning have helped organizations execute successful proof-of-concept pilots, enterprises face other considerations as they plan migration to production. NVIDIA’s end-to-end LLM customization lifecycle is instructive about what many organizations will need to do when they graduate to customized models.

1. Model Selection or Development

NVIDIA offers several pre-trained models ranging from 8B to 43B parameters and supports using other open-source models of any size. Alternatively, users can build their own model. In NVIDIA’s lifecycle, that process begins with data curation (e.g., selection, labeling), though a better term is data engineering. At a minimum, this involves acquiring, cleansing, validating, and integrating data for use in model training. Data engineers will often also need to conduct additional analysis, design storage, evaluate model training results, and incorporate reinforcement learning from human feedback (RLHF) into the training cycle.
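To make the cleansing and validating steps concrete, here is a minimal sketch in plain Python. It is not NeMo's data curation tooling; the function name `curate` and the `min_words` threshold are hypothetical, chosen only to illustrate the idea of normalizing text, dropping records that are too short to be useful, and removing duplicates before training.

```python
def curate(records: list[str], min_words: int = 3) -> list[str]:
    """Illustrative curation pass (hypothetical helper, not a NeMo API).

    Normalizes whitespace, drops records with fewer than `min_words` words,
    and removes case-insensitive duplicates while preserving order.
    """
    seen = set()
    curated = []
    for raw in records:
        # Cleanse: collapse runs of whitespace and trim the ends.
        text = " ".join(raw.split())
        # Validate: skip empty or very short records.
        if len(text.split()) < min_words:
            continue
        # Deduplicate: compare on a lowercased key.
        key = text.lower()
        if key in seen:
            continue
        seen.add(key)
        curated.append(text)
    return curated


if __name__ == "__main__":
    sample = ["  Hello   world  again ", "hello world again", "too short", ""]
    print(curate(sample))
```

Real pipelines typically add steps such as language filtering, quality scoring, and removal of personal data, which are out of scope for this sketch.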

Building your own foundation model can be costly, complex, and time-consuming. That is why most enterprises will start with a pre-trained foundation model and then focus on customization.

2. Model Customization

Model customization generally involves optimizing model performance with task-specific datasets and adjusting model weights to improve performance. NeMo offers customization recipes, and enterprises can also select models that are already customized to a task and then incorporate proprietary data for fine-tuning.

3. Inference

Inference is the process of running the models in response to user queries. Hardware, architecture, and performance variables all have a significant impact on usability and cost once a model is in production.

4. Guardrails

NVIDIA uses the term guardrails for a broad set of services that sit between the models and applications. These services can review incoming prompts to ensure they don’t violate policies, execute arbitration or orchestration steps, and review model responses for policy adherence. Regardless of the terms used, organizations will generally use guardrails and related intermediary functions to ensure topical relevance, accuracy, safety, privacy, and security.
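The intermediary pattern described above can be sketched in a few lines of Python. This is an illustration of the general idea only, not the NeMo Guardrails API: the class `GuardedModel`, the blocked-term lists, the refusal message, and the stand-in `echo_model` are all hypothetical, and a production system would use far richer policy checks than keyword matching.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

# Hypothetical policy lists, invented purely for illustration.
BLOCKED_INPUT_TERMS = ("password", "social security number")
BLOCKED_OUTPUT_TERMS = ("confidential",)

REFUSAL = "Sorry, I can't help with that request."


@dataclass
class GuardedModel:
    """Wraps any prompt -> text callable with input and output checks."""

    model: Callable[[str], str]

    @staticmethod
    def _violates(text: str, terms: Sequence[str]) -> bool:
        lowered = text.lower()
        return any(term in lowered for term in terms)

    def respond(self, prompt: str) -> str:
        # Input rail: review the incoming prompt before it reaches the model.
        if self._violates(prompt, BLOCKED_INPUT_TERMS):
            return REFUSAL
        answer = self.model(prompt)
        # Output rail: review the model response before it reaches the app.
        if self._violates(answer, BLOCKED_OUTPUT_TERMS):
            return REFUSAL
        return answer


def echo_model(prompt: str) -> str:
    """Stand-in for a real LLM call (assumption for this sketch)."""
    return f"You asked: {prompt}"


if __name__ == "__main__":
    guarded = GuardedModel(echo_model)
    print(guarded.respond("What is NeMo?"))
    print(guarded.respond("Tell me the admin password"))
```

Keeping the checks in a wrapper rather than inside the model call means the same policies can be applied regardless of which model sits behind the application.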

5. Applications

NVIDIA’s diagram lists enterprise applications as if they are LLM-ready, but that is often not the case. Sometimes, existing applications are directly connected to LLMs to enable new features. However, when organizations are building assistants for accessing knowledge or executing tasks, there is often a new application designed specifically for natural language interfaces.

Why Do Companies Want Custom Models?

Most companies are focused today on using proprietary models, and that often makes sense. It helps them deploy solutions faster. They don’t have to worry about building up a team of data scientists who are experts in building and maintaining AI models. It can save on upfront costs. However, companies are increasingly interested in deploying custom generative AI models for many reasons, including:

Data privacy

Data and application security

Performance

Cost

Competitive advantage

Synthedia forecasts that proprietary models from OpenAI, Anthropic, and other providers will continue to dominate the generative AI application space over the next two years. At the same time, we expect custom AI model adoption to rise quickly. NVIDIA’s NeMo is likely to be one of the solutions that companies adopt as they look to better align generative AI solutions with their objectives and strategy.
