When AI Doesn't Match the Hype: Full Automation but Too Many Errors

Kalendar says its error rate is 10%. Our experience suggests it is much higher.

Last week, we highlighted claims that DoNotPay’s Robot Lawyer might have a beating heart underneath the surface. A paralegal and a journalist, testing the service separately, speculated that the long delays in receiving AI-generated documents suggest humans are reviewing and editing them before they are shared with customers. Other features look more like “search and replace” than AI.

Our example today is about a different problem. If Kalendar.ai had even minimal human review, it could avoid some easily identified errors. We all want ChatGPT without hallucinations and generative images without inappropriate or incoherent elements. Today, however, the goal is to limit errors rather than eliminate them. And an underdeveloped AI model combined with a few unrealistic assumptions can create a bit of a mess.

When AI Goes Wrong

Kalendar.ai says you can “book new revenue on autopilot with AI,” and it describes its service as “machine learning-powered audience sourcing.” What does that mean? You enter the job titles of the people you want to connect with, and Kalendar appears to match LinkedIn profile data against an email database to produce a list of target contacts. You may also need to upload some existing contacts.

The software then uses internet data scraped about each contact to create what it calls a personalized email, along with a short video slideshow and a contact sign-up form. The GPT-3-powered email mixes common boilerplate with generated passages, which can mention the contact’s work history, their company’s products or positioning, an invitation to connect, and one more attempt at referencing a personal detail about the recipient. That final element does not appear in the example from Kalendar’s website below, but it shows up in some of the unsolicited emails I have received.

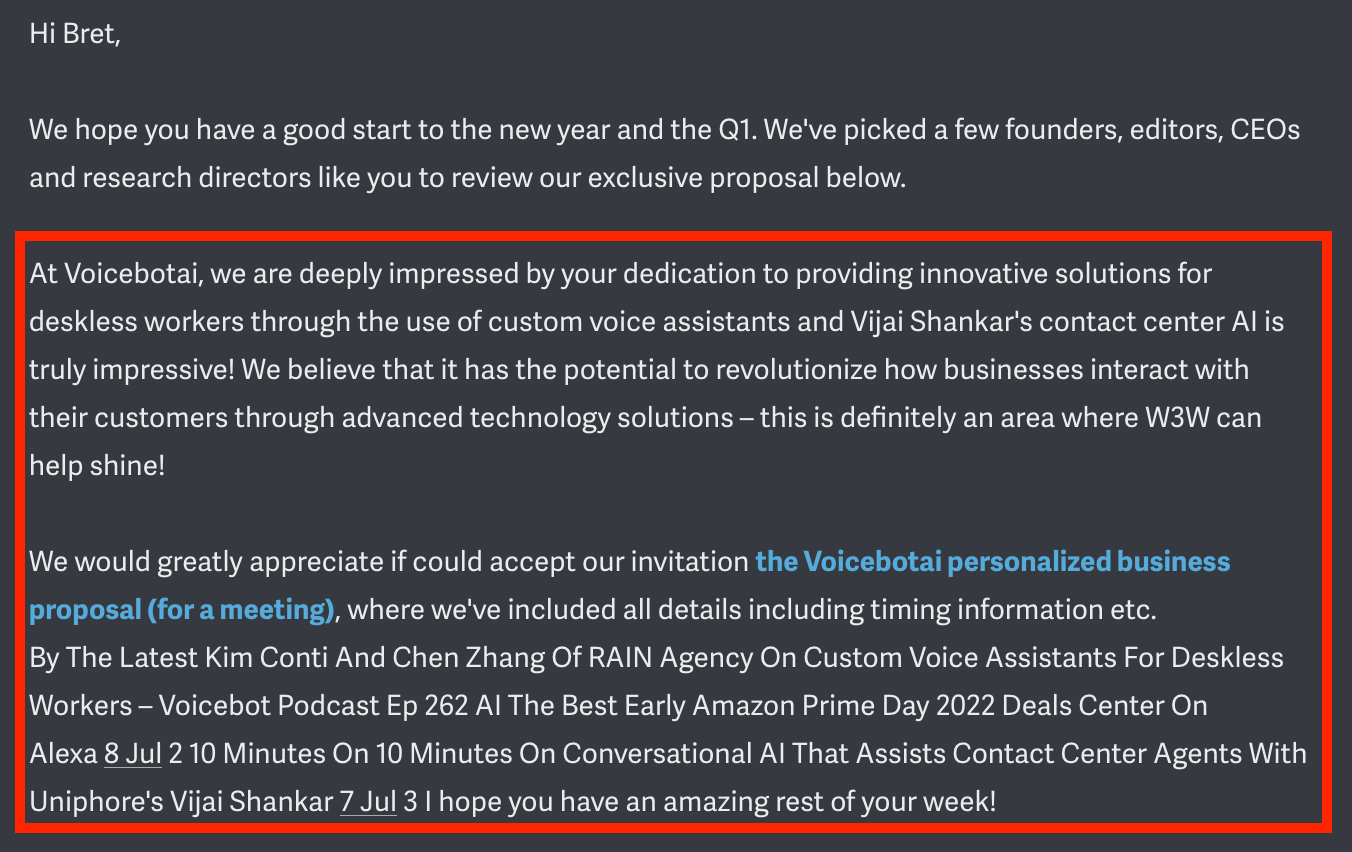

The example above seems pretty straightforward. However, take a look at the image at the top of this post. That is the actual email I received from a Kalendar customer using the email marketing service. The first paragraph pulled information from my LinkedIn profile to make it seem tailored to me or people like me. It is fine: not an amazing introduction, but nothing is wrong with it.

In the second paragraph, things go off the rails. It opens with the awkward “At Voicebotai, we…”, but the sender does not speak for Voicebot.ai or for me. That slot should have held the name of the company reaching out to me.

That sentence continues with a factual error that even a cursory glance at what Voicebot.ai actually does would have prevented.

“We are deeply impressed by your dedication to providing innovative solutions for deskless workers through the use of custom voice assistants and Vijai Shankar's contact center AI is truly impressive!”

Voicebot does not provide solutions for deskless workers. The AI pulled that from a Voicebot.ai headline about a podcast interview with executives from RAIN, the company that built those solutions. The reference to Vijai Shankar comes from a video interview on the Voicebot.ai website.

The final paragraph is just gibberish, scraped either from the Voicebot.ai homepage, where it picked up recent posts, or possibly from my LinkedIn post feed.

This is an automated solution, so the premise is “bots only,” with no human review. Of course, I am not really a good target for the sender, so maybe it doesn’t matter that the email was basically gibberish. They didn’t lose a potential customer. Still, I can’t help but wonder how many recipients formed a poor opinion of the company using Kalendar and, as a result, will never become prospects.
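Some of these mistakes would not even need a human reviewer to catch. As a purely hypothetical illustration (this is not how Kalendar works, and the function names are invented for this example), a simple pre-send check could flag any draft that speaks in the recipient’s voice, such as the “At Voicebotai, we…” opener. The Python sketch below assumes the generator knows the recipient’s company name, which seems likely since it scraped that name in the first place.

```python
import re


def _normalize(name: str) -> str:
    # Lowercase and drop everything except letters and digits,
    # so "Voicebot.ai" and "Voicebotai" both become "voicebotai".
    return re.sub(r"[^a-z0-9]", "", name.lower())


def speaks_as_recipient(draft: str, recipient_company: str) -> bool:
    """Hypothetical pre-send check: does the draft say "At <recipient's company>, we"?"""
    target = _normalize(recipient_company)
    if not target:
        return False
    # Allow punctuation or spaces between the name's characters ("Voicebot.ai",
    # "Voicebotai", "Voicebot AI"), then require ", we" right after the name.
    name_pattern = r"[\W_]*".join(re.escape(ch) for ch in target)
    pattern = r"\bat\s+" + name_pattern + r"\s*,\s*we\b"
    return re.search(pattern, draft, flags=re.IGNORECASE) is not None


if __name__ == "__main__":
    draft = "At Voicebotai, we are deeply impressed by your dedication..."
    print(speaks_as_recipient(draft, "Voicebot.ai"))  # True
    print(speaks_as_recipient("At Acme, we help sales teams.", "Voicebot.ai"))  # False
```

A rule this narrow catches only one failure mode. The deskless-worker mix-up would require checking the claim against what the recipient’s company actually does, which is much harder to automate and is exactly where human review earns its keep.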

Continual Improvement?

Ravi Vadrevu, founder of Kalendar, told me via email, “[It] requires a lot of training on edge cases to make it not speak gibberish… There’s a 10% error rate for the models we use to keep it free for the recipients. By next month, we wouldn’t have them - it’s training as we speak! However, the next 90% love it and connect with our customers genuinely…We are still doing a lot of work to improve it, but we have come a long way!”

I appreciate Ravi answering my questions, and Kalendar’s generative AI models may well improve rapidly with more training. At this point, however, the product looks like an early beta or even an alpha. Let’s put his 10% claim to the test. I have been fortunate enough to receive four emails generated by Kalendar on behalf of two different companies.

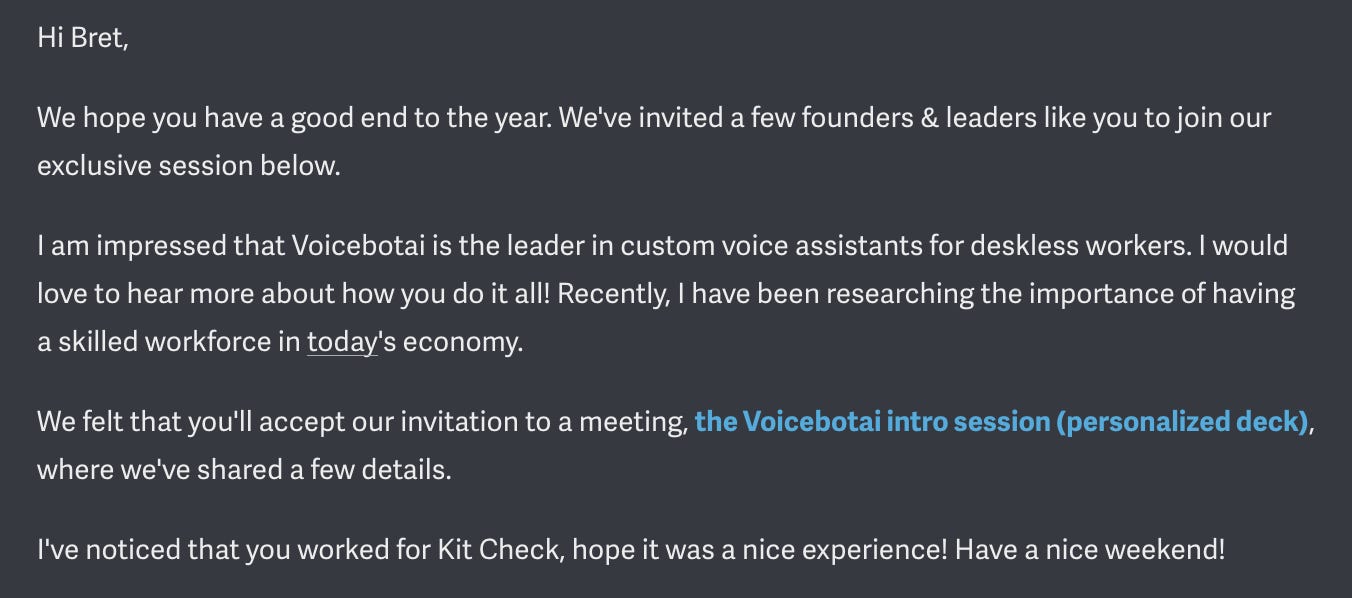

The example immediately above is shorter, which reduces the risk of an error. Even so, it still assumes Voicebot.ai provides conversational AI for contact center agents rather than writing about the topic. It also brings back the deskless worker topic. In the final sentence of the second paragraph, the email appears to promise some interesting information about Amazon, yet it provides no link.

The third email repeats the same deskless worker error. The rest of it is not well written, but it contains no other factual errors or gibberish. Should we consider this a win? I still think it has to be called out as an error.

Finally! The last email was short enough that the risk of errors and gibberish dropped sharply. Is it a good email? It is more generic than the others. I suspect this is part of Kalendar’s A/B testing to see which versions perform best. This email might not turn me off a vendor (i.e., the customer using Kalendar to spam me), but I can’t imagine clicking a link. There is no compelling call to action; you might say there is no call to action at all.

The Results

By my assessment, 75% of the emails I have received from Kalendar’s AI-enabled email generator (three of four) contain factual errors or gibberish that would ensure I never respond. If I took the time to notice the vendor in the signature block, I would surely think less of them. The one email that appears error-free was so generic that it is hard to imagine it converting well.
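How far is 75% from the claimed 10%? One rough way to check, assuming each email is an independent draw with a true error rate of 10%, is the binomial probability of seeing at least three flawed emails out of four. This is a back-of-the-envelope sketch, not a rigorous study: four emails from two senders is a tiny, non-random sample, and what counts as an “error” is my own judgment.

```python
from math import comb


def prob_at_least(k: int, n: int, p: float) -> float:
    """P(X >= k) for X ~ Binomial(n, p): the chance of k or more errors in n emails."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


if __name__ == "__main__":
    # 3 of the 4 emails received contained errors; the stated error rate is 10%.
    observed_rate = 3 / 4
    p_value = prob_at_least(3, 4, 0.10)
    print(f"observed error rate: {observed_rate:.0%}")  # 75%
    print(f"P(>=3 errors in 4 | 10% rate): {p_value:.4f}")  # 0.0037
```

Under those assumptions, the chance is about 0.37%, or roughly 1 in 270, which is hard to square with a 10% error rate.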

I am bullish on the power of large language models (LLMs) and the new applications they are enabling. However, there will be many bumps on the road to AI nirvana. Today, it is risky for enterprises to use AI-generated text in customer interactions without significant fine-tuning or human review. If you simply want broad reach and are comfortable with the risk, it may be viable, but it does not seem like a long-term strategy.

I’ll keep an eye on Kalendar and see whether it gets down to a 10% error rate or better. Let me know if you have seen emails like these, or other AI-generated content that makes a good example of #AIfails.