5 Key Generative AI Announcements from Google Cloud Next

Is Google Closing the Gap?

Google made several generative AI announcements at its annual Cloud Next conference, which focuses on what you can do with Google Cloud. Here are five that are noteworthy.

1. Agents (or are they copilots?)

Okay, Google was not talking about the traditional view of AI agents at Cloud Next when it introduced Vertex AI Agent Builder. These are generative AI applications that Microsoft calls Copilots and that others (e.g., OpenAI) might call assistants or chatbots.

A classical definition of AI agents generally implies some autonomy and "agency" to make decisions when interacting with other systems, such as web services or applications, rather than simply extracting information from data sources. Analysis, summarization, and generation don't fit this classical definition of AI agents. Google is referring to request-response systems that leverage generative AI. Google's AI Agents are ChatGPT-style solutions grounded in your defined data sources and preferences.

Now that Google's annoying definitional adventures are out of the way: this is a useful advance for the Vertex AI stack and a step toward closing the gap with Microsoft's Copilot Studio. Vertex AI and Azure AI Studio both offer developer tools for deploying applications that leverage generative AI models. Like Copilot Studio, Vertex AI Agent Builder is a low-code or no-code environment for building productivity solutions. According to the blog post announcing the solution:

Easily build production-grade AI agents using natural language

The no-code agent building console in Vertex AI Agent Builder enables developers to build and deploy generative AI agents using Google’s latest Gemini models. To start building agents, developers navigate to the Agents section in Vertex AI. Here, they can create new agents in minutes. All they need to do is define the goal they want the agent to achieve, provide step-by-step instructions that the agent should follow to achieve that goal, and share a few conversational examples for the agent to follow. For complex goals, developers can easily stitch together multiple agents, with one agent functioning as the main agent and others as subagents. A subagent can pass information to another subagent or the main agent, allowing for seamless and sophisticated workflows. These agents can easily call functions, connect to enterprise data to improve response factuality, or connect to applications to perform tasks for the user.
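The main-agent/subagent pattern described in the quote can be sketched in a few lines. This is a minimal, hypothetical illustration, not the Agent Builder API: `MainAgent`, `register`, `route`, and the two stub subagents are names invented for this example, and simple keyword matching stands in for the Gemini model that would actually decide which subagent should handle a request.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

# Hypothetical: a subagent is modeled as a function from a request to a reply.
# In Agent Builder a subagent would be backed by a Gemini model; here it is
# stubbed so the routing logic runs on its own.
Subagent = Callable[[str], str]


@dataclass
class MainAgent:
    goal: str
    subagents: Dict[str, Subagent] = field(default_factory=dict)

    def register(self, topic: str, agent: Subagent) -> None:
        # Associate a topic keyword with the subagent that handles it.
        self.subagents[topic] = agent

    def route(self, request: str) -> str:
        # Naive keyword routing stands in for the model choosing the
        # subagent best suited to the request.
        for topic, agent in self.subagents.items():
            if topic in request.lower():
                return agent(request)
        return f"[{self.goal}] No subagent matched; answering directly."


def billing_agent(request: str) -> str:
    return "billing: looked up the invoice"


def shipping_agent(request: str) -> str:
    return "shipping: order is in transit"


main = MainAgent(goal="customer self-service")
main.register("invoice", billing_agent)
main.register("order", shipping_agent)

print(main.route("Where is my order?"))     # shipping: order is in transit
print(main.route("Can I get my invoice?"))  # billing: looked up the invoice
```

A real deployment would let the model pick the subagent and pass intermediate results between subagents; the keyword match only keeps the sketch self-contained.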

Google Cloud CEO Thomas Kurian provided a high-level overview of several different types of agents. These included:

Customer Agents for customer self-service

Employee Agents for employee self-service

Creative Agents for creative activities such as image generation and marketing

Data Agents for analyzing data using natural language

Code Agents for writing software code

Security Agents to assist security operations teams in conducting investigations

The solution provides a number of features, including:

RAG - retrieval-augmented generation, a data vectorization and retrieval technique that reduces the incidence of large language model (LLM) hallucinations and grounds responses in specified data.

Search - enables users to access updated information from the web.

Run code - there is a code interpreter that can execute Python code for data analysis, visualization, and math.

Function calling - Gemini models can select and call the functions, from a set of APIs, that are best suited to fulfill a user request.

Connectors - out-of-the-box integrations for commonly used data sources and applications.
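To make the RAG entry concrete, here is a minimal sketch of the retrieve-then-ground step, assuming a toy bag-of-words vector in place of a real embedding model and a small in-memory list in place of an enterprise data store. The function names (`embed`, `cosine`, `retrieve`, `grounded_prompt`) and the sample documents are hypothetical; the point is only that the model receives the most relevant passages and is told to answer from them.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    # Toy "vectorization": a bag-of-words term-count vector.
    # A real RAG pipeline would use a learned embedding model instead.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-count vectors.
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 1) -> List[str]:
    # Rank documents by similarity to the query and keep the top k.
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def grounded_prompt(query: str, docs: List[str], k: int = 1) -> str:
    # Put the retrieved passages in the prompt so the answer is grounded in them.
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


docs = [
    "Refunds are issued within 14 days of a returned item being received.",
    "Our support desk is open Monday through Friday, 9am to 5pm.",
    "Premium plans include priority support and a dedicated account manager.",
]
print(grounded_prompt("How long do refunds take?", docs))
```

The grounding instruction in the prompt is what lets RAG reduce hallucinations: the model is steered toward the retrieved text rather than its general training data.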

2. Gemini 1.5 Pro

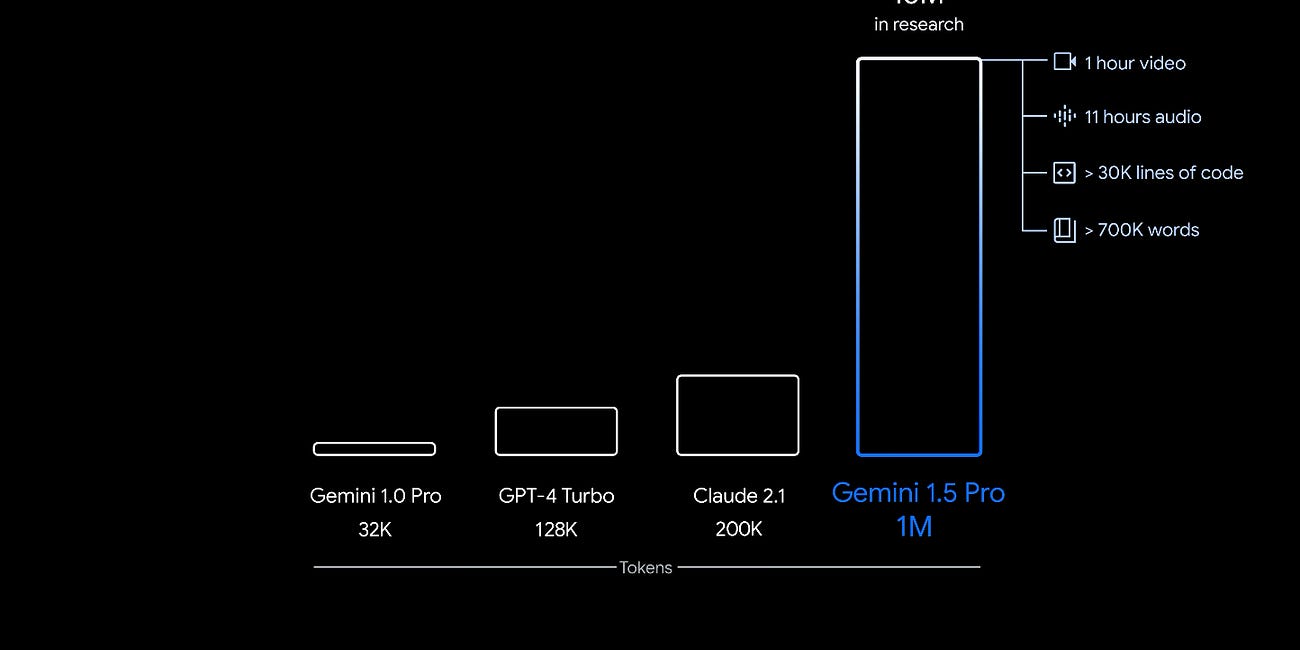

Synthedia already has an extensive write-up on Gemini 1.5 Pro. The biggest news about that model is its 1 million token context window, which is five times larger than the 200,000-token window of Anthropic's Claude 3 and nearly eight times larger than the 128,000-token window of OpenAI's GPT-4 Turbo. The news at Cloud Next is that it is now available to everyone in public preview through Vertex AI.

3. Gemini Code Assist

Google also announced a coding assistant that specializes in developing APIs that enable your applications to access other applications and endpoints.

Gemini Code Assist simplifies the process of building enterprise grade APIs and integrations using natural language prompts that don't require special expertise.

While off-the-shelf AI assistants can help with building APIs and integrations, the process is still time-intensive because every enterprise is unique, each with their own requirements, schemas, and data sources. Unless the AI assistant understands this context, users still need to manually address these items.

Gemini Code Assist understands enterprise context such as security schemas, API patterns, integrations, etc., and uses it to provide tailored recommendations for your use case.

This isn't replacing Codey, Google's GitHub Copilot-style developer assistant. It is a specialized solution for API development.

4. Imagen 2 with Text-to-Live

Google also announced that Imagen 2 is generally available through Vertex AI for image creation and includes a new feature, text-to-live. This feature goes beyond an image by animating it or creating a short video clip to express a requested concept.

Granted, this is a preview feature, and it appears to have been added to the event at the last minute. There is a short demonstration video, but the documentation provides very little detail.

5. Google Axion Arm Chip

Google is intensifying its efforts to take on AI workloads that today are largely dominated by NVIDIA. According to the announcement:

Google Axion Processors, our first custom Arm®-based CPUs designed for the data center. Axion delivers industry-leading performance and energy efficiency and will be available to Google Cloud customers later this year.

…

Axion processors combine Google’s silicon expertise with Arm’s highest performing CPU cores to deliver instances with up to 30% better performance than the fastest general-purpose Arm-based instances available in the cloud today, up to 50% better performance and up to 60% better energy-efficiency than comparable current-generation x86-based instances.

This will not be a surprise to many Synthedia readers, as we discussed Google’s interest in directly challenging NVIDIA last week.

Closing the Gap?

Google once again made a number of announcements that all have merit, but they often seem randomly selected. When Microsoft takes the stage at Ignite to promote Azure, it has a fully fleshed-out idea about how all of the components fit together. Google has many of those same components, but because it is largely playing catch-up, the features often come across as a list of piecemeal capabilities.

That said, this week's announcements will help close the gap with Microsoft and Azure, which have a clear lead today in generative AI computing and development solutions. In late 2022, Google suddenly found it was a year behind the OpenAI-Azure offerings. The company has made great strides since then, and its solution set is becoming more fully formed. A key question now is whether its customers will notice.
