Google Goes Big on Context with Gemini 1.5 and Dips Into Open-Source with Gemma

The moves reflect an update in Google's technology and strategy

Google has been busy well beyond fixing the system prompt issues for Gemini image generation. Gemini 1.5 is not just an upgrade of the Gemini model. It’s an entirely new model architecture. It arrives with a baseline 128k context window. However, Google is stressing that it has an eye-popping one million token context window currently being evaluated by early testers. It accepts multimodal inputs. A demonstration video depicts an excellent example of abstract reasoning that matches an image with a specific moment in the transcript of the Apollo moon landing. Impressive stuff. Granted, mileage may vary.

In addition, Gemma arrived this week as Google’s contribution to the small large language model (sLLM or SLM) category with 2 billion and 7 billion parameter foundation models. Google reports that the 7B parameter model materially outpaces Meta’s Llama 2 7B and 13B on a select set of public benchmarks. The rationale for comparing Llama 2 models to the new Gemma models is that both have been released as open models. Gemma’s license includes usage restrictions, so it does not follow traditional open-source licensing models, such as Apache or MIT. However, the models can be used for private and commercial purposes, provided you comply with the requirements.

The introduction of Gemini Pro 1.5 and Gemma was unexpected, particularly so soon after the release of Gemini Pro and Ultra 1.0. This is an indication of the scope of Google’s research activity and reflects a new phase of the generative AI market’s competitive dynamics.

Gemini Pro 1.5

Gemini 1.5’s one million token context window is the feature that immediately stands out if you are simply looking at the model card details. Allen Firstenberg, a Google Developer Expert, was provided early access to the model and uploaded an English translation of Les Miserables to test out the context management. According to Firstenberg, the 655,478-word book represented 873,471 tokens.
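The figures above imply a tokens-per-word ratio, which is a handy rule of thumb for judging whether a document will fit in a given context window. The sketch below is only arithmetic on the numbers reported in this article. It assumes "128k" and "200k" mean 128,000 and 200,000 tokens, and it says nothing about how any particular tokenizer works.

```python
# Figures reported for the English translation of Les Miserables.
WORDS = 655_478
TOKENS = 873_471

# Context window sizes mentioned in this article, in tokens.
# Assumption: "k" is treated as 1,000 for simplicity.
WINDOWS = {
    "128k baseline": 128_000,
    "200k (Claude 2.1)": 200_000,
    "1M (early testers)": 1_000_000,
}


def tokens_per_word(tokens: int, words: int) -> float:
    """Average number of tokens used per word of text."""
    return tokens / words


def fits(tokens: int, window: int) -> bool:
    """True if the document fits in the window with no room reserved for output."""
    return tokens <= window


ratio = tokens_per_word(TOKENS, WORDS)
print(f"{ratio:.2f} tokens per word")

for name, size in WINDOWS.items():
    share = TOKENS / size
    verdict = "fits" if fits(TOKENS, size) else "does not fit"
    print(f"{name}: {verdict} ({share:.0%} of the window)")
```

In practice, some of the window must also be left for the prompt and the response, so the usable share is smaller than this calculation suggests.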

However, before he uploaded the document, he added three facts at random locations in the text. He then asked questions about the book, including the three facts. “It found two of the three perfectly,” he said.
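This kind of test is easy to reproduce in outline. The sketch below is a hypothetical harness, not Firstenberg's actual procedure: the function names, the planted sentences, and the answers are all invented for illustration, and no model is called. It plants sentences at random paragraph boundaries and then scores how many planted facts show up in a set of answers.

```python
import random


def insert_needles(text: str, needles: list[str], seed: int = 0) -> str:
    """Insert each needle sentence at a random paragraph boundary."""
    rng = random.Random(seed)  # seeded so the placement is repeatable
    paragraphs = text.split("\n\n")
    for needle in needles:
        position = rng.randint(0, len(paragraphs))
        paragraphs.insert(position, needle)
    return "\n\n".join(paragraphs)


def recall(answers: dict[str, str], expected: dict[str, str]) -> float:
    """Fraction of questions whose expected keyword appears in the answer."""
    hits = sum(
        1
        for question, keyword in expected.items()
        if keyword.lower() in answers.get(question, "").lower()
    )
    return hits / len(expected)


# Placeholder book text and invented facts, for illustration only.
book = "\n\n".join(f"Paragraph {i} of the novel." for i in range(100))
needles = [
    "The innkeeper owned a green parrot.",
    "The bridge was painted in 1802.",
    "The baker's cat was named Pascal.",
]
haystack = insert_needles(book, needles)

# Hypothetical model answers: two correct, one confident miss.
expected = {"parrot color?": "green", "bridge year?": "1802", "cat name?": "Pascal"}
answers = {
    "parrot color?": "The parrot was green.",
    "bridge year?": "It was painted in 1802.",
    "cat name?": "The text does not mention a cat.",
}
print(f"recall: {recall(answers, expected):.2f}")
```

Keyword matching is a crude scoring rule. A real evaluation would need to check that the answer is grounded in the planted sentence rather than a lucky guess.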

The model was unable to compare the uploaded file with the original text, though it confidently gave an answer that had nothing to do with the inserted facts. This is a good example of what you should expect from LLMs. A model may be able to accurately identify what is in the context window, but not necessarily provide high fidelity in comparing it to training data. To be fair, in this case, it is unclear whether an English translation of Les Miserables was included in the training data.

Google provided a video screen capture of the model analyzing a 330,000-token copy of the transcripts of the Apollo lunar mission. It was able to find three comedic moments and excerpt them from the transcript, while also providing timestamps so they could be compared against the original text.

In addition, the demonstration includes uploading a two-dimensional line drawing of a boot in the style of astronaut gear and entering the prompt, “What moment is this?”

The model correctly identified it as Neil’s first steps on the moon. Notice how we didn’t explain what was happening in the drawing. Simple drawings like this are a good way to test if the model can find something based on just a few abstract details.

The Value of Large Context Windows

It is logical that increasing the context window size has a large impact initially and then exhibits diminishing marginal returns when considered across all potential use cases. Most use cases don’t need giant context windows. However, some require them.

Anthropic was the first leading foundation model supplier to introduce a truly large context window. In May 2023, it rolled out a 100k token context window for the Claude LLM. Shortly after OpenAI announced a 128k token context window in November 2023, Anthropic introduced the Claude 2.1 model that supports 200k.

Some use cases need to access large quantities of data that change infrequently. In those instances, the data can economically be embedded in a vector database and accessed with a modest context window model. Other use cases require access to large quantities of frequently changing or perishable data or need one-time access to it. These are scenarios where accessing the information in memory (i.e., in the context window) may be more efficient. There are not as many of these use cases, but they may be very valuable.
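The difference between the two approaches can be shown with a toy example. The sketch below is a simplified illustration under stated assumptions: word counts stand in for learned embeddings, a Python list stands in for a vector database, and the function names and sample chunks are hypothetical. The retrieval path sends only the best-matching chunk to the model, while the long-context path sends everything.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag of lowercase word counts (a stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], k: int = 1) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(question: str, chunks: list[str], use_full_context: bool, k: int = 1) -> str:
    """Either stuff every chunk into the prompt or only the retrieved ones."""
    context = chunks if use_full_context else retrieve(question, chunks, k)
    return "\n".join(context) + "\n\nQuestion: " + question


chunks = [
    "The quarterly revenue report covers cloud growth.",
    "The discovery file lists the contract signing date.",
    "Patient intake notes describe seasonal flu cases.",
]
question = "What is the contract signing date?"

short_prompt = build_prompt(question, chunks, use_full_context=False)
long_prompt = build_prompt(question, chunks, use_full_context=True)
print(len(short_prompt), "characters with retrieval")
print(len(long_prompt), "characters with the full context")
```

The retrieval path pays an indexing cost up front, which is why it suits data that changes infrequently. The full-context path skips indexing but pays for every token on every request.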

Consider text-based financial services market analysis, patient population health data, assessing daily news, or voluminous legal documentation gathered during pre-trial discovery. The large context window options are designed for research tasks. In these cases, waiting a few minutes or even longer to find just the right information is worth the extra token cost and performance latency.

Mixture of Experts

Google also revealed that Gemini Pro 1.5 is based on a Mixture of Experts (MoE) architecture. This appears to be a change from the Gemini 1.0 series of models. According to Demis Hassabis, CEO of Google DeepMind:

“Gemini 1.5 delivers dramatically enhanced performance. It represents a step change in our approach, building upon research and engineering innovations across nearly every part of our foundation model development and infrastructure. This includes making Gemini 1.5 more efficient to train and serve, with a new Mixture-of-Experts (MoE) architecture.

“The first Gemini 1.5 model we’re releasing for early testing is Gemini 1.5 Pro. It’s a mid-size multimodal model, optimized for scaling across a wide-range of tasks, and performs at a similar level to 1.0 Ultra, our largest model to date. It also introduces a breakthrough experimental feature in long-context understanding.

…

“As we roll out the full 1 million token context window, we’re actively working on optimizations to improve latency, reduce computational requirements and enhance the user experience. We’re excited for people to try this breakthrough capability, and we share more details on future availability below.”

GPT-4 is thought to be based on an MoE architecture, and Mistral openly confirms that MoE is the basis for Mixtral 8x7B. An MoE model is a collection of complementary expert models with various specialties. While Mixtral includes eight expert models, Google and Jagiellonian University researchers suggest in a recent research paper that an MoE could consist of thousands of “sub-networks,” or models.
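The core idea of routing an input to a few experts can be sketched in a few lines. This is a minimal illustration of top-k gating in general, not a description of how Gemini 1.5, GPT-4, or Mixtral is actually implemented. The gate weights and the toy experts are invented, and the choice of eight experts with two active per input is an assumption made to echo the Mixtral expert count mentioned above.

```python
import math


def softmax(values: list[float]) -> list[float]:
    """Numerically stable softmax."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, gate_weights, experts, top_k=2):
    """Route x to the top_k highest-scoring experts and blend their outputs."""
    # One gate score per expert: dot product of that expert's gate row with x.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Only the top_k experts are evaluated; the others cost nothing for this input.
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    weights = softmax([logits[i] for i in top])
    outputs = [experts[i](x) for i in top]
    combined = [
        sum(w * out[d] for w, out in zip(weights, outputs)) for d in range(len(x))
    ]
    return combined, top


# Eight toy experts: expert i simply scales its input by (i + 1).
experts = [lambda x, s=s: [s * v for v in x] for s in range(1, 9)]
# Hypothetical gate weights: expert i scores i times the first input feature.
gate_weights = [[float(i), 0.0] for i in range(8)]

combined, chosen = moe_forward([1.0, 0.0], gate_weights, experts, top_k=2)
print("experts used:", chosen)
print("output:", [round(v, 3) for v in combined])
```

Because only two of the eight experts run for a given input, the compute per input is a fraction of what running every expert would require, which is the source of the efficiency gains discussed in this section.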

The benefits of MoE models include both faster training time and higher accuracy. Faster training translates directly into lower costs. The architecture could also be critical for reducing cost and latency during inference, which would benefit generative AI workloads, particularly those involving large context windows.

The Open-Source Foothold

Google says that Gemma is based on the Gemini architecture, though it appears to be on the 1.0 approach, as there is no mention of MoE in the technical report. Gemma 7B outpaced Meta’s Llama 2 7B and 13B, as well as Mistral 7B, by significant margins in 11 of 19 public benchmarks and tied for the lead with Mistral 7B for one. Mistral 7B showed higher performance on four, and Llama 2 was top-ranked on three.

Groupings of question answering and reasoning benchmarking showed relatively comparable performance for Gemma 7B, Llama 2 7B, Llama 2 13B, and Mistral 7B. Gemma was much stronger for math/science and coding but with scores well below the 50th percentile. Mistral 7B was second. It is worth noting that Google chose not to show the Mixtral 8x7B results, which presumably would have been far superior.

A key contributor to this strong performance was, no doubt, the fact that Gemma models were trained on upwards of 6 trillion tokens. Previous analyses by Synthedia have shown that the scale and quality of training tokens are the key differentiators beyond parameter count. This is the likely explanation for why some models with fewer parameters but more training data outperform their larger peers.
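One simple way to express training scale relative to model size is tokens per parameter. The sketch below is back-of-the-envelope arithmetic only. It assumes the nominal 7 billion parameter count and treats "upwards of 6 trillion" as exactly 6 trillion, so the true figure may differ.

```python
# Assumptions: nominal parameter count and the lower bound of the token count.
TRAINING_TOKENS = 6e12  # "upwards of 6 trillion" tokens
NOMINAL_PARAMS = 7e9    # Gemma 7B, using the nominal size in its name


def tokens_per_parameter(tokens: float, params: float) -> float:
    """Training tokens seen per model parameter."""
    return tokens / params


ratio = tokens_per_parameter(TRAINING_TOKENS, NOMINAL_PARAMS)
print(f"about {ratio:.0f} training tokens per parameter")
```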

It is even more significant that Google decided to release the Gemma models as open (though not quite open-source) for anyone to use for private or commercial purposes. The models are also small enough to download onto many developer laptops. There is a partnership with Hugging Face for model access, or users can go with the Google Cloud option. Google surely hopes to drive more interest in its broader model family by providing a free, open model option. This is a smart move, given how far behind it is today from a market share perspective.
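Whether a model fits on a laptop depends mostly on parameter count and numeric precision. The sketch below is a rough estimate under stated assumptions: it uses the nominal 2 billion and 7 billion parameter counts, counts only the weights, and ignores activations, cache memory, and runtime overhead. The precision options listed are common choices, not formats confirmed for Gemma in this article.

```python
# Bytes needed to store one parameter at common precisions (assumed options).
BYTES_PER_PARAM = {
    "float32": 4.0,
    "float16 or bfloat16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}

# Nominal parameter counts taken from the model names.
MODELS = {"Gemma 2B": 2e9, "Gemma 7B": 7e9}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    return params * bytes_per_param / 1e9


for model, params in MODELS.items():
    for precision, size in BYTES_PER_PARAM.items():
        print(f"{model} at {precision}: {weight_memory_gb(params, size):.1f} GB")
```

By this estimate, the 7B model needs about 14 GB for weights at 16-bit precision, which is within reach of a well-equipped laptop, and considerably less if quantized.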

If you want to try the models, you might want to start with the Google Cloud option. Google is offering $300 in computing credits for first-time users and up to $500,000 for researchers. According to Google:

“Gemma is built for the open community of developers and researchers powering AI innovation. You can start working with Gemma today using free access in Kaggle, a free tier for Colab notebooks, and $300 in credits for first-time Google Cloud users. Researchers can also apply for Google Cloud credits of up to $500,000 to accelerate their projects.”

A Straddle Strategy

These are significant product introductions for Google. The Gemini Pro 1.5 release reflects the first set of generative AI models from Google that offer advanced features not found in other leading models. It also carves out a feature category where Google can legitimately claim a lead.

Google was aware that the PaLM LLM, launched publicly shortly after OpenAI’s GPT-4 debut, was well behind the leading models on a number of dimensions. As a result, it provided access for developers to experiment and work on domain-specific variants such as Med-PaLM but didn’t market it heavily to developers.

In fact, at the time PaLM was officially launched for preview at Google I/O 2023, Sundar Pichai mentioned that Gemini was coming and would be superior. It was almost as if Google wanted to say, “PaLM is here, but don’t bother. Something better is coming soon.”

Gemini Pro 1.0 may not be quite as strong as Google represents it, given the results of the Carnegie Mellon evaluation. However, it appears to be comparable to GPT-3.5, while Gemini Ultra 1.0 is likely approaching GPT-4 performance on public benchmarks and is likely stronger in multimodal domains. Google says Gemini 1.5 Pro is a smaller model than the 1.0 Ultra version but shows comparable performance. If true, this is a significant development and suggests Google is now positioned to compete head-to-head in the frontier proprietary model segment.

The Gemma models represent a differentiated go-to-market approach from Google’s peers. Other leading foundation model providers are largely focused on developing either proprietary models or open-source models, not both. Google is continuing to compete with OpenAI in the proprietary frontier LLM segment while taking on Meta in the open-source arena of small-to-medium-sized models.

Google may be hedging its bets. Android’s open-source strategy helped the company become the dominant player in mobile operating systems. That approach helped drive the use of Google’s paid products and, in some cases, diverted users to the core search franchise. Google may not want to cede that market segment for generative AI to Meta, Mistral, and the other open-source competitors without a fight. It also has a lot of advantages to bring to bear, including a very large developer community.

The Google engineer who penned the memo suggesting that “Google has no moat” because of rising open-source model capabilities may have had an impact. At the very least, the Gemma models provide Google with optionality to compete in the open-source segment. That is an area where OpenAI, Anthropic, and Amazon are unlikely to compete, and Microsoft has not expressed interest in this segment to date. It is not easy to fight a two-front war, but Google has the resources and ambition to target total market victory.

I have been skeptical that Google will successfully execute in the proprietary segment because the company has become bureaucratic and suffered from a diminished culture of innovation. In addition, it has continually lost top AI talent to startups and other technology players. Despite those considerations, it appears Gemini Pro 1.5 and Gemini 1.0 Ultra are competitive with the leading frontier LLMs today.

Adopting a two-pronged strategy will make Google’s path to success harder. However, if it is successful, the win will be bigger. The approach also offers Google a chance to concentrate its resources in one of the segments if progress is not materializing in the other.

Synthedia’s forecast is that Google will continue to invest heavily in the frontier model segment and likely neglect the open-source segment as it will be viewed as less valuable and a diversion of resources. What do you think?

NVIDIA is Officially the Giant of Generative AI

Eleven months ago, Synthedia ran the article headline: NVIDIA is Becoming the Giant of Generative AI. This week, it announced $61 billion dollars in revenue for fiscal year 2024. That is more than double 2023, which was also a record year but ended with a quarter where data center revenue was down slightly from earlier quarters. This was before the Chat…

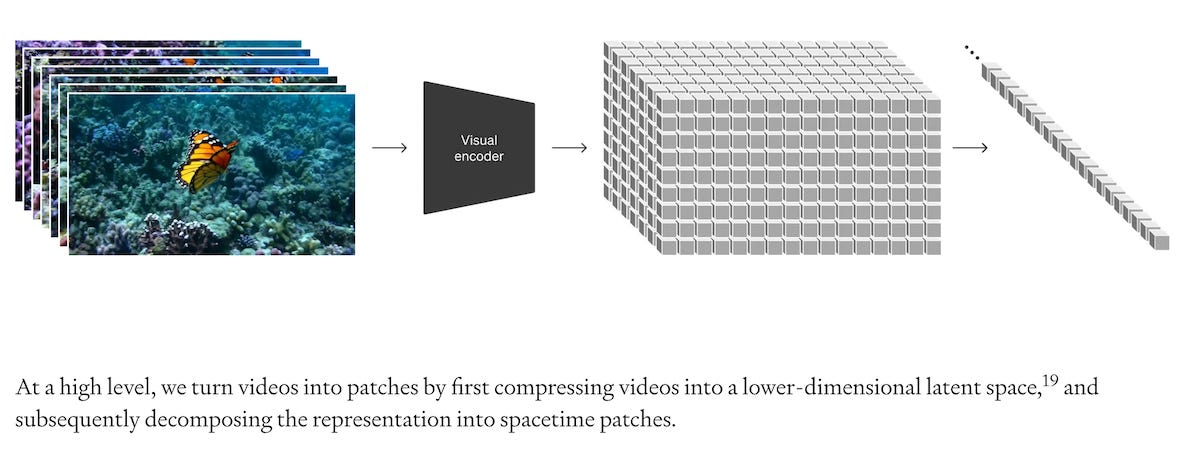

OpenAI's Sora Text-to-Video Demonstrations are Insanely Good

Earlier today, OpenAI debuted Sora, the company’s first text-to-video AI model. It is not yet available to the general public, but the announcement said it is going through testing. While the model supports a variety of styles, it clearly excels in photo-realism, as seen in the video embedded above.