NVIDIA is Officially the Giant of Generative AI

While AI model developers and cloud hyperscalers compete for customers, NVIDIA is just cashing checks

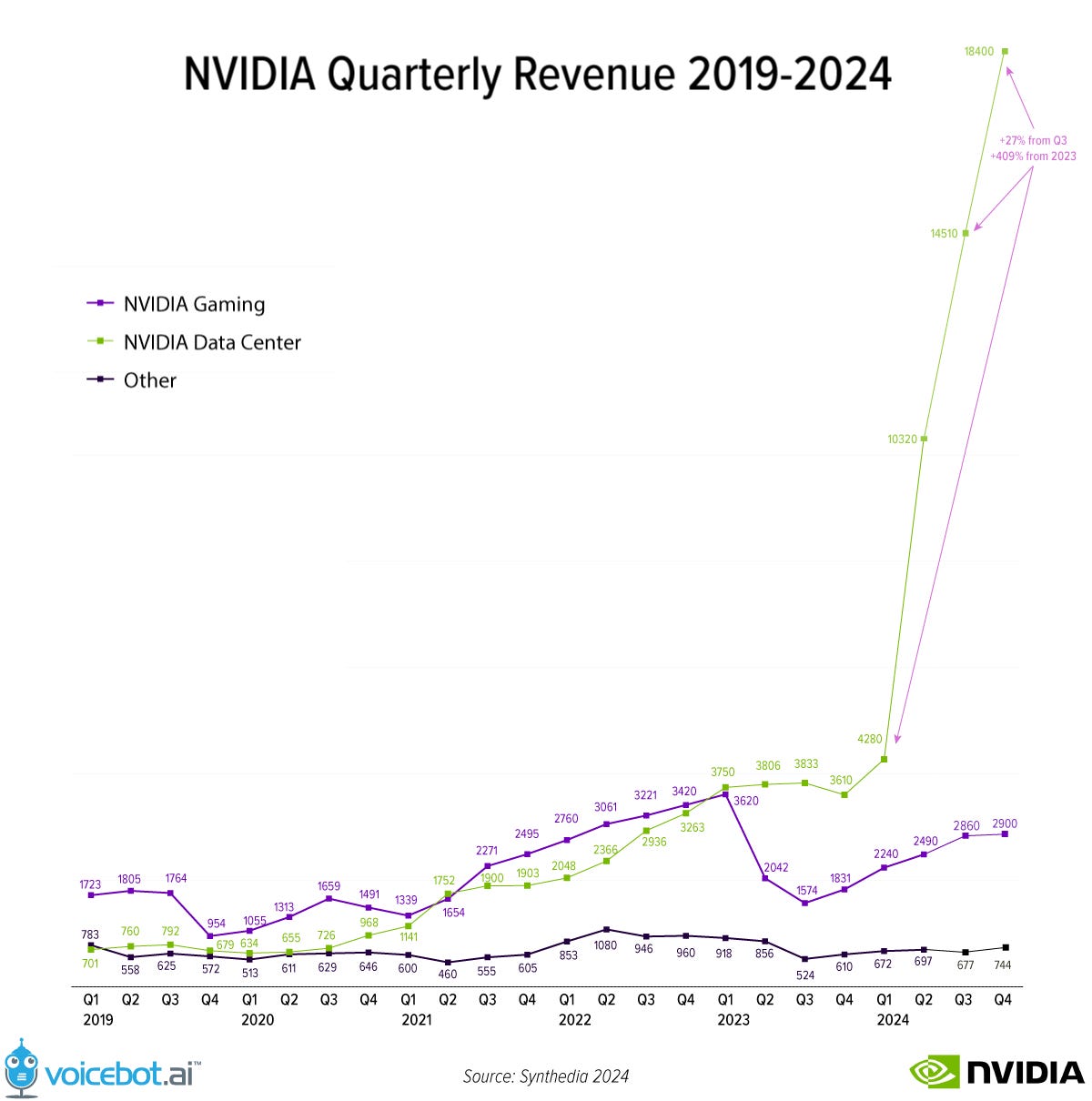

Eleven months ago, Synthedia ran an article headlined “NVIDIA is Becoming the Giant of Generative AI.” This week, NVIDIA announced $61 billion in revenue for fiscal year 2024. That is more than double FY2023, which was also a record year but ended with a quarter in which data center revenue dipped slightly from earlier quarters. That dip came before the ChatGPT wave accelerated everything associated with generative AI.

NVIDIA’s fiscal year ends in January, so FY2023 covers the 12 months ending in late January 2023. FY2023 revenue of $27 billion was essentially flat compared with FY2022. Revenue in fiscal Q1 2024 rose significantly from the previous quarter, but the real inflection came in the quarters that followed.

Data center revenue is largely driven by NVIDIA GPU sales for AI foundation model training and inference. In Q2 2024, that revenue climbed to $10.3 billion, followed by $14.5 billion in Q3. It rose again in Q4 to $18.4 billion. In the final two quarters of FY2024, NVIDIA generated more revenue from data center sales alone than its entire FY2023 revenue. The earnings release quoted NVIDIA CEO Jensen Huang as saying:

“Our Data Center platform is powered by increasingly diverse drivers — demand for data processing, training and inference from large cloud-service providers and GPU-specialized ones, as well as from enterprise software and consumer internet companies. Vertical industries — led by auto, financial services and healthcare — are now at a multibillion-dollar level.”

Inference Rising

NVIDIA GPUs were primarily used to train large generative AI foundation models in the first half of last year. Technology companies were investing upwards of $100 million with cloud providers to train a single large language model (LLM). This reflected a “build it, and they will come” approach. It appears the users of these models have arrived.

Colette Kress, NVIDIA’s executive vice president and chief financial officer, told analysts during the fiscal year-end earnings call that the company estimates inference accounted for 40% of data center workloads running on NVIDIA GPUs. That is a dramatic shift from early 2023, when most GPU capacity was dedicated to model training. Beyond NVIDIA’s record quarter, this data point suggests that user applications will soon consume most GPU workloads.

Investment in training foundation models reflects suppliers’ optimism about generative AI. Inference reflects actual generative AI adoption. And it looks like more optimism and adoption are coming. Huang told analysts, “Fundamentally, the conditions are excellent for continued growth.”

The Giant of Generative AI

OpenAI still leads in foundation model technology and market share. On the strength of its exclusive OpenAI arrangement, Microsoft Azure maintains a lead in generative AI cloud computing. However, both companies face fierce competition every day. While AI model developers and cloud hyperscalers compete for customers, NVIDIA is just cashing checks.

The reasons are twofold: scalability and economics. NVIDIA’s H100 chips can run the largest generative AI training and inference workloads faster than competitors and do it at a lower cost. There are some promising chip alternatives in development, but there is nothing comparable to NVIDIA GPUs today for the largest generative AI workloads.

Mark Zuckerberg announced that Meta would purchase 350,000 NVIDIA H100 GPUs in 2024 to train its models and run its own generative AI inference for Facebook, Instagram, WhatsApp, and the company’s other internet properties. At current H100 prices, that is roughly a $10 billion purchase. The cost could be higher if the company switches the order to the forthcoming GH200 Grace Hopper Superchips. Amazon, Microsoft, and Google will likely spend even more in 2024 to keep up with demand.

In addition, there is a new class of customers: sovereign AI. This refers to governments building their own data centers with NVIDIA GPUs to ensure their countries have access to generative AI computing resources.

This dominance is also starting to spill over into NVIDIA’s software products. The professional visualization segment, which includes Omniverse, reported $463 million in fourth-quarter revenue. NVIDIA also offers its own foundation models and supports open-source model development, testing, and tuning through the NeMo framework.

The ChatGPT moment, which sent a shockwave through the industry at the end of 2022 and spilled into 2023, ensured that OpenAI would become the key catalyst for generative AI adoption. It was also the biggest gift anyone could have offered NVIDIA investors. The stock climbed fivefold over the past year, and the company is approaching a $2 trillion valuation, making it the third most valuable company in the world. That is a giant by any definition.