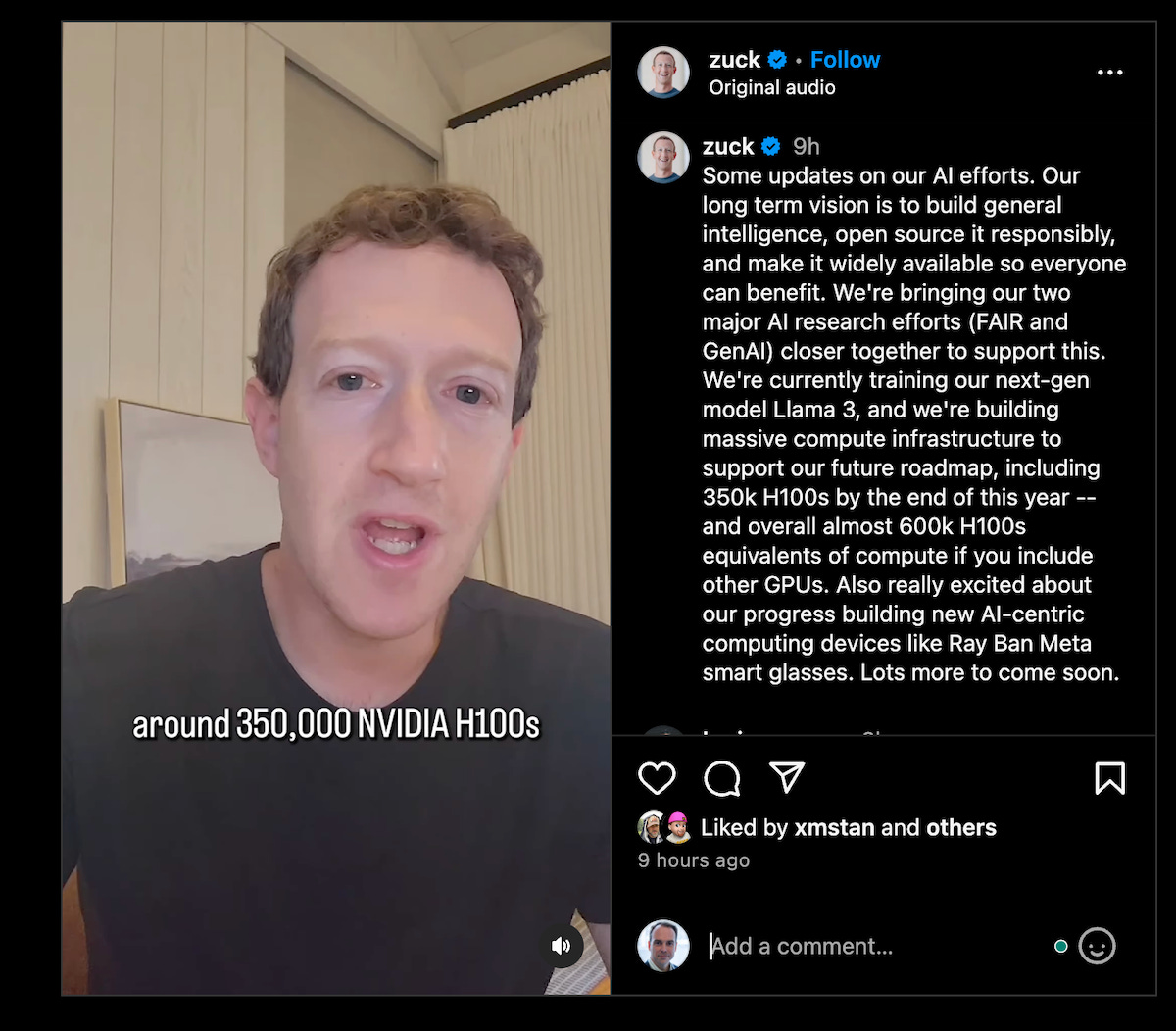

Meta to Buy 350k NVIDIA GPUs to Train AI Models Like Llama 3 for AGI

More models are coming and they will be integrated with smart glasses

Mark Zuckerberg took to Instagram today to reveal Meta’s plans to accelerate the development of (artificial) general intelligence (AGI). While he used the term “general intelligence” and not artificial general intelligence, he seems focused on what would traditionally be called AGI.

He also indicated that Meta’s next-generation large language model (LLM), Llama 3, is in training and that the company intends to release more models. That could mean additional LLMs, but it most likely also refers to multimodal image and video models as well as speech recognition and translation models. Meta already has models in each of these categories, but they are either unreleased or available only for non-commercial use.

The biggest near-term news is that Meta will acquire about 350,000 NVIDIA H100 GPUs by the end of this year. Zuckerberg says that once other GPUs are included, this will bring its total capacity to about 600,000 H100 equivalents.

We are building an absolutely massive amount of infrastructure to support this. By the end of this year, we are going to have around 350,000 NVIDIA H100s or around 600,000 H100 equivalents of compute if you include other GPUs. We are currently training Llama 3 and we’ve got an exciting roadmap of future models.

$10B in NVIDIA GPUs

NVIDIA H100 GPUs currently sell for $30,000 to $35,000 each. At those prices, 350,000 units represent a commitment of more than $10 billion, and that covers only part of the roughly 600,000 H100 equivalents of compute Zuckerberg described.
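A quick back-of-the-envelope calculation makes the scale concrete. This is a minimal sketch that assumes every unit is bought at the reported $30,000 to $35,000 price range (Meta has not disclosed what it actually pays). It also treats the 600,000 H100-equivalent figure as including the 350,000 H100s, as Zuckerberg's wording implies. The `fleet_cost` helper is a hypothetical name used only for illustration.

```python
# Back-of-the-envelope estimate of Meta's H100 spend.
# Assumption: every GPU is bought at the reported list price range;
# actual contract prices are not public.
H100_UNITS = 350_000
PRICE_LOW_USD = 30_000
PRICE_HIGH_USD = 35_000

# Assumption: the ~600,000 H100-equivalent total includes the H100s themselves.
TOTAL_H100_EQUIVALENTS = 600_000


def fleet_cost(units: int, unit_price: int) -> int:
    """Hypothetical helper: total cost in US dollars for `units` GPUs at `unit_price` each."""
    return units * unit_price


low = fleet_cost(H100_UNITS, PRICE_LOW_USD)
high = fleet_cost(H100_UNITS, PRICE_HIGH_USD)

# Compute contributed by non-H100 GPUs, expressed in H100 equivalents.
other_gpu_equivalents = TOTAL_H100_EQUIVALENTS - H100_UNITS

print(f"H100 spend: ${low / 1e9:.2f}B to ${high / 1e9:.2f}B")
print(f"H100 equivalents from other GPUs: {other_gpu_equivalents:,}")
```

Run as written, this prints a range of $10.50B to $12.25B and 250,000 H100 equivalents from other GPUs. That is why $10 billion is best read as a floor rather than a precise figure.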

Don’t assume Meta received a big discount for its bulk order. H100 GPUs have been on backorder since their launch, and NVIDIA has considerable pricing leverage because it cannot fulfill all of its orders.

Also, for context, Thurrott reported in November that Azure had acquired about 150,000 NVIDIA H100 chips in 2023, which was more than the number received by AWS and Google Cloud. It may be that AWS, Google Cloud, and Azure all have greater capacity than Meta at the end of 2024. However, the capacity that Zuckerberg is referencing is in the same league as a cloud hyperscaler, and it is presumably only for use by Meta researchers and the company’s products.

AGI is the Goal—Assistants Everywhere

The purpose of this massive computing capacity is tied to the AGI goal. General intelligence, superintelligence, and AGI are not well-defined terms, but in most cases they refer to either humanlike or superhuman intelligence. Zuckerberg commented:

It’s become clearer that the next generation of services requires building full general intelligence. Building the best AI assistants, AIs for creators, AIs for businesses, and more. That needs advances in every area of AI, from reasoning to planning to coding to memory and other cognitive abilities.

It isn’t all about assistants, but it may be largely about assistants. Whether you call them copilots, assistants, or generative AI-enabled features, everyone is focused on the smart assistant that helps users complete tasks the way an expert human might. The excitement stems from ChatGPT’s significant capabilities, combined with the promise of higher accuracy, fewer hallucinations, and broader capabilities.

Smart Glasses and the Future

Zuckerberg also tied this all together with Meta’s corporate positioning by linking the investment in generative AI to the metaverse. Whether this is a meaningful point may depend on your definition of the term metaverse. Still, his case for smart glasses comes back to assistants.

People are also going to need new devices for AI and this brings together AI and metaverse. Because over time, I think a lot of us are going to talk to AIs frequently throughout the day. And I think a lot of us are going to do this using glasses because glasses are the ideal form factor for letting an AI see what you see and hear what you hear, so it’s always available to help out.

This is a practical viewpoint. If smart glasses can simply observe your surroundings and, with the help of vision and sound recognition, provide proactive or context-based assistance, that will increase the benefits to users. In fact, it was a central selling point of Google Glass way back in 2013.

Smart glasses could also deliver a high-value set of features. However, the track record of smart glasses products to date has ranged from bad to mediocre, with Snap Spectacles an exception, albeit with a very limited feature set. Maybe Meta will be the first company to crack the code of functionality, price point, and social acceptability. The idea surely has promise, and Meta could reap significant rewards if it became even more integrated into users’ daily lives.

The Race for AGI or Just Required Capacity?

An obvious question is what all of that GPU capacity is for. Is this Meta’s estimate of what it will take to deliver AGI? The company’s chief AI scientist, Yann LeCun, has been circumspect about the thesis that AGI can be reached through brute-force computing.

Alternatively, we could view this as Meta, already one of the largest consumers of computing capacity in the world, simply needing more to run Facebook, Instagram, WhatsApp, and its other properties. Given Meta’s massive scale, generative AI will put new strain on that infrastructure, so the company may need more computing capacity than almost anyone else.

Regardless, the buying spree is a bold move by Meta and suggests 2024 will be another big year for NVIDIA and maybe generative AI more broadly…but definitely for NVIDIA. 😀

Deceptive LLMs, Model Poisoning, and Other Little Known Generative AI Security Risks

Many people think prompt injections represent the primary threat to generative AI foundation model security. Well-crafted prompts can indeed be used for inappropriate data exfiltration and other unwanted model behavior. However, there are more pernicious threats that few people have considered.