Generative AI Video Competition Heats Up: ByteDance and TikTok Will Be Key Players

Will text-to-video be the story of 2024? Could ByteDance be?

TikTok parent ByteDance published a new research paper and GitHub post regarding its latest text-to-video generative AI model. MagicVideo-V2 compares favorably to leading offerings from Runway, Pika, and Stable Video Diffusion from Stability AI. You can see example output from MagicVideo-V2 in the video capture along with comparisons to other leading text-to-video solutions.

The examples suggest ByteDance’s MagicVideo-V2 is competitive with the leading text-to-video solutions and may have a lead in some styles. This is not particularly surprising given the company is investing heavily in generative AI research, is focused on the video market, and has access to a lot of video training data.

However, that is only part of the story. ByteDance is laying the foundation to become a global leader in generative AI foundation models. TikTok is only one avenue of distribution for the company to pursue.

Generative Video AND Images

According to the research paper, the technology goes well beyond text-to-video. It provides end-to-end video generation with text-to-image as one of its components. That suggests TikTok's text-to-image features are also likely to be upgraded soon.

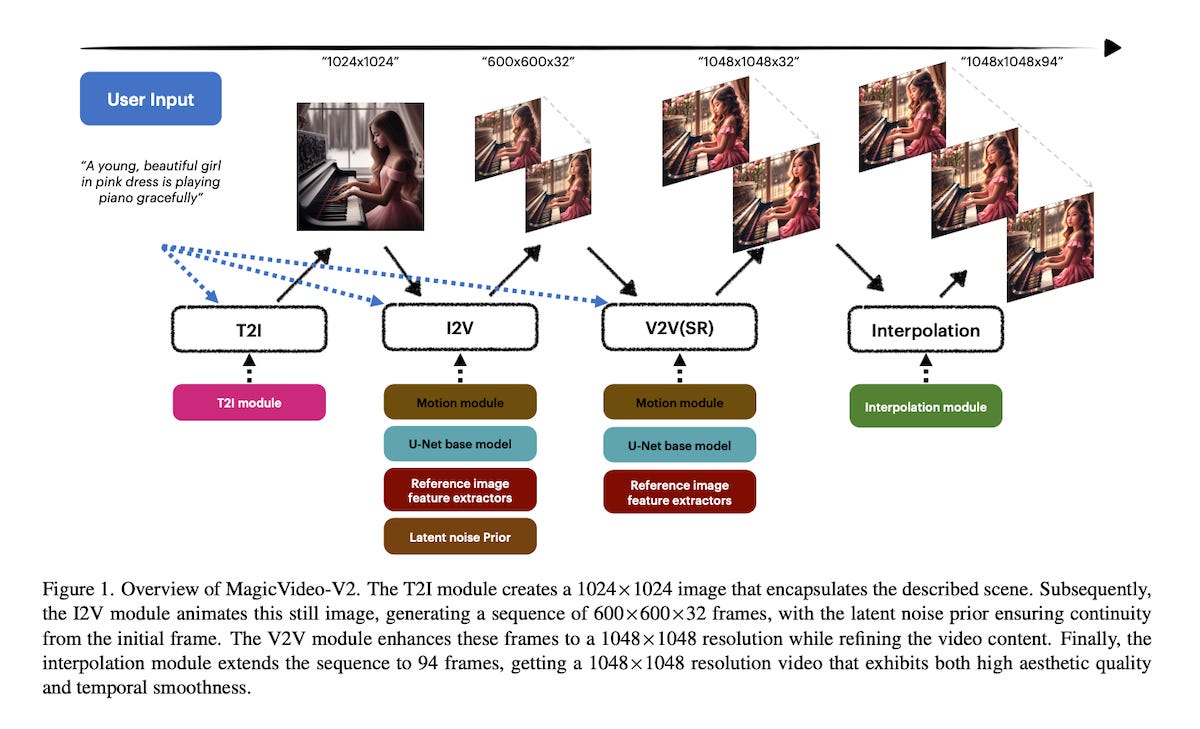

The proposed MagicVideo-V2 is a multi-stage, end-to-end video generation pipeline capable of generating high-aesthetic videos from a textual description. It consists of the following key modules:

Text-to-Image model that generates an aesthetic image with high fidelity from the given text prompt.

Image-to-Video model that uses the text prompt and generated image as conditions to generate keyframes.

Video-to-Video model that refines the keyframes and performs super-resolution on them to yield a high-resolution video.

Video Frame Interpolation model that interpolates frames between keyframes to smooth the video motion and finally generates a high-resolution, smooth, highly aesthetic video.

The T2I (text-to-image) module takes a text prompt from users as input and generates a 1024 × 1024 image as the reference image for video generation. The reference image helps describe the video contents and the aesthetic style. The proposed MagicVideo-V2 is compatible with different T2I models. Specifically, we use an internally developed diffusion-based T2I model in MagicVideo-V2 that can output highly aesthetic images.
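To make the data flow between the four modules concrete, here is a minimal Python sketch. It is not ByteDance's code: every function is a hypothetical stub that only tracks frame resolution and a provenance label. Only the 1024 × 1024 reference image comes from the paper; the keyframe count, the half-resolution keyframes, the 2× super-resolution factor, and the three interpolated frames per gap are assumptions chosen purely for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Frame:
    width: int
    height: int
    label: str  # human-readable provenance, for illustration only


def text_to_image(prompt: str) -> Frame:
    # Stage 1 (T2I): per the paper, outputs a 1024 x 1024 reference image.
    return Frame(1024, 1024, f"reference for '{prompt}'")


def image_to_video(prompt: str, reference: Frame, num_keyframes: int = 8) -> List[Frame]:
    # Stage 2 (I2V): prompt + reference image condition keyframe generation.
    # ASSUMPTION: 8 keyframes at half the reference resolution; this stub
    # does not actually use the prompt.
    return [
        Frame(reference.width // 2, reference.height // 2, f"keyframe {i}")
        for i in range(num_keyframes)
    ]


def video_to_video(keyframes: List[Frame], scale: int = 2) -> List[Frame]:
    # Stage 3 (V2V): refine and super-resolve each keyframe.
    # ASSUMPTION: a 2x upscale factor.
    return [Frame(f.width * scale, f.height * scale, f.label + " (refined)") for f in keyframes]


def interpolate(keyframes: List[Frame], inserts_per_gap: int = 3) -> List[Frame]:
    # Stage 4 (VFI): insert synthetic frames between consecutive keyframes.
    # ASSUMPTION: 3 interpolated frames per gap.
    if not keyframes:
        return []
    out: List[Frame] = []
    for a, b in zip(keyframes, keyframes[1:]):
        out.append(a)
        for k in range(1, inserts_per_gap + 1):
            out.append(Frame(a.width, a.height, f"interp {k}: {a.label} -> {b.label}"))
    out.append(keyframes[-1])
    return out


def magicvideo_like_pipeline(prompt: str) -> List[Frame]:
    # Hypothetical orchestration of the four stages described above.
    reference = text_to_image(prompt)
    keyframes = image_to_video(prompt, reference)
    refined = video_to_video(keyframes)
    return interpolate(refined)
```

Under these assumptions, `magicvideo_like_pipeline("a corgi surfing at sunset")` returns 8 refined keyframes plus 3 interpolated frames in each of the 7 gaps, 29 frames in total, each 1024 × 1024. The staged design lets each model specialize: the image model fixes content and style once, and later stages only have to add motion, detail, and smoothness.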

The Quality Verdict

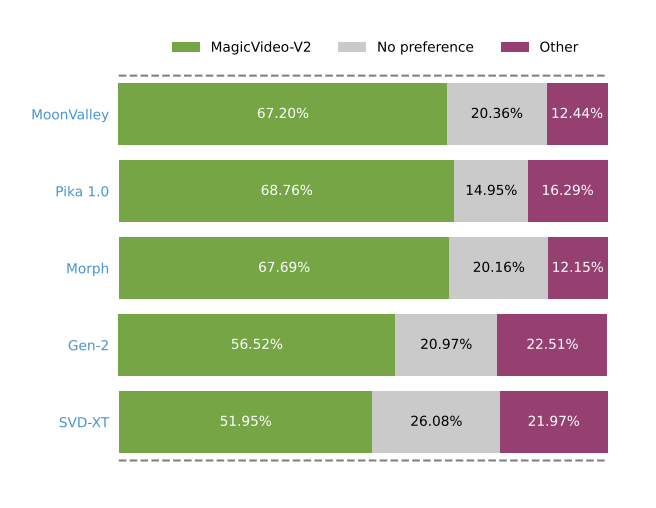

ByteDance researchers enlisted a group of human evaluators to rate MagicVideo-V2 compared to other leading text-to-video solutions.

A panel of 61 evaluators rated 500 side-by-side comparisons between MagicVideo-V2 and an alternative T2V method. In each round of comparison, each voter is presented with a random pair of videos generated from the same text prompt: one from MagicVideo-V2 and one from a competitor. Voters are given three assessment options (Good, Same, or Bad), indicating a preference for MagicVideo-V2, no preference, or a preference for the competing T2V method, respectively. They are asked to cast their vote based on their overall preference across three criteria:

1) Which video has higher frame quality and overall visual appeal.

2) Which video is more temporally consistent, with better motion range and motion validity.

3) Which video has fewer structural errors or bad cases.

The results demonstrate a clear preference for MagicVideo-V2, evidencing its superior performance from the standpoint of human visual perception.
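The article does not say how the Good/Same/Bad votes were aggregated. One common way to summarize pairwise votes like these is a GSB score, (G - B) / (G + S + B), which runs from -1 (the competitor is always preferred) to +1 (MagicVideo-V2 is always preferred). The sketch below assumes that formula and also reports a win rate with ties excluded; the function name is hypothetical, and the vote list used in the example is invented for illustration, not data from the study.

```python
from collections import Counter
from typing import Dict, Iterable

VALID_VOTES = {"Good", "Same", "Bad"}


def gsb_summary(votes: Iterable[str]) -> Dict[str, float]:
    """Tally Good/Same/Bad votes and compute an assumed GSB score."""
    counts = Counter(votes)
    unknown = set(counts) - VALID_VOTES
    if unknown:
        raise ValueError(f"unexpected vote labels: {sorted(unknown)}")
    g, s, b = counts["Good"], counts["Same"], counts["Bad"]
    total = g + s + b
    if total == 0:
        raise ValueError("no votes to summarize")
    return {
        "good": g,
        "same": s,
        "bad": b,
        # ASSUMPTION: net preference normalized by all votes, in [-1, 1].
        "gsb_score": (g - b) / total,
        # Share of decisive votes won; 0.5 if every vote was a tie.
        "win_rate_excl_ties": g / (g + b) if g + b else 0.5,
    }
```

With ten illustrative votes (six Good, three Same, one Bad), the GSB score is (6 - 1) / 10 = 0.5 and the win rate excluding ties is 6 / 7. Reporting both numbers helps separate how often raters saw no difference from how often one model clearly won.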

There is no information about the background of the human raters, whether the results are comprehensive, or if the styles and outputs were cherry-picked to create comparisons of “known good” videos. However, the data and the shared videos suggest a high-performance model that compares favorably with leading alternatives and may even demonstrate a technology lead.

If the output quality is comparable to or better than other leading solutions, this will offer ByteDance several options. It could:

Develop the technology solely for use within the TikTok app, using it as a differentiator.

Offer an API to third-party applications and compete directly with Stability AI and Runway (and likely entrants OpenAI, Google, and Meta).

Create a competing video/image development application to go up against Pika and Runway. And it could take on Midjourney, which is rumored to be working on a text-to-video solution to complement its industry-leading text-to-image service.

TikTok’s Generative AI Play

TikTok parent ByteDance does not want to depend on generative AI foundation models created by other companies or direct competitors. In addition to Pika, Runway, and Stability AI, text-to-video innovators include Google, Meta, NVIDIA, and OpenAI. Of these seven companies, only one, the UK-based Stability AI, is not a U.S.-based business.

Given the likelihood of U.S. restrictions on providing technology to TikTok and the potential for similar actions out of the UK, it would be foolish for the company to rely on the current crop of foundation model providers offering text-to-video solutions.

In addition, ByteDance is large enough to fund its own generative AI research. That gives it the option to create innovations that TikTok's competitors cannot access, to avoid costs that would otherwise go to foundation model partners, and to open up new generative AI foundation model business lines.

Some recent generative AI activity shows ByteDance’s commitment to the technology is broad-based. TikTok announced Creative Assistant in September 2023 as a copilot for creators.

TikTok Creative Assistant is designed to spark creativity and be a launchpad for curiosity. It works alongside you, integrated in TikTok Creative Center, the platform where TikTok creative ideas are born.

If you're new to TikTok, Creative Assistant can guide you through TikTok creative best practices and advise you on how to get started.

If you're conducting research on the creative landscape on TikTok, Creative Assistant can showcase and analyse inspirational and top ads based on data sources on Creative Center.

When you're facing writer's block, Creative Assistant can brainstorm TikTok ideas with you, write and help refine your TikTok scripts.

In December 2023, the South China Morning Post reported that ByteDance "is working on an open platform that will allow users to create their own chatbots." A ByteDance spokesperson confirmed to Fast Company that the information came from an internal document related to the company's business objectives. According to Fast Company:

Broadly speaking, the platform seems to compete with the ChatGPT tools that OpenAI revealed in November…ByteDance has also kept busy, launching its own rival chatbot, Doubao, this summer. The Morning Post said it learned ByteDance is also at work on an alternative to popular AI image generators like Midjourney, Stable Diffusion, and DALL-E. Having an image generator built natively into an app like TikTok, which has more than 3.5 billion downloads, would certainly give critics a brand-new reason for pause since it could equip an even larger pool of bad actors with a convenient way to flood the platform with deepfakes.

What it Means

It is unclear how ByteDance will use its newly developed generative AI foundation models. However, the latest research suggests ByteDance may become an important competitor in the generative AI market.

TikTok is a formidable distribution network, and simply having generative AI capabilities for the popular social media app justifies the investment. However, Synthedia expects ByteDance to take the capabilities directly to market and become the leading Chinese company serving non-Chinese businesses with generative AI services.

Rabbit Launches R1 Device for GenAI-Enabled Experiences and Adds $10M in New Funding

At CES today, Rabbit launched its first product based on the concept of a large action model (LAM). A LAM is a large language model (LLM) optimized to take action on behalf of users by controlling various software solutions. Jesse Lyu, Rabbit’s founder and CEO, said in the company’s launch video: