Bard Becomes Gemini, Ultra is Here, and Other Ways Google is Mirroring OpenAI's Strategy

Google is now ready to compete with OpenAI & Microsoft. Can it generate interest?

Gemini Ultra, Google’s answer to GPT-4, has arrived. OpenAI quickly integrated GPT-4 into ChatGPT after its launch, and Microsoft followed suit with Bing Chat and Microsoft Copilot for the 365 productivity suite (now both branded as Copilot). Following a similar path, Google also announced that Gemini Ultra will be integrated into Bard, its ChatGPT alternative, and that the Gemini name will replace Duet AI, the brand previously used for the Google Workspace version.

The quality of the Gemini Pro and Ultra models appears to be strong enough that Google is finally ready to take on ChatGPT and its many offshoots. Up until this point, Google seemed only to want to speak about Bard with the media and Duet AI with developers. The solutions became available broadly in the second half of 2023 but received no significant promotion. While ChatGPT didn’t see much promotion, it had the benefit of being a viral hit. Microsoft, by contrast, was happy to talk about Bing Chat and Copilot. So, the newly renamed Gemini chat assistant could become ChatGPT’s biggest competitor.

However, the bigger significance of this week’s announcement may be the claims made about the Gemini Ultra model’s performance. AI foundation model developers have strived for a year to match GPT-3.5, and none have effectively matched GPT-4. While GPT-5 may be coming soon, parity with or superiority to GPT-4 is a notable accomplishment. Since GPT-3.5 and GPT-4 power so many generative AI applications, having a true alternative with comparable or better performance could materially change the market.

Gemini Ultra Performance

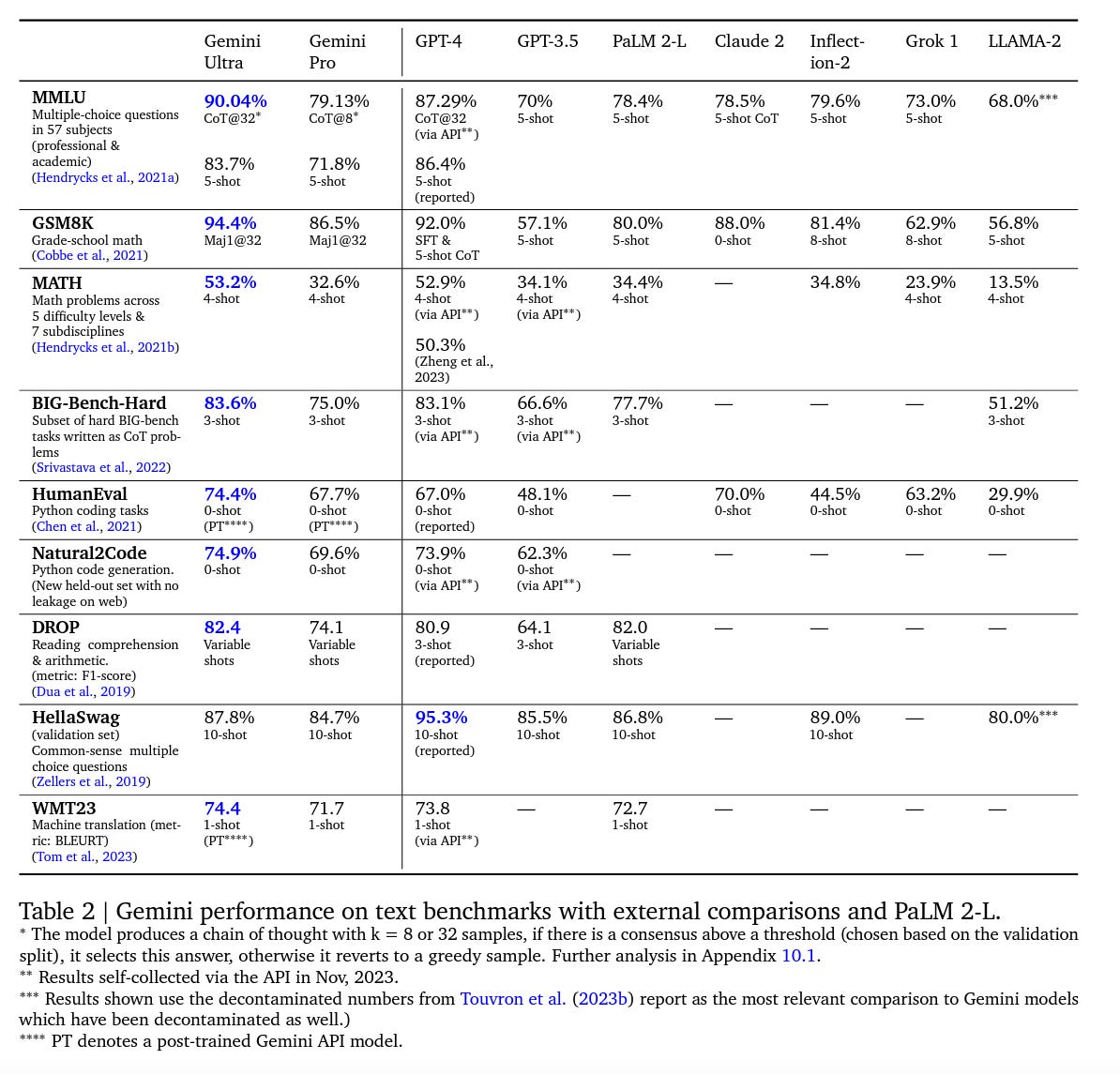

As in the research paper accompanying the Gemini Pro model released in December, Google makes some bold claims about performance comparisons with other available foundation models. Granted, some of those claims were called into question a week later by researchers from Carnegie Mellon University (CMU). Still, it is important to look closely at what the foundation model developers say about their products and the benchmark results they publish. Where the paper compares to “the best model” or “state of the art,” the implied comparison is OpenAI’s GPT-4 model. According to the paper related to Gemini Ultra and the updated Bard:

Our most capable model, Gemini Ultra, achieves new state-of-the-art results in 30 of 32 benchmarks we report on, including 10 of 12 popular text and reasoning benchmarks, 9 of 9 image understanding benchmarks, 6 of 6 video understanding benchmarks, and 5 of 5 speech recognition and speech translation benchmarks. Gemini Ultra is the first model to achieve human-expert performance on MMLU (Hendrycks et al., 2021a)—a prominent benchmark testing knowledge and reasoning via a suite of exams—with a score above 90%. Beyond text, Gemini Ultra makes notable advances on challenging multimodal reasoning tasks. For example, on the recent MMMU benchmark (Yue et al., 2023), that comprises questions about images on multi-discipline tasks requiring college-level subject knowledge and deliberate reasoning, Gemini Ultra achieves a new state-of-the-art score of 62.4%, outperforming the previous best model by more than 5 percentage points. It provides a uniform performance lift for video question answering and audio understanding benchmarks.

Google shows Gemini Ultra outperforming GPT-4 in eight of ten “text benchmarks,” and Gemini Pro besting GPT-3.5 in six of eight. Ultra also surpasses public benchmark data in eight of eight image understanding benchmarks. This is all consistent with the paper released in December 2023.

The URL for the Google Gemini research paper published in December 2023 was updated with a revised research paper for February 2024. I have the original copy of the December release and can confirm that changes were made to the prose in the paper, but not to the originally reported data in the data tables. There is also a new section 6 in the 2024 version of the paper that focuses on the Post-training Models approach.

This new section includes a discussion of tool use by models using Gemini extensions. The use of tools significantly increased the Pro model’s reasoning scores for the GSM8K and MATH benchmarks, and its factuality and knowledge retrieval scores for the NQ and Realtime QA benchmarks. According to Google, models with access to “tools” perform better than those without 78% of the time.

The data continues to show Google’s Gemini Ultra and Pro models are very competitive with or superior to OpenAI’s GPT-4 and GPT-3.5 models, respectively. However, CMU researchers set out to replicate the paper’s benchmarks and compared GPT-3.5 with Gemini Pro, because Pro was the only Gemini model available in December. Those researchers found that Gemini Pro showed inferior results to GPT-3.5 in all 12 benchmarks they evaluated.

These benchmarks spanned text generation and analysis, coding, translation, and agents. The Gemini Pro results were materially lower than those of GPT-3.5, which suggests we should wait for external validation of Gemini Ultra now that it is available for use.

Gemini Advanced

Along with the new model arrived a new Bard that employs the Gemini Ultra model. Now branded Gemini Advanced, the new solution will attempt to compete directly with ChatGPT’s Plus edition that leverages GPT-4. It will carry the same ChatGPT Plus cost of $20 per month, after two free months.

This new product will also offer access to Gemini in Google Docs and Gmail at some future point. This makes it a cross between ChatGPT Plus and Microsoft’s Copilot for 365. According to the announcement:

Gemini Advanced is far more capable at highly complex tasks like coding, logical reasoning, following nuanced instructions and collaborating on creative projects. Gemini Advanced not only allows you to have longer, more detailed conversations; it also better understands the context from your previous prompts.

…

This first version of Gemini Advanced reflects our current advances in AI reasoning and will continue to improve. As we add new and exclusive features, Gemini Advanced users will have access to expanded multimodal capabilities, more interactive coding features, deeper data analysis capabilities and more. Gemini Advanced is available today in more than 150 countries and territories in English, and we'll expand it to more languages over time.

Gemini Advanced is available as part of our brand new Google One AI Premium Plan for $19.99/month, starting with a two-month trial at no cost. This plan gives you the best of Google AI and our latest advancements, along with all the benefits of the existing Google One Premium plan, such as 2TB of storage. In addition, AI Premium subscribers will soon be able to use Gemini in Gmail, Docs, Slides, Sheets and more (formerly known as Duet AI).

The service is also coming to smartphones as a standalone app, through Google Assistant, and embedded in the iOS Google app. In other words, Gemini is becoming the replacement for both Bard and Google Assistant, as Synthedia predicted in 2023 😎. Of course, we also suggested in December that Bard be renamed to Gemini in an effort to assist Google’s brand department. But I digress. The announcement added:

With Gemini on your phone, you can type, talk or add an image for all kinds of help while you’re on the go: You can take a picture of your flat tire and ask for instructions, generate a custom image for your dinner party invitation or ask for help writing a difficult text message. It’s an important first step in building a true AI assistant — one that is conversational, multimodal and helpful.

On Android, Gemini is a new kind of assistant that uses generative AI to collaborate with you and help you get things done.

If you download the Gemini app or opt in through Google Assistant, you'll be able to access it from the app or anywhere else you normally activate Google Assistant — hitting the power button or corner swiping on select phones, or saying “Hey Google.” This will enable a new overlay experience that offers easy access to Gemini as well as contextual help right on your screen — so you can, for instance, generate a caption for a picture you've just taken or ask questions about an article you're reading. Many Google Assistant voice features will be available through the Gemini app — including setting timers, making calls and controlling your smart home devices — and we’re working to support more in the future.

Google is slowly moving toward a single-assistant offering. Bard was a test bed for refining the generative AI capabilities for “knowing” and “pseudo-reasoning.” Combining that with the “doing” capabilities of Google Assistant is a solid go-to-market approach, especially when you consider that OpenAI is still struggling with adding “doing” capabilities to ChatGPT.

The key questions now will be:

Whether the Gemini assistant apps have substantially improved performance and factuality over Bard to begin building cognitive trust with users.

Whether Google leverages its biggest asset by integrating Gemini assistant apps into daily search in a way that improves on the quality of what was rolled out with Search Generative Experience (SGE).

Whether users will switch over from ChatGPT, or whether new users will decide that Google’s option makes this a good time to try a generative AI assistant.

Whether users will pay $20 monthly to access Google’s most advanced models.

Whether Google will have a Super Bowl ad promoting Gemini!

Gemini Ultra Leadership

A notable element of Google’s Gemini paper, released this past week with the introduction of Ultra, is that the paper’s header lists Google DeepMind. The contributors and acknowledgments section summarizes the contributions as coming from Gemini Team and Google. There are 35 leads listed for Gemini and 46 for the Bard team. That is followed by a list of over 1,200 “core contributors” and “contributors.” After that, the top leadership is acknowledged.

The Gemini program leads are listed as Demis Hassabis, a co-founder of DeepMind, and Koray Kavukcuoglu, VP of research at DeepMind. The head of “Gemini Text” is Yonghui Wu, also of DeepMind. There is very strong AI engineering talent across Google. However, it is interesting how many DeepMind engineers were pushed into the forefront for Google’s AI “Manhattan Project” when they were barely involved a year ago. The paper’s acknowledgments reaffirm that Google followed through on its strategy to meld the Google DeepMind, Brain, and AI teams in an effort to catch up. Google will also likely gain some market credibility from the higher profile of the DeepMind team.

Google spent 2023 catching up with OpenAI. It is likely that the job is still incomplete. However, narrowing the performance gap was a necessary step toward narrowing the user adoption gap. Google didn’t promote the PaLM model or Bard very much. The level of promotion put behind its Gemini apps and models will be a key indicator of the level of optimism behind Google’s flagship generative AI products. Then the market will decide.

CMU Study Shows Gemini Pro Trails GPT-3.5 and GPT-4 in Performance Benchmarks

A new paper published by AI researchers at Carnegie Mellon University (CMU) shows that the benchmark performance of Google’s Gemini large language model (LLM) is outpaced by GPT-3.5 and GPT-4. Several of the findings run counter to data presented by Google at the time of Gemini’s public launch. The study’s authors report: