Breaking Down Deepfake Detection, Fraud, and Consumer Sentiment

Example videos and audio, market data, and how the technology works

“When you're dealing with a real-time bank transaction, it has to be instantaneous…I can go into detail of what I mean by spatial and temporal artifacts. Those are the things that allow us to detect that this is a deep fake as opposed to a real human…

“So, every single second, you can actually check 8,000 times to see if the vocal tract configuration is what a human would produce. And so if you have 3 seconds, you have 24,000 samples. If you have five seconds, you have 40,000 samples. And that's on a low-fidelity channel. In the channel that we're speaking of, usually, the frequency is about 16,000…

“That also helps with lip sync because the lip sync has to be right every single second, and every single second is the lip moving in 8,000 micro lip-synchs? You can only see a few things, but the algorithms get to see all of those things and be able to determine if this is a machine or is this human.” Vijay Balasubramaniyan, CEO of Pindrop
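The arithmetic in the quote above can be checked with a few lines of Python. This is only a sketch: it assumes "8,000 times" per second refers to an 8 kHz audio sampling rate and that "about 16,000" refers to a 16 kHz rate, and the function name `checks_available` is made up for illustration.

```python
def checks_available(duration_s: float, sample_rate_hz: int = 8_000) -> int:
    """Return how many audio samples a clip of the given length contains.

    Assumption: each sample is one opportunity to test whether the signal
    is consistent with human speech, as described in the quote.
    """
    return round(duration_s * sample_rate_hz)


if __name__ == "__main__":
    for seconds in (3, 5):
        low = checks_available(seconds)           # assumed 8 kHz channel
        high = checks_available(seconds, 16_000)  # assumed 16 kHz channel
        print(f"{seconds} s: {low} samples at 8 kHz, {high} samples at 16 kHz")
```

Under those assumptions, three seconds of audio gives 24,000 samples and five seconds gives 40,000, matching the figures in the quote, and the 16 kHz channel doubles both.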

Deepfakes and voice clones are becoming more common. The technology has improved, and the barriers to access have fallen. Many AI software providers now offer no-code tools that are free or relatively inexpensive, so everyday consumers have access to these technologies. How these tools are used is up to the user. Faceswap, Deep Fake Arts, Wav2Lip, and Deepswap were not initially intended for nefarious use, but criminals often combine legitimate and dark-web software to achieve their aims.

So, we have deepfakes for good and for bad everywhere. Vijay Balasubramaniyan, co-founder and CEO of Pindrop, joined me to talk about how criminals use the technology, what enterprises are doing to proactively detect its inappropriate use, and some recent data about consumer experience with and sentiment about voice clones and deepfakes.

How Deepfakes and Voice Clones Are Detected

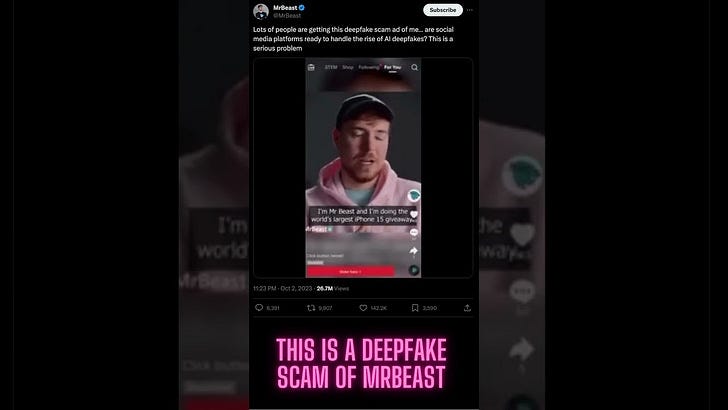

You have probably read some articles about the risk deepfakes pose or seen examples of fraudulent versions of actor Tom Hanks pitching a dental plan and YouTube creator MrBeast seemingly offering free iPhones on YouTube. Vijay offers a rare insight into how this technology is used for fraud and what techniques differentiate the real from the fake.

One of the more interesting stories is that of Giraffe Man. It illustrates how vocal markers can be used to evaluate the physical properties of voices. According to Balasubramaniyan:

“Every conference room in Pindrop is named after a fraudster. So we have Chicken Man and Pepe, but one of those conference rooms is Giraffe Man because when we analyzed this person's audio, we quickly realized that the only person who could have produced this piece of audio is someone with a seven-foot long neck.”

The idea of liveness detection has two elements. The first is an analysis of whether a human could reasonably have made the vocal sounds. Giraffe Man’s biomarker characteristics were inconsistent with the natural length of the human vocal tract. The second is the infinite variability of human speech: someone may say the same words many times in the same voice, but the delivery will never be quite the same. Machines are likely to replicate certain aspects of speech exactly within a single conversation, giving away the voice’s synthetic origin.
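To make the two elements concrete, here is a minimal Python sketch. It is not Pindrop's method. It assumes a textbook approximation from acoustic phonetics, in which the vocal tract is treated as a uniform tube closed at one end so that the n-th resonance is F_n = (2n - 1) * c / (4 * L), and it uses a toy distance measure for repeated phrases. The function names, the plausible-length bounds, and the `min_distance` threshold are all illustrative assumptions.

```python
from statistics import mean

SPEED_OF_SOUND_CM_S = 35_000         # approximate speed of sound in warm, humid air
HUMAN_TRACT_RANGE_CM = (10.0, 25.0)  # generous illustrative bounds, not a standard


def estimate_tract_length_cm(formants_hz):
    """Estimate tube length L from formants via F_n = (2n - 1) * c / (4 * L)."""
    estimates = [
        (2 * n - 1) * SPEED_OF_SOUND_CM_S / (4 * f)
        for n, f in enumerate(formants_hz, start=1)
    ]
    return mean(estimates)


def is_physically_plausible(formants_hz):
    """Element 1: could a human-sized vocal tract have produced these resonances?"""
    low, high = HUMAN_TRACT_RANGE_CM
    return low <= estimate_tract_length_cm(formants_hz) <= high


def repetition_distance(features_a, features_b):
    """Mean absolute difference between two equal-length feature sequences."""
    return mean(abs(a - b) for a, b in zip(features_a, features_b))


def looks_too_repeatable(features_a, features_b, min_distance=0.01):
    """Element 2: two renditions of the same words that match almost exactly."""
    return repetition_distance(features_a, features_b) < min_distance


if __name__ == "__main__":
    # Neutral-vowel formants for a roughly 17.5 cm tract.
    print(is_physically_plausible([500, 1500, 2500]))
    # Resonances implying a tract more than two metres long.
    print(is_physically_plausible([41, 123, 205]))
    # Identical features across two repetitions of a phrase.
    print(looks_too_repeatable([0.2, 0.5, 0.9], [0.2, 0.5, 0.9]))
```

A production system would work from real acoustic features and far richer models. The point is only that both checks reduce to comparing measured properties of the audio against what human anatomy and natural variation allow.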

Beyond this, Balasubramaniyan also discusses over 200 voice biomarkers and 1,300 other signals that can be used to rate the likelihood that any given call is legitimate or fraudulent. Every technology is employed for both positive and negative ends. Generative AI is no exception. Unsurprisingly, what AI creates, it can also detect.

Deepfake Protection Trends

There are now tools to help protect against fraudulent use of deepfakes and voice clones, and consumers are concerned about the risk. In several recent conversations with executives at large companies, I have heard interest in the topic and relatively high levels of concern. However, there is little indication of action.

I suspect this will become a significant trend in 2024 and 2025 as deepfake detection becomes a recognized standard of care every company is expected to implement. A top priority will be voice verification. Is it a human voice, and is it the right person? It is important to protect consumers from harm and companies from the cost of fraud and the impact of reputational damage. This will lead to an increase in the use of voice authentication technology.

Visual verification will affect fewer organizations because the opportunities for misuse are less common. However, it will be a primary concern for social media and news media companies. Many media companies will not act until they make the inevitable mistake of publishing deepfake-enabled misinformation in a story that is too high profile to escape public notice. They will then implement deepfake detection technology as part of a PR crisis communications plan and hope to reduce the risk of future incidents.

You can learn more in Synthedia’s 79-page report on consumer sentiment regarding deepfakes and voice clones by downloading it for free here.

MrBeast and Tom Hanks Followers the Latest Victims of Deepfake Scams

Top YouTube creator MrBeast (aka Jimmy Donaldson) has called out social media giants for not actively policing their platforms for scams that employ deepfake technology. The most recent issue arose when an ad on TikTok appeared to show MrBeast offering to give away new iPhones for just $2. The ad transcript says: