ChatGPT 4o is the Voice Assistant We Always Wanted, with Two Exceptions

Is ChatGPT about to hit escape velocity?

OpenAI announced ChatGPT 4o as the latest update to the company’s popular generative AI-enabled assistant. It is an extraordinary update to the chat solution that shocked users with its unmatched erudition in November 2022 and led to unprecedented adoption of AI technology. The 4o (i.e., “four-oh”) update is sure to shock people once again.

It is a real-time voice assistant with barge-in (interruption), reasoning capabilities, and extremely high-quality and expressive synthetic speech. It also has vision capabilities that enable the assistant to view the world through a smartphone camera and allow the user to ask questions about what is in their physical environment.

2023 may have been mostly about the model wars. OpenAI’s ChatGPT upgrade may kick off a new round of assistant wars among generative AI technology providers. Then again, it may just turn out to be an amazing demo that doesn’t entice users beyond the novelty of the initial trial. This is likely to be a true test of whether consumers really want an intelligent assistant.

If consumers do want an intelligent assistant, ChatGPT 4o appears to have nearly every quality they have always wanted. There are some meaningful shortcomings, but overall, users are likely to be astounded at what the new ChatGPT version can do.

New Capabilities For Everyone

The new capabilities were displayed through several livestream demonstrations and pre-recorded examples. Some of the use cases demonstrated:

Personalization through memory

Real-time language translation

Listening to and participating in a team meeting and summarizing it at the end

Explaining how to solve a linear equation

Viewing a Pythagorean math problem with visuals and helping a student learn and ask questions in real-time

Reading a chart and describing it

Creating a chart from data in a CSV file

The ability to carry on a conversation with a customer support representative

Web browsing

Identifying the calories in a food item from a photo

Identifying the emotional state of the user

Several high-quality voices that are expressive

The ability to change the talking speed of a response

A desktop app for Mac users is also supposed to be released today, but it has not shown up yet in the App Store. Notably, rumors are circulating that ChatGPT functionality is expected to be added to the iPhone later this year to enhance Siri.

I recommend you check out several of the use case video recordings here. The examples involving voice and vision are definitely worth a view. Two of the three most impressive video demonstrations are included below. The third is called “Customer Service Proof of Concept” and can be found on the overview page in the video carousel.

The same page also includes several examples of capabilities. The rotating gif of the OpenAI model is an output from a set of ChatGPT 4o prompts. The robot writer’s block and the font creation are also impressive feats for AI models that struggled with these tasks up through this past weekend.

What’s Missing

The ChatGPT 4o upgrade appears to be very impressive. However, it has two shortcomings today. The first is the ability to execute tasks in other applications. Plugins were supposed to fulfill this need until OpenAI realized the approach was not working and deprecated the feature. Siri, Google Assistant, and Alexa are “doing” assistants. They don’t know much, but they can control many applications. ChatGPT knows a lot and will soon learn a lot more, but it doesn’t execute tasks outside of its “knowing” focus.

The other issue is that ChatGPT 4o, as demonstrated, was way too verbose. The voice assistant providers faced this same challenge many years ago and began paring away at verbosity. Amazon and Google even introduced different versions of a brevity preference. ChatGPT’s new barge-in feature is essential because the assistant likes to ramble on. That makes for an interesting demo but definitely slows things down if your primary objective is to employ a productivity assistant.

Brevity should be easy to teach the assistant through memory and explicit instructions. It will be interesting to see if the model will enable a user to set different verbosity levels based on interaction mode or use case. If you are chatting with an assistant by text, longer responses are not a particularly big problem and often provide a benefit because we can read faster than a synthetic voice can speak. However, when using voice, brevity becomes essential for productivity use cases but might not be ideal for immersive entertainment or companionship.

Integration with applications will be a harder problem but not insurmountable. Key challenges with application integration are that not every application has APIs, many that have APIs enable only limited functionality, and business agreements are often required before integration. ChatGPT has enough users and is likely to have many more following the 4o release, so many applications will be incentivized to support their users through integration.

Escape Velocity?

An obvious question to ask is why the ChatGPT 4o update offers such a step-up for free users and only a higher use limit for paying subscribers. That limit is probably the answer. OpenAI is hoping to position ChatGPT as the everything assistant that users adopt now and can’t think of living without going forward.

The memory feature combined with consumer habit formation could establish an enduring advantage for whatever generative AI assistant gains users first. ChatGPT has an early advantage simply because so many people have tried it. However, there are many other options that are comparable to or better than the free tier, which uses the competent but uninspiring GPT-3.5 Turbo model. Consumers have a choice. Thus, the strategy: capture users now. The best way to drive user adoption is to provide an overwhelmingly more advanced assistant than competitors.

Loyal users are likely to employ ChatGPT frequently. The higher the usage, the more reliant they become, and the more likely they are to upgrade to paid to avoid usage limits. This is a good strategy. The company is betting that the efficacy of its product will induce people to want to pay for it so they can use it more.

The desktop application is likely to accelerate this loyalty formation and conversion to a paid subscription. While some of the ChatGPT 4o demos were for consumer use cases, many were work-oriented. Work use cases often have a high volume of concentrated use of generative AI assistants. That will make it harder for work users to stay on the free plan. At some point, it will be more convenient to pay for it.

ChatGPT seemed to stall out in terms of user adoption. Hundreds of millions of users tried ChatGPT in the first half of 2023, which converted to 100 million weekly active users as of November. Mira Murati, OpenAI’s chief technology officer, said during the announcement live stream that more than 100 million people use ChatGPT. There was no modifier such as “paid users” or “weekly active users.”

There was a sharp increase in paid users after the launch of GPTs, which were restricted to subscribers. Murati commented that users had created more than one million GPTs and that the team had been working on bringing advanced capabilities to free users, such as vision, document uploads, and image generation. “With the efficiencies of 4o, we can bring these tools to everyone. So, starting today, you can use GPTs in the GPT store...Now our builders have a much bigger audience.”

New features matter, but OpenAI also needs to think about the frequency of use. If your assistant turns on music, places phone calls, and sets alarms, you are employing it several times per day. You may not need to summarize a large document or get help writing an email daily. However, you can change use frequency by adding more supported use cases and more personalization. Broader multimodal use cases, upgraded vision capabilities, and customization enabled by memory features may transform ChatGPT from an occasional app to a multiple-times-per-day app.

The more certain way to drive higher use frequency will be to add “doing” capabilities, but that will take some time. For now, ChatGPT is looking to become the dominant “knowing assistant” before Gemini can get established or Alexa and Siri can become smarter.

Competitive Impact

It will be interesting to see what Google announces at its I/O developer conference this week. There will likely be some similar features for Gemini Advanced users based on the Gemini Ultra multimodal model. However, there is little chance that Google planned to offer all of the advanced features to free users. It may be rethinking that strategy right now.

Also, Microsoft may decide to follow OpenAI’s lead and bring the GPT-4o model to Copilot during its annual BUILD conference for developers next week. Google is now facing the kind of competitor that it has often been to its own rivals.

Another direct impact of the GPT-4o model will be a more powerful base-level model to support any organization’s copilot or assistant needs. GPT-4o clearly looks like the new standard among “good enough” models that balance price, performance, and latency. And that “good enough” rating looks like it may be applied to the leading frontier model, that is, until GPT-5 arrives.

Beyond head-to-head competition, there will be an important indirect impact. The quality of ChatGPT’s voice user interface and features will raise consumer expectations around general-purpose generative AI assistants and those offered by companies as customer interfaces. Once consumers are aware of what the state-of-the-art is like, they will be more attuned to the shortcomings of assistants built on lesser technology. Since ChatGPT will offer 4o features to all users, consumers are sure to establish new expectations over time.

What’s Next

Mira Murati closed the livestream announcement by commenting, “This feels so magical, and that’s wonderful. But we also want to remove some of the mysticism from the technology and bring it to you so you can try it for yourself. Today has been very much focused on the free users and the new modalities and new products. But we also care a lot about the next frontier. So, soon we will be updating you on our progress towards the next big thing.”

What is the next big thing? It is likely to be GPT-5. However, with Sora and ChatGPT 4o debuting unexpectedly, OpenAI may have more surprises in store for users before the next model family release. With that said, the updates associated with the GPT-4o model are impressive enough that you can expect the advances offered by GPT-5 to shock users and developers all over again.

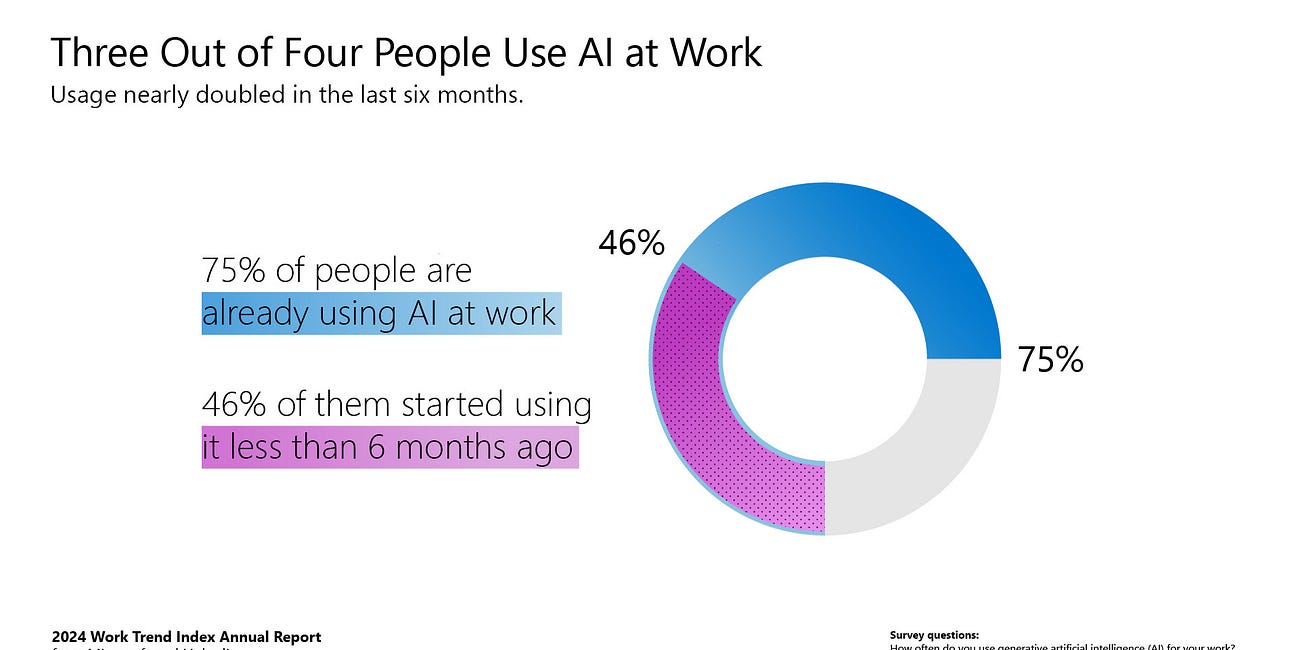

Employees Are Bringing Their Own AI to Work Regardless of Company Support

Microsoft’s 2024 Work Trend Index Report surveyed 31,000 people across 31 countries and uncovered new information related to AI adoption by business users. A key finding was that 75% of knowledge workers worldwide are already using generative AI. While some executives lament the slow uptake by workers of internally supplied generative AI solutions, they…

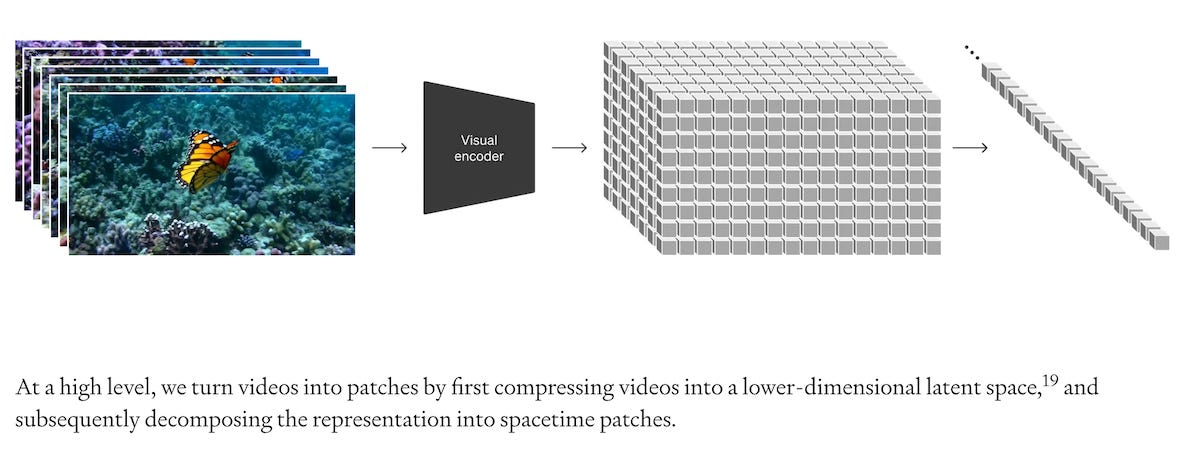

OpenAI's Sora Text-to-Video Demonstrations are Insanely Good

Earlier today, OpenAI debuted Sora, the company’s first text-to-video AI model. It is not yet available to the general public, but the announcement said it is going through testing. While the model supports a variety of styles, it clearly excels in photo-realism, as seen in the video embedded above.

Great coverage, Bret. I’m a little bit surprised by how surprised people are with the voice interface. Pi, the assistant of Inflection, has had this level of fluency, natural voice and inflection, and quick response times for many months, but I guess it is significantly less well-known than OpenAI’s ChatGPT.