Galileo's Hallucination Index and Performance Metrics Aim for Better LLM Evaluation

OpenAI, unsurprisingly, leads the performance benchmark.

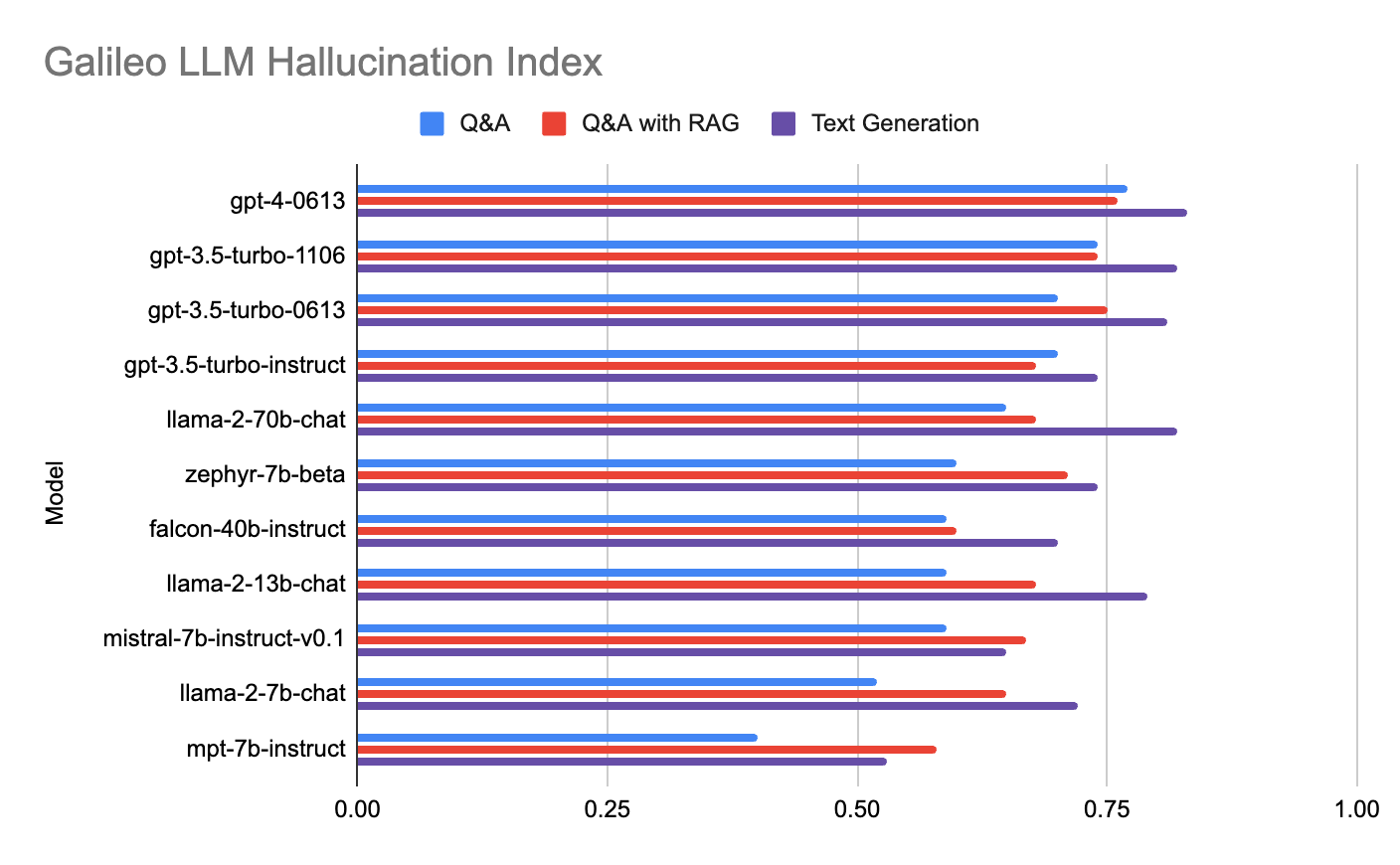

Galileo, a machine learning tools provider that helps companies evaluate and monitor large language models (LLMs), has introduced a new LLM Hallucination Index. The Index evaluated 11 leading LLMs to compare their probability of correctness across three common use cases:

Q&A without Retrieval Augmented Generation (RAG)

Q&A with RAG

Long-form Text Generation

The first and third categories rely on the LLM’s own knowledge base to answer questions or generate text. For these evaluations, the metric is “correctness,” the probability that an LLM response is factually accurate. A higher score means a higher probability of correctness; a lower score means a hallucination is more likely.*

The RAG-enabled Q&A test draws on a knowledge base outside the LLM’s training data or session context. Its metric is “context adherence,” the probability that the response stays faithful to the information supplied from the RAG knowledge base. Galileo used a variety of well-known datasets for testing and employed human reviewers to assess how well the metrics performed.

*LLM hallucinations are defined as outputs that are factually incorrect and do not exist in the training dataset or session context.
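The Index reports one score per model and task type. The article does not say how Galileo aggregates individual judgments, so what follows is only a minimal Python sketch, assuming each evaluated response already carries a probability in [0, 1] (correctness for the two non-RAG tasks, context adherence for RAG Q&A) and that a model's task score is the plain mean of those probabilities. The `ScoredResponse` type, the task labels, and `index_scores` are hypothetical names for illustration, not Galileo's API.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class ScoredResponse:
    # Hypothetical task labels: "qa_no_rag", "qa_rag", "long_form".
    task: str
    # Probability in [0, 1]: correctness for qa_no_rag/long_form,
    # context adherence for qa_rag (assumed to come from an upstream judge).
    score: float


def index_scores(responses: list[ScoredResponse]) -> dict[str, float]:
    """Average per-response probabilities into one score per task (assumed aggregation)."""
    by_task: dict[str, list[float]] = {}
    for r in responses:
        if not 0.0 <= r.score <= 1.0:
            raise ValueError(f"score must be a probability, got {r.score}")
        by_task.setdefault(r.task, []).append(r.score)
    # A higher mean suggests a lower expected hallucination rate for that task.
    return {task: mean(scores) for task, scores in by_task.items()}
```

A plain mean weights every response equally; a real evaluation might instead weight by dataset or report confidence intervals alongside the point score.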

Results

The consolidated data in the chart above show that OpenAI’s GPT-4 is the top-performing model in all three tests, and the GPT-3.5 models are generally the second-best performers. If you wondered whether all the attention on OpenAI stemmed only from early market entry and superior marketing, these data suggest performance plays a role too.

However, you will also see that Meta’s Llama 2 70B and Mistral 7B showed nearly comparable performance to OpenAI models in the text generation evaluation. Hugging Face’s Zephyr 7B was also a strong alternative for RAG-based solutions. Vikram Chatterji, Galileo co-founder and CEO, told Synthedia in an interview:

The Hallucination Index shows you have options. You don't have to use the OpenAI models. They are the best, but there are other models that are very good. For Q&A, Llama 2 70B is very good. The other recommendation is that you don't have to go with correctness that is the highest; balance that with cost.

Galileo’s hallucination metrics are only a subset of the features and measures the company offers. Latency and cost are also important considerations, along with use-case-specific requirements such as code quality, summarization, text evaluation, reasoning, and math. Few enterprises have in-house expertise in model evaluation, benchmarking, or monitoring, and these capabilities will grow in importance as companies move beyond proof-of-concept pilots to production solutions at scale.
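Chatterji’s advice to balance correctness against cost can be expressed as a simple selection rule. One possible policy, sketched below in Python, is to pick the cheapest model whose index score clears a minimum threshold for the use case. The `ModelOption` fields, the per-1K-token cost unit, the `cheapest_acceptable` function, and the threshold are all hypothetical inputs you would fill in from your own evaluation and your providers’ pricing; this is not a Galileo feature.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelOption:
    name: str
    correctness: float         # index-style score in [0, 1] for the target task
    cost_per_1k_tokens: float  # hypothetical unit; substitute real provider pricing


def cheapest_acceptable(
    options: list[ModelOption], min_correctness: float
) -> Optional[ModelOption]:
    """Return the lowest-cost model that meets the correctness floor, or None."""
    acceptable = [o for o in options if o.correctness >= min_correctness]
    if not acceptable:
        return None
    # Cheapest first; among equal costs, prefer the higher correctness score.
    return min(acceptable, key=lambda o: (o.cost_per_1k_tokens, -o.correctness))
```

Raising `min_correctness` pushes the choice toward the top-scoring (and typically more expensive) models, while lowering it admits cheaper alternatives. Latency and the other use-case-specific requirements would need to be added as further filters.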

Measuring and Monitoring LLM Performance

Chatterji first encountered the model evaluation and monitoring problem while working at Google on LLM customization for companies. He and some colleagues recognized that performance on the target use case should count for more than general benchmark results. That insight led to the founding of Galileo.

Chatterji offered a more in-depth discussion of LLM benchmarking and a demo of Galileo’s metric-tracking software at the Synthedia LLM Innovation Conference.

From Experimentation to Data-Driven Decisions

Discussion of LLMs rarely goes deeper than surface-level commentary. This situation cannot last as enterprises commit tens of millions of dollars to their generative AI capabilities and begin using the technology for mission-critical processes.

Measuring LLM performance today is not standardized, nor are bespoke tests typically well-conceived for enterprise requirements. There are individual benchmark tests that provide value, but not for every user or every use case. Most are designed to meet the needs of academics and model developers so they can assess the general capabilities of different foundation models or fine-tuned versions.

Enterprise data science and MLOps teams need tools that let them select appropriate benchmarks and run them at a reasonable cost. Hallucination rate is just one model performance metric, but an important one. Many organizations and use cases can tolerate some errors produced by LLMs, yet reducing those errors still provides significant value. Galileo’s Hallucination Index is useful as a starting point because it indicates an LLM’s propensity to hallucinate. Starting with a model that has a lower hallucination probability will likely translate into better performance after retraining, fine-tuning, and prompt engineering are applied.

The Index also reflects an emerging trend. Enterprise experimentation with LLMs to determine fit and impact will necessarily give way to a need for more performance data. Enterprises will need to justify the selection of one model over another and track model performance over time. Otherwise, everyone will be flying blind or, at best, navigating through a fog and hoping for the best.
