Gizmodo the Latest Media Publisher to Notch a Generative AI Fail

The company writes off the errors as the price of experimentation

Variety reported earlier this week that Gizmodo’s io9 entertainment section published a factually incorrect story titled “A Chronological List of Star Wars Movies & TV Shows.” The author was listed as “Gizmodo Bot.”

Among other issues, the article presents the titles in a numbered list that is not actually in chronological order. It also omits any mention of Disney+’s Star Wars series “Andor,” “Obi-Wan Kenobi” and “The Book of Boba Fett” and lists “The Clone Wars” series as coming after the events of “The Rise of Skywalker,” which is incorrect.

“As you may have seen today, an AI-generated article appeared on io9,” James Whitbrook, deputy editor at io9 and Gizmodo, tweeted about the situation. “I was informed approximately 10 minutes beforehand, and no one at io9 played a part in its editing or publication.”

Whitbrook said he sent a statement to G/O Media along with “a lengthy list of corrections.” In part, his statement said, “The article published on io9 today rejects the very standards this team holds itself to on a daily basis as critics and as reporters. It is shoddily written, it is riddled with basic errors; in closing the comments section off, it denies our readers, the lifeblood of this network, the chance to publicly hold us accountable, and to call this work exactly what it is: embarrassing, unpublishable, disrespectful of both the audience and the people who work here, and a blow to our authority and integrity.”

AI vs Journalism

Gizmodo is owned by G/O Media, which also publishes The Onion, Deadspin, Jezebel, and other titles. The erroneous story has become a flashpoint between the publisher and some of its journalists. The Washington Post wrote in its report on the story:

A few hours after James Whitbrook clocked into work at Gizmodo on Wednesday, he received a note from his editor in chief: Within 12 hours, the company would roll out articles written by artificial intelligence. Roughly 10 minutes later, a story by “Gizmodo Bot” posted on the site about the chronological order of Star Wars movies and television shows.

Whitbrook — a deputy editor at Gizmodo who writes and edits articles about science fiction — quickly read the story, which he said he had not asked for or seen before it was published. He catalogued 18 “concerns, corrections and comments” about the story in an email to Gizmodo’s editor in chief, Dan Ackerman.

…

The story was written using a combination of Google Bard and ChatGPT, according to a G/O Media staff member familiar with the matter.

…

The irony that the turmoil was happening at Gizmodo, a publication dedicated to covering technology, was undeniable. On June 29, Merrill Brown, the editorial director of G/O Media, had cited the organization’s editorial mission as a reason to embrace AI. Because G/O Media owns several sites that cover technology, he wrote, it has a responsibility to “do all we can to develop AI initiatives relatively early in the evolution of the technology.”

…

Mark Neschis, a G/O Media spokesman, said the company would be “derelict” if it did not experiment with AI. “We think the AI trial has been successful,” he said in a statement. “In no way do we plan to reduce editorial headcount because of AI activities.” He added: “We are not trying to hide behind anything, we just want to get this right. To do this, we have to accept trial and error.”

…

Organizations are incentivized to use AI in generating content, NewsGuard analysts said, because ad-tech companies often put digital ads onto sites “without regard to the nature or quality” of the content, creating an economic incentive to use AI bots to churn out as many articles as possible for hosting ads.

AI-Written Stories Are Not New

AI has, in fact, been writing news articles for many years. The AP uses Wordsmith, from Vista Equity Partners-owned Automated Insights, to generate 4,400 earnings releases per quarter. In 2018 alone, the software generated 5,000 summaries related to NCAA Division I men’s basketball.

News written by software is not going away. The advent of highly capable large language models (LLMs) means that generative AI can write more types of articles than in the past. A key problem is that news organizations are treating it as a fully automated solution when, in reality, the risk of errors of fact and context requires a human in the loop.

News organizations have editors review articles written by journalists. Those journalists have a professional incentive to deliver factually correct stories, yet editors still review the articles. Why would news organizations apply a lesser standard to stories generated by AI, which has no incentive to deliver factual accuracy?

Publishing content is not the same thing as journalism. Many publishers are looking for more pages of content that enable them to drive more page views and serve more ads. This at least partially explains the cavalier attitude of several publishers we chronicled recently in our stories about Gannett and Axel Springer.

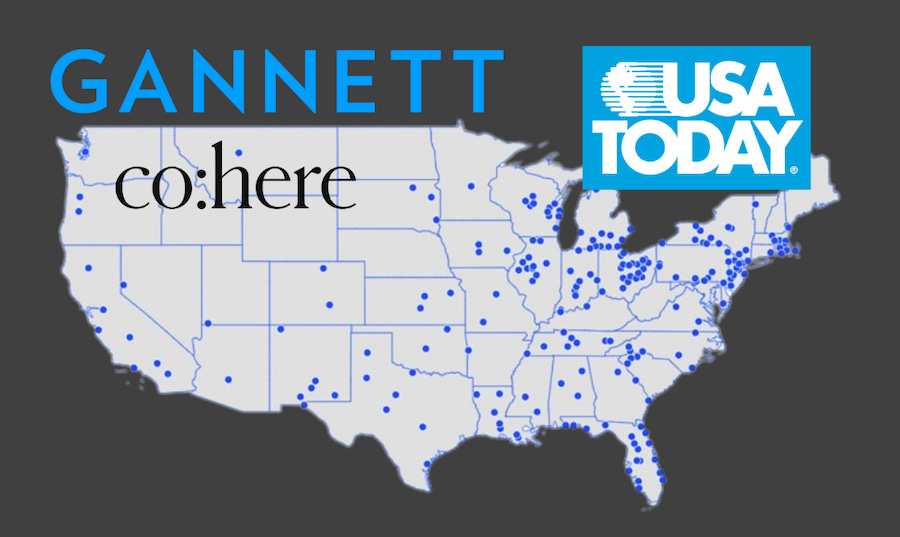

USA TODAY Publisher Gannett Taps Cohere for Generative AI Driven News Summaries

Gannett is the largest U.S. news publisher, with over 200 daily publications. The company has faced a series of strikes by unionized journalists this month spurred by delays in contract negotiations. It also plans to implement generative AI for automating news summaries and other writing tasks.