Meta Llama 3 Launch Part 1 - 8B and 70B Models are Here, with 400B Model Coming

Setting a new standard for open-source LLMs and challenging the frontier models

Meta launched the Llama 3 large language model (LLM) today in 8B and 70B parameter sizes. Both models were trained on 15 trillion tokens of data and are released under a permissive commercial and private use license. The license is not as permissive as traditional open-source options, but its restrictions are limited.

The company also announced it was training a 400B parameter model that will be released later. According to the announcement:

Today, we’re excited to share the first two models of the next generation of Llama, Meta Llama 3, available for broad use. This release features pretrained and instruction-fine-tuned language models with 8B and 70B parameters that can support a broad range of use cases. This next generation of Llama demonstrates state-of-the-art performance on a wide range of industry benchmarks and offers new capabilities, including improved reasoning.

…

The text-based models we are releasing today are the first in the Llama 3 collection of models. Our goal in the near future is to make Llama 3 multilingual and multimodal, have longer context, and continue to improve overall performance across core LLM capabilities such as reasoning and coding.

…

In line with our design philosophy, we opted for a relatively standard decoder-only transformer architecture in Llama 3. Compared to Llama 2, we made several key improvements. Llama 3 uses a tokenizer with a vocabulary of 128K tokens that encodes language much more efficiently, which leads to substantially improved model performance. To improve the inference efficiency of Llama 3 models, we’ve adopted grouped query attention (GQA) across both the 8B and 70B sizes. We trained the models on sequences of 8,192 tokens, using a mask to ensure self-attention does not cross document boundaries.
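Two of the architectural details in Meta's description are easy to illustrate. The sketch below uses plain Python for readability rather than speed. It shows (1) a causal attention mask that also blocks attention across document boundaries when several documents are packed into one 8,192-token training sequence, and (2) grouped query attention, where several query heads share one key/value head. The function names and toy dimensions are our own illustrative assumptions, not Meta's code.

```python
import math
from typing import List

Matrix = List[List[float]]


def document_causal_mask(doc_ids: List[int]) -> List[List[bool]]:
    """mask[i][j] is True when token i may attend to token j.

    Attention is causal (j <= i) and limited to tokens from the same
    document, so packed documents never attend across their boundaries.
    """
    n = len(doc_ids)
    return [[j <= i and doc_ids[j] == doc_ids[i] for j in range(n)] for i in range(n)]


def kv_head_for(q_head: int, n_q_heads: int, n_kv_heads: int) -> int:
    """Map a query head to the key/value head its group shares."""
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size


def gqa_attention(q: List[Matrix], k: List[Matrix], v: List[Matrix],
                  mask: List[List[bool]]) -> List[Matrix]:
    """Grouped query attention over one sequence.

    q has shape [n_q_heads][seq][dim]; k and v have shape [n_kv_heads][seq][dim].
    Returns one output matrix per query head.
    """
    n_q, n_kv = len(q), len(k)
    assert n_q % n_kv == 0, "query heads must split evenly into K/V groups"
    outputs = []
    for h in range(n_q):
        kv = kv_head_for(h, n_q, n_kv)
        head_out = []
        for i, q_i in enumerate(q[h]):
            # Positions this token may see (always includes itself).
            allowed = [j for j, ok in enumerate(mask[i]) if ok]
            scale = math.sqrt(len(q_i))
            scores = [sum(a * b for a, b in zip(q_i, k[kv][j])) / scale for j in allowed]
            # Numerically stable softmax over the allowed positions only.
            top = max(scores)
            weights = [math.exp(s - top) for s in scores]
            total = sum(weights)
            row = [0.0] * len(v[kv][0])
            for w, j in zip(weights, allowed):
                for d, val in enumerate(v[kv][j]):
                    row[d] += (w / total) * val
            head_out.append(row)
        outputs.append(head_out)
    return outputs


# Usage: two documents packed into one five-token sequence.
doc_ids = [0, 0, 0, 1, 1]
mask = document_causal_mask(doc_ids)
# Four query heads sharing two K/V heads (toy sizes, dim = 2).
q = [[[0.1 * (h + t), 0.2] for t in range(5)] for h in range(4)]
k = [[[0.3, 0.1 * t] for t in range(5)] for _ in range(2)]
v = [[[float(t), float(g)] for t in range(5)] for g in range(2)]
out = gqa_attention(q, k, v, mask)
print(mask[3])  # [False, False, False, True, False]
```

The first token of the second document can only see itself, so its output is exactly its own value vector. With, for example, 32 query heads and 8 K/V heads, each K/V head serves four query heads, so the key/value cache held during inference is a quarter of the size it would be with standard multi-head attention.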

Beyond the models, Meta also announced:

A novel LLM benchmark - Part 2

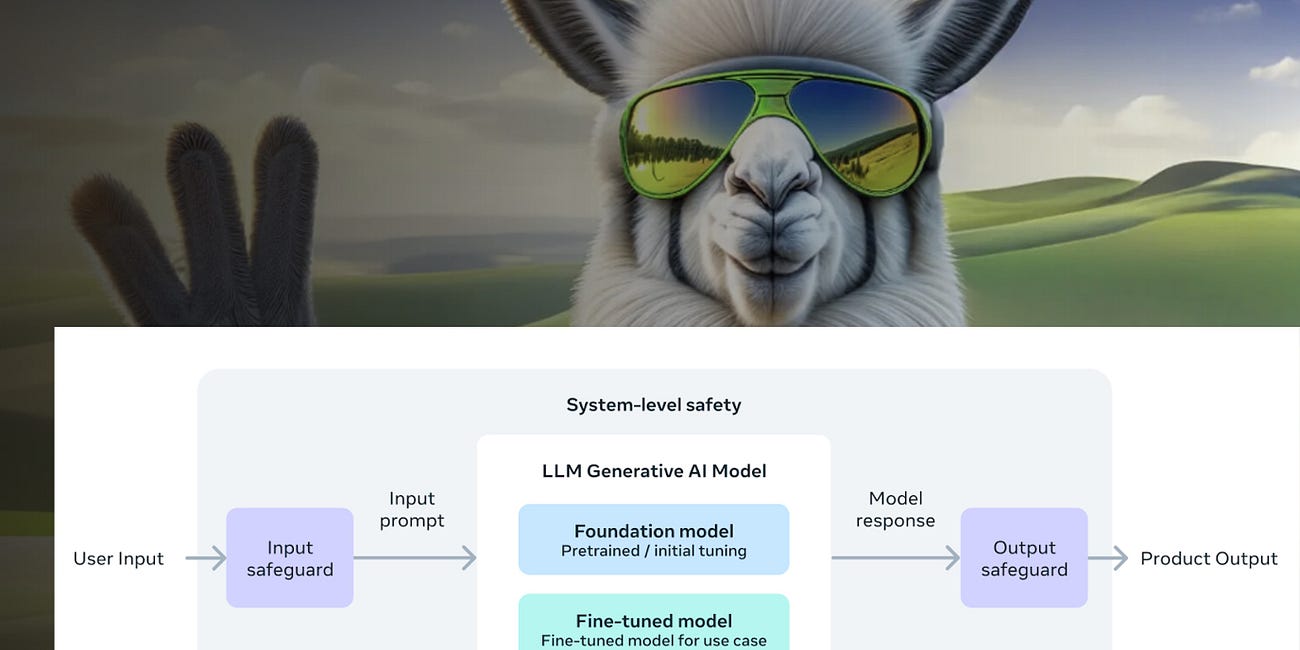

A new AI safety framework - Part 2

Meta AI includes Llama 3 and expanded access across web and apps - Part 3

Performance

We can acknowledge the usual caveats around cherry-picked public benchmark results and still look at what Meta published. First, the company provided data for both the Pre-trained model and the Instruct model. I would have preferred to see data for all 11 benchmarks for both models, but Meta did at least provide MMLU comparisons for both. The different benchmark sets most likely reflect the different tasks each model type is expected to handle. Still, it is always best to see like-for-like comparisons.

The Pre-trained Llama 3 8B model outperformed Mistral 7B and Google’s Gemma 7B across MMLU, AGIEval English, Big-Bench Hard, ARC-Challenge, and DROP, based on the figures the model developers published. Notably, Meta also benchmarked the competing models internally, and its measured scores were higher than the published ones. Llama 3 8B still beat these small language model alternatives on the measured results in every benchmark except ARC-Challenge, where Meta’s measurements of the competitors came in far above their published scores.

The Llama 3 70B model outperformed Google Gemini Pro 1.0 and Mixtral 8x22B across these same benchmarks. The comparison set differs because Meta chose the models it perceived as the closest match in size.

The 8B Instruct model also outpaced Gemma 7B-it and Mistral 7B Instruct across the MMLU, GPQA, HumanEval, GSM-8K, and MATH benchmarks. The 70B Instruct model was stronger than Gemini Pro 1.5 and Claude 3 Sonnet in MMLU, HumanEval, and GSM-8K. However, it fell a couple of points short of Gemini Pro 1.5 in GPQA and further behind in MATH.

While the Llama 3 8B Instruct model shows significantly better benchmark performance than Gemma and Mistral, the 70B Instruct model was only marginally better than Gemini Pro 1.5 and Claude 3 Sonnet in three categories, marginally worse in one, and significantly worse in one. Granted, Gemini and Claude are both proprietary models.

The obvious omission is xAI’s Grok-1 open-source model. It was likely bypassed because it was not publicly available until about a month ago. Grok-1 and Grok-1.5 have published MMLU scores below that of Llama 3 70B Instruct.

Training Data

Meta CEO Mark Zuckerberg commented in an Instagram post that the Llama 3 8B model is almost as powerful as the Llama 2 70B model. A key ingredient of that improvement is an increase in the size and quality of the training data. According to the official launch announcement:

To train the best language model, the curation of a large, high-quality training dataset is paramount. In line with our design principles, we invested heavily in pretraining data. Llama 3 is pretrained on over 15T tokens that were all collected from publicly available sources. Our training dataset is seven times larger than that used for Llama 2, and it includes four times more code. To prepare for upcoming multilingual use cases, over 5% of the Llama 3 pretraining dataset consists of high-quality non-English data that covers over 30 languages. However, we do not expect the same level of performance in these languages as in English.

To ensure Llama 3 is trained on data of the highest quality, we developed a series of data-filtering pipelines. These pipelines include using heuristic filters, NSFW filters, semantic deduplication approaches, and text classifiers to predict data quality. We found that previous generations of Llama are surprisingly good at identifying high-quality data, hence we used Llama 2 to generate the training data for the text-quality classifiers that are powering Llama 3.

…

To effectively leverage our pretraining data in Llama 3 models, we put substantial effort into scaling up pretraining. Specifically, we have developed a series of detailed scaling laws for downstream benchmark evaluations. These scaling laws enable us to select an optimal data mix and to make informed decisions on how to best use our training compute. Importantly, scaling laws allow us to predict the performance of our largest models on key tasks (for example, code generation as evaluated on the HumanEval benchmark—see above) before we actually train the models. This helps us ensure strong performance of our final models across a variety of use cases and capabilities.

We made several new observations on scaling behavior during the development of Llama 3. For example, while the Chinchilla-optimal amount of training compute for an 8B parameter model corresponds to ~200B tokens, we found that model performance continues to improve even after the model is trained on two orders of magnitude more data. Both our 8B and 70B parameter models continued to improve log-linearly after we trained them on up to 15T tokens. Larger models can match the performance of these smaller models with less training compute, but smaller models are generally preferred because they are much more efficient during inference.
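The data-filtering stages in the quoted announcement (heuristic filters, NSFW filters, deduplication, and a text-quality classifier) can be chained as a simple streaming pipeline. The sketch below is a toy stand-in under stated assumptions: the thresholds, the placeholder blocklist term, and the `quality_score` function are hypothetical. The deduplication step is exact matching after normalization, a much simpler technique than the semantic deduplication Meta describes, which compares documents by meaning rather than by exact text. Meta’s real quality classifiers were trained on labels generated with Llama 2, which this sketch does not attempt to reproduce.

```python
import hashlib
import re
from typing import Callable, Iterable, Iterator, List

# Hypothetical placeholder; a real NSFW filter would use curated lists or a classifier.
BLOCKLIST = frozenset({"blockedterm"})


def min_length_filter(doc: str, min_words: int = 5) -> bool:
    """Heuristic filter: keep documents with at least min_words words."""
    return len(doc.split()) >= min_words


def symbol_ratio_filter(doc: str, max_ratio: float = 0.3) -> bool:
    """Heuristic filter: drop documents dominated by punctuation or symbols."""
    if not doc:
        return False
    symbols = sum(1 for c in doc if not (c.isalnum() or c.isspace()))
    return symbols / len(doc) <= max_ratio


def nsfw_filter(doc: str) -> bool:
    """Keyword-based stand-in for an NSFW filter."""
    return not any(word in BLOCKLIST for word in doc.lower().split())


def normalized_hash(doc: str) -> str:
    """Hash of lowercased, whitespace-collapsed text (exact dedup, not semantic)."""
    normalized = re.sub(r"\s+", " ", doc.lower()).strip()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def quality_score(doc: str) -> float:
    """Hypothetical stand-in for a learned quality classifier:
    the fraction of whitespace-separated tokens that are purely alphabetic."""
    words = doc.split()
    return sum(w.isalpha() for w in words) / len(words) if words else 0.0


def filter_corpus(docs: Iterable[str],
                  filters: List[Callable[[str], bool]],
                  quality_threshold: float = 0.5) -> Iterator[str]:
    """Apply cheap filters first, then deduplication, then the quality model."""
    seen = set()
    for doc in docs:
        if not all(f(doc) for f in filters):
            continue
        key = normalized_hash(doc)
        if key in seen:
            continue
        seen.add(key)
        if quality_score(doc) >= quality_threshold:
            yield doc


docs = [
    "The quick brown fox jumps over the lazy dog.",
    "the  quick brown fox jumps over the LAZY dog.",   # duplicate after normalization
    "buy now!!! $$$ ### @@@ %%% ^^^",                  # symbol-heavy
    "too short",                                       # fails length heuristic
    "this page contains blockedterm and other words",  # hits the blocklist
    "123 456 789 000 111 222",                         # low quality score
]
kept = list(filter_corpus(docs, [min_length_filter, symbol_ratio_filter, nsfw_filter]))
print(kept)  # ['The quick brown fox jumps over the lazy dog.']
```

Ordering the stages from cheapest to most expensive is a common design choice: inexpensive heuristics discard most junk before the costlier deduplication and classifier steps run.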
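The figures in the quoted announcement support some quick back-of-the-envelope arithmetic. The sketch below derives the implied Llama 2 dataset size, the minimum volume of non-English data, a Chinchilla-style token budget, and approximate compute. Two assumptions are ours, not Meta’s: the commonly cited Chinchilla heuristic of roughly 20 training tokens per parameter (about 160B tokens for 8B parameters, in the same range as the ~200B Meta quotes), and the standard approximation that training costs about 6 FLOPs per parameter per token (about 2 per parameter per token for inference). Because the announcement says “over” 15T tokens and “over 5%”, the derived token counts are lower bounds.

```python
# Figures quoted in Meta's announcement.
LLAMA3_TOKENS = 15e12        # "over 15T tokens" (a lower bound)
LLAMA2_SIZE_RATIO = 7        # "seven times larger than that used for Llama 2"
NON_ENGLISH_SHARE = 0.05     # "over 5%" of the pretraining data
META_CHINCHILLA_8B = 200e9   # "~200B tokens" for an 8B parameter model

# Rules of thumb (our assumptions, not from the announcement).
TOKENS_PER_PARAM = 20        # Chinchilla heuristic
TRAIN_FLOPS_PER_PARAM_TOKEN = 6
INFER_FLOPS_PER_PARAM_TOKEN = 2


def train_flops(n_params: float, n_tokens: float) -> float:
    """Approximate pretraining compute: C ~ 6 * N * D."""
    return TRAIN_FLOPS_PER_PARAM_TOKEN * n_params * n_tokens


def infer_flops_per_token(n_params: float) -> float:
    """Approximate forward-pass compute per generated token: ~2 * N."""
    return INFER_FLOPS_PER_PARAM_TOKEN * n_params


implied_llama2_tokens = LLAMA3_TOKENS / LLAMA2_SIZE_RATIO   # ~2.1T
non_english_tokens = LLAMA3_TOKENS * NON_ENGLISH_SHARE      # ~750B
chinchilla_8b = TOKENS_PER_PARAM * 8e9                      # 160B
overtraining_vs_meta = LLAMA3_TOKENS / META_CHINCHILLA_8B   # 75x

for name, n_params in [("8B", 8e9), ("70B", 70e9)]:
    print(f"{name}: train ~{train_flops(n_params, LLAMA3_TOKENS):.1e} FLOPs, "
          f"inference ~{infer_flops_per_token(n_params):.1e} FLOPs/token")
# 8B: train ~7.2e+23 FLOPs, inference ~1.6e+10 FLOPs/token
# 70B: train ~6.3e+24 FLOPs, inference ~1.4e+11 FLOPs/token
```

The 15T-token budget is about 75 times Meta’s ~200B Chinchilla figure (about 94 times the 20-tokens-per-parameter estimate), close to the “two orders of magnitude” the announcement mentions. The implied ~2.1T tokens for Llama 2 is consistent with the roughly 2T tokens Meta reported for that model. The inference line shows why smaller models are preferred once trained: per-token compute scales with parameter count, so the 8B model needs roughly one-ninth the FLOPs of the 70B model for each generated token.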

Yann LeCun said in a live-streamed interview for the Imagination in Action conference at the MIT Media Lab today that Meta would have used more data if it had been available. According to LeCun, “there is only so much data you can get.”

LeCun also noted that, not long ago, researchers believed the optimal amount of training data for models of the size Meta released today was far smaller. However, the research teams found that performance kept improving as more data was added to training. This is consistent with Synthedia’s 2023 analysis showing that sharp growth in training dataset size was driving more model improvement than increases in parameter count.
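Meta’s statement that both models “continued to improve log-linearly,” and its use of scaling laws to predict large-model performance before training, can be illustrated with the simplest possible fit: ordinary least squares of a benchmark score against the logarithm of a training budget (compute or tokens), extrapolated to a larger budget. The announcement does not publish Meta’s functional form, so this is a generic sketch. The data points in the usage example are synthetic, generated from a known line so the fit can be checked, and are not Llama results.

```python
import math
from typing import List, Tuple


def fit_log_linear(budgets: List[float], scores: List[float]) -> Tuple[float, float]:
    """Least-squares fit of score = a + b * log10(budget). Returns (a, b)."""
    xs = [math.log10(c) for c in budgets]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(scores) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, scores))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def predict(a: float, b: float, budget: float) -> float:
    """Extrapolate the fitted line to a new budget."""
    return a + b * math.log10(budget)


# Synthetic small-scale runs generated from score = -90 + 5 * log10(compute).
budgets = [1e20, 1e21, 1e22]
scores = [10.0, 15.0, 20.0]
a, b = fit_log_linear(budgets, scores)
print(round(a, 6), round(b, 6))        # -90.0 5.0
print(round(predict(a, b, 1e24), 6))   # 30.0
```

A straight line in log space cannot hold indefinitely for a bounded metric such as accuracy, which saturates near 100%, so real downstream scaling laws need a more careful functional form. The point here is only the mechanics: fit on small runs, then extrapolate to a run you have not yet paid for.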

Going 400B

Meta’s new Llama 3 400B parameter model will be the company’s largest to date. It was not released today because it is still training. That said, Meta researchers evaluated the partially trained model in its Pre-trained and Instruct versions on April 15th and reported the performance numbers. Unsurprisingly, they are better than the 70B models. However, it is hard to know how much better they will become once the full training cycle is complete.

The more significant aspect of this news is that Meta has a 400B frontier foundation model in its near-term product pipeline. That is far smaller than OpenAI’s GPT-4, which reportedly has 1.8T parameters, but larger than GPT-3.5 and likely more efficient. LeCun’s comments at the Imagination in Action conference suggest the 400B model is a unified dense model rather than a sparse mixture-of-experts (MoE) model, as many recent models have been. Meta’s announcement stated:

The Llama 3 8B and 70B models mark the beginning of what we plan to release for Llama 3. And there’s a lot more to come.

Our largest models are over 400B parameters and, while these models are still training, our team is excited about how they’re trending. Over the coming months, we’ll release multiple models with new capabilities including multimodality, the ability to converse in multiple languages, a much longer context window, and stronger overall capabilities. We will also publish a detailed research paper once we are done training Llama 3.

The promise of multimodal and multilingual capabilities suggests that the Llama models are now positioned to take on the proprietary frontier models from Anthropic, OpenAI, and Google. It also indicates that Llama 3 400B may soon offer developers an open-source model comparable to the best proprietary models available today.
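To make the dense versus mixture-of-experts distinction in LeCun’s comments concrete, the sketch below counts the parameters each design uses per token. In a dense model every weight participates in every token, while an MoE router activates only a few experts. The MoE configuration is entirely hypothetical and is not meant to describe GPT-4, Mixtral, or any other real model; the “~2 FLOPs per active parameter per token” rule is a standard approximation, not a figure from Meta.

```python
from dataclasses import dataclass


@dataclass
class MoEConfig:
    """Hypothetical mixture-of-experts layout (parameter counts in raw units)."""
    shared_params: float       # attention, embeddings, etc., used by every token
    params_per_expert: float   # one expert's feed-forward weights
    num_experts: int
    experts_per_token: int     # top-k experts the router selects per token

    def total_params(self) -> float:
        return self.shared_params + self.num_experts * self.params_per_expert

    def active_params(self) -> float:
        return self.shared_params + self.experts_per_token * self.params_per_expert


def dense_active_params(total_params: float) -> float:
    """In a dense model, every parameter is used for every token."""
    return total_params


def flops_per_token(active_params: float) -> float:
    """Rough forward-pass cost: ~2 FLOPs per active parameter per token."""
    return 2 * active_params


dense_400b = dense_active_params(400e9)
moe = MoEConfig(shared_params=20e9, params_per_expert=50e9,
                num_experts=8, experts_per_token=2)
print(f"dense: {dense_400b:.1e} of {400e9:.1e} params active per token")
print(f"MoE:   {moe.active_params():.1e} of {moe.total_params():.1e} params active per token")
# dense: 4.0e+11 of 4.0e+11 params active per token
# MoE:   1.2e+11 of 4.2e+11 params active per token
```

The trade-off is that an MoE model must still hold all of its parameters in memory even though each token touches only a fraction of them, while a dense model of similar total size spends more compute on every token.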
