Mistral's 8x22B LLM Mixes Performance with Efficiency

The new LLM segmentation begins!

Mistral revealed key performance data and model characteristics today for the newly released Mixtral 8x22B open-source large language model (LLM). While the new model performs very well on select benchmarks compared to open-source peers, the company stresses a non-functional metric: efficiency.

On the MMLU benchmark, Mixtral 8x22B outperformed all of the peer models Mistral cited, though it appears to fall slightly short of X.ai's new Grok-1.5. It also trailed Cohere's Command R+, which is available under a Creative Commons license, on two tests: HellaSwag and Winogrande. However, Mistral will point you to the chart above, which compares the company's models with open-source alternatives on two axes: active parameters and MMLU performance.

The active-parameter figure relates directly to the amount of computation performed during inference. Note that Meta's smaller Llama 2 models are very efficient but deliver materially lower MMLU performance. Llama 2 70B scored lower on MMLU while activating more parameters. Mixtral 8x22B has more total parameters than Llama 2 70B, but because it uses a Mixture of Experts (MoE) architecture, only 39B of its 141B parameters are active for any given token.
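To make the active-versus-total distinction concrete, here is a minimal Python sketch of how parameter counts work out in a simple MoE model, plus a toy top-k router. It assumes equally sized experts, a block of shared (non-expert) weights that every token uses, and top-2 routing across 8 experts. The sizes in the usage lines are hypothetical round numbers, not Mixtral's actual layer dimensions, and `moe_param_counts` and `top_k_route` are illustrative helpers, not part of any Mistral library.

```python
import math
from typing import List, Sequence, Tuple


def moe_param_counts(shared: int, per_expert: int, n_experts: int, top_k: int) -> Tuple[int, int]:
    """Return (total, active) parameter counts for a simple MoE model.

    Assumption: all experts are the same size, and `shared` covers every
    non-expert weight (attention, embeddings, router) that each token uses.
    """
    total = shared + n_experts * per_expert   # everything stored in the model
    active = shared + top_k * per_expert      # what one token actually runs through
    return total, active


def top_k_route(logits: Sequence[float], k: int) -> Tuple[List[int], List[float]]:
    """Pick the k highest-scoring experts and softmax-normalize their scores."""
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in chosen)            # subtract max for numerical stability
    exps = [math.exp(logits[i] - m) for i in chosen]
    z = sum(exps)
    return chosen, [e / z for e in exps]


# Hypothetical sizes in billions: 4B shared, 10B per expert, 8 experts, top-2.
total, active = moe_param_counts(shared=4, per_expert=10, n_experts=8, top_k=2)
print(total, active)  # 84 24

# One token's router scores over 8 experts (made-up values).
experts, weights = top_k_route([0.1, 2.0, -1.0, 0.5, 1.5, -0.3, 0.0, 0.2], k=2)
print(experts)  # [1, 4]
```

Only the two selected experts run for that token, which is why compute tracks the active count rather than the total. All experts still have to be held in memory, though, so the total count continues to drive hardware requirements.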

Efficiency

The core argument is that efficiency matters because it is a key driver of inference cost: more active parameters per query mean more computation, and therefore higher costs. As Mistral puts it in its announcement:

We build models that offer unmatched cost efficiency for their respective sizes, delivering the best performance-to-cost ratio within models provided by the community.

Mixtral 8x22B is a natural continuation of our open model family. Its sparse activation patterns make it faster than any dense 70B model, while being more capable than any other open-weight model (distributed under permissive or restrictive licenses).

Fewer active parameters also typically result in faster response times. This will become important as businesses scale their generative AI use cases. Coupling strong general performance with computational efficiency will be meaningful when operating at large scale.
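A back-of-the-envelope way to see why active parameters drive cost: a common rule of thumb puts a transformer forward pass at roughly 2 FLOPs per active parameter per token. The Python sketch below applies that approximation. It ignores attention cost over long contexts, memory bandwidth, and batching, and the 40B active-parameter figure is a hypothetical value chosen for illustration, not a published Mixtral number.

```python
def forward_flops_per_token(active_params: float) -> float:
    """Rough forward-pass cost: about 2 FLOPs per active parameter per token.

    Rule-of-thumb approximation only; ignores long-context attention,
    memory bandwidth, and batching effects.
    """
    return 2.0 * active_params


def relative_cost(active_a: float, active_b: float) -> float:
    """How many times more compute per token model A needs than model B."""
    return forward_flops_per_token(active_a) / forward_flops_per_token(active_b)


dense_70b = 70e9   # dense model: every parameter is active for every token
moe_active = 40e9  # hypothetical MoE active-parameter count, for illustration

print(f"{relative_cost(dense_70b, moe_active):.2f}x")  # 1.75x
```

Under this approximation, per-token compute scales linearly with active parameters, so a model that activates fewer parameters per token is cheaper and usually faster to serve, all else being equal.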

Multilingual Proficiency

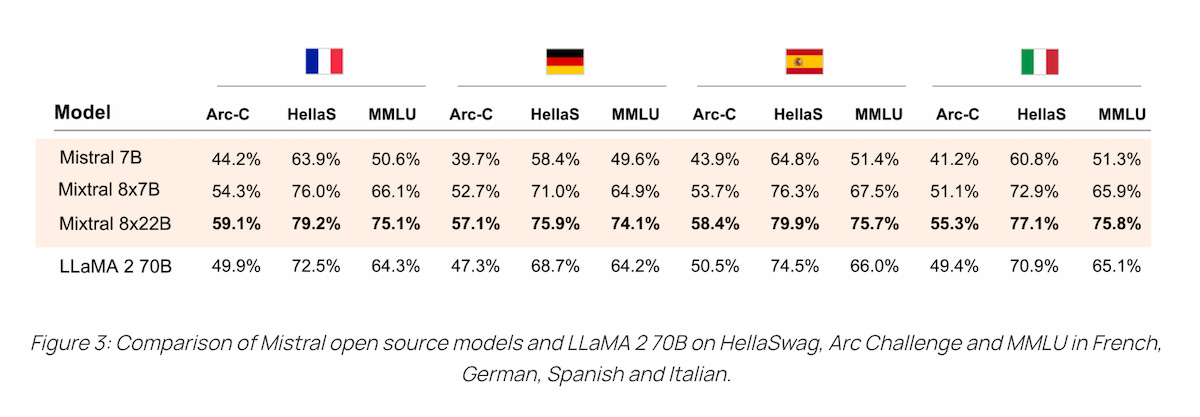

A secondary focus of the announcement is performance across languages. Mixtral 8x22B outperformed Llama 2 70B on ARC-C, HellaSwag, and MMLU in French, German, Italian, and Spanish. Given Mistral's French roots and high profile within the European Union, it is logical for the company to highlight its multilingual strength.

LLM Segmentation

Last year, LLM conversations generally revolved around parameter counts and benchmark scores. Benchmark scores still matter, but the discussion of parameters has broadened to include architecture, training data, and licensing. And as LLMs are used in more production environments, deliberate market segmentation is starting to take place. Mistral and Cohere are each actively courting a specific niche for their models.

Arthur Mensch, a Mistral co-founder, commented during an interview on the Unsupervised Learning Podcast in March:

I'm pretty sure that we can make things more efficient than just plain transformers that spend the same amount of compute on every token…We're going to be more efficient. We're going to deploy models on smaller devices. We're going to improve latency, make models think faster. And when you make model think faster you're opening up a lot of applications.

Groq (not to be confused with Grok) stresses low-latency performance. Cohere focuses on enterprise retrieval-augmented generation (RAG). Mistral highlights performance per parameter, that is, the optimal tradeoff between quality and cost. Mensch said the company's strength is in model training, where Mistral is eking out incremental performance gains per active parameter.

This efficiency may be particularly important precisely because Mixtral is an open-source model. Most companies choose open-source models for reasons of control, but they are also aware of the added cost of hosting and managing those models themselves. Inference efficiency may be a deciding factor. That, and strong multilingual performance.
