Databricks Claims a Performance Lead with a New Open LLM

MosaicML and the well-regarded MPT models get an upgrade to DBRX

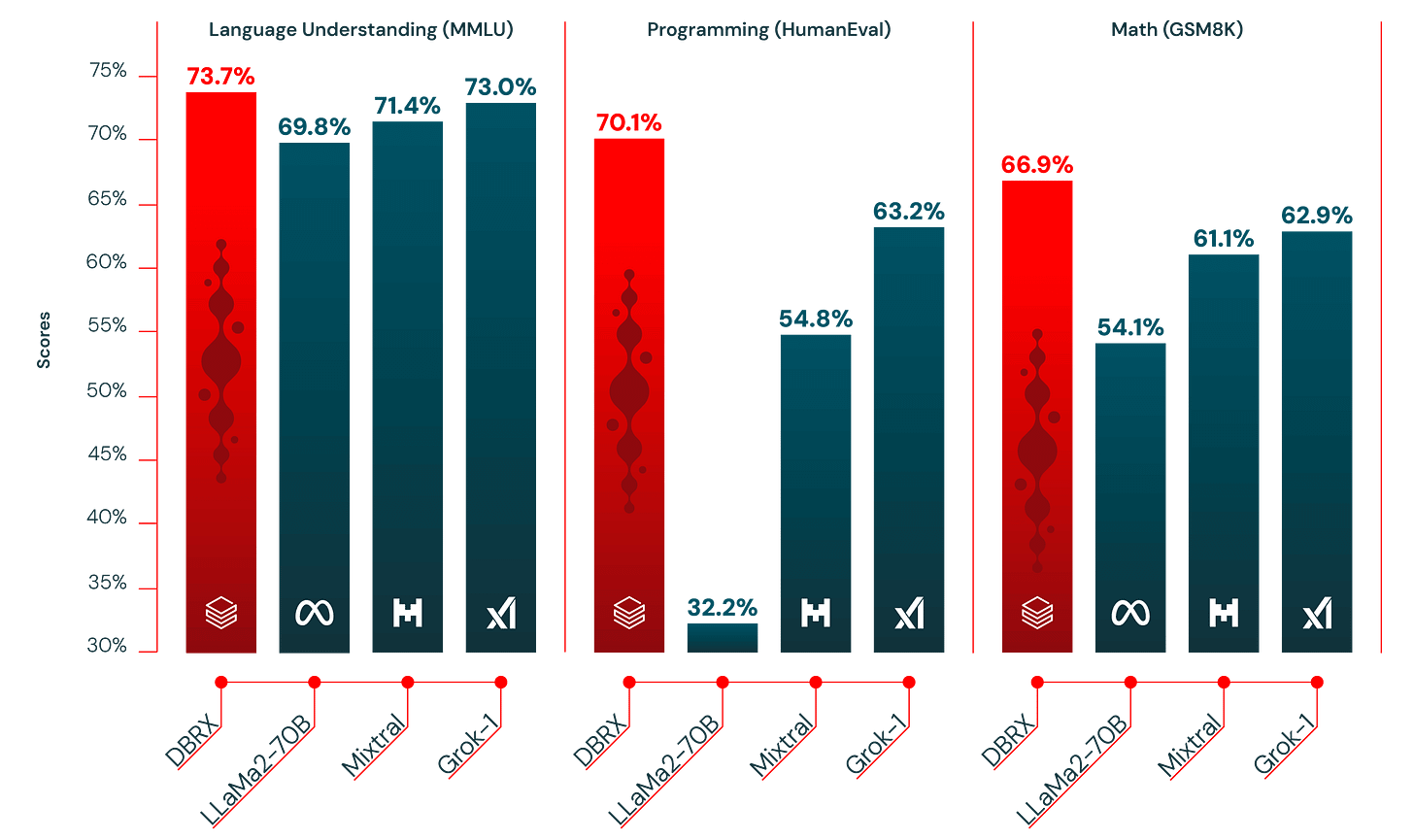

Databricks has announced a new open large language model (LLM) called DBRX that it says handily beats the Llama 2, Mistral, and Grok-1 open models in performance benchmarks. DBRX is a 132 billion parameter mixture-of-experts LLM trained on 12 trillion data tokens.

The benchmark data provided show DBRX marginally beating Llama 2, Mixtral 8x7B, and Grok-1 in the MMLU “language understanding” benchmark. However, the performance gaps in coding (HumanEval) and math (GSM8K) benchmark categories are wider. Databricks’ Mosaic research team says in a blog post:

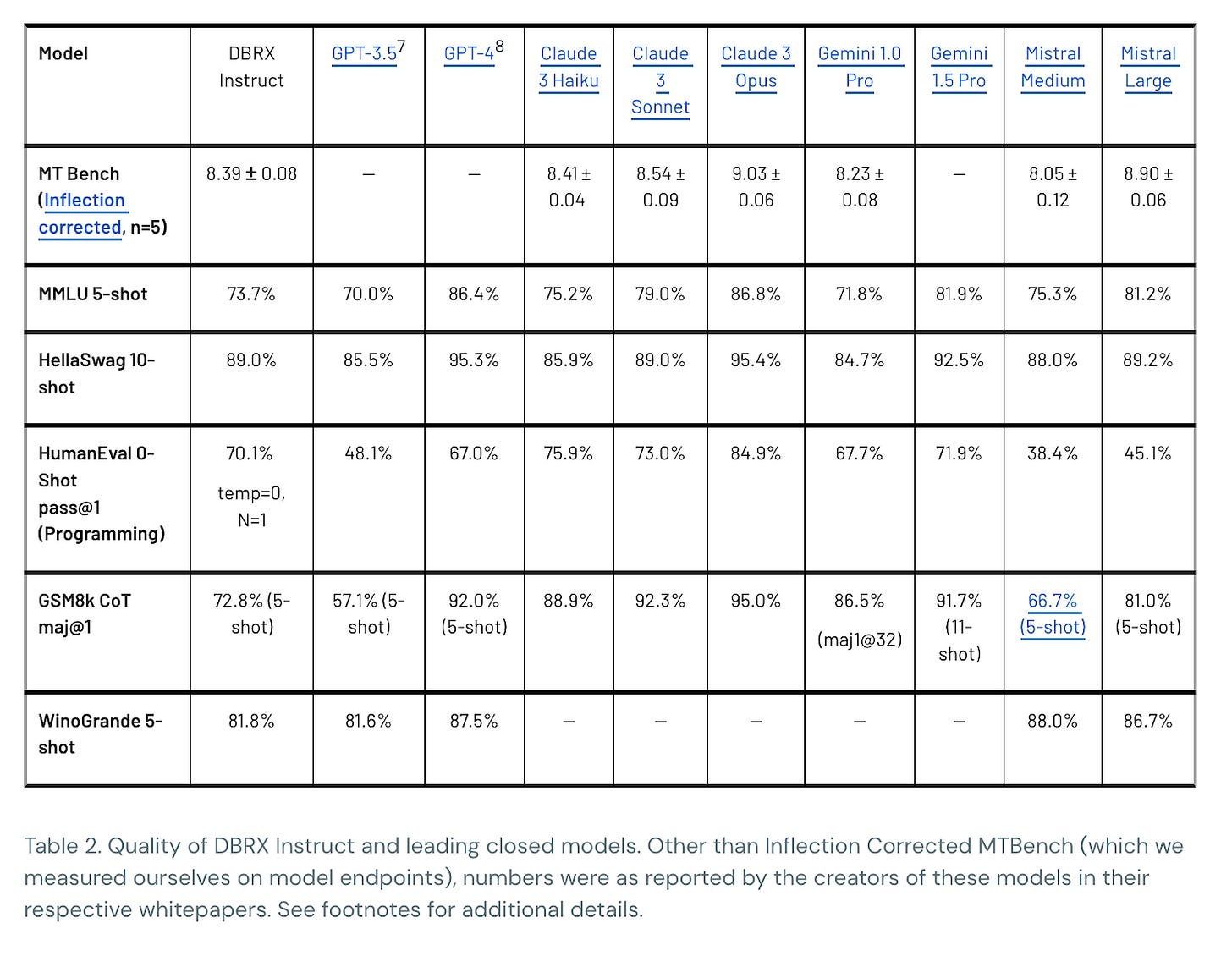

According to our measurements, [DBRX] surpasses GPT-3.5, and it is competitive with Gemini 1.0 Pro. It is an especially capable code model, surpassing specialized models like CodeLLaMA-70B on programming, in addition to its strength as a general-purpose LLM.

…

DBRX is a transformer-based decoder-only large language model (LLM) that was trained using next-token prediction. It uses a fine-grained mixture-of-experts (MoE) architecture with 132B total parameters of which 36B parameters are active on any input. It was pre-trained on 12T tokens of text and code data. Compared to other open MoE models like Mixtral and Grok-1, DBRX is fine-grained, meaning it uses a larger number of smaller experts. DBRX has 16 experts and chooses 4, while Mixtral and Grok-1 have 8 experts and choose 2. This provides 65x more possible combinations of experts and we found that this improves model quality…

DBRX was pretrained on 12T tokens of carefully curated data and a maximum context length of 32k tokens. We estimate that this data is at least 2x better token-for-token than the data we used to pretrain the MPT family of models.
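
The "65x more possible combinations" figure in the quoted passage can be checked with simple combinatorics. The sketch below assumes the claim refers to the number of distinct expert subsets a router can select per token (order ignored); the function name `expert_combinations` is hypothetical and used only for illustration. It also computes the share of parameters active per input from the 36B and 132B figures quoted above.

```python
from math import comb


def expert_combinations(num_experts: int, active_experts: int) -> int:
    """Number of distinct expert subsets of size `active_experts`
    that can be chosen from `num_experts` (order does not matter)."""
    return comb(num_experts, active_experts)


# Figures taken from the quoted Databricks passage.
dbrx_combos = expert_combinations(16, 4)     # DBRX: 16 experts, 4 chosen
mixtral_combos = expert_combinations(8, 2)   # Mixtral / Grok-1: 8 experts, 2 chosen

ratio = dbrx_combos / mixtral_combos

# Share of total parameters active on any single input (36B of 132B).
active_share = 36 / 132

print(f"DBRX subsets: {dbrx_combos}")
print(f"Mixtral/Grok-1 subsets: {mixtral_combos}")
print(f"Ratio: {ratio:.0f}x")
print(f"Active parameter share: {active_share:.1%}")
```

Under that assumption, 1,820 possible subsets divided by 28 gives exactly 65, which matches the quoted figure, and roughly 27% of DBRX's parameters are active for any given input.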

This is not Databricks' first LLM. In June 2023, the company paid $1.3 billion to acquire MosaicML, the creator of the MPT open-source models that have regularly topped Hugging Face’s Open LLM Leaderboard. The Mosaic team created DBRX.

Taking on Open Models

DBRX looks like an upgrade from the earlier MPT models. The company released data that show it ahead of Mistral Instruct, Mixtral Base, Llama 2 70B Chat, Llama 2 70B Base, and Grok-1 in four of the six benchmarks used to rank models on the Open LLM Leaderboard. It ranked second in the other two benchmarks.

While Synthedia has been critical of many foundation model developers for cherry-picking their results from a shifting list of public benchmarks, Databricks is following the standard set by Hugging Face. That is a welcome change. It also provides figures on additional benchmarks, including what it calls Gauntlet v0.3. The company indicated this benchmark is a compilation of 30 “diverse tasks.”

Taking on Proprietary Models

The bigger challenge is taking on the leading proprietary foundation models. Data show that DBRX is as strong as or stronger than the second-tier models, such as GPT-3.5 and Gemini 1.0 Pro. However, it trails the frontier models, such as GPT-4 and Claude 3 Opus. This is generally what open model developers target: capable models that fit in the “good enough” category but carry the added benefit of favorable economics.

Yet Another MoE

DBRX is a mixture-of-experts (MoE) foundation model, similar to recent introductions such as Mixtral from Mistral, Grok-1, and Gemini. These all follow in the footsteps of GPT-4, reportedly a 1.8T parameter model, which has stood as the frontier LLM industry benchmark for the past year.

The MoE approach is another indication that the industry has moved squarely into the model architecture phase of LLM improvement gains. Parameter counts represented the first phase. NeMo Megatron from NVIDIA and Microsoft was among the first to show diminishing marginal returns from adding more parameters.

This was followed over the last 18 months by a sharp rise in the size of training datasets. More data indeed provides superior performance even for models with lower parameter counts. As those figures rose into the trillions and surpassed 10 trillion tokens, architectures based on MoE and multimodality started to emerge as driving the next step up in performance. OpenAI has maintained its lead with GPT-4 largely because it made each of these shifts earlier than its competitors.

Databricks’ announcement also points to the emerging phase of focus for foundation model developers: data quality. The “carefully curated” dataset mentioned by the Mosaic team reflects a shift that OpenAI’s Sam Altman mentioned on the Lex Fridman podcast. Altman talked about data organization, data collection, and data cleaning as among the many elements that drive performance. It is worth noting that data management is squarely within Databricks’ area of competency.

Databricks Open License

Once again, we return to the difference between open and open-source licenses. DBRX is not open-source. Instead, it carries an open license that permits commercial and private use but is more restrictive than traditional open-source licenses. One of those restrictions is conformity with Databricks’ acceptable use policy.

Conformance with a company’s acceptable use policy is a reasonable request for a model developer to make and will not be a problem for most of the users that adopt the model. However, given that there are comparable or better models, such as Grok-1, which carries a true open-source Apache 2.0 license, is it really worth the risk of using a model with a restrictive license?

One other amusing (or maybe practical) restriction is what I call the TikTok exclusion. The license terms state:

If, on the DBRX version release date, the monthly active users of the products or services made available by or for Licensee, or Licensee’s affiliates, is greater than 700 million monthly active users in the preceding calendar month, you must request a license from Databricks, which we may grant to you in our sole discretion.

Meta includes a similar clause in its Llama 2 license. Essentially, the open model license developers do not want the social media giants to use their LLMs without seeking permission and likely committing to commercial terms.

Databricks Strategy

Why does Databricks offer LLMs? The answer is simple. Databricks provides data management products, while LLMs employ a lot of data for training, access data in inference operations, and generate data. It is logical to extend data management features to capabilities that consume and generate a lot of data.

Of course, the company could simply re-offer LLMs from other model developers. The key value proposition is that companies can own and control their data while doing the same for their generative AI models. It may be convenient for current customers to use a Databricks model, but it is not required. Regardless, generative AI is creating more of a need for data lakes and new data to feed information into them. All in all, generative AI is a very positive development for Databricks.
