New Report - Deepfake and Voice Clone Consumer Sentiment and Experience

Nearly 80 pages and over 50 charts on awareness, encounters, and concerns

Synthedia, Voicebot.ai, and Pindrop just completed a new report based on a survey of over 2,000 U.S. adults. The rise of deepfakes and voice clones has delivered realism, entertainment value, and everyday humor. However, the technology also has a downside. Vijay Balasubramaniyan, CEO and co-founder of Pindrop, commented on the technology's rising quality, saying:

While many fans of Star Wars, America’s Got Talent, online games, and countless humorous YouTube videos have enjoyed the emergence of voice clones and deepfakes, there is also a rising use of these technologies in fraud, disinformation, and harm to personal reputations. The technologies are so good, and we are inundated with so much digital media that people now struggle to differentiate the real from the fake. A Pindrop study showed that people can identify a deepfake with just 57% accuracy. That is only 7% better than a coin toss.

Consumers have a conflicted view of the technology. They report a high level of concern and a slightly net-negative sentiment. However, the most concerned consumers also expressed the most positive sentiment.

The Deepfake and Voice Clone Consumer Sentiment Report 2023 is by far the most comprehensive review to date of consumers' experiences with and feelings toward the technology. It includes over 50 charts and nearly 80 pages of analysis and resources. It also breaks out eight real-world deepfake and voice clone examples that represent both positive and negative uses of the technology. The full report is available for download.

High Deepfake and Voice Clone Awareness

You may be surprised to learn that over half of U.S. consumers are aware of deepfakes (54.6%), and awareness is even higher for voice clones (63.6%). Voice clone awareness is higher even though voice clones are a subset of the deepfake category. We believe this is partly driven by broad familiarity with the word "clone" as a modifier. "Deepfake," by contrast, is a niche portmanteau that combines the terms "deep learning" and "fake" and may not be as easy to recall.

The top four channels for deepfake and voice clone exposure were all social media sites: YouTube, TikTok, Instagram, and Facebook. Traditional media sources followed, with video games and other channels further down the list.

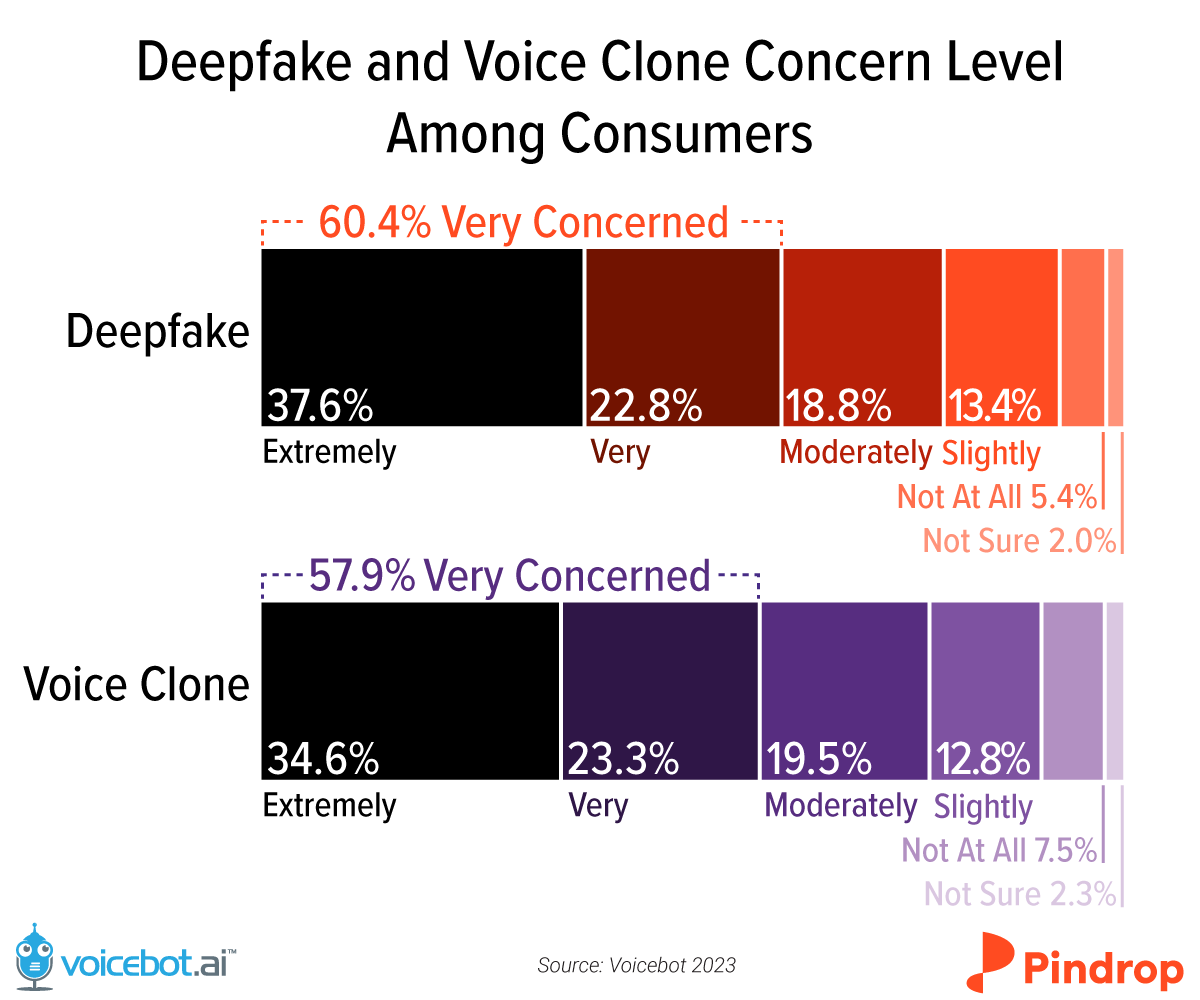

Deepfake Concern is High

Over 90% of U.S. adults harbor at least some concern over deepfakes and voice clones. The share who are "very" or "extremely" concerned is slightly higher for deepfakes (60.4%) than for voice clones (57.9%). Either way, both levels are very high.

The technologies are also polarizing. Eighty-seven percent of consumers expressed either positive or negative sentiment toward deepfakes, while only about 12% were neutral. The most common responses were "extremely positive" and "extremely negative," each at about 22%.

A similar pattern emerged for voice clones. Eighty-three percent of consumers were in the positive or negative camp, and the two extremes were once again the leading responses, though sentiment toward voice clones skews more negative. Net negative sentiment was about 10% for deepfakes but nearly 15% for voice clones.

Concerns are High Across Industries

By a significant margin, consumers are most concerned about the fraudulent use of deepfakes and voice clones in banking. Next comes a cluster of areas within a six-percentage-point spread: politics and government, disinformation in the news media, healthcare, and insurance. Concern was far lower for other industries.

Digging deeper, we found that consumers were most confident that banks, insurance companies, and healthcare providers were taking steps to protect their customers from inappropriate use of these technologies. In fact, only 31% of consumers expressed low confidence in them. This confidence may be misplaced, given how new detection technologies are.

Consumers expressed more concern about the news media and social media, where the share reporting low confidence ranged from 39% to 45%.

Concern also rises with income: the higher a consumer's income, the more likely they are to express concern. Higher-income consumers are also more likely to be confident that their providers in financial services, healthcare, and media are taking steps to address the growing problem. That may become an important incentive for enterprise adoption of deepfake and voice clone detection solutions. There is very likely a mismatch today between the protections these companies have actually implemented and the protections their customers believe are in place.

What Consumer Experience and Sentiment Tell Us

These technologies are neither good nor bad; it all depends on how they are applied. Easier, lower-cost access is accelerating adoption, particularly in media but also for personal use. We estimate that over 11 million people know someone who has had a deepfake or voice clone made without their consent. We have also seen and read about many examples of scams that employ deepfakes and voice clones. Such incidents are very likely to rise sharply over the next year.

Enterprises will be forced to adopt new detection capabilities to reduce fraud and identity theft. There may also be a growing market for products that help consumers protect themselves.

At the same time, social media creators, gamers, game makers, and Hollywood will all double down on deepfakes and voice clones. The technology is too compelling to ignore, for good or for bad.