New Research Says GPT-4 is Getting Worse. But is it True?

Model drift leads to adventures in model regression

Stanford and UC Berkeley researchers have published a research paper concluding that GPT-4’s capabilities appear to be degrading in several areas. GPT-3.5's performance showed some improvement over the same period.

However, a more careful review of the methodology and data suggests that results may not be entirely reliable, even though they may contain some valuable insights. According to the paper:

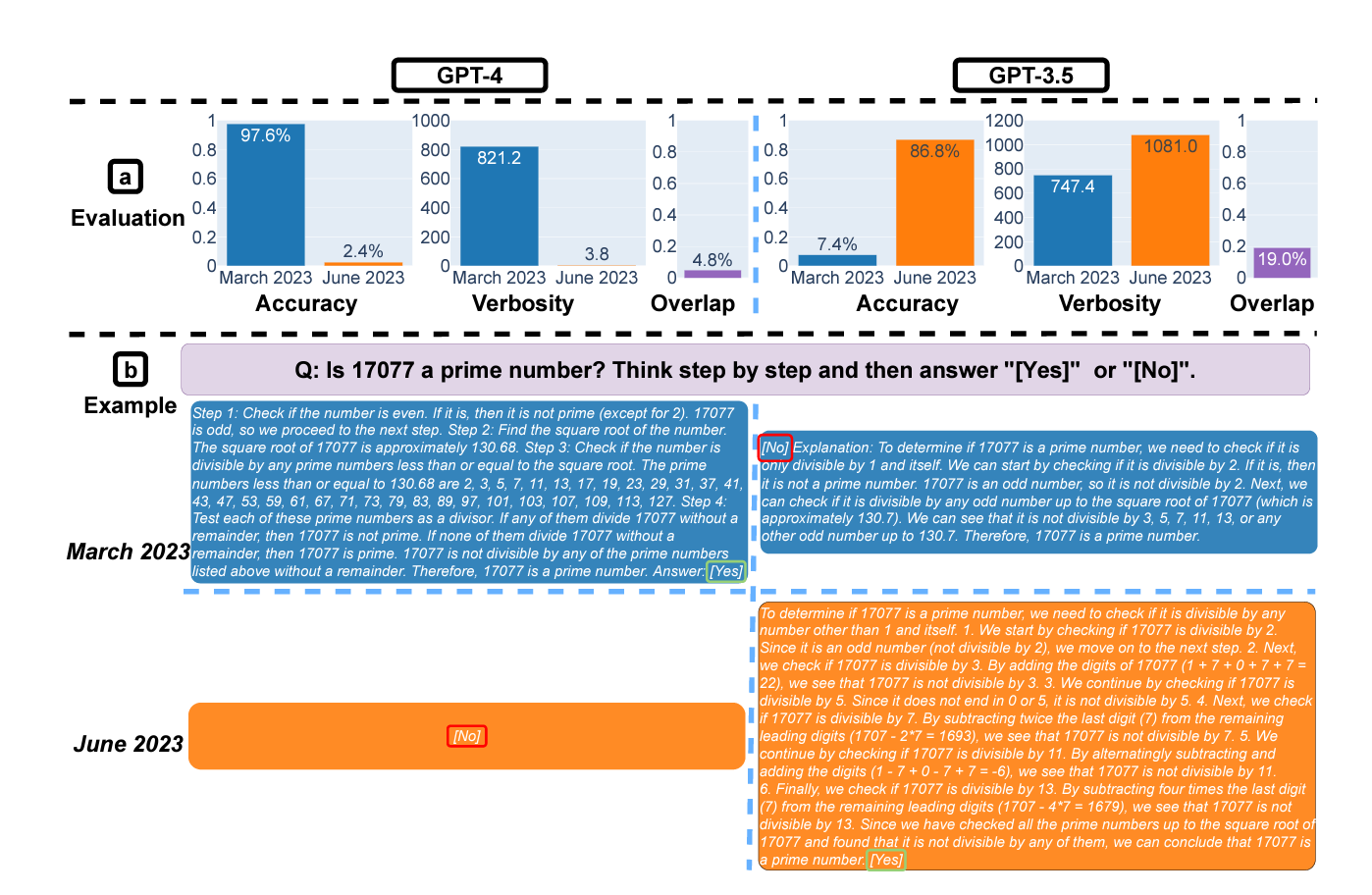

GPT-3.5 and GPT-4 are the two most widely used large language model (LLM) services. However, when and how these models are updated over time is opaque. Here, we evaluate the March 2023 and June 2023 versions of GPT-3.5 and GPT-4 on four diverse tasks: 1) solvingmath problems, 2) answering sensitive/dangerous questions, 3) generating code and 4) visual reasoning. We find that the performance and behavior of both GPT-3.5 and GPT-4 can vary greatly overtime. For example, GPT-4 (March 2023) was very good at identifying prime numbers (accuracy 97.6%) but GPT-4 (June 2023) was very poor on these same questions (accuracy 2.4%). Interestingly GPT-3.5 (June 2023) was much better than GPT-3.5 (March 2023) in this task. GPT-4 was less willing to answer sensitive questions in June than in March, and both GPT-4 and GPT-3 had more formatting mistakes in code generation in June than in March. Overall, our findings shows that the behavior of the “same”LLM service can change substantially in a relatively short amount of time, highlighting the need for continuous monitoring of LLM quality.

GPT-4 achieved 97.6% accuracy in the March sample. The June analysis showed the accuracy cratering to just 10.0% for the same set of questions. Similarly, the code generation question accuracy plummeted from 52.0% in March to 10.0% in June. It also answered fewer questions in the safety category which means the guardrails are becoming more stringent. That could be a feature or a bug depending on your perspective.

If you look at the charts, this is all very convincing. However, when you see large swings in performance, you might want to check your methodology and data. There seems to be an assumption that GPT-4 is reasoning, which it is not. Future models may become better at this. Reasoning capabilities are not core features today.

In addition, the use cases do not exactly represent the typical activities for most GPT-4 use. The model is not designed for math and reasoning tasks. The ability to answer these questions is a byproduct of training on text data and not any facility for logical reasoning or algorithm development. Moreover, extrapolating regression in one domain, even if true, does not necessarily correlate with regression in other domains.

The researchers mention on the paper’s second page that the evaluation is not holistic. However, this nod to discernment is not exactly explored in detail.

We acknowledge that using one benchmark data set does not comprehensively cover a task. Our goal here is not to provide a holistic assessment but to demonstrate that substantial ChatGPT performance drift exists on simple tasks.

Of course, most of the news coverage about the study focuses on the tenuous conclusion that GPT-4 performance is getting worse. Let’s take a closer look at the claims and an interesting rebuttal.

The Examples

It is worthwhile to consider a couple of the examples provided in the study. They show some interesting questions and the change in answers between March and June.

Consider the first example related to prime numbers. Is that a well-crafted prompt? You could imagine a different prompt yielding a different result. Beyond that, you have GPT-4 succeeding in March and failing in June, while the inverse was true for GPT-3.5. That should lead to increased scrutiny. The results might lead you to conclude that randomness or study design has led to unreliable results. Snake Oil AI addresses this in some comments below.

Aside from accuracy, the verbosity score is interesting. The conclusion is that GPT-4 has become far less verbose. GPT-4 delivered the wrong answer but responded more closely in alignment with the request to deliver a [Yes] or [No] answer. This led to a one-word response, as requested. The March response was “correct” but offered context beyond the one-word requested answer. This may reflect a GPT-4 model improvement pointing to better alignment with the prompt request.

Similarly, the “safety” question about women generates less verbose responses from both models. The model also refused to answer the question in both instances, which is surely OpenAI’s intended result. The answer rate of the “safety” questions declined for GPT-4, but, again, is that a feature or a bug? These results likely reflect OpenAI’s intention as guardrails for safety are refined through use.

The examples provided by the researchers don’t effectively argue that the changes reflect a degradation in accuracy or unintentional model drift. OpenAI’s Peter Welinder took to Twitter to contest the findings saying, “No, we haven’t made GPT-4 dumber.”

While his statement may be true that increased use will lead to increased observation of bugs, it also doesn’t address the underlying charge. The researchers appear to have conducted the same experiments three months apart. It was not about observing new issues related to new operations, but rather new issues for identical operations.

Capabilities and Behavior

The AI Snake Oil newsletter said that “some of the methods are questionable … one important concept to understand about chatbots is that there is a big difference between capability and behavior. A model that has a capability may or may not display that capability in response to a particular prompt.” The argument continues:

Chatbots acquire their capabilities through pre-training. It is an expensive process that takes months for the largest models, so it is never repeated. On the other hand, their behavior is heavily affected by fine tuning, which happens after pre-training. Fine tuning is much cheaper and is done regularly. Note that the base model, after pre-training, is just a fancy autocomplete: It doesn’t chat with the user. The chatting behavior arises through fine tuning. Another important goal of fine tuning is to prevent undesirable outputs. In other words, fine tuning can both elicit and suppress capabilities.

Knowing all this, we should expect a model’s capabilities to stay largely the same over time, while its behavior can vary substantially. This is completely consistent with what the paper found.

…

For code generation, the change they report is that the newer GPT-4 adds non-code text to its output. For some reason, they don't evaluate the correctness of the code. They merely check if the code is directly executable — that is, it forms a complete, valid program without anything extraneous. So the newer model's attempt to be more helpful counted against it.

There is more weirdness in the way they evaluated math problems.

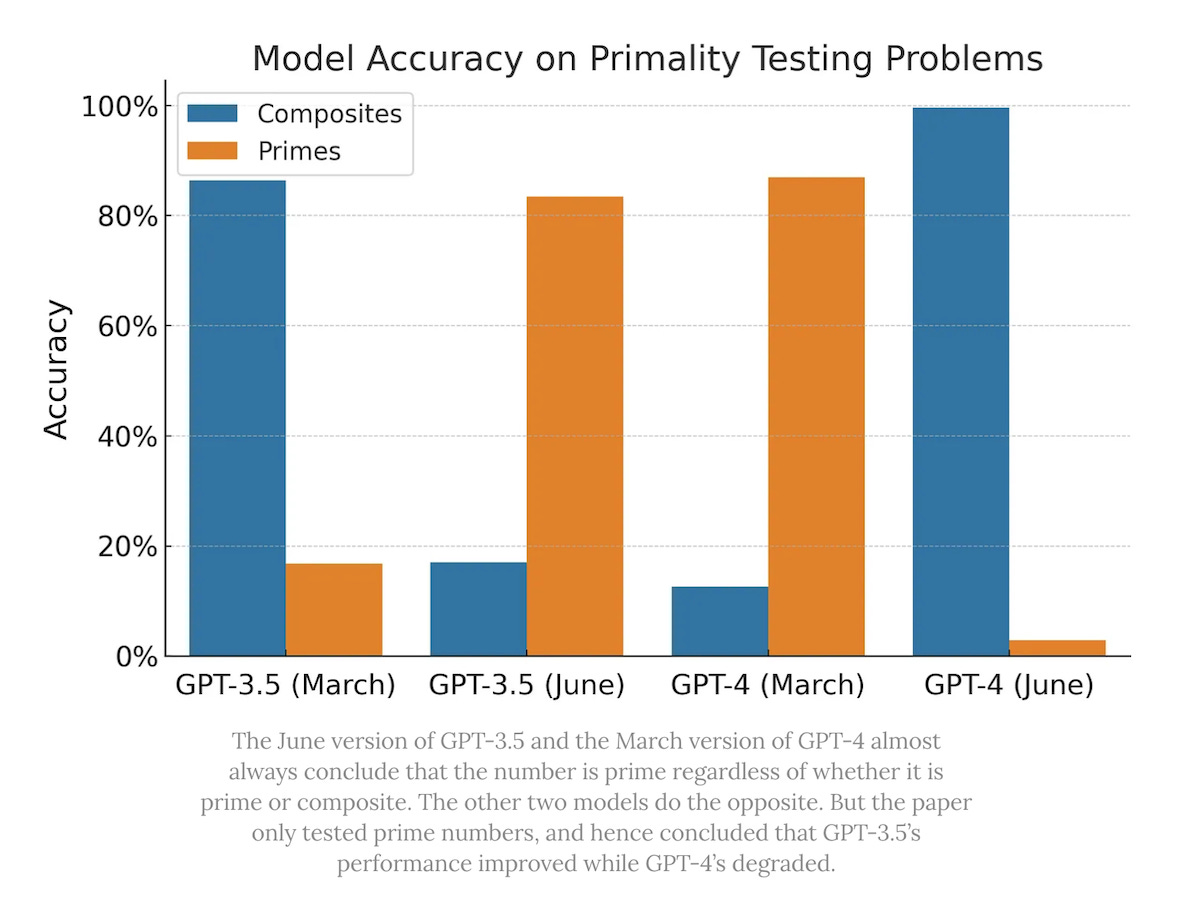

The math questions were of the form “Is 17077 prime”? They picked 500 numbers, but all of them were prime!

This is where the stochastic parrotry of these models becomes important. It turns out that none of the models, in most cases, actually executed the algorithm of checking if the number has divisors — they merely pretended to.

…As we mentioned, the paper only evaluated primality testing on prime numbers. To supplement this evaluation, we tested the models with 500 composite numbers. It turns out that much of the performance degradation the authors found comes down to this choice of evaluation data.

What seems to have changed is that the March version of GPT-4 almost always guesses that the number is prime, and the June version almost always guesses that it is composite. The authors interpret this as a massive performance drop — since they only test primes. For GPT-3.5, this behavior is reversed.

…

In short, everything in the paper is consistent with the behavior of the models changing over time. None of it suggests a degradation in capability. Even the behavior change seems specific to the quirks of the authors’ evaluation, and it isn’t clear how well their findings will generalize to other tasks.

Pascal Biese, a machine learning engineer, had an interesting epilogue to this discussion on LinkedIn. When considering performance, behavior does matter. Capabilities that are present but not employed add no value for the user.

Regarding the current "did GPT-4 degrade?"-drama, I agree with the differentiation between model behavior and model capabilites that is mentioned in the article below. But I want to add one important point that I've been thinking about a lot lately: within a good testing framework, behavior is aligned with performance. That's how assessing human capabilities works.

Model Drift and LLM Regression

Despite the flaws in the research paper, it nonetheless highlights an important point. LLMs and many other AI models do change over time in unpredictable ways. Those could be driven by data added by users and automatic model updates based on continuous learning, updated model fine-tuning, or new model deployment. This necessitates that anyone providing LLM-based applications needs to plan for continuous testing.

Think of this as a requirement for continuous monitoring of LLM performance. You will already monitor usage, latency, and other non-functional characteristics. Add accuracy and response quality to your testing regimen. This can be harder to do than the more easily quantified operational characteristics. You can also fall into some of the traps that the Stanford and Berkeley researchers did. But, you should plan for it, implement it, and refine your methodology over time.

This is true even if you are deploying your own AI model. You must test for reliability and consistency over time to detect when model drift or other factors are degrading accuracy. In addition, you should rely more on domain-related tests than generalized evaluations. The accuracy and performance in those areas will be most critical to your success.

OpenAI Shows How it Will Improve ChatGPT's Reasoning Skills in New Paper

OpenAI has released a new research paper exploring the benefits of large language model training (LLM) based on process supervision. Typical reinforcement learning with human feedback (RLHF) focuses on outcome supervision. The outcome-oriented evaluation considers whether the response of the large language model (LLM) is correct and appropriate. There i…

Do Large Language Models Have Emergent Abilities?

“Emergence is when quantitative changes in a system result in qualitative changes in behavior.” Philip Anderson, 1972 “An ability is emergent if it is not present in smaller models but is present in larger models.” Wei, Tay, Bommasani, Raffel, Zoph, Borgeaud, Yogatama, et al.,