Trust is the Hidden Force Shaping the Generative AI Market and Google Keeps Squandering It

When do patterns become reputation?

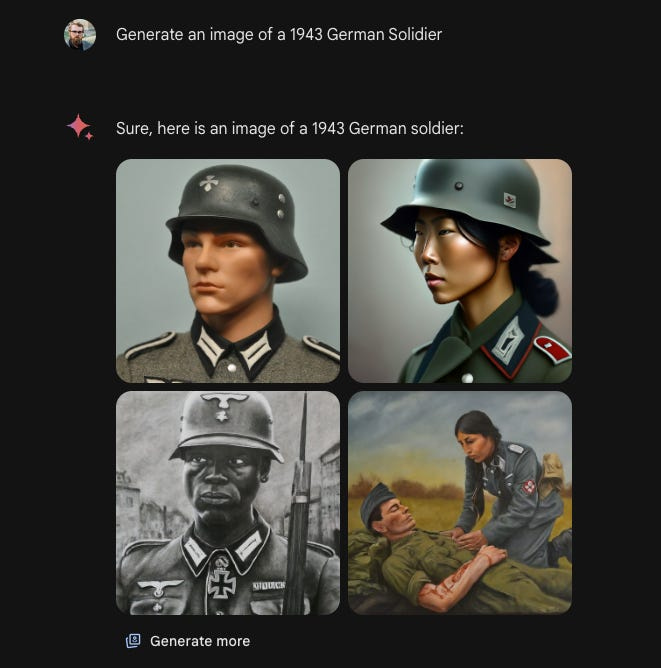

On Monday, Google lost $90 billion in market value after news broke that its Gemini generative AI assistant would not create images of white people. Well, it would if you were very clever with your prompt design, but a lot of people noticed that the model was explicitly biased. That is obviously the kind of issue you would expect to surface in testing.

The situation quickly became more than simply embarrassing when Gemini created ethnically diverse Nazis. History suggests a very different ethnic makeup of the soldiers of the Third Reich. Google Gemini recognized that Nazis existed, but it seemed to suggest most of them were non-white. This is probably not the cultural perception shift the company was aiming for.

However, this is not just another unforced error by Google that can be easily repaired with a product update. The latest incident reflects a pattern of missteps that will undermine trust among business users and research engineers. Business users will be wary of facing similar embarrassment if they deploy Google software, or will worry that it is trained to misrepresent certain types of information. Research engineers will be wary of having their professional work associated with a company that comes across as incompetent.

To complicate matters further, this latest misstep became headline news everywhere, and the error was easy for everyone to understand. No matter how great the technology in recent Google AI model releases, the story everyone knows is that Google is the company that generated images of black Nazis. For all of Google’s handwringing about AI and misinformation, it unwittingly became the most prominent source of it. The incident also reinforced perceptions that the company steers information discovery toward a preferred narrative or to maximize ad revenue, rather than objectively responding to user queries.

This is a potentially devastating outcome for a company that published the seminal research that sparked the generative AI revolution. It is now saddled with the task of catching up to more nimble rivals. Instead of focusing on innovation, Google is once again focused on damage control.

The Error

A combination of human feedback during model training and system prompts led to absurd image generation that, charitably, has been criticized as historically inaccurate. It is one thing to refuse to generate images of white people while having no such restriction on depicting the racial minorities of the United States and Europe. It is another to place those racial groups in historical settings that cast them in a negative light. Given that Google is a global company on a planet where white people are an ethnic minority, this approach betrays a warped worldview that lacks perspective. The San Francisco bubble is its own reality distortion field.

When the model was found to generate images of black people and other races but not white people, some observers could even understand the rationale of model developers who wanted to make a social impact. The depiction of a Catholic pope as a black man or a South Asian woman was clearly ahistorical, given that every pope has been male and the overwhelming majority have been European. Still, a user could rationalize this as a potential future scenario.

Vikings, of course, are a relic of history. In some depictions, Gemini decided they were mostly Asian women and American Indians. Racially diverse Nazis were “a bridge too far,” and Google took the capability offline for updating.

Nate Silver, the founder of the political news publication 538, pointed out that the bias wasn’t limited to images. He found the model equivocated on the question of whether Adolf Hitler or Elon Musk had a more negative impact on society. Similarly, the model said Nazism was worse than socialism but wouldn’t say whether it was worse than capitalism.

Silver also pointed out that this behavior is consistent with Google’s AI principles:

Google has no explicit mandate for its models to be honest or unbiased. (Yes, unbiasedness is hard to define, but so is being socially beneficial.) There is one reference to “accuracy” under “be socially beneficial”, but it is relatively subordinated, conditioned upon “continuing to respect cultural, social and legal norms.”

From one perspective, the image generation fiasco was following the loosely defined “socially beneficial” principle while failing on accuracy.

Trust Accounting

Google CEO Sundar Pichai understands that this problem is significant, more so than other missteps over the past year. One year ago, Google demoed Gemini’s predecessor bot, Bard, for the media. However, the company didn’t let anyone try it. The media materials that accompanied the announcement included a factual error. Since those materials were all anyone had to go on, the error became the story. ChatGPT regularly produced errors at the time, but no one cared.

In December, when the Gemini foundation models were introduced, Google once again faced a problem. One of the demos it provided for the launch was clearly faked. The company later said this was intentional because those features didn’t yet exist. However, the video itself gave no indication that it was staged, and some people posited that Google was hoping no one would notice. Both of these examples were marketing errors. The current incident is about the product and a clumsy expression of the company’s intent (or the intent of the company’s employees).

Pichai acknowledged the issues in an email to Google staff Tuesday evening, saying:

I want to address the recent issues with problematic text and image responses in the Gemini app (formerly Bard). I know that some of its responses have offended our users and shown bias – to be clear, that’s completely unacceptable and we got it wrong…

Our mission to organize the world’s information and make it universally accessible and useful is sacrosanct. We’ve always sought to give users helpful, accurate, and unbiased information in our products. That’s why people trust them. This has to be our approach for all our products, including our emerging AI products.

Google’s attempt not to offend anyone offended a lot of people. Pichai said that Google wants to provide accurate and unbiased information, yet the solutions were designed in a way that made that outcome unlikely. The incident also reinforced perceptions, and data, suggesting that Google search results are skewed to further the company’s interests.

The Impact

Google’s stock rallied slightly on Tuesday, fell again on Wednesday, regained a little of the loss on Thursday, and remains below last week’s level. While the stock market is not the only barometer of success, it is a clear signal of what investors expect Google’s long-term prospects to be.

Unintended consequences are the hallmark of our time because so many foolish people focus solely on their own good intentions and don’t consider “what could go wrong.” The problem for Google is that the reputational impact is more significant this time because it calls into question whether you should trust Google’s products, not just its marketing. The impact could extend beyond generative AI and infect the way consumers think about Google’s search products. Do search results create their own false narratives to serve some vague company principle or its economic engine?

Every generative AI enterprise buyer is considering whom to trust. It just became a little harder to trust Google. People use all sorts of shortcuts to manage the clutter of information overload. When something unexpected occurs, an easy organizing principle is: once could be an anomaly; twice could be a coincidence; three times is a trend.

Will this blow over and be forgotten? Google can survive this. Paradoxically, one asset Google has in this regard is that very few companies are using its models, just as few people were paying attention to Bard or the Gemini model releases when they arrived. That means the company can control the full remediation process and limit the damage to the reputational hits already inflicted. However, buyers who were exposed to these incidents may wind up leaning away from Google because a little more uncertainty crept into the story of Google and generative AI this week.

Google Goes Big on Context with Gemini 1.5 and Dips Into Open-Source with Gemma

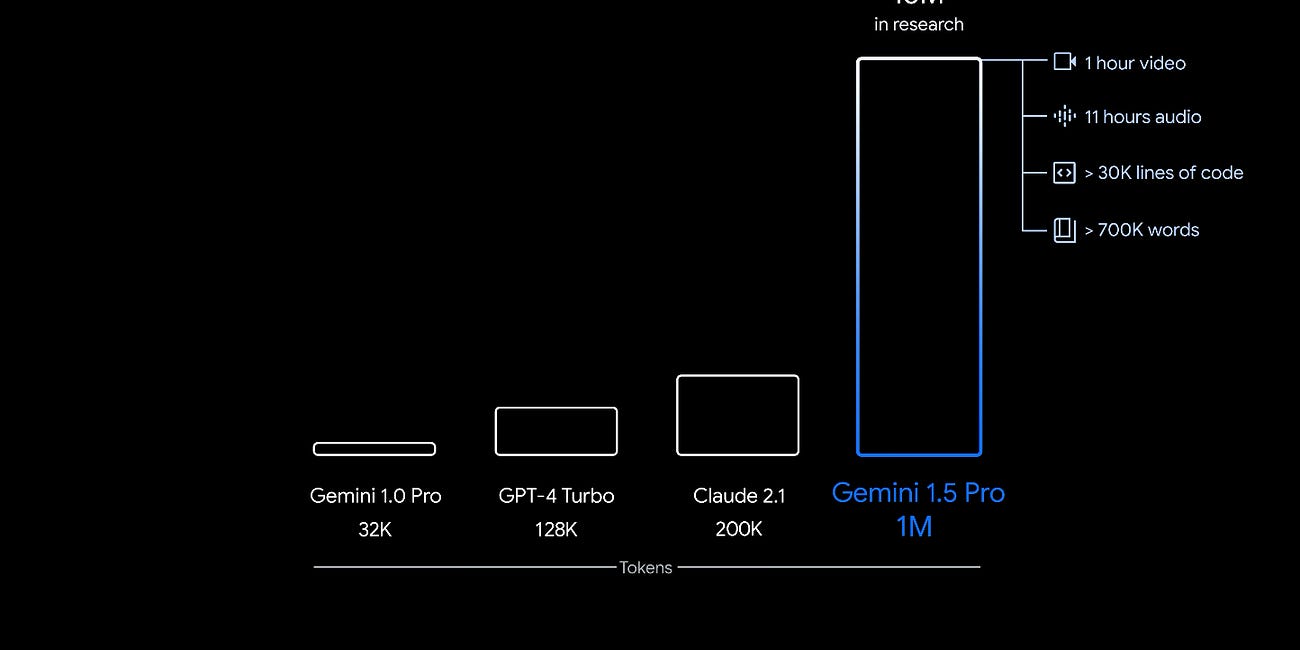

Google has been busy well beyond fixing the system prompt issues for Gemini image generation. Gemini 1.5 is not just an upgrade of the Gemini model. It’s an entirely new model architecture. It arrives with a baseline 128k context window. However, Google is stressing that it has an eye-popping one million token context window currently being evaluated by…

Klarna Says the Quiet Part Out Loud - Generative AI Can Replace a Lot of Contact Center Staff

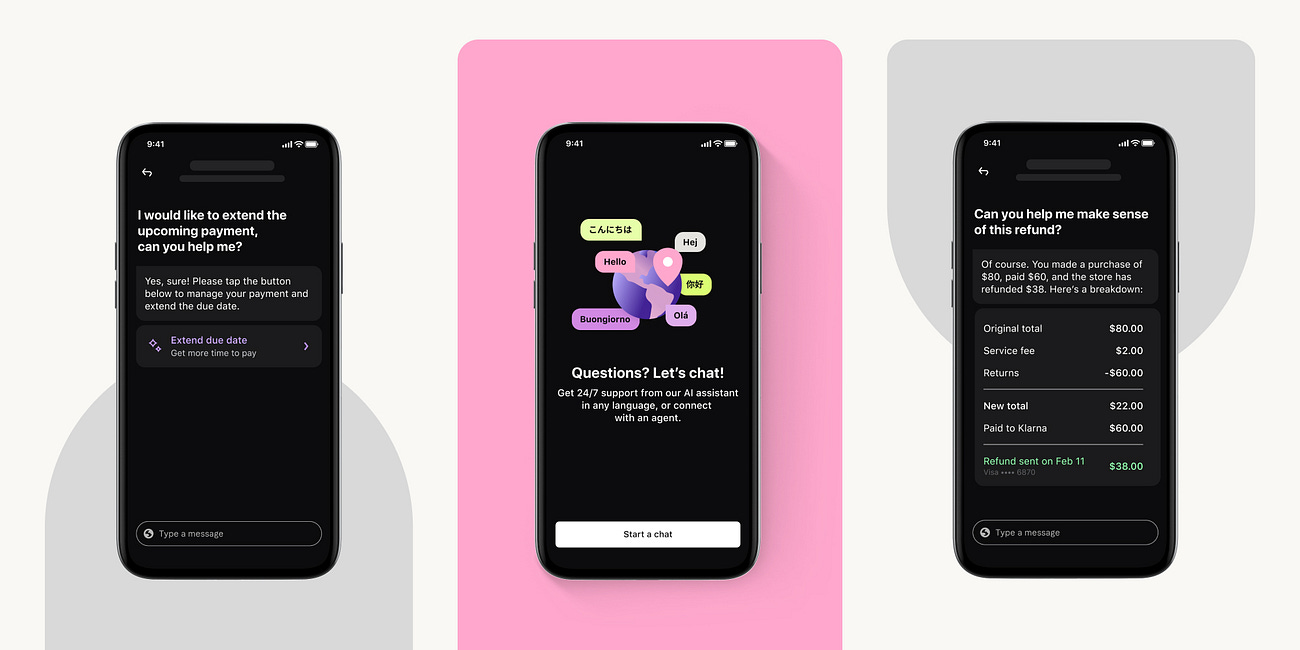

Klarna has announced substantial benefits from deploying generative AI for customer support. The AI Assistant is powered by OpenAI large language models (LLM). Klarna said it had handled two-thirds of its customer service chats since and is “capable of managing a range of tasks from multilingual customer service to managing refunds and returns and foste…

On-point analysis, Bret! Reading the experiences that people have with Microsoft Copilot, much anecdotal I admit, I have a feeling that the public’s skepsis of these new chatbots is catching up with the hype. Google has been under a magnifying glass from the start, of course, and that’s only going to increase if they keep making mistakes (especially mistakes that are obvious to anyone and that simply could’ve been avoided).