Amazon Reshuffles LLM Ecosystems with an [up to] $4B Investment in Anthropic

The strategic implications are far-reaching for AWS and the LLM market

Amazon will invest up to $4 billion in Anthropic and have a minority ownership position in the company.

Anthropic and Amazon announced a new partnership today that includes the former committing to running its primary training and inference workloads on AWS, and the latter investing “up to $4 billion,” for a minority stake in the company. There is a lot to unpack in this announcement, or should I say announcements. Anthropic published a blog post, and Amazon a press release. The key topic breakdowns include:

The news and its significance for Amazon

Impact on the cloud ecosystem battles around generative AI

Anthropic’s rapidly rising valuation and implication for LLM providers

Amazon’s Chip Gambit

While the specific details of the deal are not yet public, the statements from Amazon and Anthropic shed a lot of light on the objectives and some mechanics. Amazon’s announcement indicates what the company sees as most important.

Anthropic will use AWS Trainium and Inferentia chips to build, train, and deploy its future foundation models, benefitting from the price, performance, scale, and security of AWS. The two companies will also collaborate in the development of future Trainium and Inferentia technology.

AWS will become Anthropic’s primary cloud provider for mission critical workloads, including safety research and future foundation model development. Anthropic plans to run the majority of its workloads on AWS, further providing Anthropic with the advanced technology of the world’s leading cloud provider.

Anthropic makes a long-term commitment to provide AWS customers around the world with access to future generations of its foundation models via Amazon Bedrock, AWS’s fully managed service that provides secure access to the industry’s top foundation models. In addition, Anthropic will provide AWS customers with early access to unique features for model customization and fine-tuning capabilities.

Amazon will invest up to $4 billion in Anthropic and have a minority ownership position in the company.

Amazon developers and engineers will be able to build with Anthropic models via Amazon Bedrock so they can incorporate generative AI capabilities into their work, enhance existing applications, and create net-new customer experiences across Amazon’s businesses.

The first mention is Amazon’s Trainium and Inferentia deep learning ASICs. Having a leading large language model (LLM) provider use these chips is strategically important to Amazon. The chips are proprietary to AWS and are positioned as a cost-effective alternative to NVIDIA GPUs. However, there is strong market confidence in NVIDIA chips and little market knowledge of, or experience with, Amazon’s chips. Bringing Anthropic to this infrastructure, with its Claude 2 rival to ChatGPT, 100k token context window, and fast processing, could deliver instant credibility.

Generative AI is currently a chip-limited market. Demand is high, and the availability of NVIDIA A100s and H100s is limited. That results in high price points for acquiring the NVIDIA chips and high costs for running AI training and inference jobs. Having Anthropic as a reference case study may be enough to convince other foundation model developers, and enterprises deploying their own LLMs, to use AWS infrastructure. This is similar to how Google positions its TPU chips, though Google seems to be pushing them less aggressively than AWS and just signed a new deal to host an instance of NVIDIA’s DGX Cloud service.

If Amazon’s gambit with Anthropic works, it could draw more users to its infrastructure and reduce the number of NVIDIA chips required to serve customer demand. That will reduce its operating costs and potentially enable AWS to offer an additional price advantage for training and inference. In addition, because of the AWS alternative, lower demand for NVIDIA chips may help bring prices down for the top-end GPUs, which Amazon would also benefit from as it will still acquire them in large quantities.

In addition, Anthropic will become a chip-development collaboration partner with Amazon. That direct collaboration could be critical in building even more efficient ASICs optimized for deep learning workloads.

The Cloud Wars and LLM Proxy Battles

Another layer in this strategy is related to the cloud hyperscaler wars. AWS, Azure, and Google Cloud are competing fiercely for their share of the generative AI training and inference demand. The market share gained now is likely to be sticky. And you don’t want existing customers of your cloud believing they must dabble with a competing cloud provider to access generative AI solutions.

This is already happening to a degree with OpenAI’s current “semi-exclusive” agreement with Azure. Shortly after announcing Azure OpenAI Service, Microsoft reported over 4,500 customers for it. Users can still access OpenAI directly, but many enterprises prefer to use a cloud platform for ease of scaling and management, and the GPT foundation models are unavailable on AWS and Google Cloud.

AWS offers the Amazon-developed Titan LLM, but there is no indication that it has generated much user interest. In an August Synthedia post, I outlined Amazon’s three-layer generative AI strategy, which included:

AI-optimized Computing Infrastructure

Foundation Models

Applications

The computing infrastructure benefits are highlighted above. At the application layer, Amazon is building generative AI into its own products and expects third parties to build applications on AWS as well.

At the foundation model layer, Amazon has pursued “an everything else strategy.” That is, everything besides OpenAI’s GPT model family and Google’s in-house models. Azure offers access to Meta’s Llama 2 and TII’s Falcon open models in addition to OpenAI’s models, which are considered in-house since Microsoft owns a reported 49% of the company. Google offers multiple models managed through its Vertex AI model garden but has its own PaLM model and is working on a significant upgrade named Gemini.

If it turns out the proprietary OpenAI and Gemini models are superior to “everything else,” that could be problematic for Amazon. Anthropic is the most interesting of the independent foundation model makers today, given Claude 2, its large context window, and its strong performance. Google has already invested hundreds of millions of dollars into Anthropic. If Google took Anthropic off the market with another large investment, as Microsoft did with OpenAI, Amazon would have fewer options to pursue.

The announcements don’t say that Anthropic will be exclusive to AWS. However, Anthropic intends to run the majority of its workloads, including training and inference, on AWS infrastructure as its “primary provider.” Given that scenario, Google Cloud is not going to be pushing Anthropic use, despite pre-announcing availability during the August Cloud Next conference. Google will probably not suffer a lot from this strategic move by Amazon, but AWS should gain.

All three leading cloud hyperscalers now have a primary foundation model to offer. AWS is likely to be less overt than Azure and Google Cloud in pushing a “preferred model” and be viewed as more hospitable to the other model providers. However, it is hard to see anything other than a first-among-equals approach in the future.

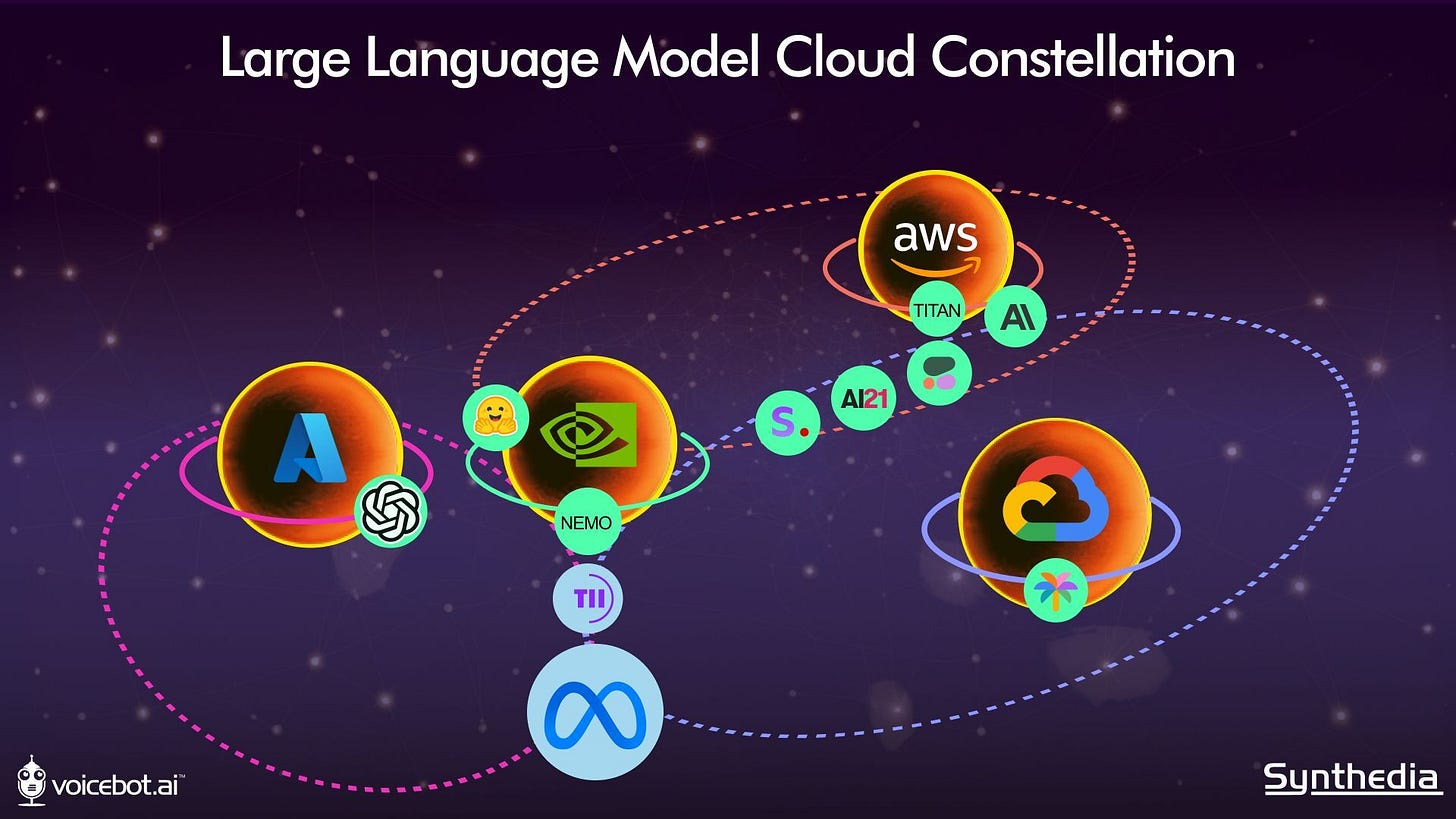

The image above provides the revised version of Synthedia’s LLM Cloud Constellation. You can see how quickly the market is evolving; Google was not working with NVIDIA’s DGX in the previous edition (it is now), and Anthropic’s orbit is getting pulled in more closely to AWS. Key changes since our June version also include the addition of Llama 2 and Falcon as leading “open model” options. An open model is not the same as open source. Anyone can use these models, but there are restrictions.

The key question for the other foundation model providers is whether they will remain floaters or get drawn in more closely to one of the cloud hyperscaler orbits.

Anthropic’s Rapidly Rising Valuation

Another interesting item from the announcements is the mention of “up to $4 billion” and “a minority ownership position in the company.” A minority position is less than 50% ownership, so if the full $4 billion buys less than half of the company, Anthropic’s implied valuation is more than $8 billion.
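The arithmetic behind that floor can be sketched in a few lines of Python. This is only an illustration: it assumes the full $4 billion is eventually invested, and the stake percentages are hypothetical, since neither company has disclosed the actual ownership share. The function name is my own.

```python
def implied_post_money_valuation(investment_usd: float, stake_fraction: float) -> float:
    """Post-money valuation implied by paying investment_usd for stake_fraction of a company."""
    if not 0 < stake_fraction <= 1:
        raise ValueError("stake_fraction must be in (0, 1]")
    return investment_usd / stake_fraction


# Assumption: the "up to $4 billion" ceiling is fully invested.
FULL_INVESTMENT = 4e9

# Hypothetical stake sizes; the real stake has not been disclosed.
for stake in (0.49, 0.33, 0.20, 0.10):
    valuation = implied_post_money_valuation(FULL_INVESTMENT, stake)
    print(f"{stake:.0%} stake -> ${valuation / 1e9:.1f}B post-money")
```

The smaller the stake Amazon receives for the same money, the higher the implied valuation, which is why $8 billion is a floor rather than an estimate.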

While OpenAI ultimately fetched a reported $27-$29 billion valuation from Microsoft, Cohere raised earlier this year at a $2 billion valuation and AI21 Labs at $1.4 billion. Those are also large valuations and intriguing companies (particularly AI21), but investors value Anthropic far higher today. That suggests it is considered the closest independent foundation model provider to OpenAI.

The “up to” modifier is also interesting. This suggests there are tranches for the investment based on milestone accomplishments for AWS or Anthropic. In addition, the investment almost certainly includes the contribution of no-cost or low-cost AWS computing, as opposed to all cash. The investment amount may be tallied from what AWS charges for similar services over time, with that running total converting into equity. If costs come way down, then equity would not accrue as quickly. It may also mean that the valuation at any given time is dependent on progress against Anthropic’s business plan.
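To make the cost point concrete, here is a rough sketch of how an in-kind investment might accrue if compute were counted at AWS’s going rate. Everything in it is hypothetical: the deal terms are not public, and the usage volume, hourly prices, and function name are invented purely for illustration.

```python
def accrued_investment(hours_per_year: float, price_per_hour_by_year: list[float], cap_usd: float) -> list[float]:
    """Cumulative investment booked each year when contributed compute is valued at that year's price."""
    total = 0.0
    history = []
    for price in price_per_hour_by_year:
        # Each year's compute counts at that year's price, up to the overall cap.
        total = min(cap_usd, total + hours_per_year * price)
        history.append(total)
    return history


CAP = 4e9        # the "up to $4 billion" ceiling
HOURS = 500e6    # hypothetical accelerator-hours consumed per year

flat_prices = accrued_investment(HOURS, [2.0, 2.0, 2.0], CAP)
falling_prices = accrued_investment(HOURS, [2.0, 1.5, 1.0], CAP)

print([f"${v / 1e9:.2f}B" for v in flat_prices])
print([f"${v / 1e9:.2f}B" for v in falling_prices])
```

Under these made-up numbers, the same usage books less investment when prices fall, so the equity tied to it would accrue more slowly.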

Regardless, this announcement is good news for Anthropic. It locked in a high valuation, a large amount of computing resources, and maybe some cash as well. It also added another tech giant champion to its team, which should help it gain trust among enterprise users and provide easy access to existing AWS customers. And it avoided the fate of becoming a floater where competition is likely to be more fierce for relevance and attention.

The deal also works for Amazon by giving its AI chips a prominent endorsement and guaranteed training and inference volume. And it ensures Anthropic will not simply become another asset that Google Cloud can use to compete for AI business. The market is coming into sharper view every week that passes.

Let me know what you think in the comments below. Was this a good move by Amazon? How about by Anthropic? What do you see as potential downsides of the deal?
