The AI Safety Salvos Against OpenAI Seem Like a Reputation Management Campaign

Keeping the doom alive.

We’ve heard this all before. AI doomers versus AI boomers. In early 2023, the doomers were ascendant. They generally promote the thesis that AI presents an existential threat. Whether or not it's imminent, the disaster will come so suddenly, they say, that we must act preemptively to save humanity.

AI doomers are often technology industry celebrities or hangers-on who like to be seen as close to the innovation curve. They are not monolithic, as they differ somewhat in how severe they perceive the threat to be. However, they come off as monolithic and “aligned” around activities such as “pausing” giant AI and ramping up government regulation.

Elon Musk, Geoffrey Hinton, Yoshua Bengio, Sam Harris, Gary Marcus, and many others are good for a frightening headline about impending doom on a regular basis. OpenAI co-founder Ilya Sutskever is at least an honorary member of this group. So are a couple of the former OpenAI board members who were ousted after they fired Sam Altman in November 2023. Some people used to put Sam Altman in this category, but the doomer purity clan has decided he was a boomer in disguise.

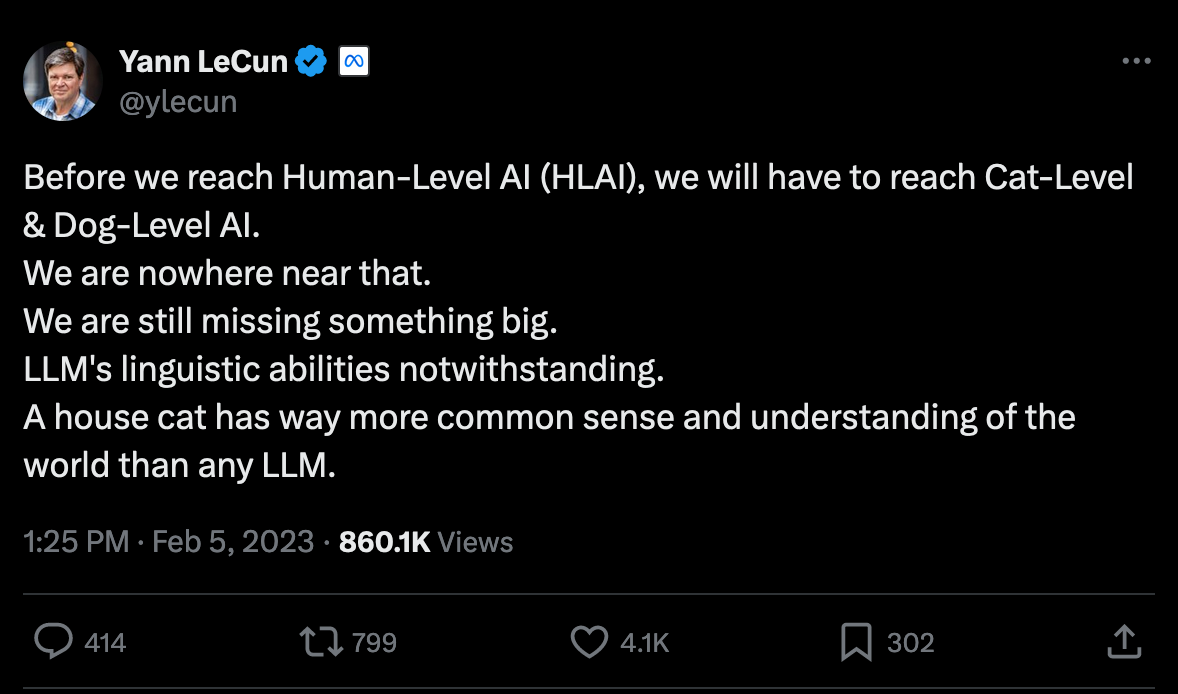

The clear leader of the boomer group seems to be Yann LeCun, who shared the 2018 Turing Award with Hinton and Bengio for their contributions to the AI field. However, I’m not sure this is even correct. He is the most outspoken critic of the doomer crowd, but he seems more anti-doomer than a true AI boomer. Microsoft’s Satya Nadella, AI legend Andrew Ng, elite venture capitalist Marc Andreessen, Jeff Bezos, and many venture-funded startup CEOs are generally the staunchest prophets of inevitable AI benefits.

Against this backdrop, we were treated to several stories over the past two weeks about OpenAI’s abandonment of AI safety as a principle. This created a vehicle for doomer lament, but frankly, these incidents seemed more like an opportunity for ousted and marginalized OpenAI employees to repair their reputation or to signal to fellow doomers that the fight was not over.

Helen Toner

Helen Toner became famous as one of the OpenAI board members who voted to oust Sam Altman as CEO in November 2023. As the saga unfolded, her background attracted heightened scrutiny, particularly her limited professional experience and a paper critical of OpenAI that she co-wrote while serving on the board. She suggested in a recent podcast interview that she was unhappy about Sam’s reaction:

[After] the paper came out, Sam started lying to other board members in order to try and push me off the board.

Another grievance was that Altman didn’t inform the board before releasing ChatGPT.

When ChatGPT came out in November 2022, the board was not informed in advance. We learned about ChatGPT on Twitter.

Toner also discussed the board’s concerns about Altman’s control over the OpenAI Startup Fund and what she considered a pattern of deceit that undermined the board’s trust in the leadership’s approach to AI safety. She also referenced a complaint about a “toxic atmosphere” at OpenAI.

In retrospect, it seems hard for Toner to justify the drastic decision. She says the board came to a conclusion based on a series of infractions that individually may have been innocuous but together raised concerns.

However, these remarks make it harder to rebut the critiques that she was simply unqualified for the responsibility. She accuses Sam of lying about her and working to remove her from the board. This suggests she had a personal interest in removing Altman from the board before he succeeded in removing her.

Toner also seems miffed that the management team didn’t tell the board about a beta product release. Sure, ChatGPT became a cultural phenomenon, but there was no way to know that before it was released. Oversight boards typically do not micromanage product releases. ChatGPT is clearly not AGI, even by broad definitions, nor does it pose any existential threat to humanity. What is the issue?

If Toner had personal reasons that shaded her thinking about OpenAI and Sam Altman in November 2023, those were only amplified in the aftermath of the management shake-up debacle. Her podcast comments seem like a campaign to reset the narrative with her as both a hero and a victim for trying to do the right thing.

Jan Leike

Toner’s podcast appearance followed closely on Jan Leike’s OpenAI departure and his disparaging remarks about the company’s approach to AI safety. Leike wrote on X:

Yesterday was my last day as head of alignment, superalignment lead, and executive @OpenAI… Stepping away from this job has been one of the hardest things I have ever done, because we urgently need to figure out how to steer and control AI systems much smarter than us.

I joined because I thought OpenAI would be the best place in the world to do this research. However, I have been disagreeing with OpenAI leadership about the company's core priorities for quite some time, until we finally reached a breaking point.

I believe much more of our bandwidth should be spent getting ready for the next generations of models, on security, monitoring, preparedness, safety, adversarial robustness, (super)alignment, confidentiality, societal impact, and related topics.

These problems are quite hard to get right, and I am concerned we aren't on a trajectory to get there.

Over the past few months my team has been sailing against the wind. Sometimes we were struggling for compute and it was getting harder and harder to get this crucial research done.

But over the past years, safety culture and processes have taken a backseat to shiny products.

Many employees depart companies, and some make critical remarks about their former employers. However, why should we treat their opinions as facts? His comments were tailor-made for juicy headlines, yet there is no evidence beyond his own opinion that his views represent the entire story. That said, he did get largely favorable news coverage, which raised his profile as a former OpenAI insider who fought for AI safety in opposition to the goals of company management.

The difficulty here is that it is hard to know who is right. Employees who fail to persuade their managers to pursue a specific objective sometimes become enamored of their role as whistleblowers with truths that must be heard. Sometimes, those truths turn out to be false. Other times, the conflicts are wars of opinion.

Regardless, comments like those of Toner and Leike quickly received backing from the AI doomers. They already believe OpenAI is bad and largely uncontrollable. The latest comments from former insiders are seen as a verification of their existing negative sentiment. Therefore, they must be right.

Tactical Mistakes

The doomers made several tactical mistakes in pushing their AI pause and related concerns. That is, assuming their actual goal is AI safety rather than posturing and headline hunting.

Exposing self-interest - Many vocal AI doomers have a financial interest in slowing down OpenAI. Others benefit from additional media mentions.

Attacking open-source - Open-source models make it hard to control access and use. Many doomers embraced attacks on open source and lost the backing of open-source developers. However, their goal of controlling AI model access and use demands that open-source projects be curtailed. This stance is unpopular with many people who don’t believe AI should be controlled by a few large technology companies.

Making claims that frighten without offering empirical evidence - It is also problematic that AI doomers don’t have evidence for their claims of impending disaster. The public eventually wants evidence that the claims are true and that the proposed policies are practical.

These tactical mistakes have made it harder for doomers to make progress. That means they must look to negative commentary from former employees to serve as new “smoking guns” justifying action now.

The Real AI Safety

It is difficult to say whether the doomer crowd is helping or hurting the AI safety cause. AI safety has many important elements that don’t raise the specter of imminent human extinction but instead pertain to more likely near-term harms. However, it is hard for these perfectly reasonable concerns to generate significant discussion because they pale in comparison with a human extinction event.

There is a view among doomers that they alone are warning the world of AI risks that must be taken seriously, and everyone else is in it for greed. However, we have seen that many doomers also benefit from peer-group prestige, and some are in line to benefit monetarily if their desires around AI pause and regulations are followed. Earnest warnings are undermined by what too often appear to be self-serving interests or over-the-top fear-mongering. Messianic fervor doesn’t guarantee persuasion.

What about considering the likelihood of risk? What about providing empirical evidence of the risks they believe are so certain? What about scenario planning? What about making incremental progress?

Yann LeCun offers an interesting perspective that few doomers seem to grasp. What if the singularity is unlikely to be a sudden event and could instead only happen over a period of time, with various stages of development? According to LeCun, the history of technology innovation and AI innovation follows that pattern far better than a sudden transition hypothesis. If this is true, then the world should have ample time to enact safeguards as AI progresses and introduces new levels of risk.
