The Surprising Tactics of Deepfakes Targeting Contact Centers Today

Liveness detection, deepfakes, and four ways fraudsters use synthetic voices

Pindrop released Pulse, its liveness detection product for deepfake fraud attacks, to beta customers in January, and Synthedia has learned that some companies were testing it for several months in 2023. While Pindrop uses a variety of techniques to identify fraudulent phone calls into contact centers, Pulse is specifically designed to detect deepfake voice clones. According to the company:

Pindrop’s liveness detection identifies deepfake audio by detecting unique patterns, like frequency changes and spectral distortions, that differ from natural speech. Pindrop’s technology uses artificial intelligence to analyze these patterns, producing a “fakeprint”—a unit-vector low-rank mathematical representation preserving the artifacts that distinguish between machine-generated and generic human speech.

Detection without Noise

Synthedia and Voicebot have tracked the rise of deepfake technology for the past five years, and increasingly, the discussions revolve around the fraudulent use of the technology. Liveness detection techniques appear to be the most compelling solution to detect deepfakes and can be applied in real time or asynchronously to recorded media.

Pindrop reports 99% accuracy against known voice cloning models and 90% for new models, known as zero-day attacks. Notably, both of these figures are for configurations that exhibit less than 1% false positive rates.

The false positive rate is an essential metric that is often ignored when evaluating deepfake detection. Any system could achieve 100% deepfake detection by simply labeling every inbound call a deepfake. If all calls are rejected, no deepfakes will proceed, so 100% identification is guaranteed. The false positive rate, however, would also be 100%, because every legitimate call would be flagged as well.
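The trade-off can be made concrete with a small sketch. The call labels below are invented for illustration and do not come from Pindrop; the `rates` helper is a hypothetical name, not part of any product.

```python
from typing import Sequence


def rates(is_deepfake: Sequence[bool], flagged: Sequence[bool]) -> tuple[float, float]:
    """Return (true positive rate, false positive rate) for a set of calls."""
    pairs = list(zip(is_deepfake, flagged))
    true_positives = sum(1 for truth, flag in pairs if truth and flag)
    false_positives = sum(1 for truth, flag in pairs if not truth and flag)
    deepfakes = sum(1 for truth in is_deepfake if truth)
    legitimate = len(is_deepfake) - deepfakes
    return true_positives / deepfakes, false_positives / legitimate


# Hypothetical sample: 2 deepfake calls among 10 inbound calls.
calls = [True, False, False, True, False, False, False, False, False, False]

# A "detector" that labels every call a deepfake.
flag_everything = [True] * len(calls)
print(rates(calls, flag_everything))  # (1.0, 1.0)

# A detector that flags only the two real deepfakes.
flag_selectively = list(calls)
print(rates(calls, flag_selectively))  # (1.0, 0.0)
```

Both detectors catch every deepfake, but only the second one lets legitimate callers through, which is why a detection rate means little unless the false positive rate is reported alongside it.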

Calls identified as deepfakes are typically subjected to additional screening by call center agent specialists, so false positives carry significant costs. The goal is a high rate of accurate identification (i.e., true positives) with a low rate of labeling legitimate calls as deepfakes (i.e., false positives). Ideally, every legitimate call goes through without being flagged, so companies and their customers are not burdened with additional screening steps.
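A rough sketch of the screening burden shows why even a 1% false positive rate matters. The call volume and the number of deepfake calls below are assumptions chosen for illustration, not figures from Pindrop; only the 99% detection rate and the 1% false positive ceiling echo the numbers reported above. The `screening_load` function is a hypothetical helper.

```python
def screening_load(total_calls: int, deepfake_calls: int,
                   detection_rate: float, false_positive_rate: float) -> dict:
    """Estimate how many flagged calls agents would need to screen."""
    legitimate_calls = total_calls - deepfake_calls
    true_flags = deepfake_calls * detection_rate           # deepfakes correctly flagged
    false_flags = legitimate_calls * false_positive_rate   # legitimate calls wrongly flagged
    flagged = true_flags + false_flags
    return {
        "true_flags": true_flags,
        "false_flags": false_flags,
        "share_of_flags_that_are_legitimate": false_flags / flagged,
    }


# Assumed scenario: 100,000 calls, of which 100 are deepfakes.
load = screening_load(total_calls=100_000, deepfake_calls=100,
                      detection_rate=0.99, false_positive_rate=0.01)
for name, value in load.items():
    print(f"{name}: {value:.2f}")
```

Under these assumptions, roughly 999 legitimate calls are sent to extra screening alongside about 99 real deepfakes, so around nine in ten flagged calls would be false alarms. When deepfakes are rare, small changes in the false positive rate dominate the workload.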

Liveness detection parses the audio from a call and determines two things: whether the speech is live or recorded, and whether it comes from a human or a synthetic speech model. Pindrop created a set of vocal markers associated with leading speech synthesis models that enable it to detect the signature of those models. It has generalized this information so it can detect novel models as well.

While there is a lot of concern about the detection of the visuals of a deepfake video, in many cases, voice is all you need. If the voice is a deepfake, there is a high probability that all or some of the visuals are as well. It is also faster and more cost-efficient to analyze audio data than video.

Four Types of Deepfake Attacks Today

Pindrop also shared some particularly interesting information related to their product launch. According to Amit Gupta, Pindrop’s VP of Product, learnings from beta customer installations in the financial services industry include:

Deepfakes are no longer a future threat in call centers. Bad actors actively use deepfakes to break call center authentication systems and conduct fraud. Our new Pindrop Pulse liveness detection module, released to beta customers in January, has discovered the different patterns of deepfake attacks bad actors are adopting in call centers today.

Synthetic voice was not always for duping authentication: Most calls were from fraudsters using a basic synthetic voice to figure out IVR navigation and gather basic account information. Once mapped, a fraudster called in themselves to social engineer the contact center agent.

This is an interesting finding because the attack does not employ a voice clone to break into a customer account. Fraudsters are using synthetic voices, likely combined with large language models (LLMs), to probe the IVR so they can be more efficient when they call in later to use other techniques. It is a tool for scalability and efficiency in committing fraud. However, detecting these probes could give call centers an important early signal that fraudulent calls will soon follow.

Synthetic voice was used to bypass authentication in the IVR: We also observed fraudsters using machine-generated voice to bypass IVR authentication for targeted accounts, providing the right answers for the security questions and, in one case, even passing one-time passwords (OTP). Bots that successfully authenticated in the IVR identified accounts worth targeting via basic balance inquiries. Subsequent calls into these accounts were from a real human to perpetrate the fraud. IVR reconnaissance is not new, but automating this process dramatically scales the number of accounts a fraudster can target.

This is another interesting attack technique. After the voice authentication system rejects the synthetic voice, the call is routed to a live agent for verification. This then enables social engineering techniques to be used by a human fraudster with illegally obtained user information.

Synthetic voice requested profile changes with Agent: Several calls were observed using synthetic voice to request an agent to change user profile information like email or mailing address. In the world of fraud, this is usually a step before a fraudster either prepares to receive an OTP from an online transaction or requests a new card to the updated address.

This represents the type of fraudulent use that most people think about in terms of voice clone risk. A replica of a real human’s voice is used to bypass controls or appear more believable to a call center agent. This might be particularly important if the fraud ring is made up of non-native speakers of the consumer’s language. The voice clone will reduce the risk of rejection based on a lack of native fluency.

Fraudsters are training their own voicebots to mimic bank IVRs: In what sounded like a bizarre first call, a voicebot called into the bank’s IVR not to do account reconnaissance but to repeat the IVR prompts. Multiple calls came into different branches of the IVR conversation tree, and every two seconds, the bot would restate what it heard. A week later, more calls were observed doing the same, but at this time, the voice bot repeated the phrases in precisely the same voice and mannerisms of the bank’s IVR. We believe a fraudster was training a voicebot to mirror the bank’s IVR as a starting point of a smishing attack.

This attack is the call center equivalent of fake bank login landing pages. In those instances, a user receives a link to update their bank information and arrives at a fraudulent page that is a near replica of the legitimate bank login page. The familiarity of the page layout and design reduces the likelihood that the customer will think there might be a scam in operation. They then enter their login details, and the fraud perpetrators have their banking credentials.

The IVR replica is the same idea for customers using the call center as their support channel. If the consumer has even a passing familiarity with the IVR experience for their bank, the call will seem normal even if the phone number they used goes to the fraudsters. During the call, the goal will likely be to get the consumer to share personal information that may be used as verification details for their banking accounts. The fraudsters then use that information to execute fraudulent calls to contact centers, employing social engineering techniques armed with accurate information provided directly by the target consumer.

The Deepfake Arms Race

We are currently witnessing a deepfake arms race. Crime rings are employing the latest AI-infused technology to bypass security safeguards or improve their cost-efficient scalability, while companies are looking to protect their customers, employees, and corporate interests. The arrival of liveness detection and related techniques for deepfake identification is leveling the playing field or even tilting the advantage back to the companies and away from the fraudsters.

While deepfake detection is largely a novelty for companies in their call centers today, Synthedia forecasts it will become widely adopted and increasingly be viewed as standard security hygiene over the next five years.

Google Goes Big on Context with Gemini 1.5 and Dips Into Open-Source with Gemma

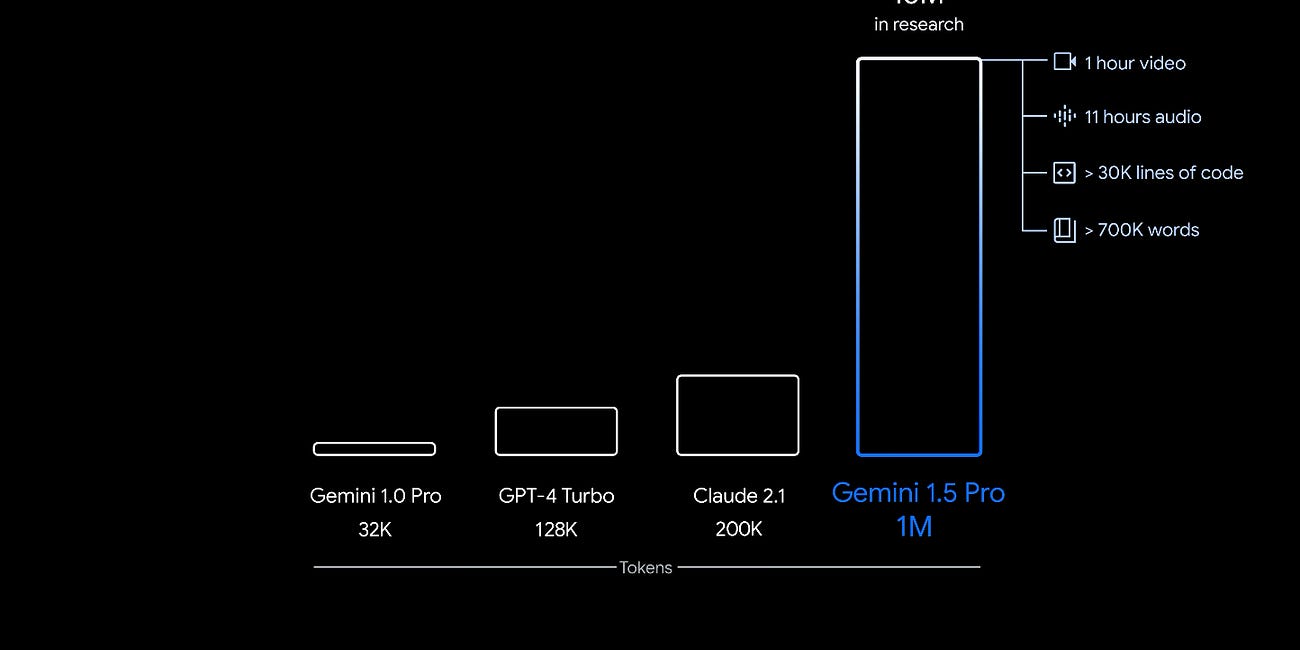

Google has been busy well beyond fixing the system prompt issues for Gemini image generation. Gemini 1.5 is not just an upgrade of the Gemini model. It’s an entirely new model architecture. It arrives with a baseline 128k context window. However, Google is stressing that it has an eye-popping one million token context window currently being evaluated by…

NVIDIA is Officially the Giant of Generative AI

Eleven months ago, Synthedia ran the article headline: NVIDIA is Becoming the Giant of Generative AI. This week, it announced $61 billion dollars in revenue for fiscal year 2024. That is more than double 2023, which was also a record year but ended with a quarter where data center revenue was down slightly from earlier quarters. This was before the Chat…