Mistral Poised to Raise New Funding at $6 Billion Valuation to Drive LLM Innovation

The model wars enter a new phase with new entrants making waves

The Wall Street Journal reported earlier today that Mistral is about to close a funding round of about $600 million at a valuation of $6 billion. Previous investors Lightspeed Venture Partners and General Catalyst are said to be leading the round, with participation from DST, according to TechCrunch.

The $6 billion figure is post-money, so it implies a pre-money valuation of about $5.4 billion, up from an anticipated $5 billion a few weeks ago. More impressively, the new valuation is nearly triple the $2 billion valuation the company secured in its December 2023 funding round of $415 million. The new funding will help Mistral stand out from key open-source competitors and may allow it to train larger models.
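For readers who want to check the arithmetic, here is a minimal sketch of the post-money to pre-money calculation. It assumes the full reported $600 million counts as new investment and uses the reported $2 billion December valuation as the comparison point; the function and variable names are illustrative only.

```python
def pre_money_valuation(post_money: float, new_investment: float) -> float:
    """Pre-money valuation is the post-money valuation minus the new cash raised."""
    return post_money - new_investment


# Reported figures, in billions of US dollars (approximate, per the coverage cited above).
post_money = 6.0
round_size = 0.6           # assumption: the entire round is new investment
december_valuation = 2.0   # valuation reported for the December 2023 round

pre_money = pre_money_valuation(post_money, round_size)
multiple = pre_money / december_valuation

print(f"Implied pre-money valuation: ${pre_money:.1f} billion")
print(f"Multiple of the December 2023 valuation: {multiple:.1f}x")
```

On these assumptions, the pre-money figure works out to roughly 2.7 times the December valuation, which is consistent with the "nearly triple" description.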

Mistralmentum

Mistral has been riding a wave of momentum since that funding round. It introduced several open-source large language models (LLMs), including Mixtral 8x7B and 8x22B. It also launched a “flagship” model called Mistral Large and landed a “multiyear partnership” with Microsoft that brought its models to Azure. AWS and Google also provide access to a couple of Mistral’s models, and some people believe that the company's backers were instrumental in scaling back some regulations in the EU AI Act.

The company is not trying to take on OpenAI and Anthropic today. Instead, Mistral is focused on the intersection of LLM performance and computational efficiency. In an interview on the Unsupervised Learning podcast in March, Mistral CEO Arthur Mensch commented:

The important thing for us is total cost of ownership so if you have more flops and the flops at the end of the day are less expensive that's great because you can train larger models.

Mistral is differentiating based on its cost-efficient models, open-source support, and EU headquarters. These factors, combined with strong performance compared to other small and open-source LLMs, have made Mistral a popular consideration among enterprise buyers. The company has landed several large customers, particularly in the EU, and many Azure users are considering Mistral, along with Microsoft’s new Phi-3 models, as options to power specialized AI agents and as alternatives to more expensive OpenAI models.

Cash Competition

The LLM performance competition is fierce among the foundation model developers. Each week, new AI models claim to beat OpenAI’s GPT-3.5 or GPT-4 model. News from Google and OpenAI next week is likely to continue the trend. We are also seeing new performance competition categories for open-source, cost-efficient, low-latency, and small models. However, another important factor is the competition for cash and access to GPUs for model training.

Another comment by Mensch from the Unsupervised Learning podcast spoke to the importance of cash on hand for competing in the LLM market:

We expect to be able to ship better models with better compute going forward. A startup can’t just buy 350k [NVIDIA] H100s. That's kind of expensive. So, there's also a question of unit economics like how do you make sure that $1 that you spend on compute in training eventually accrues to more than $1 in revenue? Being efficient with the training compute is actually quite key in having a valid business model at the end of the day.

Meta also provides open-source LLMs, and it has purchased the equivalent of more than 350,000 NVIDIA H100s. Mistral may not be able to compete with the type of war chest that Meta can bring to bear, but it still needs to fund model training before it generates revenue from inference jobs.

X.ai and Mistral have shown that LLM innovation cycles are accelerating. Both companies have delivered high-performing models in months and quickly caught up with competitors that have been in the market for far longer. Mistral has momentum. Its progress also suggests other new entrants could still make a big impact in the LLM segment as long as they can also access large quantities of VC funding.

Mistral Introduces Two New High Performance LLMs and Neither Are Open-Source

There is a lot to unpack in the new AI foundation models Mistral Large and Mistral Small. While Mistral’s earlier models debuted with open-source licenses, the new releases are…

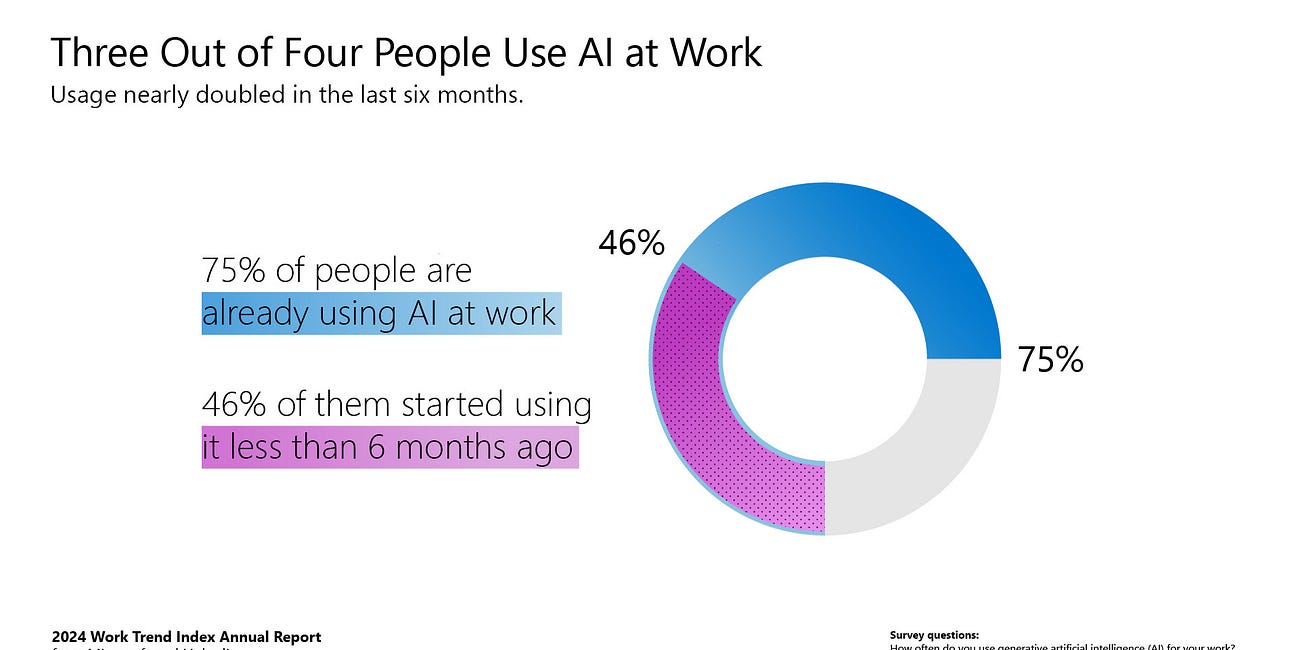

Employees Are Bringing Their Own AI to Work Regardless of Company Support

Microsoft’s 2024 Work Trend Index Report surveyed 31,000 people across 31 countries and uncovered new information related to AI adoption by business users. A key finding was that 75% of knowledge workers worldwide are already using generative AI. While some executives lament the slow uptake by workers of internally supplied generative AI solutions, they…

Meta Llama 3 Launch Part 1 - 8B and 70B Models are Here, with 400B Model Coming

Meta launched the Llama 3 large language model (LLM) today in 8B and 70B parameter sizes. Both models were trained on 15 trillion tokens of data and are released under a permissive commercial and private use license. The license is not as permissive as traditional open-source options, but its restrictions are limited.